Introduction

The digital transformation driven by the social distancing and isolation of the global COVID-19 pandemic was both extremely rapid and unexpected. From shortening the distance to our loved ones to reengineering entire business models, we're adopting and scaling new solutions that are as fast-evolving as they are complex. It will take us years to comprehend the full impact of the decisions and technological shifts we've made in such a short time.

Unfortunately, there's a darker side to this rapid innovation and growth, which is often carried out under strict deadlines and without sufficient planning or oversight: over the past year, cyberattacks have increased drastically worldwide [1]. Ransomware attacks rose 40% to 199.7 million cases globally in Q3 alone [2], and by the end of Q2, 2020 had already become the "worst year on record" for data breaches [1].

In 2020, the U.S. government suffered a series of attacks targeting several institutions, including security agencies, Congress, and the judiciary. Together, they amounted to what was arguably the "worst-ever US government cyberattack," and they also affected major tech companies.

The attacks were reported in detail [3] and drew mass-media attention [4]. A recent article by Kari Paul and Lois Beckett in The Guardian stated [5]:

“Key federal agencies, from the Department of Homeland Security to the agency that oversees America’s nuclear weapons arsenal, were reportedly targeted, as were powerful tech and security companies including Microsoft. Investigators are still trying to determine what information the hackers may have stolen, and what they could do with it.”

In November of last year, the Brazilian judicial system faced its own chapter of this story. The Superior Court of Justice, the second-highest of Brazil's courts, had over 1,000 servers taken over and its backups destroyed in a ransomware attack [6]. In the ensuing chaos, its infrastructure was down for about a week.

Adding insult to injury, shortly afterward, Brazil’s Superior Electoral Court also suffered a cyberattack that threatened and delayed recent elections [7].

In this post, we will briefly revisit key shifts in cyberattack and defense mechanisms that followed the technological evolution of the past several decades. We will then illustrate how, even after a series of innovations and enhancements in the field, simple security issues still pose major threats today and will certainly continue to do so tomorrow.

We will conclude by presenting a cautionary case study [25] of a trivial vulnerability that could have devastated the Brazilian judicial system.

The Ever-changing ROI of Cyberattacks

Different forms of intrusion technology have come into and gone out of vogue with attackers in the decades since security threats entered the public consciousness.

In the 1980s, default logins and guest accounts gave attackers carte blanche access to systems across the globe. In the 1990s and early 2000s, pre-authentication buffer overflows were plentiful and could be found everywhere.

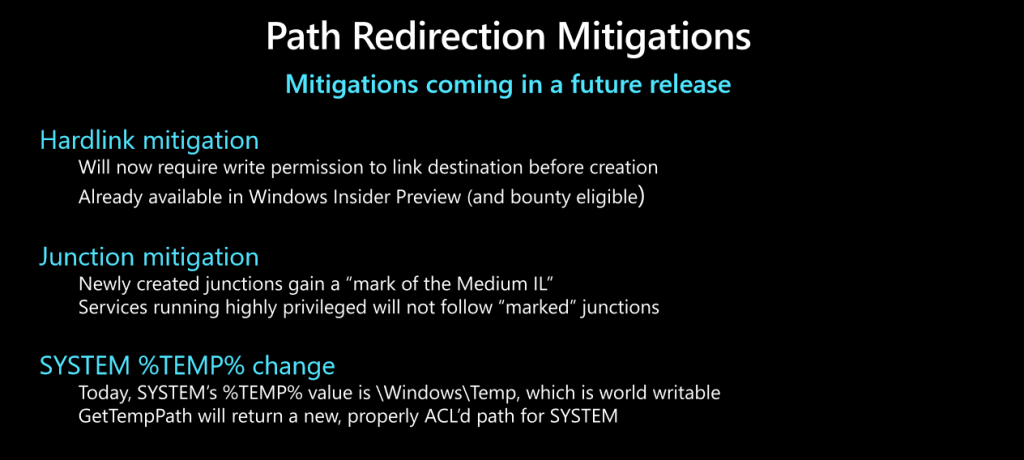

At the time, infrastructure was designed flat, with little compartmentalization in mind, leaving computers (both clients and servers) vastly exposed to the Internet. Without ASLR [8] or DEP/NX [9] protections, exploiting Internet-shattering vulnerabilities took only a few hours or days of work; access was rarely hard to obtain for those who wanted it.

In the 2000s, things started to change. The rise of the security industry, Bill Gates' famous 2002 Trustworthy Computing memo [10], and the growing full-disclosure movement, which led the charge for the appropriate handling of vulnerabilities, brought with them a full stack of security practices covering everything from software design and development to deployment and testing.

By 2010, security assessments, red-teaming exercises, and advanced protection mechanisms were standard practice across mature industries. Nevertheless, zero-day exploits were still widely used in both targeted and mass attacks.

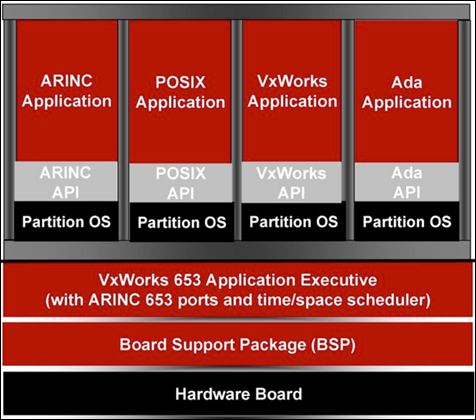

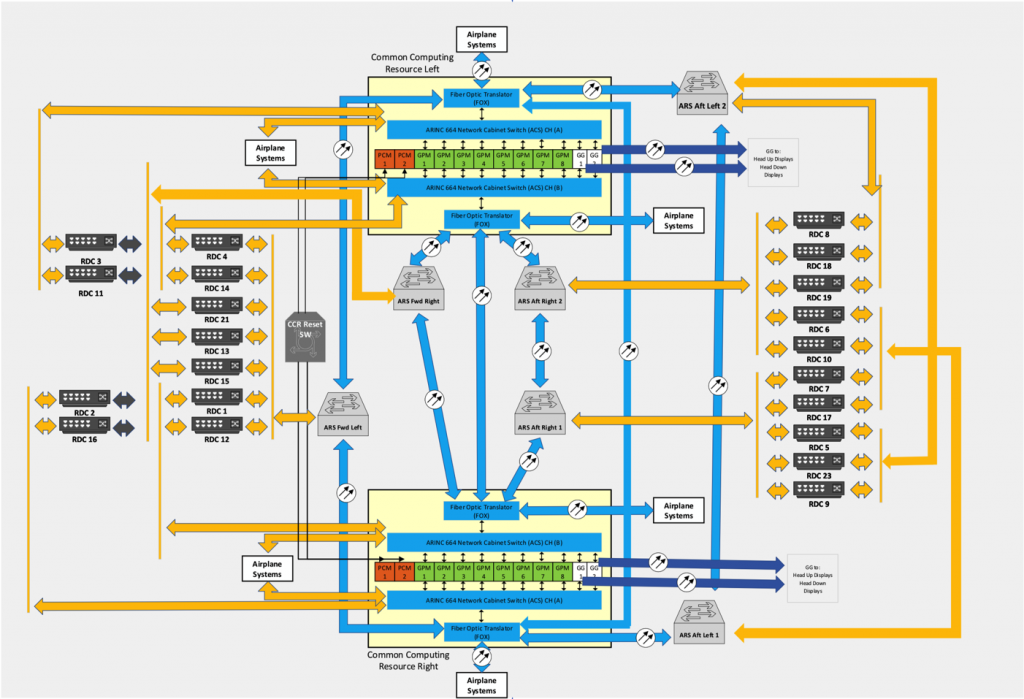

Between 2010 and 2015, non-native web applications and virtualized solutions multiplied. Over the following years, as increasing computing power allowed, hardware was built with robust chain-of-trust [11], virtualization [12], and access control capabilities. Software development adopted strongly typed languages, and verification and validation [13] became part of code generation and runtime procedures. Network technologies were designed to support a variety of segregation and orchestration solutions with fine-grained controls.

From 2015 onwards, applications were increasingly deployed on decentralized infrastructure alongside ubiquitous web applications and services, as the Cloud started to take shape. Distributed multifactor authentication and authorization models were created to support the users and components of these platforms.

These technological and cultural shifts changed the mechanics of zero-day-based cyberattacks.

Today at IOActive, we frequently find complex, critical security issues in our clients' products and systems. However, turning many of those bugs into reliable exploits can take a massive amount of effort. In most cases, an end-to-end compromise depends on chaining together multiple vulnerabilities to support a single attack.

Over the past two decades, cyberattacks have adapted and evolved alongside these security advancements in what many observers compare to a "cyber arms race," spanning both the private and government sectors.

While the major players in cyber warfare have virtually unlimited resources, for most mid-tier attackers such over-engineering simply doesn't pay for itself. With better windows of opportunity elsewhere, these attackers increasingly rely on phishing, data breaches, asset exposures, and other relatively low-tech intrusion methods.

Simple Issues, Serious Threats: Today and Tomorrow

Technologies and Practices Today

While complex software vulnerabilities remain a threat today, increasingly devastating attacks are being built on simple security issues. The reasons vary, but this often results from recently adopted technologies and practices:

- As cloud services [14] become indispensable, assets, content, and controls [15] become hard to track in an overly agile DevOps lifecycle

- Third-party chains of trust become weaker as they grow (we recently saw a critical attack on code dependencies based on dependency confusion [16])

- Weak MFA mechanisms based on telephony, SMS, and instant messengers enable identity theft and authentication bypasses

- Collaborative development via public repositories often leaks API keys and other secrets by mistake

- Interconnected platforms create ever-growing, complex supply chains that must be validated across multiple vendors
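Many of the secrets leaked to public repositories can be caught with simple pattern matching before they are pushed. The following is a minimal Python sketch, not a production scanner: the rule names, the `Finding` type, the `scan_text` helper, and the generic assignment heuristic are hypothetical illustrations. Only the first rule reflects a documented format, that of long-term AWS access key IDs (`AKIA` followed by 16 uppercase letters or digits).

```python
import re
import sys
from pathlib import Path
from typing import Iterator, NamedTuple

# Hypothetical, deliberately small rule set; real scanners ship far larger ones.
PATTERNS = {
    # Long-term AWS access key IDs: "AKIA" followed by 16 uppercase letters/digits.
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    # Heuristic (assumption): a quoted value of 8+ characters assigned to a name
    # containing api_key/apikey/secret/token/password/passwd, case-insensitively.
    "generic_secret_assignment": re.compile(
        r"""(?i)\b[\w-]*(?:api[_-]?key|secret|token|passw(?:or)?d)[\w-]*\s*[:=]\s*['"]([^'"]{8,})['"]"""
    ),
}


class Finding(NamedTuple):
    rule: str     # name of the rule that matched
    line_no: int  # 1-based line number
    line: str     # the offending line, stripped


def scan_text(text: str) -> Iterator[Finding]:
    """Yield one Finding per (line, rule) pair that matches."""
    for line_no, line in enumerate(text.splitlines(), start=1):
        for rule, pattern in PATTERNS.items():
            if pattern.search(line):
                yield Finding(rule, line_no, line.strip())


if __name__ == "__main__":
    # Scan every file given on the command line, e.g. `python scan.py settings.py .env`
    for path in sys.argv[1:]:
        text = Path(path).read_text(encoding="utf-8", errors="replace")
        for finding in scan_text(text):
            print(f"{path}:{finding.line_no}: [{finding.rule}] {finding.line}")
```

Run from a pre-commit hook, a check like this catches the most careless mistakes; the generic rule will produce false positives and miss secrets that don't follow its naming assumption, which is why dedicated tools add provider-specific rules and entropy checks.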

New Technologies and Practices Tomorrow

Tomorrow should bring interesting new shades to this watercolor landscape:

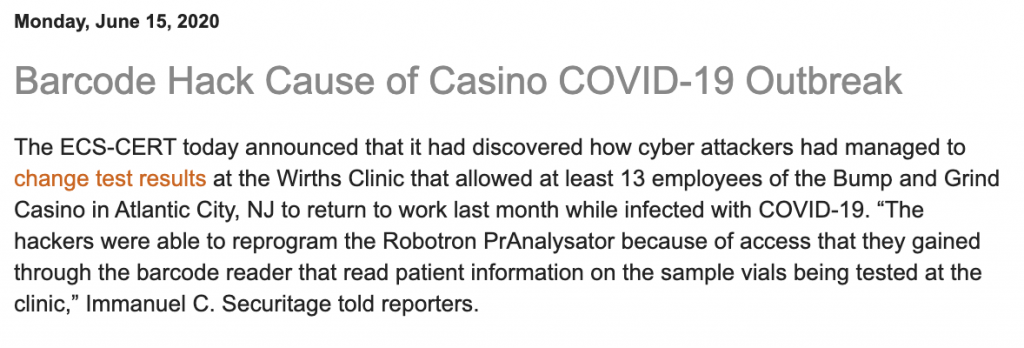

- IoT networks such as LoRaWAN, with applications in smart cities, industry, physical security, smart homes, agriculture, healthcare, mining, and construction, are susceptible to simple security issues that can impact core infrastructure [17]

- Smart city systems [18] (such as parking, lighting, traffic management, metering, and weather monitoring) also have a record of uncomplicated attack vectors [19]

- Worldwide spending on robotics reached $188 billion in 2020, and we already see on a daily basis how minor issues can have a high impact [20]

- Industrial facilities handling sensitive data and core production goods rely on a series of highly automated procedures that have a rich history of security issues, some fairly simple [21]

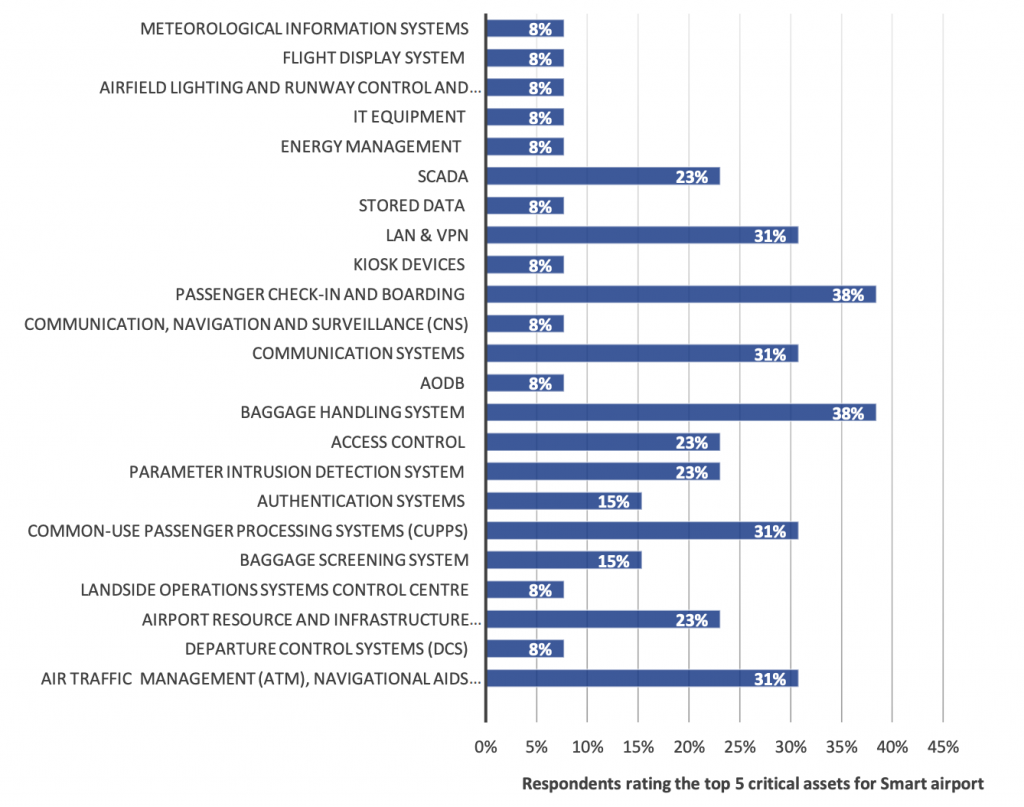

- Critical transportation and communication systems, such as connected cars, avionics, and satellites, can also fail due to trivial vulnerabilities [22, 23]

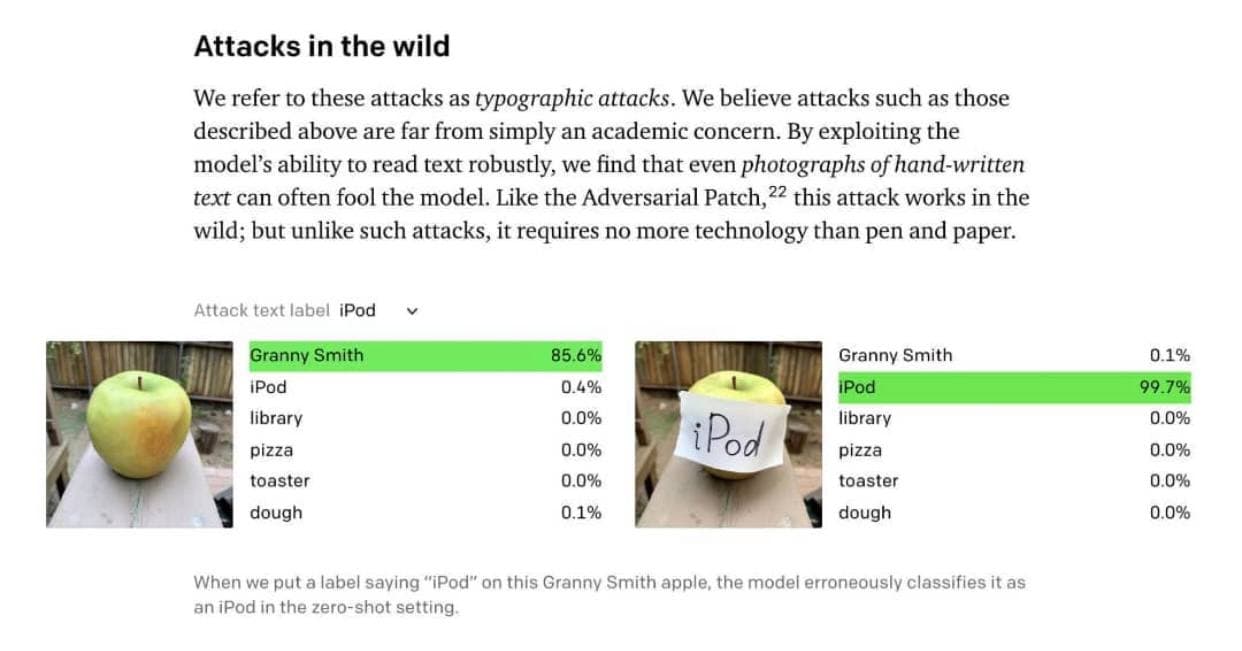

- The growing dependence on AI artefacts affects not only governments and large enterprises [24], but also a full spectrum of startups (from financial to legal tech) whose core businesses depend on "the right matches"

Old Technologies and Practices Today and Tomorrow

Another factor explains why simple security issues continue to pose major threats today and will continue to do so tomorrow. It echoes silently from a past in which security scrutiny was not standard practice.

Large governmental, medical, financial, and industrial control systems all have one thing in common: they're a large stack of interconnected components. Many of these are legacy components or rely on decades-old technologies that lack minimal security controls.

Overstretched development teams, who are often pushed to be overly agile and to build "full stack" applications, face a series of problems: poor SDLC practices, regression bugs, a lack of unit tests, and short deadlines all contribute to code with simple, easily exploitable bugs that make it into production environments. Although tracking down and sanitizing such systems can be challenging for industries and governments, a single minor mistake can cause real disasters.

Case Study

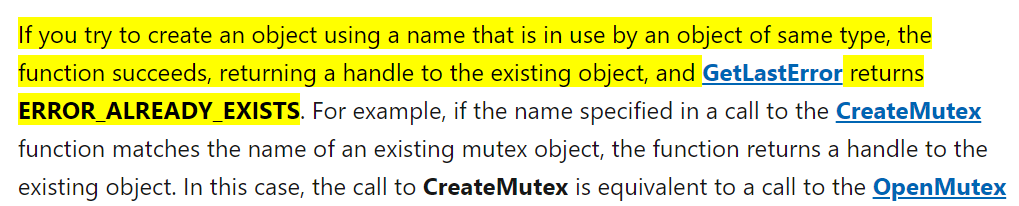

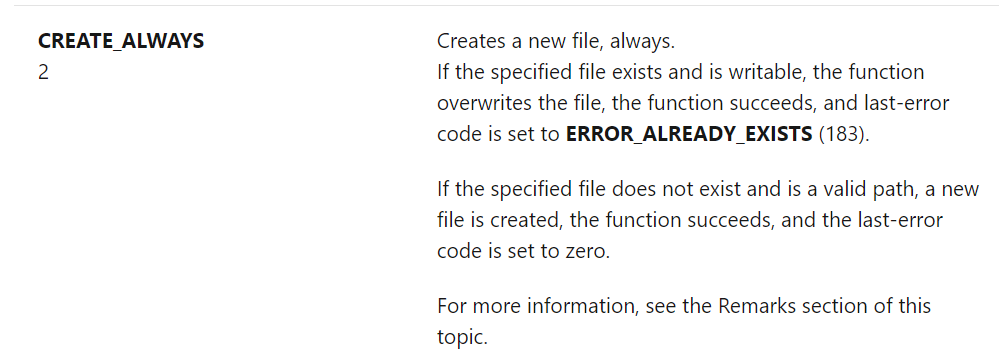

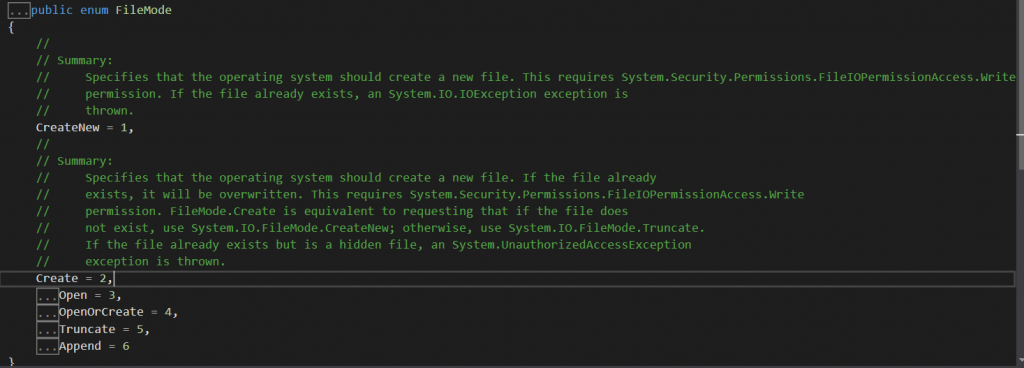

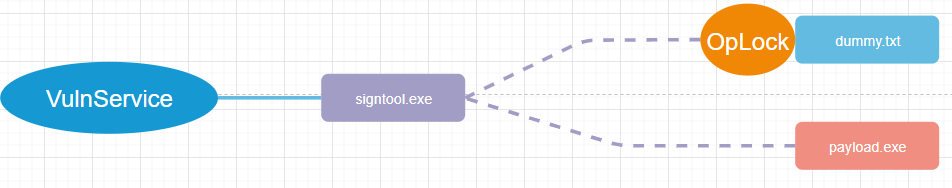

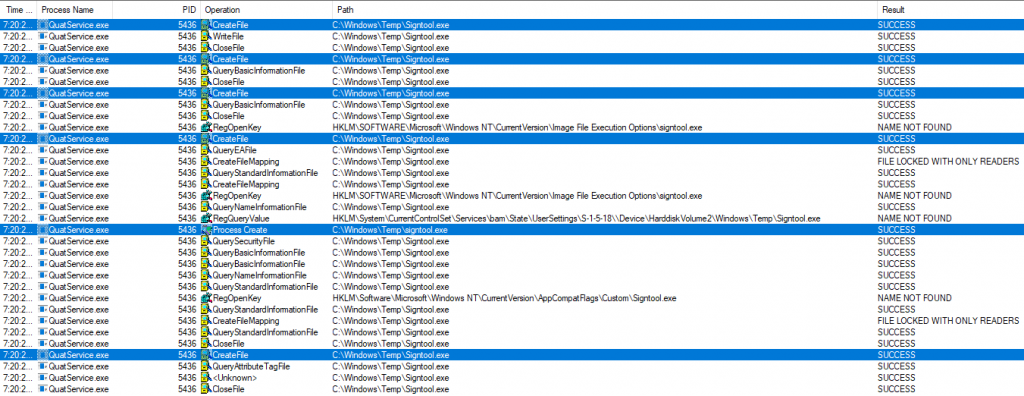

The Brazilian National Justice Council (CNJ) maintains a judicial data processing system that supports the procedural activities of magistrates, judges, lawyers, and other participants in the Brazilian legal system through a single platform, which has made it ubiquitous.

The CNJ Processo Judicial Eletrônico (CNJ PJe) system processes judicial data to meet the needs of the bodies of the Brazilian Judiciary: the Superior, Military, Labor, and Electoral courts; the courts of both the Federal Union and the individual states; and the specialized justice systems that handle ordinary law and employment tribunals at both the federal and state levels.

The CNJ PJeOffice software provides access to a user's workspace through digital certificates, with each individual granted specific permissions, access controls, and scope of access according to their role. The primary purpose of this application is to guarantee the legal authenticity and integrity of documents and processes through digital signatures.

Read the IOActive case study [25] of the CNJ PJe vulnerabilities, which details the scenario and the significant risks it posed to users of the Brazilian judicial system.

Conclusion

While information security has evolved considerably over the past several decades, producing solid engineering, process, and cultural solutions, new ways of depending on and using technology will bring issues that are not necessarily new or complex.

Despite their simplicity, attacks arising from these issues can have a devastating impact.

How people work and socialize, the way businesses are structured and operated, and even ordinary daily activities are changing, and there's no going back. The shape of the post-COVID-19 world remains to be seen.

Beyond the undeniable scars and changes that 2020 imposed on our lives, one thing is certain: information security has never been more critical.

References

[4] https://apnews.com/article/coronavirus-pandemic-courts-russia-375942a439bee4f4b25f393224d3d778

[5] https://www.theguardian.com/technology/2020/dec/18/orion-hack-solarwinds-explainer-us-government

[6] https://www.theregister.com/2020/11/06/brazil_court_ransomware/

[8] https://en.wikipedia.org/wiki/Address_space_layout_randomization

[9] https://en.wikipedia.org/wiki/Executable_space_protection

[10] https://www.wired.com/2002/01/bill-gates-trustworthy-computing/

[11] https://en.wikipedia.org/wiki/Trusted_Execution_Technology

[12] https://en.wikipedia.org/wiki/Hypervisor

[13] https://en.wikipedia.org/wiki/Software_verification_and_validation

[14] https://cloudsecurityalliance.org/blog/2020/02/18/cloud-security-challenges-in-2020/

[15] https://ioactive.com/guest-blog-docker-hub-scanner-matias-sequeira/

[16] https://medium.com/@alex.birsan/dependency-confusion-4a5d60fec610

[17] https://ioactive.com/wp-content/uploads/2025/05/LoRaWAN-Networks-Susceptible-to-Hacking-v1.pdf

[18] https://act-on.ioactive.com/acton/fs/blocks/showLandingPage/a/34793/p/p-003e/t/form/fm/0/r//s/?ao_gatedpage=p-003e&ao_gatedasset=f-2c315f60-e9a2-4042-8201-347d9766b936

[20] https://ioactive.com/wp-content/uploads/2018/05/Hacking-Robots-Before-Skynet-Paper_Final.pdf

[22] https://ioactive.com/pdfs/IOActive_SATCOM_Security_WhitePaper.pdf

[24] https://www.belfercenter.org/publication/AttackingAI

[25] https://ioactive.com/wp-content/uploads/2025/05/IOA-casestudy-CNJ-PJe.pdf

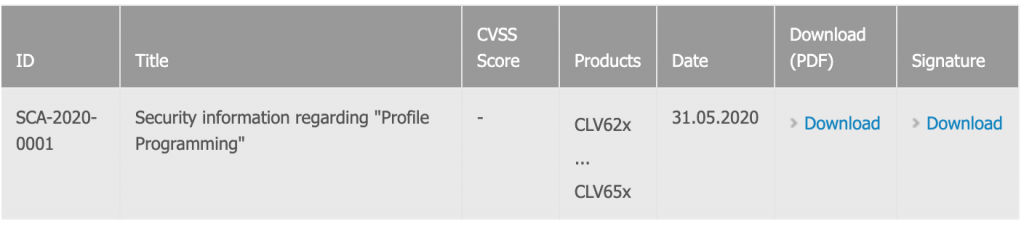

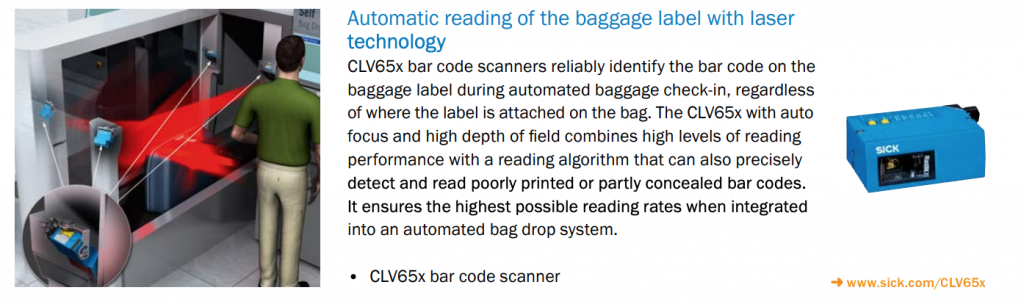
