“The great thing about a map: it gets you in and out of places in a lot of different ways.” – MacGyver

When I was young, I was a big fan of the American TV show MacGyver. Every week I tuned in to see how MacGyver would build some truly incredible things out of very basic and unexpected materials, even if some of his solutions were hard to believe. For example, in one episode MacGyver built a futuristic motorized heat-seeking gun using only a set of batteries, an electric mixer, a rubber band, a serving cart, and half a suit of armor.

Since then I have always kept the “What would MacGyver do?” spirit in my thinking. At the same time, I think I was “destined” to be an IT guy, particularly in the security field, where we don’t have quite the same variety of materials to craft our solutions.

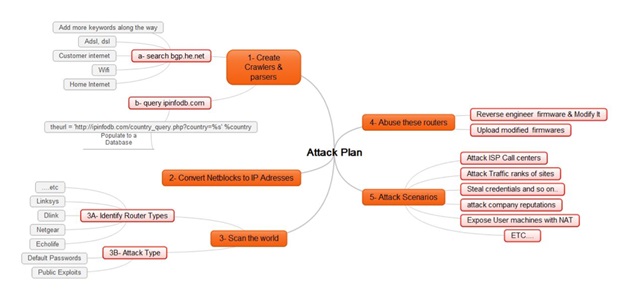

But the “What would MacGyver do?” frame of mind helped me work out a simple, MacGyver-style way to completely “own” a network environment using just a few ingredients:

- Exploiting a bad use of tools.

- A small piece of social engineering.

- Some creativity.

- A small number of manual configuration changes.

I’ll relate how I lucked into this opportunity, how easily certain circumstances can be exploited, and especially how simple it would be to use a similar technique to gain domain administrator access to a company network.

The whole situation was due to the way helpdesk support was provided at the company I was working for. For security reasons, non-administrative domain users were prevented from installing software on their desktops, so when I tried to install a small software application I received a very respectful “access denied” message. I felt a bit annoyed by this, but I still wanted my application, so I called the helpdesk and asked them to finish the installation remotely for me.

The helpdesk person was amenable, so I gave him my machine name and soon saw a pop-up window indicating that someone had connected to my machine and was interacting with my desktop.

My first impression was “Oh cool, this helpdesk is responsive and soon my software will be installed and I can finally start my project.”

But when I thought about it a bit more, I started to wonder how the helpdesk person could install my software, since he was working in my desktop session with my user privileges rather than logging me out and connecting as an administrator.

And here we arrive at our first Act.

Act #1: Bad use of tools

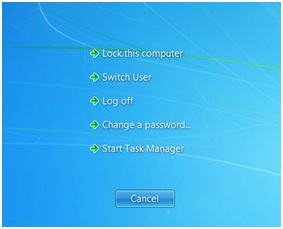

Everything became clear when the helpdesk guy sent a Ctrl+Alt+Delete key combination, which brings up the Windows security screen and its awesome Switch User option.

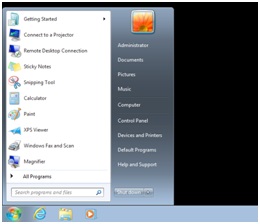

The helpdesk guy clicked the Switch User option, and here I saw some magic: the support guy logging in, right before my eyes, with the local Administrator account.

Picture this: the support guy was typing the password directly in front of my eyes. Even though I’m an IT guy, this was the first time I had ever seen a support person interacting live with the Windows login screen. I wished I could see or intercept the password, but unfortunately all I saw were ugly black dots in the password box.

At that moment I felt frustrated, because I realized how close I was to having the local administrator password. But how could I get it?

The magic became clearer when the support guy logged in as an administrator on the machine and I was able to see him interacting with the desktop. That really made my day.

And then something even more magnificent happened while I was watching: for some unknown reason, the support guy encountered a Windows session error. He had no choice but to log out, which he did, and then he logged in again with the Domain Administrator account … again right before my eyes!

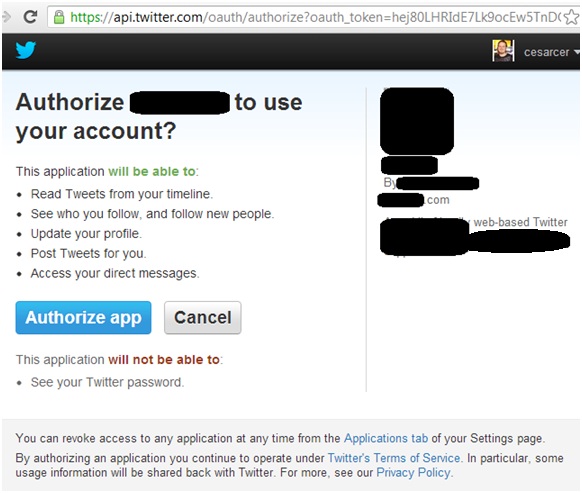

(I don’t have a domain lab set up right now, so I can’t reproduce that screen, but I’m sure you can imagine the login window: it would look just like the one above, except that it would include the domain name.)

Once he was logged in as the domain administrator, I saw another nice desktop and watched the helpdesk guy working on my machine, right in front of my eyes, as the domain admin.

This is when I had a devious idea. I moved my mouse one millimeter, and the pointer moved even while this guy was installing my software: I still had control of the session. At this point we arrive at the second Act.

Act #2: Some MacGyver magic

I asked myself, what if I did the following:

- Unplug the network cable (I could have taken control of the mouse instead, but that would have aroused suspicion).

- Stop the DameWare service that gives the support guy remote access to my machine.

- Reconnect the network cable.

- Create a new domain admin account (since the session on my computer is running as the domain administrator).

- Restart the DameWare service.

- Log out of the machine.

These six steps wouldn’t take more than two minutes, and by completing them I could have obtained domain administrator privileges for the entire company.

Let’s recap the formula for this awesome sauce:

1. Bad use of tools: It was totally wrong for the helpdesk person to open a domain admin session right under the user’s eyes and give him the opportunity to take control of the session.

2. A small piece of social engineering: Just call the support desk and ask them to install some software for you.

3. A small amount of finagling on your part: When the helpdesk person logs in as the local administrator, use the following steps to push him into logging in as a domain admin next time:

• Unplug the network cable (1 second).

• Change the local administrator password (7 seconds).

• Log out (2 seconds).

• Plug the network cable back in (1 second).

4. Another small piece of social engineering: Call the support person back and blame Microsoft Windows for a crash. Cross your fingers that, when he is unable to log in as the local admin (because you changed the password), he will log in as a domain administrator instead.

5. Some more finagling on your part: Follow the same routine as in step 3, but this time create a new domain admin account.

6. Success: Enjoy being a domain administrator for the company.

Final Act: Conclusion