I presented before the holiday break at Seattle B-Sides on a topic I called “Offensive Defense.” This blog post summarizes the talk. I feel it’s relevant to share due to the recent discussions on desktop antivirus software (AV).

What is Offensive Defense?

The basic premise of the talk is that a good defense is a “smart” layered defense. My “Offensive Defense” presentation title might be interpreted as fighting back against your adversaries much like the Sexy Defense talk my co-worker Ian Amit has been presenting.

My view of the “Offensive Defense” is about being educated on existing technology and creating a well thought-out plan and security framework for your network. The “Offensive” word in the presentation title relates to being as educated as any attacker who is going to study common security technology and know its weaknesses and boundaries. You should be as educated as that attacker to properly build a defensive and reactionary security posture. The second part of an “Offensive Defense” is security architecture. It is my opinion that too many organizations buy a product to meet the minimal regulatory requirements, to apply “band-aid” protection (thinking in a point manner instead of a systematic manner), or because the organization thinks it makes sense even though they have not actually made a plan for it. However, many larger enterprise companies have not stepped back and designed a threat model for their network or defined the critical assets they want to protect.

At the end of the day, a persistent attacker will stop at nothing to obtain access to your network and to steal critical information from it. Your overall goal in protecting your network should be to protect your critical assets. If you are targeted, you want to be able to slow down your attacker and the attack tools they are using, forcing them to customize their attack. In doing so, your goal would be to make them give away their position, resources, capabilities, and details. Ultimately, you want to be alerted before any real damage has occurred and be able to halt any attempt to exfiltrate critical data.

Conduct a Threat Assessment, Create a Threat Model, Have a Plan!

This process involves either having a security architect in-house or hiring a security consulting firm to help you design a threat model tailored to your network and assess the solutions you have put in place. Security solutions are not one-size-fits-all. Do not rely on marketing material or sales, as these typically oversell the capabilities of their own product. I think in many ways overselling a product is how we as an industry have begun to rely too heavily on security technologies, assuming they address all threats.

There are many quarterly reports and resources that technical practitioners turn to for advice, such as Gartner reports, the magic quadrant, or testing houses including AV-Comparatives, ICSA Labs, NSS Labs, EICAR or AV-Test. AV-Test, in fact, reported this year that Microsoft Security Essentials failed to recognize enough zero-day threats, with detection rates of only 69%, where the average is 89%. These are great resources to turn to once you know what technology you need, but you won’t know that unless you have first designed a plan.

Once you have implemented a plan, the next step is to actually run exercises and, if possible, simulations to assess the real-time ability of your network and the technology you have chosen to integrate. I rarely see this done, and, in my opinion, large enterprises with critical assets have no excuse not to conduct these assessments.

Perform a Self-assessment of the Technology

AV-Comparatives has published a good quote on their product page that states my point:

“If you plan to buy an Anti-Virus, please visit the vendor’s site and evaluate their software by downloading a trial version, as there are also many other features and important things for an Anti-Virus that you should evaluate by yourself. Even if quite important, the data provided in the test reports on this site are just some aspects that you should consider when buying Anti-Virus software.”

This statement supports my point that companies should familiarize themselves with a security technology to make sure it is right for their own network and security posture.

There are many security technologies that exist today that are designed to detect threats against or within your network. These include (but are not limited to):

- Firewalls

- Intrusion Prevention Systems (IPS)

- Intrusion Detection Systems (IDS)

- Host-based Intrusion Prevention Systems (HIPS)

- Desktop Antivirus

- Gateway Filtering

- Web Application Firewalls

- Cloud-Based Antivirus and Cloud-based Security Solutions

Such security technologies exist to protect against threats that include (but are not limited to):

- File-based malware (such as malicious Windows executables, Java files, image files, mobile applications, and so on)

- Network-based exploits

- Content-based exploits (such as web pages)

- Malicious email messages (such as email messages containing malicious links or phishing attacks)

- Network addresses and domains with a bad reputation

These security technologies deploy various techniques that include (but are not limited to):

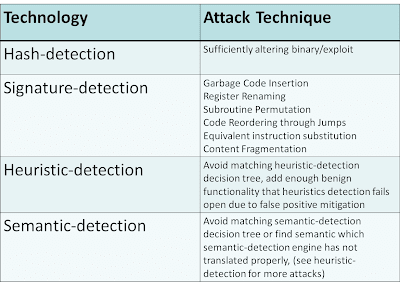

- Hash-detection

- Signature-detection

- Heuristic-detection

- Semantic-detection

- Reputation-based

- Behavioral-based
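As a rough illustration of the first two techniques in the list, the following Python sketch contrasts hash detection with byte-pattern signature detection. The sample bytes, the `EVIL_PAYLOAD_MARKER` pattern, and the function names are all made up for this example and do not reflect how any particular product works.

```python
import hashlib

# Made-up sample and signature; neither comes from a real product or feed.
KNOWN_BAD_SAMPLE = b"HEADER" + b"EVIL_PAYLOAD_MARKER" + b"TRAILER"
BAD_HASHES = {hashlib.sha256(KNOWN_BAD_SAMPLE).hexdigest()}
BAD_SIGNATURES = [b"EVIL_PAYLOAD_MARKER"]


def hash_detect(data: bytes) -> bool:
    """Flag a file only if its SHA-256 digest exactly matches a known-bad digest."""
    return hashlib.sha256(data).hexdigest() in BAD_HASHES


def signature_detect(data: bytes) -> bool:
    """Flag a file if it contains any known-bad byte pattern."""
    return any(sig in data for sig in BAD_SIGNATURES)


original = KNOWN_BAD_SAMPLE
padded = KNOWN_BAD_SAMPLE + b"\x00"                     # one extra byte changes the digest
repacked = KNOWN_BAD_SAMPLE.replace(b"EVIL", b"3V1L")   # the pattern itself is altered

for name, data in [("original", original), ("padded", padded), ("repacked", repacked)]:
    print(name, hash_detect(data), signature_detect(data))
```

In this toy setup the padded copy slips past the hash check but is still caught by the signature, while the repacked copy evades both. That is one reason these techniques tend to be layered rather than used alone.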

For the techniques that I have not listed in this table, such as reputation, refer to my CanSecWest 2008 presentation “Wreck-utation”, which explains how reputation detection can be circumvented. One major example of this is a rising trend in hosting malicious code on a compromised legitimate website or running a C&C on a compromised legitimate business server. Behavioral sandboxes can also be defeated with methods such as time-lock puzzles, anti-VM detection, or environment-aware code. In many cases, behavioral-based solutions allow the binary or exploit to pass through and run the sample in a sandbox in parallel. This results in what is referred to as a 1-victim approach, in which the user receiving the sample is infected because the malware was allowed to pass through. However, if the sandbox determines the sample to be malicious, all other users are protected. My point here is that all methods can be defeated given enough expertise, time, and resources.
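The pass-through behavior described above can be sketched as a toy model in Python. The `PassThroughGateway` class and the stand-in sandbox function are hypothetical and only simulate the ordering of events: the first recipient gets the sample, and later recipients are protected once analysis completes.

```python
import hashlib
from typing import Callable


class PassThroughGateway:
    """Toy model: deliver unknown samples immediately, analyze them afterwards."""

    def __init__(self, sandbox: Callable[[bytes], bool]) -> None:
        self.sandbox = sandbox   # returns True if the sample is judged malicious
        self.blocked = set()     # digests already judged malicious
        self.pending = {}        # digest -> sample, delivered but not yet analyzed

    def receive(self, sample: bytes) -> str:
        digest = hashlib.sha256(sample).hexdigest()
        if digest in self.blocked:
            return "blocked"
        self.pending[digest] = sample
        return "delivered"

    def run_sandbox(self) -> None:
        """Simulate the parallel sandbox finishing its analysis."""
        for digest, sample in self.pending.items():
            if self.sandbox(sample):
                self.blocked.add(digest)
        self.pending.clear()


# Stand-in sandbox verdict, invented for the example.
gateway = PassThroughGateway(sandbox=lambda s: b"malicious" in s)
sample = b"malicious dropper"

first = gateway.receive(sample)    # first recipient receives the sample
gateway.run_sandbox()              # analysis completes after delivery
second = gateway.receive(sample)   # later recipients are protected
print(first, second)
```

The trade-off is visible in the two results: no added latency for the first recipient, at the cost of that recipient being exposed.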

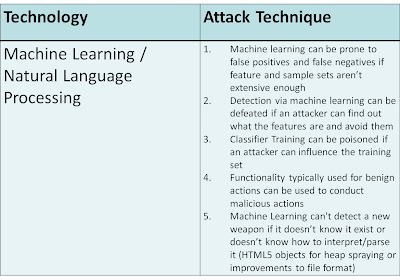

Big Data, Machine Learning, and Natural Language Processing

My presentation also mentioned something we hear a lot about today… BIG DATA. Big Data plus Machine Learning coupled with Natural Language Processing allows a clustering or classification algorithm to make predictive decisions based on statistical and mathematical models. This technology is not a replacement for what exists. Instead, it incorporates what already exists (such as hashes, signatures, heuristics, semantic detection) and adds more correlation in a scientific and statistical manner. The growing number of threats combined with a limited number of malware analysts makes this next step virtually inevitable.
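To give a feel for what “incorporating what already exists” might look like, here is a minimal Python sketch that feeds existing detection signals into a logistic scoring function. The signal names, weights, and bias are invented for illustration. In a real classifier they would be learned from labeled samples rather than hand-picked.

```python
import math

# Hypothetical weights; a real system would learn these from labeled data.
WEIGHTS = {
    "hash_match": 4.0,
    "signature_match": 3.0,
    "heuristic_hit": 1.5,
    "bad_reputation": 1.5,
}
BIAS = -2.5


def malicious_probability(signals: dict) -> float:
    """Combine boolean detection signals into a single score between 0 and 1."""
    z = BIAS + sum(weight for name, weight in WEIGHTS.items() if signals.get(name))
    return 1.0 / (1.0 + math.exp(-z))


heuristic_only = malicious_probability({"heuristic_hit": True})
reputation_only = malicious_probability({"bad_reputation": True})
both = malicious_probability({"heuristic_hit": True, "bad_reputation": True})
print(heuristic_only < 0.5, reputation_only < 0.5, both > 0.5)
```

With these made-up weights, neither weak signal crosses the 0.5 mark on its own, but the two together do. That is the kind of correlation a statistical model adds on top of the individual techniques.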

While machine learning, natural language processing, and other artificial intelligence sciences will hopefully help in supplementing the detection capabilities, keep in mind this technology is nothing new. However, the context in which it is being used is new. It has already been used in multiple existing technologies such as anti-spam engines and Google translation technology. It is only recently that it has been applied to Data Leakage Prevention (DLP), web filtering, and malware/exploit content analysis. Have no doubt, however, that like most technologies, it can still be broken.

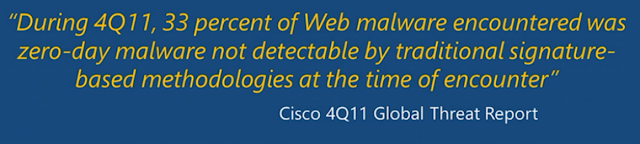

Hopefully most of you have read Imperva’s report, which found that less than 5% of antivirus solutions are able to initially detect previously non-cataloged viruses. Regardless of your opinion on Imperva’s testing methodologies, you might have also read the less-scrutinized Cisco 2011 Global Threat report that claimed 33% of web malware encountered was zero-day malware not detectable by traditional signature-based methodologies at the time of encounter. This, in my experience, has been a more widely accepted industry statistic.

What these numbers are telling us is that the technology, if looked at individually, is failing us, but what I am saying is that it is all about context. Penetrating defenses and gaining access to a non-critical machine is never desirable. However, a “smart” defense, if architected correctly, would incorporate a number of technologies, situated on your network, to protect the critical assets you care about most.

The Cloud versus the End-Point

If you attended any major conference in the last few years, you would have heard most vendors claim that “the cloud” is where protection technology is headed. Even though there is evidence to show that this might be somewhat true, the majority of protection techniques (such as hash-detection, signature-detection, reputation, and similar technologies) simply were moved from the desktop to the gateway or “cloud”. The technology and techniques, however, are the same. Of course, there are benefits to the gateway or cloud, such as consolidated updates and possibly a more responsive feedback and correlation loop from other cloud nodes or the company lab headquarters. I am of the opinion that there is nothing wrong with having antivirus software on the desktop. In fact, in my graduate studies at UCSD in Computer Science, I remember a number of discussions on the end-to-end arguments of system design, which argued that it is best to place functionality at end points and at the highest level unless doing otherwise improves performance.

The desktop/server is the end point where the greatest amount of information can be gathered. The desktop/server is where context can be added to malware, allowing you to ask questions such as:

- Was it downloaded by the user and from which site?

- Is that site historically common for that particular user to visit?

- What happened after it was downloaded?

- Did the user choose to execute the downloaded binary?

- What actions did the downloaded binary take?
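The questions above could be captured on the end point as a simple record. The Python sketch below is one hypothetical way to model it. The `DownloadContext` fields and the `context_flags` helper are assumptions made for illustration, not a description of any existing product.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class DownloadContext:
    """Hypothetical endpoint record answering the questions listed above."""
    source_site: Optional[str]     # which site the file came from, if known
    downloaded_by_user: bool       # user-initiated, or dropped by another process
    site_visits_by_user: int       # how often this user has visited the site before
    executed_by_user: bool         # did the user choose to run the binary?
    observed_actions: List[str] = field(default_factory=list)  # what the binary did


def context_flags(ctx: DownloadContext) -> List[str]:
    """Return human-readable reasons this download deserves a closer look."""
    flags = []
    if not ctx.downloaded_by_user:
        flags.append("not user-initiated")
    if ctx.site_visits_by_user == 0:
        flags.append("site is new for this user")
    if ctx.executed_by_user and ctx.observed_actions:
        flags.append("executed; actions: " + ", ".join(ctx.observed_actions))
    return flags


ctx = DownloadContext(
    source_site="example.test",
    downloaded_by_user=True,
    site_visits_by_user=0,
    executed_by_user=True,
    observed_actions=["wrote to startup folder"],
)
print(context_flags(ctx))
```

Most of these fields are difficult to populate from a gateway alone, which is the argument for keeping some analysis on the end point.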

Hashes, signatures, heuristics, semantic-detection, and reputation can all be applied at this level. However, at a gateway or in the cloud, generally only static analysis is performed due to latency and performance requirements.

This is not to say that gateway or cloud security solutions cannot observe malicious patterns at the gateway, but constraints on state and the fact that this is a network bottleneck generally make analysis at any node beyond the end point less thorough. I would argue that both desktop and cloud or gateway security solutions have their benefits though, and if used in conjunction, they add even more visibility into the network. As a result, they supplement what a desktop antivirus program cannot accomplish and add collective analysis.

Conclusion

My main point is that to have a secure network you have to think offensively by architecting security to fit your organization’s needs. Antivirus software on the desktop is not the problem. The problem is the lack of planning that goes into deployment as well as the lack of understanding of the capabilities of desktop, gateway, network, and cloud security solutions. What must change is the haste with which network teams deploy security technologies without having a plan, a threat model, or a holistic organizational security framework in place that takes into account how all security products work together to protect critical assets.

With regard to the cloud, make no mistake that most of the same security technology has simply moved from the desktop to the cloud. Because it is at the network level, there is less tolerance for latency when analyzing the file or network stream, so fewer checks are performed for the sake of user performance. People want to feel safe relying on the cloud’s security and feel assured knowing that a third party is handling all security threats, and this might be the case. However, companies need to make sure a plan is in place and that they fully understand the capabilities of the security products they have chosen, whether they be desktop, network, gateway, or cloud based.

If you found this topic interesting, Chris Valasek and I are working on a related project that Chris will be presenting at An Evening with IOActive on Thursday, January 17, 2013. We also plan to talk about this at the IOAsis at the RSA Conference. Look for details!