The poodle must be the most vicious dog, because it has killed SSL.

POODLE is the latest in a rather lengthy string of vulnerabilities in SSL (Secure Sockets Layer) and its more recent successor, TLS (Transport Layer Security). Both protocols secure data that is being sent between applications to prevent eavesdropping, tampering, and message forgery.

POODLE (Padding Oracle On Downgraded Legacy Encryption) rings the death knell for our 18-year-old friend SSL version 3.0 (SSLv3), because at this point, there is no truly safe way to continue using it.

Google announced Tuesday that its researchers had discovered POODLE. The announcement came amid rumors about the researchers’ security advisory white paper detailing the vulnerability, which had been circulating internally.

SSLv3 had survived numerous prior vulnerabilities, including SSL renegotiation, BEAST, CRIME, Lucky 13, and RC4 weakness. Finally, its time has come; SSLv3 is long overdue for deprecation.

The security industry’s initial view is that POODLE will not be as devastating as other recent vulnerabilities such as Heartbleed, a TLS bug. After all, POODLE is a client-side attack; the others were direct server-side attacks.

However, I believe POODLE will ultimately have a larger overall impact than Heartbleed. Even the hundreds of thousands of applications that use a more recent TLS protocol still support SSLv3 for backward compatibility. In addition, some applications that directly use SSLv3 may not support any version of TLS; for these, there might not be a quick fix, if there is one at all.

TLS version 1.0 (TLSv1.0) and higher versions are not affected by POODLE because these protocols are strict about the contents of the padding bytes. Therefore, TLSv1.0 is still considered safe for CBC mode ciphers. However, we shouldn’t let that lull us into complacency. Keep in mind that even clients and servers that support recent TLS versions can be forced to use SSLv3 when an attacker downgrades the transmission channel, because the older protocol is often still supported. This ‘downgrade dance’ can be triggered through a variety of methods. What’s important to know is that it can happen.
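The difference in padding strictness can be illustrated with a minimal sketch. The function names below are hypothetical and the checks are simplified (no MAC verification, no constant-time handling); they only contrast an SSLv3-style check, which looks at the final length byte alone, with a TLS-style check, which requires every padding byte to equal that length.

```python
def sslv3_padding_ok(plaintext: bytes, block_size: int = 8) -> bool:
    """SSLv3-style check (simplified): only the final length byte is examined."""
    if not plaintext:
        return False
    pad_len = plaintext[-1]
    # The padding bytes themselves may hold any value, so they are never inspected.
    return pad_len < block_size and pad_len + 1 <= len(plaintext)


def tls_padding_ok(plaintext: bytes) -> bool:
    """TLS-style check (simplified): every padding byte must equal the length byte."""
    if not plaintext:
        return False
    pad_len = plaintext[-1]
    if pad_len + 1 > len(plaintext):
        return False
    return all(b == pad_len for b in plaintext[-(pad_len + 1):])


# Five data bytes, two arbitrary padding bytes, then the length byte (2).
tampered = b"hello" + b"\x13\x37" + b"\x02"
# Same data with padding bytes that all equal the length byte.
well_formed = b"hello" + b"\x02\x02\x02"

print(sslv3_padding_ok(tampered), tls_padding_ok(tampered))        # True False
print(sslv3_padding_ok(well_formed), tls_padding_ok(well_formed))  # True True
```

Because the SSLv3-style check accepts arbitrary padding contents, a modified record can still pass it, which is the gap a padding oracle attack relies on.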

There are a few ways to prevent POODLE from affecting your communication:

Plan A: Disable SSLv3 for all applications. This is the most effective mitigation for both clients and servers.

Plan B: As an alternative, you could disable all CBC ciphers for SSLv3. This will protect you from POODLE, but leaves RC4 as the only remaining “strong” cryptographic cipher, which, as mentioned above, has had weaknesses of its own.

Plan C: If an application must continue supporting SSLv3 in order to work correctly, implement the TLS_FALLBACK_SCSV mechanism. Some vendors are taking this approach for now, but it is a coping technique, not a solution. It addresses problems with retried connections and prevents reversion to earlier protocols, as described in the document TLS Fallback Signaling Cipher Suite Value for Preventing Protocol Downgrade Attacks (Draft Released for Comments).
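To make Plan C more concrete, here is a rough sketch of the server-side decision that TLS_FALLBACK_SCSV enables. The function name and the tuple representation of protocol versions are assumptions made for illustration; a real TLS stack performs this check inside its handshake code.

```python
TLS_FALLBACK_SCSV = 0x5600          # signaling cipher-suite value {0x56, 0x00}
INAPPROPRIATE_FALLBACK_ALERT = 86   # alert sent when the server refuses the hello

# Protocol versions as (major, minor) pairs, as they appear on the wire.
SSL3 = (3, 0)
TLS1_0 = (3, 1)
TLS1_2 = (3, 3)


def server_rejects_hello(client_version, cipher_suites, server_max_version):
    """Return True if the server should abort with inappropriate_fallback.

    A client that retries with a lower version adds TLS_FALLBACK_SCSV to its
    cipher-suite list. If the server supports something newer than what the
    client offered, the retry was unnecessary and may have been forced by an
    attacker, so the connection is refused.
    """
    signals_fallback = TLS_FALLBACK_SCSV in cipher_suites
    return signals_fallback and client_version < server_max_version


# A downgraded retry offering SSLv3 to a server that supports TLSv1.2.
print(server_rejects_hello(SSL3, [0x002F, TLS_FALLBACK_SCSV], TLS1_2))
```

Note that the check only helps when both the client and the server implement it, which is why it is a coping technique rather than a fix for SSLv3 itself.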

How to Implement Plan A

With no solution that would allow truly safe continued use of SSLv3, you should implement Plan A: Disable SSLv3 for both server and client applications wherever possible, as described below.

Disable SSLv3 for Browsers

| Browser | Disabling instructions |
| --- | --- |
| Chrome | Add the command-line flag --ssl-version-min=tls1 so the browser uses TLSv1.0 or higher. |
| Internet Explorer | Go to IE’s Tools menu -> Internet Options -> Advanced tab. Near the bottom of the tab, clear the Use SSL 3.0 checkbox. |
| Firefox | Type about:config in the address bar and set security.tls.version.min to 1. |
| Adium/Pidgin | Clear the Force Old-Style SSL checkbox. |

Note: Some browser vendors are already issuing patches and others are offering diagnostic tools to assess connectivity.

If your device has multiple users, secure the browsers for every user. Your mobile browsers are vulnerable as well.

Disable SSLv3 on Server Software

| Server Software | Disabling instructions |
| --- | --- |
| Apache | Add -SSLv3 to the SSLProtocol line. |
| IIS 7 | Because this is an involved process that requires registry tweaking and a reboot, please refer to Microsoft’s instructions: https://support.microsoft.com/kb/187498/en-us |
| Postfix | In main.cf, adopt the setting smtpd_tls_mandatory_protocols=!SSLv3 and ensure that !SSLv2 is present too. |
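The same idea applies to applications you write yourself. As a minimal sketch, assuming a Python client built on the standard ssl module, the context can be given a protocol floor so that SSLv3 is never negotiated. The helper name is hypothetical, and the TLSv1.2 floor is an assumption that is stricter than the TLSv1.0 minimum discussed here.

```python
import ssl


def make_client_context() -> ssl.SSLContext:
    """Build a client-side context that refuses anything older than TLSv1.2."""
    ctx = ssl.create_default_context()
    # Setting a floor rules out SSLv3 (and TLSv1.0/1.1) during the handshake.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


ctx = make_client_context()
# SSLv3 sorts below the configured floor, so it cannot be selected.
print(ssl.TLSVersion.SSLv3 < ctx.minimum_version)
```

A context built this way can be passed to the usual socket-wrapping or HTTP client calls; a server that only speaks SSLv3 will then fail the handshake instead of silently downgrading.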

POODLE is a high risk for payment gateways and other applications that might expose credit card data and must be fixed in 30 days, according to Payment Card Industry standards. The clock is ticking.

Conclusion

Ideally, the security industry should move to recent versions of the TLS protocol. Each iteration has brought improvements, yet adoption has been slow. For instance, TLS version 1.2, introduced in mid-2008, was scarcely implemented until recently and many services that support TLSv1.2 are still required to support the older TLSv1.0, because the clients don’t yet support the newer protocol version.

A draft of TLS version 1.3 released in July 2014 removes support for features that were discovered to make encrypted data vulnerable. Our world will be much safer if we quickly embrace it.

Robert Zigweid

To know if your system is compromised, you need to find everything that could run or otherwise change state on your system and verify its integrity (that is, check that the state is what you expect it to be).

“Finding everything” is a bold statement, particularly in the realm of computer security, rootkits, and advanced threats. Is it possible to find everything? Sadly, the short answer is no, it’s not. Strangely, the long answer is yes, it is.

By defining the execution environment at any point in time, predominantly through the use of hardware-based hypervisor or virtualization facilities, you can verify the integrity of that specific environment using cryptographically secure hashing.
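As a simplified sketch of the hashing step only, the snippet below compares SHA-256 digests of captured memory pages against a known-good baseline. The function names and the synthetic page contents are assumptions for illustration; capturing the pages through a hypervisor is outside the scope of the example.

```python
import hashlib


def page_digest(page: bytes) -> str:
    """Return the SHA-256 digest of one memory page as a hex string."""
    return hashlib.sha256(page).hexdigest()


def find_modified_pages(pages: dict, baseline: dict) -> list:
    """Return addresses whose contents do not match the known-good digest.

    Pages with no baseline entry are reported too, since their integrity
    cannot be confirmed.
    """
    return sorted(
        addr for addr, data in pages.items()
        if baseline.get(addr) != page_digest(data)
    )


# Synthetic 4 KiB pages standing in for a captured execution environment.
clean = {0x1000: b"\x90" * 4096, 0x2000: b"\xcc" * 4096}
baseline = {addr: page_digest(data) for addr, data in clean.items()}

# Flip one byte in the second page to simulate tampering.
captured = dict(clean)
captured[0x2000] = b"\xcc" * 4095 + b"\x00"

print([hex(addr) for addr in find_modified_pages(captured, baseline)])
```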

“DKOM is one of the methods commonly used and implemented by Rootkits, in order to remain undetected, since this the main purpose of a rootkit.” – Harry Miller

Even if an attacker significantly modifies the system and attempts to hide from standard logical object scanning, there is no way to evade page-table detection without significantly patching the OS fault handler. A major benefit of a DKOM (Direct Kernel Object Manipulation) rootkit is that it avoids code patches; that level of modification is easily detected by integrity checks and runs counter to the goal of DKOM. DKOM is a codeless rootkit technique: it runs code without patching the OS to hide itself, and it patches only data pointers.

IOActive released several versions of this process detection technique. We also built it into our memory integrity checking tools, BlockWatch™ and The Memory Cruncher™.

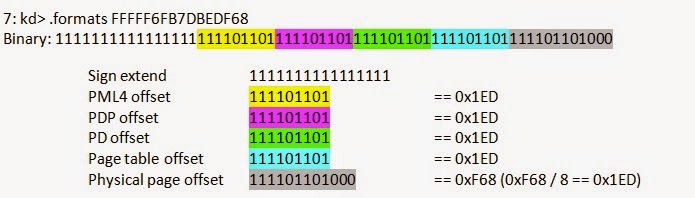

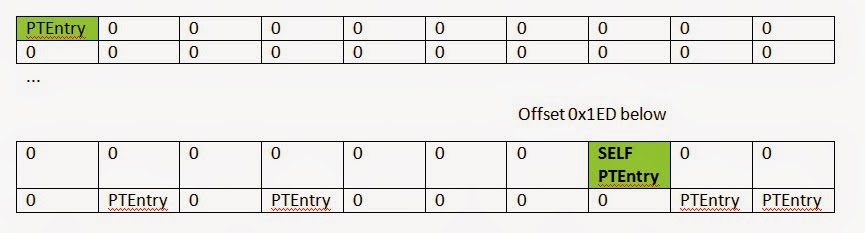

The magic of this technique comes from the propensity of all operating systems (at least Windows, Linux, and BSD) to map their page tables into virtual memory. That way they can use virtual addresses to edit PTEs instead of physical memory addresses.
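To show what editing PTEs through virtual addresses can look like, here is a small sketch of the address arithmetic for one specific layout. It assumes the fixed page-table base historically used by 64-bit Windows (0xFFFFF68000000000), 4 KiB pages, and 8-byte PTEs; newer Windows builds and other operating systems use different layouts, so the constants are illustrative only.

```python
PTE_BASE = 0xFFFFF68000000000   # assumed: historical fixed base on 64-bit Windows
PTE_INDEX_MASK = 0x7FFFFFFFF8   # keeps 36 page-number bits, scaled to 8-byte entries


def pte_virtual_address(va: int) -> int:
    """Return the virtual address of the PTE that maps the given address.

    Shifting right by 12 yields the page number and multiplying by 8 yields
    the byte offset of its entry; the combined shift by 9 plus the mask does
    both in one step.
    """
    return PTE_BASE + ((va >> 9) & PTE_INDEX_MASK)


print(hex(pte_virtual_address(0x0000)))    # first page maps to the first PTE
print(hex(pte_virtual_address(0x1000)))    # next page maps to the next 8-byte PTE
print(hex(pte_virtual_address(PTE_BASE)))  # the PTEs that describe the page tables themselves
```

Applying the function to PTE_BASE itself gives the region where page-directory entries appear under this layout, which is the self-referencing property that makes walking the tables from virtual memory possible.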

Shane Macaulay

This is a short blog post, because I’ve talked about this topic in the past. I want to let people know that I have the honor of presenting at DEF CON on Friday, August 8, 2014, at 1:00 PM. My presentation is entitled “Hacking US (and UK, Australia, France, Etc.) Traffic Control Systems”. I hope to see you all there. I’m sure you will like the presentation.

I am frustrated with the lack of cooperation from Sensys Networks (the vendor of the vulnerable devices), but I realize that I should be thankful. This has prompted me to further my research and try different things, like performing passive onsite tests on real deployments in cities like Seattle, New York, and Washington DC. I’m not so sure these cities are equally thankful, since they have to deal with thousands of installed vulnerable devices, which are currently being used for critical traffic control.

The latest Sensys Networks numbers indicate that approximately 200,000 sensor devices are deployed worldwide. See http://www.trafficsystemsinc.com/newsletter/spring2014.html. Based on a unit cost of approximately $500, approximately $100,000,000 of vulnerable equipment is buried in roads around the world that anyone can hack. I’m also concerned about how much it will cost taxpayers to fix and replace the equipment.

One way I confirmed that Sensys Networks devices were vulnerable was by traveling to Washington DC to observe a large deployment that I got to know.

When I exited the train station, the fun began.

As you can see, there are 50,000+ devices out there that could be hacked and cause traffic messes in many important cities.

| Electronic signs |

| Site | Accounts | % |
| --- | --- | --- |
| Facebook | 308 | 17.26457 |
| Google | 229 | 12.83632 |
| Orbitz | 182 | 10.20179 |
| WashingtonPost | 149 | 8.352018 |
| Twitter | 108 | 6.053812 |
| Plaxo | 93 | 5.213004 |
| LinkedIn | 65 | 3.643498 |
| Garmin | 45 | 2.522422 |
| MySpace | 44 | 2.466368 |
| Dropbox | 44 | 2.466368 |
| NYTimes | 36 | 2.017937 |
| NikePlus | 23 | 1.289238 |
| Skype | 16 | 0.896861 |
| Hulu | 13 | 0.7287 |
| Economist | 11 | 0.616592 |
| Sony Entertainment Network | 9 | 0.504484 |
| Ask | 3 | 0.168161 |
| Gartner | 3 | 0.168161 |
| Travelers | 2 | 0.112108 |
| Naymz | 2 | 0.112108 |
| Posterous | 1 | 0.056054 |

| Name | Email | Found account on site |
| --- | --- | --- |
| Robert Abrams | robert.abrams@nullus.army.mil | orbitz.com, washingtonpost.com |
| James Boozer | james.boozer@nullus.army.mil | orbitz.com, facebook.com |
| Vincent Brooks | vincent.brooks@nullus.army.mil | facebook.com, linkedin.com |
| James Eggleton | james.eggleton@nullus.army.mil | plaxo.com |
| Reuben Jones | reuben.jones@nullus.army.mil | plaxo.com, washingtonpost.com |
| David Quantock | david-quantock@nullus.army.mil | twitter.com, orbitz.com, plaxo.com |
| Dave Halverson | dave.halverson@nullconus.army.mil | linkedin.com |
| Jo Bourque | jo.bourque@nullus.army.mil | washingtonpost.com |
| Kev Leonard | kev-leonard@nullus.army.mil | facebook.com |
| James Rogers | james.rogers@nullus.army.mil | plaxo.com |
| William Crosby | william.crosby@nullus.army.mil | linkedin.com |
| Anthony Cucolo | anthony.cucolo@nullus.army.mil | twitter.com, orbitz.com, skype.com, plaxo.com, washingtonpost.com, linkedin.com |
| Genaro Dellarocco | genaro.dellarocco@nullmsl.army.mil | linkedin.com |
| Stephen Lanza | stephen.lanza@nullus.army.mil | skype.com, plaxo.com, nytimes.com |
| Kurt Stein | kurt-stein@nullus.army.mil | orbitz.com, skype.com |

The idea of this post is to raise awareness. I want to show how vulnerable some industrial, oil, and gas installations currently are and how easy it is to attack them. Another goal is to pressure vendors to produce more secure devices and to speed up the patching process once vulnerabilities are reported.

| Version numbers | Hosts Found | Vulnerable (SSH Key) |
| --- | --- | --- |
| 1.8-5a | 1 | Yes |
| 1.8-6 | 2 | Yes |
| 2.0-0 | 110 | Yes |
| 2.0-0_a01 | 1 | Yes |
| 2.0-1 | 68 | Yes |
| 2.0-2 (patched) | 50 | No |
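The per-version counts can be totalled to see how much of the exposed population is still unpatched. The short sketch below simply sums the figures listed for each version; the variable names are hypothetical.

```python
# (version, hosts found, vulnerable to the SSH key issue) as listed per version.
hosts_by_version = [
    ("1.8-5a", 1, True),
    ("1.8-6", 2, True),
    ("2.0-0", 110, True),
    ("2.0-0_a01", 1, True),
    ("2.0-1", 68, True),
    ("2.0-2 (patched)", 50, False),
]

vulnerable = sum(count for _, count, is_vuln in hosts_by_version if is_vuln)
total = sum(count for _, count, _ in hosts_by_version)
share = vulnerable / total

print(f"{vulnerable} of {total} hosts vulnerable ({share:.1%})")
# prints "182 of 232 hosts vulnerable (78.4%)"
```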
