A Bigger Stick To Reduce Data Breaches

On average I receive a postal letter from a bank or retailer every two months telling me that I’ve become the unfortunate victim of a data theft or that my credit card is being re-issued to protect against future fraud. When I quiz my friends and colleagues on the topic, it would seem that they too suffer the same fate on a recurring schedule. It may not be that surprising to some folks. 2013 saw over 822 million private records exposed according to the folks over at DatalossDB – and that’s just the ones that were disclosed publicly.

It’s clear to me that something is broken and it’s only getting worse. When it comes to the collection of personal data, too many organizations have a finger in the pie and are ill-equipped (or ill-prepared) to protect it. In fact, I’d question why they’re collecting it in the first place. All too often these organizations – of which I’m supposedly a customer – are collecting personal data about “my experience” doing business with them and are hoping to figure out how to use it to their profit (effectively turning me into a product). If these corporations were some bloke visiting a psychologist, they’d be diagnosed with a hoarding disorder. For example, consider what criteria the DSM-5 diagnostic manual uses to identify the disorder:

- Persistent difficulty discarding or parting with possessions, regardless of the value others may attribute to these possessions.

- This difficulty is due to strong urges to save items and/or distress associated with discarding.

- The symptoms result in the accumulation of a large number of possessions that fill up and clutter active living areas of the home or workplace to the extent that their intended use is no longer possible.

- The symptoms cause clinically significant distress or impairment in social, occupational, or other important areas of functioning.

- The hoarding symptoms are not due to a general medical condition.

- The hoarding symptoms are not restricted to the symptoms of another mental disorder.

Whether or not the organizations hoarding personal data know how to profit from it, it’s clear that even the biggest of them are increasingly inept at protecting it. The criminals that are pilfering the data certainly know what they’re doing. The gray market for identity laundering has expanded phenomenally since I talked about it at Black Hat in 2010.

We can moan all we like about the state of the situation now, but we’ll be crying in the not too distant future when statistically we progress from being a victim of data loss to being a victim of (unrecoverable) fraud.

The way I see it, there are two core components to dealing with the spiraling problem of data breaches and the disclosure of personal information. We must deal with the “what data are you collecting and why?” questions, and incentivize corporations to take much more care protecting the personal data they’ve been entrusted with.

I feel that the data hoarding problem can be dealt with fairly easily. At the end of the day it’s about transparency and the ability to “opt out”. If I were to choose a role model for making a sizable fraction of this threat go away, I’d look to the basic components of the UK’s Data Protection Act as the cornerstone of a solution – especially here in the US. I believe the key components of personal data collection should encompass the following:

- Any organization that wants to collect personal data must have a clearly identified “Data Protection Officer” who not only is a member of the executive board, but is personally responsible for any legal consequences of personal data abuse or data breaches.

- Before data can be collected, the details of the data sought for collection, how that data is to be used, how long it would be retained, and who it is going to be used by, must be submitted for review to a government or legal authority. I.e. some third-party entity capable of saying this is acceptable use – a bit like the ethics boards used for medical research etc.

- The specifics of what data a corporation collects and what they use that data for must be publicly visible. Something similar to the nutrition labels found on packaged foods would likely be appropriate – so the end consumer can rapidly discern how their private data is being used.

- Any data being acquired must include a date of when it will be automatically deleted and removed.

- At any time any person can request a copy of any and all personal data held by a company about themselves.

- At any time any person can request the immediate deletion and removal of all data held by a company about themselves.
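As a rough illustration of the last three points, the sketch below attaches a deletion date to each collected item and supports copy and deletion requests. It is only a sketch under assumptions: the `PersonalDataRecord` class, its field names, and the three helper functions are hypothetical and do not describe any real system or legal requirement.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class PersonalDataRecord:
    """One collected item plus the disclosures the list above asks for."""
    subject_id: str      # hypothetical identifier for the person
    field_name: str      # what was collected, e.g. "postal_address"
    purpose: str         # declared use of the data
    collected_on: date
    retention_days: int  # declared retention period

    @property
    def delete_on(self) -> date:
        # The automatic deletion date is fixed at collection time.
        return self.collected_on + timedelta(days=self.retention_days)


def purge_expired(records, today):
    """Keep only records whose deletion date has not yet arrived."""
    return [r for r in records if r.delete_on > today]


def export_for(records, subject_id):
    """Copy request: everything held about one person."""
    return [r for r in records if r.subject_id == subject_id]


def erase_for(records, subject_id):
    """Deletion request: drop everything held about one person."""
    return [r for r in records if r.subject_id != subject_id]
```

The point of the design is that the deletion date is derived from data captured at collection time, so a scheduled call to `purge_expired` needs no later decision from the organization.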

If such governance existed for the collection and use of personal data, then the remaining big item is enforcement. You’d hope that the morality and ethics of corporations would be enough to ensure they protected the data entrusted to them with the vigor necessary to fight off the vast majority of hackers and organized crime, but this is the real world. Apparently the “big stick” approach needs to be reinforced.

A few months ago I delved into how the fines being levied against organizations that had been remiss in doing all they could to protect their customers’ personal data should be bigger and divvied up. Essentially I’d argue that half of the fine should be pumped back into the breached organization and used for increasing their security posture.

Looking at the fines being imposed upon the larger organizations (that could have easily invested more in protecting their customers’ data prior to their breaches), the amounts are laughable. No noticeable financial pain occurs, so why should we be surprised if (and when) it happens again? I’ve become a firm believer that the fines businesses incur should be based upon a percentage of valuation. Why should a twenty-billion-dollar business face the same fine for losing 200,000,000 personal records as a ten-million-dollar business does for losing 50,000 personal records? If the fine was something like two percent of valuation, I can tell you that the leadership of both companies would focus more firmly on the task of keeping your data and mine much safer than they do today.
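To put numbers on that, here is a small worked calculation using the two hypothetical companies above. The function name `valuation_fine` and the per-record figure are my own additions for illustration; only the valuations, record counts, and the two-percent rate come from the paragraph.

```python
def valuation_fine(valuation_usd: int, percent: int = 2) -> float:
    """Fine as a fixed percentage of company valuation."""
    return valuation_usd * percent / 100


# The two hypothetical companies from the paragraph above.
companies = {
    "large": {"valuation_usd": 20_000_000_000, "records_lost": 200_000_000},
    "small": {"valuation_usd": 10_000_000, "records_lost": 50_000},
}

for name, c in companies.items():
    fine = valuation_fine(c["valuation_usd"])
    per_record = fine / c["records_lost"]
    print(f"{name}: fine ${fine:,.0f}, ${per_record:.2f} per lost record")
```

Under these assumptions the larger business would face a $400 million fine and the smaller one $200,000, so the penalty scales with the ability to pay rather than being a flat amount.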

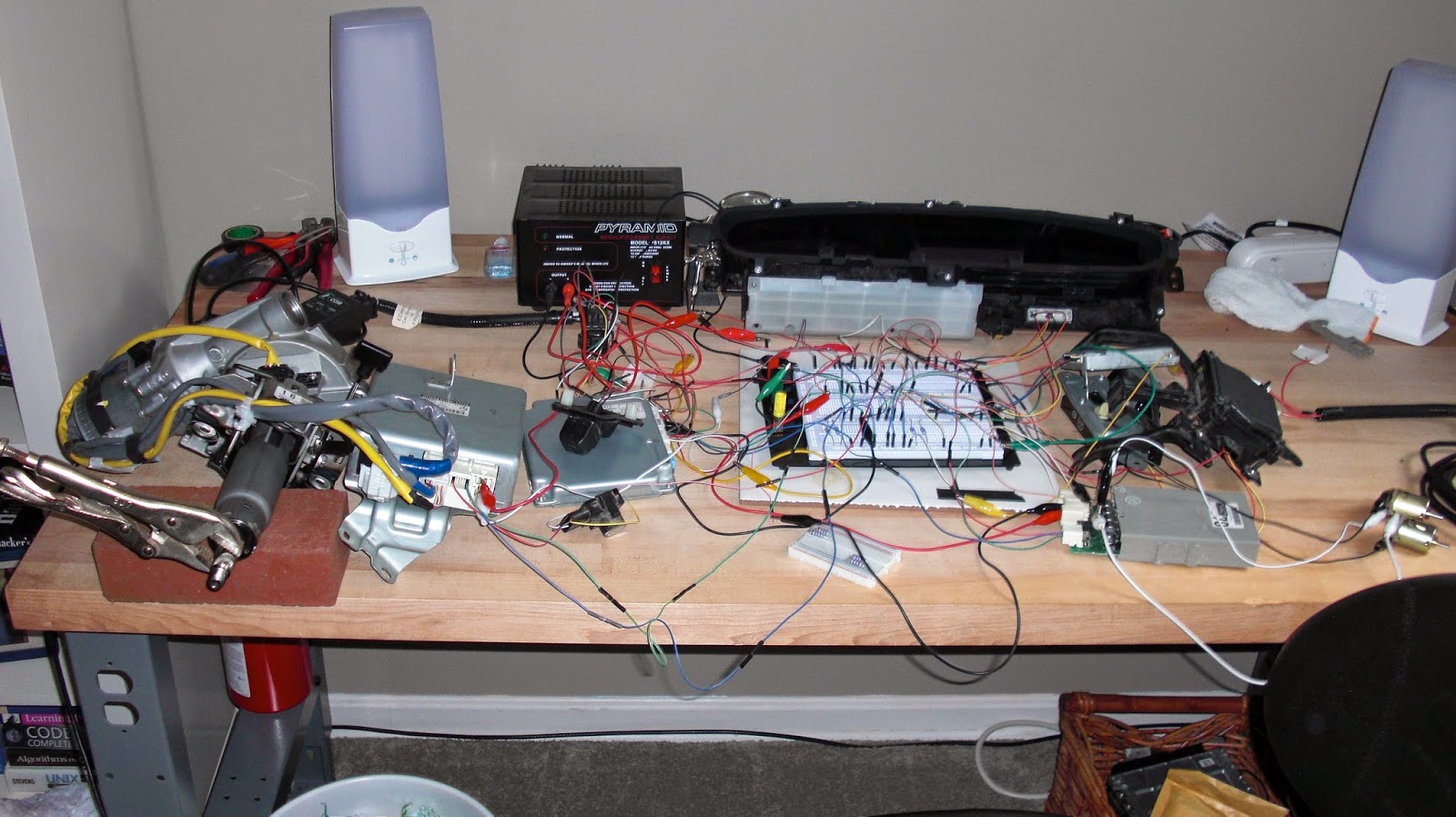

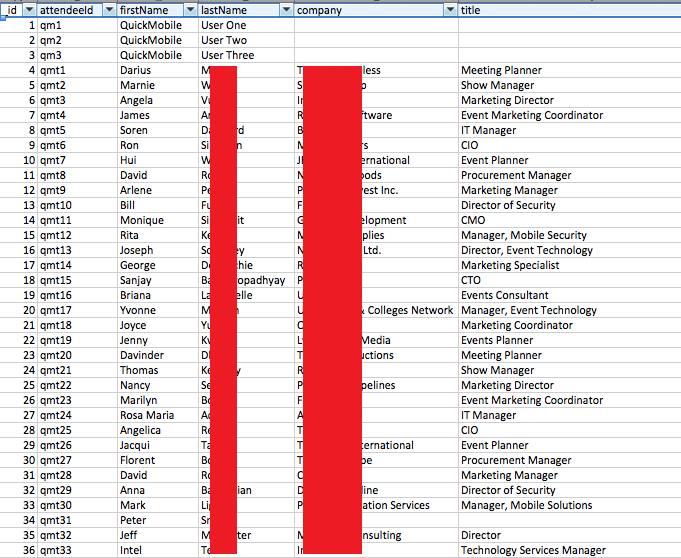

Beware Your RSA Mobile App Download

With the RSA Conference Mobile App, you can stay connected with all Conference activities, view the event catalog, manage session schedules and engage with colleagues and peers while onsite using our social and professional networking tools. You’ll have access to dynamic agenda updates, venue maps, exhibitor listing and more!

PCI DSS and Security Breaches

2. Requirements designed to detect malicious activities.

These requirements involve implementing solutions such as antivirus software, intrusion detection systems, and file integrity monitoring.

3. Requirements designed to ensure that if a security breach occurs, actions are taken to respond to and contain the security breach, and ensure evidence will exist to identify and prosecute the attackers.

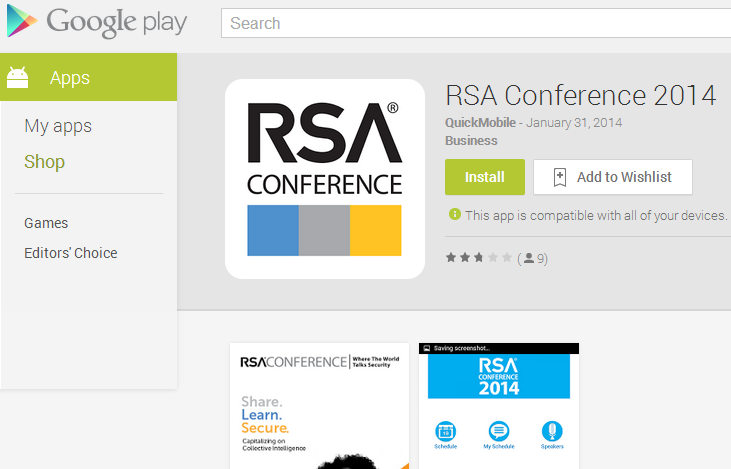

INTERNET-of-THREATS

- Laptops, tablets, smartphones, set-top boxes, media-streaming devices, and data-storage devices

- Watches, glasses, and clothes

- Home appliances, home switches, home alarm systems, home cameras, and light bulbs

- Industrial devices and industrial control systems

- Cars, buses, trains, planes, and ships

- Medical devices and health systems

- Traffic sensors, seismic sensors, pollution sensors, and weather sensors

- Sensitive data sent over insecure channels

- Improper use of encryption

- No SSL certificate validation

- Things like encryption keys and signing certificates easily available to anyone

- Hardcoded credentials/backdoor accounts

- Lack of authentication and/or authorization

- Storage of sensitive data in clear text

- Unauthenticated and/or unauthorized firmware updates

- Lack of firmware integrity check during updates

- Use of insecure custom made protocols

- Training developers on secure development

- Implementing security development practices to improve software security

- Training company staff on security best practices

- Implementing a security patch development and distribution process

- Performing product design/architecture security reviews

- Performing source code security audits

- Performing product penetration tests

- Performing company network penetration tests

- Staying up-to-date with new security threats

- Creating a bug bounty program to reward reported vulnerabilities and clearly defining how vulnerabilities should be reported

- Implementing a security incident/emergency response team
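Of the weaknesses listed earlier in this section, the missing firmware integrity check is one of the easier ones to illustrate. The sketch below is a simplification and an assumption on my part: it uses an HMAC-SHA256 tag with a shared key from the Python standard library, whereas a real device would more likely verify an asymmetric signature so that it only has to hold a public key. The function names are hypothetical.

```python
import hashlib
import hmac


def firmware_tag(image: bytes, key: bytes) -> str:
    """Compute an HMAC-SHA256 tag over the firmware image."""
    return hmac.new(key, image, hashlib.sha256).hexdigest()


def verify_firmware(image: bytes, expected_tag: str, key: bytes) -> bool:
    """Accept the update only if the tag matches (constant-time compare)."""
    return hmac.compare_digest(firmware_tag(image, key), expected_tag)
```

An updater would call `verify_firmware` before writing anything to flash and refuse the image when it returns `False`. Note that a shared key stored on the device would itself fall under the "encryption keys easily available" item in the list, which is why the shared-key variant is shown only to keep the example short.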

The password is irrelevant too

An Equity Investor’s Due Diligence

Information technology companies constitute the core of many investment portfolios nowadays. With so many new startups popping up and some highly visible IPOs and acquisitions by public companies egging things on, many investors are clamoring for a piece of the action and looking for new ways to rapidly qualify or disqualify an investment, particularly so when it comes to the hottest of hot investment areas – information security companies.

Over the years I’ve found myself working with a number of private equity investment firms – helping them to review the technical merits and implications of products being brought to the market by new security startups. In most cases it’s not until the B or C investment rounds that the money being sought by the fledgling company starts to get serious to the investors I know. If you’re going to be handing over money in the five to twenty million dollar range, you’re going to want to do your homework on both the company and the product opportunity.

Over the last few years I’ve noted that a sizable number of private equity investment firms have built into their portfolio review the kind of technical due diligence traditionally associated with the formal acquisition processes of Fortune-500 technology companies. It would seem to me that the $20,000 to $50,000 price tag for a quick-turnaround technical due diligence report is proving to be a valuable investment in a somewhat larger investment strategy.

When it comes to performing the technical due diligence on a startup (whether it’s a security or social media company for example), the process tends to require a mix of technical review and tapping past experiences if it’s to be useful, let alone actionable, to the potential investor. Here are some of the due diligence phases I recommend, and why:

- Vocabulary Distillation – For some peculiar reason new companies go out of their way to invent their own vocabulary as descriptors of their value proposition, or they go to great lengths to disguise the underlying processes of their technology with what can best be described as word-soup. For example, a “next-generation big-data derived heuristic determination engine” can more than adequately be summed up as “signature-based detection”. Apparently using the word “signature” in your technology description is frowned upon and the product management folks avoid using the word (however applicable it may be). Distilling the word soup is a key component of being able to compare apples with apples.

- Overlapping Technology Review – Everyone wants to portray their technology as unique, ground-breaking, or next generation. Unfortunately, when it comes to the world of security, next year’s technology is almost certainly a progression of the last decade’s worth of invention. This isn’t necessarily bad, but it is important to determine the DNA and hereditary path of the “new” technology (and subcomponents of the product the start-up is bringing to market). Being able to filter through the word-soup of the first phase and determine whether the start-up’s approach duplicates functionality from IDS, AV, DLP, NAC, etc. is critical. I’ve found that many start-ups position their technology (i.e. advancements) against antiquated and idealized versions of these prior technologies. For example, simplifying desktop antivirus products down to signature engines – while neglecting things such as heuristic engines, local-host virtualized sandboxes, and dynamic cloud analysis.

- Code Language Review – It’s important to look at the languages that have been employed by the company in the development of their product. Popular rapid prototyping technologies like Ruby on Rails or Python are likely acceptable for back-end systems (as employed within a private cloud), but are potential deal killers to future acquirer companies that’ll want to integrate the technology with their own existing product portfolio (i.e. they’re not going to want to rewrite the product). Similarly, a C or C++ implementation may not offer the flexibility needed for rapid evolution or integration into scalable public cloud platforms. Knowing which development technology has been used where and for what purpose can rapidly qualify or disqualify the strength of the company’s product management and engineering teams – and help orientate an investor on future acquisition or IPO paths.

- Security Code Review – Depending upon the size of the application and the due diligence period allowed, a partial code review can yield insight into a number of increasingly critical areas – such as the stability and scalability of the code base (and consequently the maturity of the development processes and engineering team), the number and nature of vulnerabilities (i.e. security flaws that could derail the company publicly), and the effort required to integrate the product or proprietary technology with existing major platforms.

- Does it do what it says on the tin? – I hate to say it, but there’s a lot of snake oil being peddled nowadays. This is especially so for new enterprise protection technologies. In a nutshell, this phase focuses on the claims being made by the marketing literature and product management teams, and tests both the viability and technical merits of each of them. Test harnesses are usually created to monitor how well the technology performs in the face of real threats – ranging from the samples provided by the company’s user acceptance team (UAT) (i.e. the stuff they guarantee they can do), through to common hacking tools and tactics, and on to a skilled adversary with key domain knowledge.

- Product Penetration Test – Conducting a detailed penetration test against the start-up’s technology, product, or service delivery platform is always thoroughly recommended. These tests tend to unveil important information about the lifecycle-maturity of the product and the potential exposure to negative media attention due to exploitable flaws. This is particularly important to consumer-focused products and services because they are the most likely to be uncovered and exposed by external security researchers and hackers, and any public exploitation can easily set the start-up back a year or more in brand equity alone. For enterprise products (e.g. appliances and cloud services) the hacker threat is different; the focus should be more upon what vulnerabilities could be introduced into the customer’s environment and how much effort would be required to re-engineer the product to meet security standards.

Obviously there’s a lot of variety in the technical capabilities of the various private equity investment firms (and private investors). Some have people capable of sifting through the marketing hype and can discern the actual intellectual property powering the start-up’s technology – but many do not. Regardless, in working with these investment firms and performing the technical due diligence on their potential investments, I’ve yet to encounter a situation where they didn’t “win” in some way or other. A particular favorite of mine is when, following a code review and penetration test that unveiled numerous serious vulnerabilities, the private equity firm was still intent on investing with the start-up but was able to use the report to negotiate much better buy-in terms with the existing investors – gaining a larger percentage of the start-up for the same amount.

Scientifically Protecting Data

This is not “yet another Snapchat Pwnage blog post”, nor do I want to focus on discussions about the advantages and disadvantages of vulnerability disclosure. A vulnerability has been made public, and somebody has abused it by publishing 4.6 million records. Tough luck! Maybe the most interesting article in the whole Snapchat debacle was the one published at www.diyevil.com [1], which explains how data correlation can yield interesting results in targeted attacks. The question then becomes, “How can I protect against this?”

Stored personal data is always vulnerable to attackers who can track it down to its original owner. Because skilled attackers can sometimes gain access to metadata, there is very little you can do to protect your data aside from not storing it at all. Anonymity and privacy are not new concepts. Industries such as healthcare have used these concepts for decades, if not centuries. For the healthcare industry, protecting patient data remains one of the most challenging problems. Where does the balance tip between protecting privacy by not disclosing that person X is a diabetic, and protecting health by giving EMTs information about allergies and existing conditions? It’s no surprise that those who research data anonymity and privacy often use healthcare information for their test cases. In this blog, I want to focus on two key principles relating to this.

k-Anonymity [2]

In 2000, Latanya Sweeney used US Census data to show that 87% of US citizens are uniquely identifiable by their birth date, gender, and zip code [3]. That isn’t surprising from a mathematical point of view, as there are approximately 310 million Americans and roughly 2 billion possible combinations of the {birth date, gender, zip code} tuple. You can easily find out how unique you really are through an online application using the latest US Census data [4]. Although it would be ideal not to store “unique identifiers” like names, usernames, or social security numbers at all, this is rarely practical. Assuming that data storage is a requirement, k-Anonymity comes into play. By using data suppression, where data is replaced by an *, and data generalization, where, for example, a specific age is replaced by an age range, companies can anonymize a dataset to a level where each row is indistinguishable from at least k-1 other rows in the dataset. Whoever thought an anonymity level could actually be mathematically proven?
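The suppression and generalization steps can be sketched in a few lines. This is a minimal illustration under my own assumptions, not the method from [2]: the column names, the decade-wide age ranges, the choice to suppress the last two zip digits, and the sample rows are all hypothetical.

```python
from collections import Counter

QUASI_IDENTIFIERS = ("age", "gender", "zip")  # hypothetical column names


def generalize(row):
    """Generalize age to a decade range and suppress the last two zip digits."""
    low = (row["age"] // 10) * 10
    return {**row, "age": f"{low}-{low + 9}", "zip": row["zip"][:3] + "**"}


def k_of(rows):
    """Return k: the size of the smallest group sharing all quasi-identifiers."""
    groups = Counter(tuple(r[q] for q in QUASI_IDENTIFIERS) for r in rows)
    return min(groups.values())


rows = [
    {"age": 34, "gender": "F", "zip": "98101"},
    {"age": 37, "gender": "F", "zip": "98109"},
    {"age": 52, "gender": "M", "zip": "98052"},
    {"age": 58, "gender": "M", "zip": "98033"},
]

print(k_of(rows))                           # 1: every row is unique
print(k_of([generalize(r) for r in rows]))  # 2: each row matches one other
```

A value like the one returned by `k_of` is the kind of number an organization could track against an agreed minimum, at the cost of the precision lost through generalization.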

k-Anonymity has known weaknesses. Imagine that you know that the data of your Person of Interest (POI) is among four possible records in four anonymous datasets. If these four records have a common trait like “disease = diabetes”, you know that your POI suffers from this disease without knowing the record in which their data is contained. With sufficient metadata about the POI, another concept comes into play. Coincidentally, this is also where we find a possible solution for preventing correlation attacks against breached databases.

l-Diversity [5]

One thing companies cannot control is how much knowledge about a POI an adversary has. This does not, however, divorce us from our responsibility to protect user data. This is where l-Diversity comes into play. This concept does not focus on the fields that attackers can use to identify a person with available data. Instead, it focuses on sensitive information in the dataset. By applying the l-Diversity principle to a dataset, companies can make it notably expensive for attackers to correlate information by increasing the number of required data points.
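A minimal sketch of how this could be measured follows. It implements only the simplest variant, usually called distinct l-diversity, which counts the distinct sensitive values within each group of rows that share the same identifying fields; the paper in [5] also defines stronger variants. The column names and sample rows are hypothetical.

```python
from collections import defaultdict


def l_of(rows, quasi_identifiers, sensitive):
    """Return l: the fewest distinct sensitive values found in any group."""
    groups = defaultdict(set)
    for row in rows:
        key = tuple(row[q] for q in quasi_identifiers)
        groups[key].add(row[sensitive])
    return min(len(values) for values in groups.values())


# Already generalized rows: each group has two members (k = 2).
rows = [
    {"age": "30-39", "zip": "981**", "disease": "diabetes"},
    {"age": "30-39", "zip": "981**", "disease": "diabetes"},
    {"age": "50-59", "zip": "980**", "disease": "asthma"},
    {"age": "50-59", "zip": "980**", "disease": "influenza"},
]

print(l_of(rows, ("age", "zip"), "disease"))  # 1
```

The result of 1 reflects the weakness described for k-Anonymity: anyone known to be in the first group has diabetes, even though no individual row can be singled out.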

Solving Problems

All of this sounds very academic, and the question remains whether or not we can apply this in real-life scenarios to better protect user data. In my opinion, we definitely can.

Social application developers should become familiar with the principles of k-Anonymity and l-Diversity. It’s also a good idea to build KPIs that can be measured against. If personal data is involved, organizations should agree on minimum values for k and l.

More and more applications allow user email addresses to be the same as the associated user name. This directly impacts the l-Diversity database score. Organizations should allow users to select their username and also allow the auto-generation of usernames. Both these tactics have drawbacks, but from a security point of view, they make sense.

Users should have some control. This becomes clear when analyzing the common data points that every application requires.

- Email address:

- Do not use your corporate email address for online services, unless it is absolutely necessary

- If you have multiple email addresses, randomize the email addresses that you use for registration

- If your email provider allows you to append random strings to your email address, such as name+random@gmail.com, use this randomization—especially if your email address is also your username for this service

- Username:

- If you can select your username, make it unique

- If your email address is also your username, see my previous comments on this

- Password:

- Select a unique password for each service

- In some cases, select a phone number for 2FA or other purposes
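The email randomization advice above can be automated. The sketch below assumes a provider that supports the `name+random@` form mentioned in the list; the function name `tagged_address` and the tag length are my own choices for illustration.

```python
import secrets


def tagged_address(address: str, nbytes: int = 4) -> str:
    """Insert a random +tag before the @ of an email address."""
    local, _, domain = address.rpartition("@")
    if not local or not domain:
        raise ValueError("expected an address of the form local@domain")
    tag = secrets.token_hex(nbytes)  # 2 * nbytes hex characters
    return f"{local}+{tag}@{domain}"


print(tagged_address("name@gmail.com"))
```

Using a fresh tag per service means that two breached databases no longer share an identical email address for the same person, although anyone who knows the convention can strip the tag, so this raises the cost of correlation rather than preventing it.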

By understanding the concepts of k-Anonymity and l-Diversity, we now know that this statement in the www.diyevil.com article is incorrect:

“While the techniques described above depend on a bit of luck and may be of limited usefulness, they are yet another tool in the pen tester’s toolset for open source intelligence gathering and user attack vectors.”

The success of the techniques discussed in this blog depends on science and math. And where science and math are in play, solutions can be devised. I can only hope that the droves of “data scientists” that are currently being recruited also understand the principles I have described. I also hope that we will eventually evolve into a world where not only big data matters but where anonymity and privacy are no longer empty words.

[1] http://www.diyevil.com/using-snapchat-to-compromise-users/

[2] https://ioactive.com/wp-content/uploads/2014/01/K-Anonymity.pdf

[3] https://ioactive.com/wp-content/uploads/2014/01/paper1.pdf

[4] http://aboutmyinfo.org/index.html

[5] https://ioactive.com/wp-content/uploads/2014/01/ldiversityTKDDdraft.pdf