The other week I was invited to keynote at the ISSA CISO Forum on Incident Response in Dallas and in the weeks prior to it I was struggling to decide upon what angle I should take. Should I be funny, irreverent, diplomatic, or analytical? Should I plaster slides with the last quarter’s worth of threat statistics, breach metrics, and headline news? Should I quip some anecdote and hope the attending CISO’s would have an epiphany that’ll fundamentally change the way they secure their organizations?

In the end I did none of that… instead I decided to pull apart the latest batch of security buzzwords – “Big Data” and “Machine Learning”.

If you attended RSA USA (or any major security vendor/booth conference) this year you can’t have missed the fact that everything from Antivirus through to USB memory sticks now come with a dab of big data, a sprinkling of machine learning, and a dollop of cloud for good measure. Thankfully I’m a cynic; or else I’d have been thrashing around on the ground in an epileptic fit from all the flashy marketing claims and trademarked nonsense phrases.

I guess I’m lucky to be in the position of having had several years of exposure to some of the greatest minds at Georgia Tech as they drummed in to me on a daily basis the “what and how” of machine learning in the context of solving many of today’s toughest security problems.

So, it was with that in mind that I thought “If I’m a CISO and everything I know about machine learning and big data came from carefully rehearsed vendor sound bites and glossy pamphlets, would I be able to tell the difference between Chanel #5 and cow manure?” The obvious answer would result in some very happy farmers.

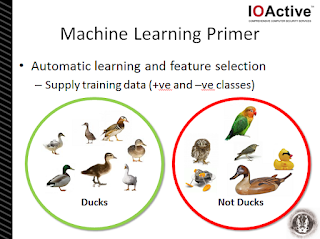

What was the net result of this self-inflection and impending deadline? I crafted a short presentation for CISO’s… a 101 course on machine learning and big data… and it included ducks.

If you’re in the upper tiers of your organization and you’ve had sales folks pimping you their latest cloud-infused, big data-munching, machine learning, and world-hunger-solving security solution, please carry on reading as I attempt to explain the basics of the latest and greatest in buzzwords…

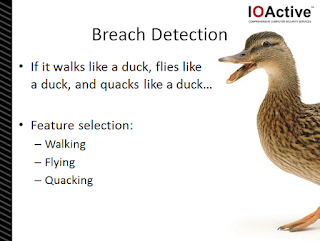

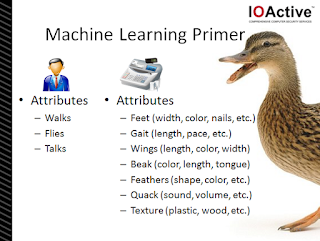

First of all – some context! In the world of breach detection and incident response there’s a common idiom: “If it walks like a duck, flies like a duck, and quacks like a duck… it must be a duck.”

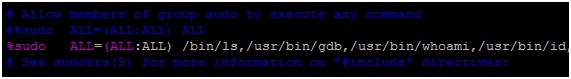

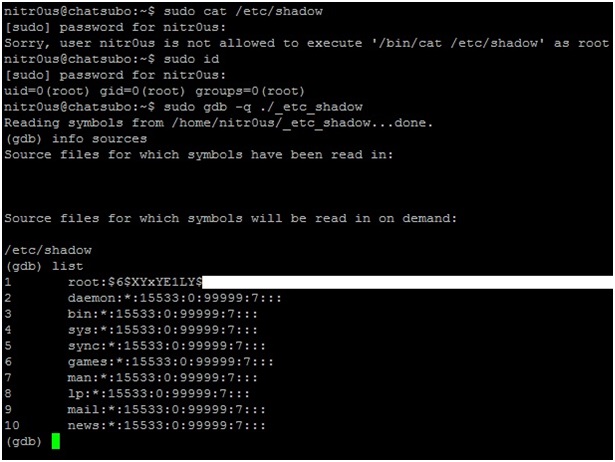

Now I could easily spend another 5,000 words explaining why such an idiom doesn’t apply to modern security threats, targeted attacks and advanced persistent threats, but you’ll have to wait for a different blog post. Rather, for this 101 lesson, it’s important to understand the concept of “Feature Selection” – which in the case of this idiom includes: walking, flying and quacking.

If you’ve been tasked with dealing with a duck problem, ideally you’d be an aficionado on the feet, wings and sounds of ducks. You’d be able to apply this knowledge to each bird you have the time to focus your attention on and make a determination: Duck, or Not a Duck. As a security professional, you’d be versed in the various attributes of certain threats – and able to derive a conclusion as to the nature of the security problem.

The problem though is that at scale things break down.

What do you do when there’s too many to analyze, when time is too short, and when you can’t make out all the duck features from afar? This is typical of the big data problem (and your everyday network traffic). Storing the data is the easy part. Retrieving the data is mechanically complicated, but solvable.

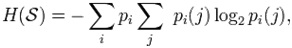

Meanwhile, making decisions and taking actions upon the data is typically the most difficult part. With every doubling of data, your problem grows exponentially.

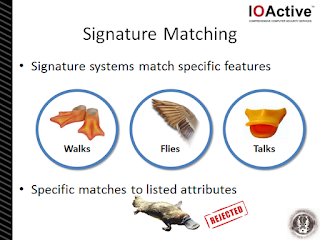

The traditional method of dealing with the situation has been to employ signature matching systems. In essence, we build rules based upon the features we’ve previously identified as significant and capable of bounding the problem (or duck selection). We then compare these rules against the sample animal and receive a binary answer – Duck, or Not a Duck.

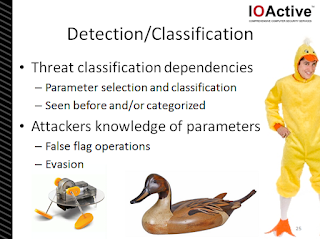

Signature systems can be very efficient at classification. Just look at your average Intrusion Prevention System (IPS). A problem though lies in the scope of the features that had been defined.

If those features (or parameters) used for classification are too narrow (or too broad) then evasion is not only probable, but guaranteed. In essence, for a threat (or duck) to be classified, it must have been observed in the past or carefully predicted (although rare).

From an attacker’s perspective, knowledge of those features and triggering parameters makes it a trivial task to evade or to conduct false flag operations. Just think – hunters do this all the time with their floating duck decoys. Even fellow duck hunters have been known to mistakenly take pot-shots at them too.

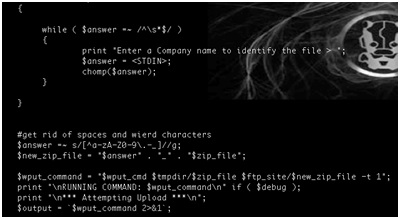

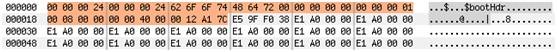

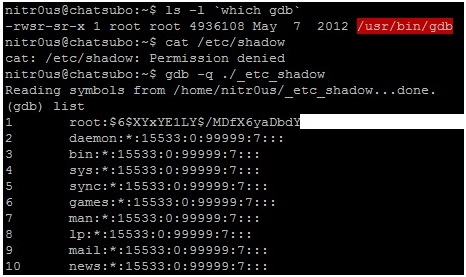

Switching pace a little, let’s look at the network a little.

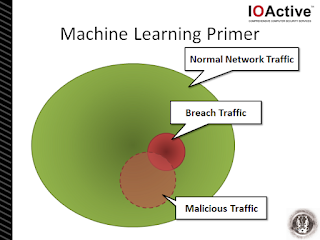

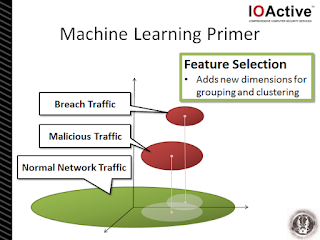

The big green blob is all the network traffic for an organization for a week. The red blog right-of-center is traffic associated with an active breach, and the lighter red blob with the dotted lines are just general malicious traffic observed within the network. In this two-dimensional view (if I hadn’t color-coded it previously) you’d have a near impossible task differentiating between them. As it is, the malicious traffic is mixed with both the “safe” and “breach” traffic.

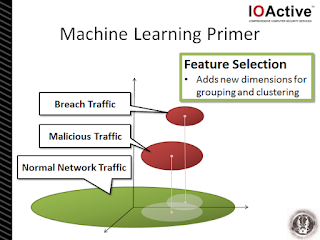

The trick in differentiating between the network traffic types lies in increasing the dimensionality of the problem. What was a two-dimensional blob suddenly becomes much clearer when an appropriate view or perspective to the data is added. In the context of the above diagram, the addition of a z-axis and an extension in to the third-dimension allows the observer (i.e. analyst) to easily differentiate between the traffic types – for example, the axis could represent “country code of destination IP address”. In this context, the appropriate feature selection can greatly simplify the detection problem. Choosing appropriate features is important – nay, it’s critical!

This is where advances in machine learning over the last half-decade have really come to the fore in computer science and more recently in information security.

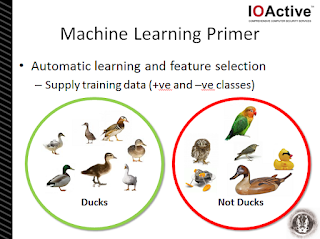

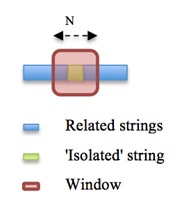

Without getting in to any of the math behind the various machine learning algorithms or techniques, the key concept you need to understand is “training”. It can mean many things to many a mathematician, but since we’re likely not one of those, what training means in our context is that we already have samples of what we’re going to be looking for, and samples of things we know we’re definitely not interested in. The better we define and populate these training sets, the more precise the machine learning system we’re employing will be in differentiating between them – and potentially classifying other contenders.

So, in this example we’ve taken a bunch of ducks and grouped them together. They become our “+ve class” – which basically means these are the things we’re interested in. But, equally important, is our “-ve class” – our collection of things we know not to be ducks. In many cases our -ve class may be more important than our +ve class because it contains all those false positives and “nearly” ducks – the things that may have caught us out once before.

One function of a good machine learning system is to automatically determine which attributes make the most sense in differentiating between your +ve and -ve classes.

While our poor old hunter (or analyst) was working with three features – walks, flies, and talks – the computer-based system may have reviewed all the attributes that were available and determined which ones are the most useful in differentiating between “ducks” and “not ducks”. In many cases the system will have weighted the various features (or attributes) to indicate which features are more deterministic of the classes.

For example, texture may be a good indicator of “not a duck” – since none of the +ve class were made from plastic or wood. Meanwhile features such as “wing length” may not be such a good criteria and will be weighted in a way to not have an influence on determining whether a duck is a duck or not – or may be dropped by the system entirely.

The number of features reviewed, assessed and weighted by the machine learning system will eventually be determined by the type of data being used and how “rich” it is. For example, in the network security realm we may be feeding the system with collated samples of firewall logs, DNS traffic samples, IP blacklists/whitelists, IPS alerts, etc. It’s important to note though that the “richer” the data set (i.e. the more possible features there could be), the more complex the problem is for the computer to solve and the longer it’ll take to train the system.

Now let’s switch back to that “big data” we originally talked about. In the duck realm we’re talking about all the birds within a national park (say). Meanwhile, in the network security realm, we may be talking about all the traffic observed in real-time across a corporate network and all the alerting instrumentation (e.g. firewalls, IPS, etc.)

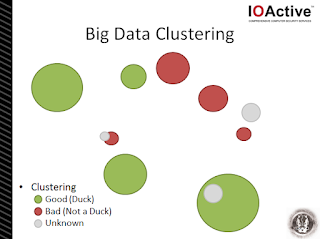

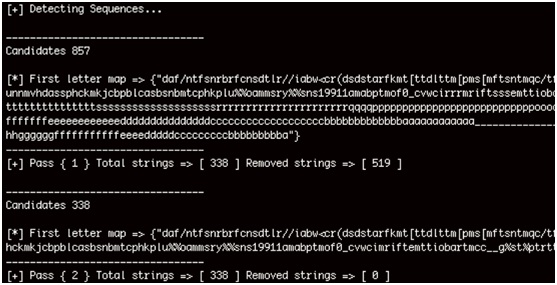

I’m going to do some hand-waving here because it can get rather complex and there’s a lot of proprietary tweaks that can be undertaken here… but in one representation we can get our trained system to automatically group and cluster events on our network.

Using our original training data, or some other previously labeled datasets, it’s possible to automatically label the clusters output by a machine learning system.

For example, in the graphic above we see a number of unique clusters (or blobs if you insist). Through automatic labeling we know that the green blobs are types of ducks, the red blobs are various groupings of not ducks, and the gray blobs are clusters of previously unknown or unlabeled clusters – each one mathematically distinct from the other – based upon the features the system chose.

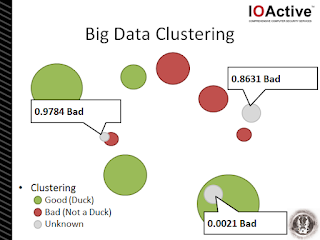

What the system can also do is assign a probability that the unknown clusters are associated with our +ve or -ve training sets. For example, in this particular graphical representation the proximity of the unlabeled clusters to labeled (and classified) clusters allows the system to assign a probability of whether the cluster is a duck or not a duck – even though the system had never seen these things before (i.e. “birds” the system hasn’t encountered before).

The next (and near final) stage is to manually label these new clusters. For example, we ask an ornithologist to look at each cluster of “ducks” and “not ducks” in turn and to label them… “rubber duckies”, “robot duckies”, and “Madagascar mallard ducks”.

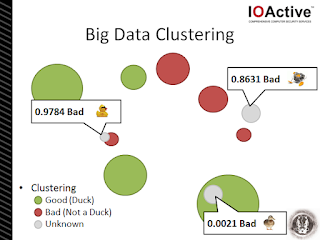

Then, to improve our machine learning system further, we add these newly labeled clusters to our +ve and -ve training sets… and the system continues to learn and become more precise over time.

In addition, since we’ve now labeled these clusters, in the future we’re able to automatically flag new additions to these clusters and correctly label the duck (or threat).

And, if we’re a really smart CISO, we can use this clustering system (and labeled clusters) to automatically alert us to new threats or to initiate automatic network security actions – e.g. enable blocking of a new malicious URL, stop blocking a new cloud service delivering official updates to applications, etc.

The application of machine learning techniques to the toughest security problems facing business today has come along in leaps and bounds recently. However as with any buzz word that falls in to the hands of marketers and gets manipulated until it squeaks and glitters, or oozes onto every product in this year’s price list, senior technical staff need to take added care not to be bamboozled by well-practiced but meaningless word salad.

A little understanding of the concepts behind big data and machine learning can not only cut through the latest batch of sales promises, but can also form the basis of constructing a new generation of meaningful breach detection and incident response solutions.