This analysis of a mobile banking application from X bank illustrates how easily anyone with sufficient knowledge can install and analyze the application, bypassing common protections.

1. Installing and unpacking the application

Only users located in Wonderland can install the X Android application with Google Play, which uses both the phone’s SIM card and IP address to determine the location of the device. To bypass this limitation, remove the SIM card and reset the phone to factory defaults.

Complete the initial Android setup with a Wonderland IP address, using an L2TP VPN service (PPTP encryption support is broken). If Google Play recognizes the device as located in Wonderland, install the application. Once it is installed on a rooted phone, copy the application APK from /data/app/com.X.mobile.android.X.apk.

These are some of the many reversing tools for unpacking and decompiling the APK package:

• apktool http://code.google.com/p/android-apktool/

• smali/baksmali http://code.google.com/p/smali/

• dex2jar http://code.google.com/p/dex2jar/

• jd-gui http://java.decompiler.free.fr/?q=jdgui

• apkanalyser https://github.com/sonyericssondev

In this example, the code was decompiled using jd-gui after converting the APK file to a JAR using the dex2jar tool. The source code produced by jd-gui from Android binary files is not perfect, but is a good reference. The output produced by the baksmali tool is more accurate but difficult to read. The smali code was modified and re-assembled to produce modified versions of the application with restrictions removed. A combination of decompiled code review, code patching, and network traffic interception was used to analyze the application.
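An APK is a ZIP archive, so a first look at its contents needs nothing beyond a standard library. The following is a minimal sketch, not part of the original analysis: the helper names `list_apk_entries` and `extract_dex` are hypothetical, and it only locates the `classes.dex` file that tools such as dex2jar and baksmali take as input.

```python
import io
import zipfile


def list_apk_entries(apk):
    """Return the entry names of an APK (a path or a file-like object)."""
    with zipfile.ZipFile(apk) as archive:
        return archive.namelist()


def extract_dex(apk, entry="classes.dex"):
    """Return the raw bytes of the Dalvik bytecode stored in the APK."""
    with zipfile.ZipFile(apk) as archive:
        return archive.read(entry)


def build_demo_apk():
    """Build a tiny in-memory stand-in for an APK (placeholder contents only)."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w") as archive:
        archive.writestr("AndroidManifest.xml", b"placeholder manifest")
        archive.writestr("classes.dex", b"dex\n035\x00placeholder")
    buffer.seek(0)
    return buffer
```

With a real file copied from the phone, `list_apk_entries("X.apk")` (a hypothetical local file name) would show the manifest, resources, and `classes.dex` before any decompiler is run.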

2. Bypassing the SMS activation

The application, when started for the first time, asks for a Wonderland mobile number. Activating a new account requires an activation code sent by SMS. We tested three different ways to bypass these restrictions, two of which worked. We were also able to use parts of the application without a registered account.

2.1 Intercepting and changing the activation code HTTPS request

The app GUI only accepts cell phone numbers with the 0X prefix, and it always adds the +XX prefix before requesting an activation code from the web service at https://android.X.com/activationCode

Intercepting the request and changing the phone number didn’t work, because the phone prefix is verified on the server side.

2.2 Editing shared preferences

The app uses the “Shared Preferences” Android service to store its minimal configuration. The configuration can be easily modified on a rooted phone by editing the file:

/data/data/com.X.mobile.android.X/shared_prefs/preferences.xml

Set the “activated” preference to “true” like this:

<?xml version='1.0' encoding='utf-8' standalone='yes' ?>

<map>

<boolean name="welcome_screen_viewed" value="true" />

<string name="msisdn">09999996666</string>

<boolean name="user_notified" value="true" />

<boolean name="activated" value="true" />

<string name="guid" value="" />

<boolean name="passcode_set" value="true" />

<int name="version_code" value="202" />

</map>
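The same edit can be scripted instead of done by hand. The sketch below is an illustration under stated assumptions: the function name `set_boolean_pref` is hypothetical, and it assumes the preferences file has already been copied off the rooted phone and uses the `<map>` / `<boolean name=... value=...>` layout shown above.

```python
import xml.etree.ElementTree as ET


def set_boolean_pref(xml_bytes, name, value):
    """Return the preferences XML with the boolean entry `name` set to `value`.

    The entry is created if it does not exist yet.
    """
    root = ET.fromstring(xml_bytes)
    text = "true" if value else "false"
    for node in root.findall("boolean"):
        if node.get("name") == name:
            node.set("value", text)
            break
    else:
        # No matching entry was found, so append a new one to <map>.
        ET.SubElement(root, "boolean", {"name": name, "value": text})
    return ET.tostring(root, encoding="unicode")
```

The returned string would then be written back to preferences.xml on the device. The serializer drops the XML declaration, so it may need to be prepended again.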

2.3 Starting the SetPasscode activity

The Android design allows the user to bypass the normal startup and directly start activities exported by applications. The decompiled code shows that the SetPasscode activity can be started after the activation code is verified. Start the SetPasscode activity using the “am” tool as follows:

From the adb root shell:

# am start -a android.intent.action.MAIN -n com.X.mobile.android.X/.activity.registration.SetPasscodeActivity

3. Intercepting HTTPS requests

To read and modify the traffic between the app and the server, perform an SSL/TLS MiTM attack. With the Android version we used for testing, we weren’t able to create a CA certificate and install it through the Android user interface; Android ignored CA certificates added by the user. Instead, we located and modified the app’s HTTP client code to make it accept any certificate, then installed the modified APK file on the phone. Using iptables on the device, we redirected all HTTPS traffic to a MiTM proxy to intercept and modify requests sent by the application.

4. Data storage

The app doesn’t store any data on external storage (SD card), and it doesn’t use an SQLite database. Preferences are stored in the “Shared Preferences” XML file. Access to the preferences is restricted to the application. During a review of the decompiled code, we didn’t find any evidence of sensitive information being stored in the shared preferences.

5. Attack scenario: Device compromised while the app is running

The X Android application doesn’t store sensitive information on the device. In the event of theft, loss, or remote penetration, the auto-lockout mechanism reduces the risk of unauthorized use of the running application. When the app is not being used or is running in the background, the screen is locked and the passcode must be entered to initiate a new session. The HTTPS session cookie expires after 300 seconds. The current session cookie is removed from memory when the application is locked.

5.1 Attacker with root privileges

An attacker with root access to the device can obtain the GUID and phone number from the unencrypted XML configuration file, but without the clear-text or encrypted passcode, the mobile banking web services cannot be accessed. We discovered a way to “unlock” the application by using the Android framework to start the AccountsFragmentActivity activity, but if the web session has already expired, the app limits the attacker to read-only access. The most profitable path for the attacker at this point is to dump the memory of the running process, as we explain in the next section.

5.2 Memory dump analysis

An attacker who gets root access can acquire the memory of a running Android application in several different ways. “Acquisition and Analysis of Volatile Memory from Android Devices,” published in the Digital Investigation Journal, describes some of the different methods.

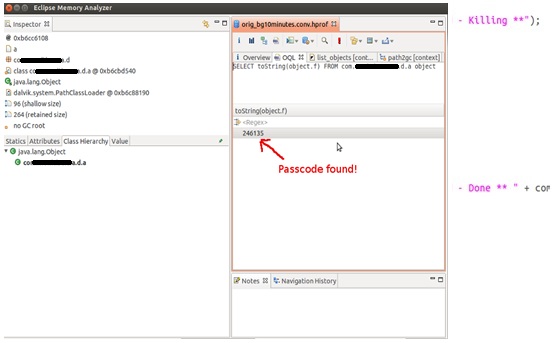

We used the ddms (Dalvik Debug Monitor Server) tool included with the Android SDK to acquire the memory dump. The Eclipse Memory Analyzer tool with Object Query Language (OQL) support is one of the most powerful ways to explore the application memory. Because the application passcodes are short numeric strings, a simple regular-expression search returns too many occurrences. The attacker can’t try every numeric string found in the process memory as a passcode for the app web services, because the account is blocked after a few attempts. The HTTPS session cookies are longer strings and easier to find.

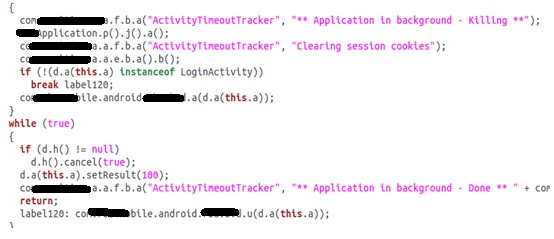

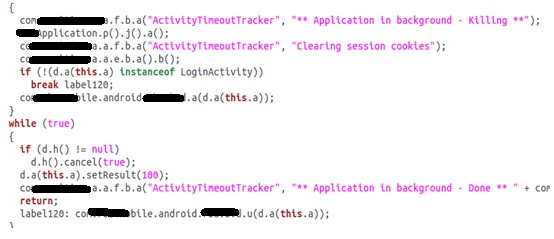

By searching for the prefix “JSESSION,” the attacker can easily locate the cookies when the application is running and active. However, the cookies are removed from memory after a timeout. The ActivityTimeoutTracker function calls the method clear() of the HashMap used to store the cookies. The cookie HashMap is accessed through the singleton class com.X.a.a.e.b.
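The contrast between the two searches can be sketched in a few lines. This is an illustration only, with several assumptions: the cookie is assumed to appear as `JSESSIONID=<token>` in ASCII bytes (for example in a network buffer), the token alphabet and minimum length are guesses, and a passcode is assumed to be 4 to 8 digits. Java `char` values are 16 bits wide, so strings held in char arrays would need a UTF-16 variant of these patterns.

```python
import re

# Assumption: session cookie in the form JSESSIONID=<token>, ASCII encoded.
COOKIE_RE = re.compile(rb"JSESSIONID=([0-9A-Za-z.!_-]{16,})")

# Assumption: passcodes are isolated runs of 4 to 8 digits.
PASSCODE_RE = re.compile(rb"(?<!\d)\d{4,8}(?!\d)")


def find_session_cookies(dump):
    """Return the distinct cookie tokens found in a raw memory dump."""
    return sorted({m.group(1).decode("ascii") for m in COOKIE_RE.finditer(dump)})


def count_numeric_candidates(dump):
    """Count digit runs that could be mistaken for a passcode."""
    return sum(1 for _ in PASSCODE_RE.finditer(dump))
```

On a real dump the second count is typically far larger than the number of login attempts the server allows, which is why the distinctive cookie prefix is the more practical target.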

Reviewing the decompiled code, we located a variable where the passcode is stored before being encrypted to perform the session initiation request. The field String f of the class com.X.a.a.d.a contains the passcode, and it is never overwritten or released. The references to the instance prevent the garbage collection of the string. Executing the OQL query “SELECT toString(object.f) FROM com.X.a.a.d.a object” inside the Eclipse Memory Analyzer is sufficient to reliably locate the passcode in the application memory dump.

Although developers tried to remove information from the memory to prevent this type of attack, they left the most important piece of information unprotected.
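The general countermeasure is to keep a secret in a mutable buffer and overwrite it as soon as it has been used. The sketch below illustrates that idea in Python and is not the app's code: the `Passcode` class is hypothetical, and in the app's own language the analogous choice would be a `char[]` instead of an immutable `String`. Any copy made by the consumer is outside the wrapper's control.

```python
class Passcode:
    """Hold a passcode in a mutable buffer so it can be wiped after use."""

    def __init__(self, buffer):
        # Take ownership of the caller's bytearray; no immutable copy is made.
        self._buffer = buffer

    def use(self, consumer):
        """Pass the buffer to `consumer` once, then wipe it unconditionally."""
        try:
            return consumer(self._buffer)
        finally:
            self.wipe()

    def wipe(self):
        """Overwrite every byte of the passcode in place."""
        for index in range(len(self._buffer)):
            self._buffer[index] = 0
```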

6. Attack scenario: Perform MiTM using a compromised CA

The banking application validates certificates against all the CA certificates that ship with Android. Any single compromised CA in the system key store can potentially compromise communication between the app and the backend. An active network attacker can hijack the connection between the mobile app and the server to impersonate the user.

Mobile apps that make SSL/TLS connections to a service controlled by the vendor don’t need to trust Certificate Authority signatures. The app could therefore implement certificate “pinning” or distribute a signing certificate created by the vendor.
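At its core, pinning compares the certificate presented by the server with a fingerprint shipped inside the app. The following is a minimal sketch of that comparison only, under stated assumptions: the function names are hypothetical, the pin is the SHA-256 digest of the whole DER-encoded certificate, and the check is assumed to run before any request data is sent.

```python
import hashlib
import hmac


def fingerprint(cert_der):
    """Return the SHA-256 fingerprint of a DER-encoded certificate as hex."""
    return hashlib.sha256(cert_der).hexdigest()


def is_pinned(cert_der, pinned_fingerprints):
    """Return True if the certificate matches one of the pinned fingerprints."""
    actual = fingerprint(cert_der)
    return any(hmac.compare_digest(actual, pin) for pin in pinned_fingerprints)
```

In Python, the DER bytes of the peer certificate could be obtained with `ssl.SSLSocket.getpeercert(binary_form=True)`. A certificate issued for the same host name by a different CA would fail this check even if that CA is in the system key store.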

The authentication protocol is vulnerable to MiTM attacks. The app’s authentication protocol uses the RSA and 3DES algorithms to encrypt the passcode before sending it to the server. After the user types the passcode and presses the “login” button, the client retrieves an RSA public key from the server without having to undergo any authenticity check, which allows for an MiTM attack. We were able to implement an attack and capture the passcodes from the app in our testing environment. Although the authentication protocol implemented by the app is insecure, attacks are prevented by the requirement of SSL/TLS for every request. Once someone bypasses the SSL/TLS certificate verification, though, the encryption of the passcode doesn’t provide extra protection.
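The key-substitution problem can be shown with a toy model. This sketch is not the app's protocol: it uses textbook RSA with tiny primes, omits 3DES and padding entirely, and all names are hypothetical. It only demonstrates that a client which encrypts under whatever public key the network returns hands its passcode to anyone who can replace that key.

```python
def make_keypair(p, q, e=17):
    """Build a toy RSA key pair from two small primes (insecure by design)."""
    n = p * q
    phi = (p - 1) * (q - 1)
    d = pow(e, -1, phi)  # modular inverse, requires Python 3.8+
    return (n, e), (n, d)


def encrypt(public_key, message):
    n, e = public_key
    return pow(message, e, n)


def decrypt(private_key, ciphertext):
    n, d = private_key
    return pow(ciphertext, d, n)


SERVER_PUBLIC, SERVER_PRIVATE = make_keypair(61, 53)
ATTACKER_PUBLIC, ATTACKER_PRIVATE = make_keypair(89, 97)


def client_login(passcode, fetch_public_key):
    """Encrypt the passcode under the fetched key, with no authenticity check."""
    return encrypt(fetch_public_key(), passcode)


def mitm(passcode):
    """Swap in the attacker's key, read the passcode, re-encrypt for the server."""
    intercepted = client_login(passcode, lambda: ATTACKER_PUBLIC)
    stolen = decrypt(ATTACKER_PRIVATE, intercepted)
    forwarded = encrypt(SERVER_PUBLIC, stolen)
    return stolen, forwarded
```

The server decrypts the forwarded value normally, so neither side notices the substitution. Only the transport-level certificate check stands in the way.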

7. Attack scenario: Enumerate users

The web service API allows user enumeration. With a simple JSON request, attackers can determine if a given phone number is using the service, and then use this information to guess passwords or mount social engineering attacks.

About Juliano

Juliano Rizzo has been involved in computer security for more than 12 years, working on vulnerability research, reverse engineering, and development of high quality exploits for bugs of all classes. As a researcher he has published papers, security advisories, and tools. His recent work includes the ASP.NET “padding oracle” exploit, the BEAST attack, and the CRIME attack. Twitter: @julianor