As most of us know, the Earth’s CO2 levels keep rising, which directly contributes to the melting of our pale blue dot’s icecaps. This is slowly but surely making it harder for our beloved polar bears to keep on living. So, it’s time for us information security professionals to do our part. As we all know, every packet traveling over the Internet is processed by power-hungry CPUs. By simply sending fewer packets, we can consume less electricity while still getting our banner grabbing, and subsequently our work, done.

But first a little bit of history. Back in the old days, port scanners were very simple. Some of the first scanners out there were probe_tcp_ports (published in Phrack #46) and pscan.c by pluvius. The first scanner I found that actually managed to use non-blocking I/O was strobe written by Proff, more commonly known nowadays as Julian Assange, somewhere in 1995. Using non-blocking I/O obviously made it a lot faster.

In 1996, scantcp.c, written by Uriel Maimon (published in Phrack #49), became one of the first scanners to use half-open/SYN scanning. This was followed in 1997 by the introduction of nmap by Gordon “Fyodor” Lyon in The Art of Port Scanning (published in Phrack #51).

Of course, any security person worth his salt knows nmap and is at least familiar with a good subset of the myriad of scanning techniques it implements. But, in many cases, when scanning for TCP ports we want results as quickly as possible, so we can get a list of open ports we can connect to from the source address that we’re scanning and subsequently identify the services behind those ports.

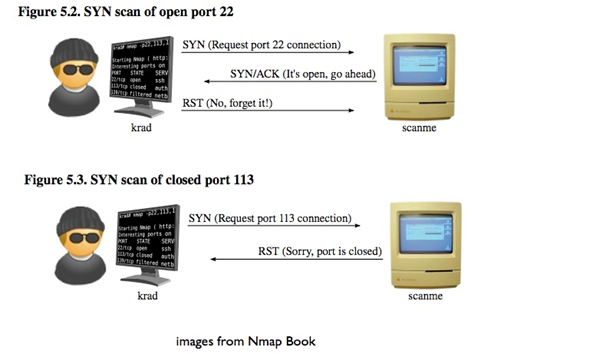

To determine if a port is open, we send out a SYN packet and watch for one of several things to happen. Assuming there are no firewalls or routing issues, the receiving TCP/IP stack will either:

- Send a packet with the RST flag set, which means the port is closed and the host is not accepting connections on that port.

- Send a packet with the SYN and ACK flags set, which means the host is accepting the connection. The Operating System under which nmap runs doesn’t know anything about the outgoing SYN packet (as it was created in raw mode), and subsequently it will send back an RST packet as a reply.
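
The two cases above boil down to a check on the TCP flags of the reply. Here is a minimal sketch of that check; the function name `classify_reply` is made up for illustration and is not taken from nmap or any other scanner, and the flag values are the standard TCP header bits.

```python
# Standard TCP header flag bits.
SYN = 0x02
RST = 0x04
ACK = 0x10


def classify_reply(flags):
    """Map the flags byte of a reply to a SYN probe onto a port state."""
    if flags & RST:
        # RST (usually RST|ACK): nothing is listening on that port.
        return "closed"
    if (flags & (SYN | ACK)) == (SYN | ACK):
        # SYN|ACK: the host is accepting the connection.
        return "open"
    # Anything else is not a valid answer to a SYN probe.
    return "unknown"


print(classify_reply(SYN | ACK))
print(classify_reply(RST | ACK))
```

No reply at all (a firewall silently dropping the probe, for instance) is deliberately not handled here, as that requires a timeout rather than a flag check.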

To summarize, see the image courtesy of the nmap book.

This is great, as we can now list the open ports, and this forms the basis of half-open/SYN scanning. However, one thing that nmap does is track the number of outstanding probes and retransmit them a number of times if no response is received in a timely fashion. This leads to a lot of complexity, and it makes scanning slower too. Besides that, standard scanning modes do not offer protection against an adversary who is feeding wrong responses down the pipe. So nmap can, under such circumstances, claim that a port is open when it is not, or vice versa.

This led to some efforts to protect the outgoing SYN probes by cryptographically signing them. The first known public release of this was scanrand by Dan Kaminsky, introduced in his collection of Paketto Keiretsu utilities. It generates and fires off packets as quickly as possible. Each SYN probe is protected by a cryptographic cookie based on an HMAC calculated over the 4-tuple identifying the connection (the source and destination IP addresses and ports) and a randomly generated secret. As a valid reply for an open port needs to be sent back with an incremented TCP ACK value, it’s easy to recalculate the original cookie and deduce if it was indeed a response to a packet sent out by the scanner. This completely eliminates the need to keep state, although a bit of packet loss can mean some probes get lost and won’t be retransmitted, and as such not all open ports will be discovered.
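
To make the cookie idea concrete, here is a rough sketch of how such a stateless check could look. It is not scanrand's actual code: the function names, the choice of HMAC-SHA256, the byte layout of the 4-tuple, and the truncation to 32 bits are all assumptions made for illustration. The cookie is used as the sequence number of the outgoing SYN, so a genuine SYN|ACK must acknowledge the cookie plus one.

```python
import hashlib
import hmac
import os
import socket
import struct

# Random secret generated once per scan (assumed to be 16 bytes here).
SECRET = os.urandom(16)


def syn_cookie(secret, src_ip, src_port, dst_ip, dst_port):
    """Derive a 32-bit sequence number from the connection 4-tuple."""
    msg = (socket.inet_aton(src_ip) + socket.inet_aton(dst_ip)
           + struct.pack("!HH", src_port, dst_port))
    digest = hmac.new(secret, msg, hashlib.sha256).digest()
    # Keep only the first 4 bytes so the value fits a TCP sequence number.
    return struct.unpack("!I", digest[:4])[0]


def is_our_reply(secret, src_ip, src_port, dst_ip, dst_port, ack):
    """Check a SYN|ACK against the cookie; src is the scanner, dst the target."""
    cookie = syn_cookie(secret, src_ip, src_port, dst_ip, dst_port)
    expected = (cookie + 1) & 0xFFFFFFFF  # sequence numbers wrap at 2**32
    return ack == expected


cookie = syn_cookie(SECRET, "10.0.2.15", 54321, "192.0.2.6", 22)
print(is_our_reply(SECRET, "10.0.2.15", 54321, "192.0.2.6", 22,
                   (cookie + 1) & 0xFFFFFFFF))
```

Because the cookie can be recomputed from the reply itself, nothing about the probe has to be remembered between sending it and receiving the answer.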

So that’s all great. But what’s one of the first things we do after discovering an open port? Connect to it properly. Possibly to do a banner grab or a version scan or just to see what the heck is behind this port. Now when it comes to standard IANA port assignments, in most cases, it’s relatively clear what’s behind a port. If open, port 22 is most likely SSH, port 80 HTTP, port 143 IMAP etc. But we still want to check.

So what happens if you do an nmap version scan? Or a simple banner grab after a port scan? After receiving a SYN|ACK reply from an open port, the scanner jots down that the port is open, and then connects to it. The only way to do this is to go through libc and the kernel and do a full TCP connect() call. This will result in another SYN – SYN|ACK – ACK exchange on the wire before any data can actually be exchanged. Combining that with the original SYN – SYN|ACK – RST exchange, that’s a total of three potentially unnecessary packets being exchanged.
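
The arithmetic behind those three packets can be spelled out as a small sketch. It assumes, hypothetically, that the SYN|ACK answering the probe could be reused to complete a single handshake; the names below are made up for illustration.

```python
# Packets on the wire for one open port, as described above.
SYN_SCAN = ["SYN", "SYN|ACK", "RST"]  # half-open probe, reset by the kernel
CONNECT = ["SYN", "SYN|ACK", "ACK"]   # full connect() before any data flows


def packets_saved(open_ports):
    """Packets avoided if every open port needs one handshake instead of two exchanges."""
    two_step = len(SYN_SCAN) + len(CONNECT)  # scan first, then connect
    one_step = len(CONNECT)                  # probe doubles as the handshake
    return (two_step - one_step) * open_ports


print(packets_saved(1))
print(packets_saved(1000))
```

The saving only applies to ports that turn out to be open; closed ports cost a SYN and an RST either way.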

So how would one go about solving this? There are a few approaches. One is by patching the host kernel and enabling an API that allows us to send out raw SYN probes without keeping any state. By then adding a callback API when valid SYN|ACK packets are received, we would have a mechanism to turn these connections into valid file descriptors. But that means writing a lot of complicated kernel code.

The other approach is to use a userland TCP/IP stack. These work exactly the same as any normal TCP/IP stack, but they just run in userspace. That makes it easier to integrate them into scanners and modify them without having to deal with complicated custom kernel modules.

Having written several scanners over the years, I always wanted a good userland TCP/IP stack, but I never saw one that fit my needs or whose code quality I actually liked. And writing one myself seemed like a lot of work, so I shied away from it.

Anyhow, ever seen the movie American Beauty? Where Kevin Spacey’s wife comes home and asks him about the 1970 Pontiac Firebird out on the driveway which he bought with the money received from blackmailing his former boss? It’s such a great scene; “the car I’ve always wanted and now I have it. I rule”.

That’s sort of how I felt when I stumbled over Patrick Kelsey’s libuinet. The userland TCP/IP stack I’ve always wanted and now I have it. I rule!! It’s a port of the FreeBSD TCP/IP stack with kernel paradigms ported back on userland libraries, such as POSIX threads. It’s great, and with a bit of fiddling, I got it to work on Linux too.

That enabled me to combine the two ideas into just one port scanner: the stateless SYN scanning first introduced in scanrand and the custom TCP/IP stack. Now I can immediately resume doing a banner grab or sending a request without having to do another TCP connect() call. To prevent the hosting Linux kernel from interfering and sending an RST packet, an iptables firewall rule is inserted. Otherwise, the userland TCP/IP stack would try to pick up the connection from the SYN|ACK, but by then the Linux kernel would already have frontrun it and sent the RST out. After this was figured out, tying everything else together in the tool was relatively easy, and thus polarbearscan was born.
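
The exact firewall rule polarbearscan inserts is not shown here, so the following is only a sketch of what such a rule commonly looks like: it drops outgoing TCP packets that have the RST flag set and originate from the scanning address. The helper name `rst_drop_rule` is hypothetical; the iptables options used (`-A OUTPUT`, `-p tcp`, `-s`, `--tcp-flags`, `-j DROP`) are standard ones.

```python
import shlex


def rst_drop_rule(src_ip):
    """Build an iptables command that drops outgoing RST packets from src_ip."""
    return ["iptables", "-A", "OUTPUT", "-p", "tcp", "-s", src_ip,
            "--tcp-flags", "RST", "RST", "-j", "DROP"]


# Print the command instead of running it; applying it requires root.
print(shlex.join(rst_drop_rule("10.0.2.15")))
```

A rule this broad also suppresses resets for every other TCP connection from that address, so a real tool would likely narrow it down (for example to the source ports used by the scanner) and remove it again once the scan finishes.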

The above approach saves us from sending a couple of packets. And thus saves CPU cycles. And so we can hopefully give the polar bears a few more years. The code for the polarbear scanner can be found here, and instructions on how to compile it and get it up and running on Linux systems are in the README in the distribution.

A sample run of the tool doing a standard banner grab for ports 21, 22, and 143 (FTP, SSH, IMAP), with outgoing SYN probes limited to a bandwidth of just 10kbps and scanning a /20 range, will yield something like this:

$ sudo ./pbscan -10k -sB -p21,22,143 x.x.x.x/20

seed: 0xb21f7f4e, iface: eth0, src: 10.0.2.15 (id: 54321, ttl: 64, win: 65535)

x.x.x.6:21 -> 220---------- Welcome to Pure-FTPd [privsep] [TLS] ----------

x.x.x.6:22 -> SSH-2.0-OpenSSH_4.3

x.x.x.14:21 (t/o: 0s)

x.x.x.6:143 -> * OK [CAPABILITY IMAP4rev1 LITERAL+ SASL-IR LOGIN-REFERRALS ID ENABLE IDLE STARTTLS AUTH=PLAIN] Dovecot DA ready.

x.x.x.8:143 -> * OK [CAPABILITY IMAP4rev1 LITERAL+ SASL-IR LOGIN-REFERRALS ID ENABLE IDLE STARTTLS AUTH=PLAIN] Dovecot DA ready.

x.x.x.15:21 (t/o: 0s)

x.x.x.16:21 (t/o: 0s)

x.x.x.8:22 -> SSH-2.0-OpenSSH_5.3

x.x.x.9:143 -> * OK [CAPABILITY IMAP4rev1 LITERAL+ SASL-IR LOGIN-REFERRALS ID ENABLE IDLE STARTTLS AUTH=PLAIN] Dovecot DA ready.

x.x.x.8:21 -> 220 ProFTPD 1.3.3e Server ready.

x.x.x.10:22 -> SSH-2.0-OpenSSH_4.3

x.x.x.10:21 -> 220 ProFTPD 1.3.5 Server ready.

x.x.x.9:22 -> SSH-2.0-OpenSSH_5.3

x.x.x.9:21 -> 220 ProFTPD 1.3.3e Server ready.

x.x.x.17:21 (t/o: 0s)

x.x.x.11:22 -> SSH-2.0-OpenSSH_4.3

x.x.x.10:143 -> * OK [CAPABILITY IMAP4rev1 LITERAL+ SASL-IR LOGIN-REFERRALS ID ENABLE IDLE STARTTLS AUTH=PLAIN] Dovecot DA ready.

x.x.x.7:21 -> 220 Core FTP Server Version 1.2, build 369 Registered

x.x.x.11:21 -> 220---------- Welcome to Pure-FTPd [privsep] [TLS] ----------

x.x.x.13:143 -> * OK [CAPABILITY IMAP4rev1 LITERAL+ SASL-IR LOGIN-REFERRALS ID ENABLE IDLE STARTTLS AUTH=PLAIN] Dovecot DA ready.

x.x.x.13:21 -> 220 ProFTPD 1.3.4d Server ready.

x.x.x.13:22 -> SSH-2.0-OpenSSH_4.3

x.x.x.14:22 -> SSH-2.0-OpenSSH_5.1p1 Debian-6ubuntu2

x.x.x.15:22 -> SSH-2.0-OpenSSH_5.1p1 Debian-6ubuntu2

x.x.x.16:22 -> SSH-2.0-OpenSSH_6.6.1p1 Ubuntu-2ubuntu2

x.x.x.18:22 -> SSH-2.0-OpenSSH_5.5p1 Debian-6+squeeze5

x.x.x.19:22 -> SSH-2.0-OpenSSH_4.3

x.x.x.19:21 -> 220---------- Welcome to Pure-FTPd [privsep] [TLS] ----------

x.x.x.17:143 -> * OK [CAPABILITY IMAP4rev1 LITERAL+ SASL-IR LOGIN-REFERRALS ID ENABLE IDLE STARTTLS LOGINDISABLED] Dovecot (Ubuntu) ready.

x.x.x.21:22 -> SSH-2.0-OpenSSH_5.3

x.x.x.22:22 -> SSH-2.0-OpenSSH_4.3

x.x.x.25:22 -> SSH-2.0-OpenSSH_4.3

x.x.x.25:143 -> * OK [CAPABILITY IMAP4rev1 LITERAL+ SASL-IR LOGIN-REFERRALS ID ENABLE STARTTLS AUTH=PLAIN] Dovecot DA ready.

x.x.x.26:22 -> SSH-2.0-OpenSSH_4.3

x.x.x.27:143 -> * OK [CAPABILITY IMAP4rev1 LITERAL+ SASL-IR LOGIN-REFERRALS ID ENABLE STARTTLS AUTH=PLAIN] Dovecot DA ready.

x.x.x.26:143 -> * OK [CAPABILITY IMAP4rev1 LITERAL+ SASL-IR LOGIN-REFERRALS ID ENABLE STARTTLS AUTH=PLAIN] Dovecot DA ready.

x.x.x.27:22 -> SSH-2.0-OpenSSH_4.3

x.x.x.36:143 -> * OK [CAPABILITY IMAP4rev1 LITERAL+ SASL-IR LOGIN-REFERRALS ID ENABLE IDLE STARTTLS AUTH=PLAIN] Dovecot DA ready.

x.x.x.36:22 -> SSH-2.0-OpenSSH_5.3

x.x.x.38:22 -> SSH-2.0-OpenSSH_6.6p1 Ubuntu-2ubuntu1

x.x.x.40:22 -> SSH-2.0-OpenSSH_4.7p1 Debian-8ubuntu1.2

x.x.x.17:22 (t/o: 5s)

x.x.x.35:22 (t/o: 5s)

x.x.x.37:22 (t/o: 5s)

x.x.x.239:22 -> SSH-2.0-OpenSSH_5.1p1 Debian-5

x.x.x.100:22 (t/o: 5s)

x.x.x.124:22 (t/o: 6s)

x.x.x.242:22 (t/o: 5s)
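
For a rough feel of how long the SYN phase of a run like this takes, here is a back-of-the-envelope sketch. It rests on several assumptions that are not taken from the tool: that 10kbps means 10,000 bits per second, that every address in the /20 is probed, and that each SYN counts as 40 bytes (a 20-byte IP header plus a 20-byte TCP header without options, ignoring link-layer framing). The function name is made up for illustration.

```python
import ipaddress


def syn_phase_seconds(cidr, ports, rate_bps, syn_bytes=40):
    """Estimate the time needed to send one SYN per address and port."""
    addresses = ipaddress.ip_network(cidr).num_addresses
    probes = addresses * len(ports)
    # Total bits to send, divided by the allowed bits per second.
    return probes * syn_bytes * 8 / rate_bps


# A /20 holds 4096 addresses; 10.0.0.0/20 is just a stand-in range.
print(syn_phase_seconds("10.0.0.0/20", [21, 22, 143], 10_000))
```

Under these assumptions the 12,288 probes take a bit over six and a half minutes to send; banner grabs and timeouts come on top of that.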

The tool can also do more than a passive banner grab. It includes other scan modes in which it tries to identify TLS servers by sending them a TLS NULL probe, and to identify HTTP servers.

Enjoy!