Today, no one is surprised at the appearance of an IIoT. The idea of putting your logging, monitoring, and even supervisory/control functions in the cloud does not sound as crazy as it did several years ago. If you look at mobile application offerings today, many more ICS- related applications are available than two years ago. Previously, we predicted that the “rapidly growing mobile development environment” would redeem the past sins of SCADA systems.

The purpose of our research is to understand how the landscape has evolved and assess the security posture of SCADA systems and mobile applications in this new IIoT era.

SCADA and Mobile Applications

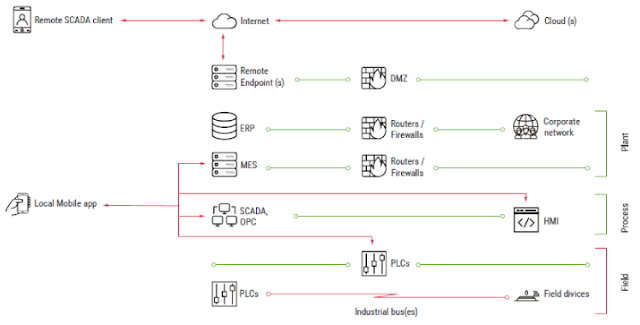

ICS infrastructures are heterogeneous by nature. They include several layers, each of which is dedicated to specific tasks. Figure 1 illustrates a typical ICS structure.

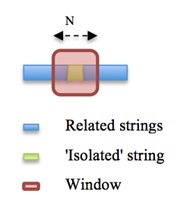

Mobile applications reside in several ICS segments and can be grouped into two general families: Local (control room) and Remote.

Local Applications

Local applications are installed on devices that connect directly to ICS devices in the field or process layers (over Wi-Fi, Bluetooth, or serial).

Remote Applications

Remote applications allow engineers to connect to ICS servers using remote channels, like the Internet, VPN-over-Internet, and private cell networks. Typically, they only allow monitoring of the industrial process; however, several applications allow the user to control/supervise the process. Applications of this type include remote SCADA clients, MES clients, and remote alert applications.

In comparison to local applications belonging to the control room group, which usually operate in an isolated environment, remote applications are often installed on smartphones that use Internet connections or even on personal devices in organizations that have a BYOD policy. In other words, remote applications are more exposed and face different threats.

Typical Threats And Attacks

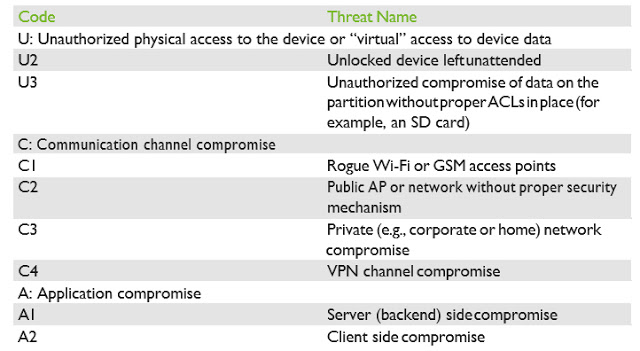

- Unauthorized physical access to the device or “virtual” access to device data

- Communication channel compromise (MiTM)

- Application compromise

Table 1 summarizes the threat types.

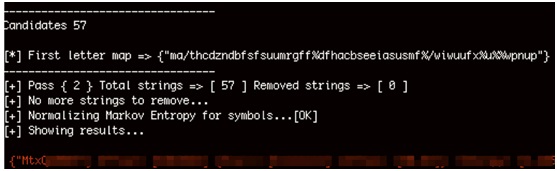

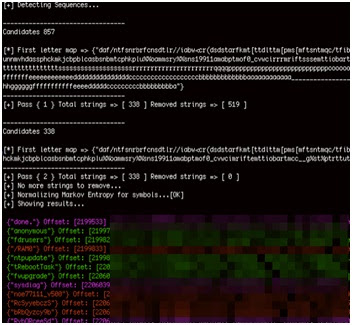

- Perform analysis and fill out the test checklist

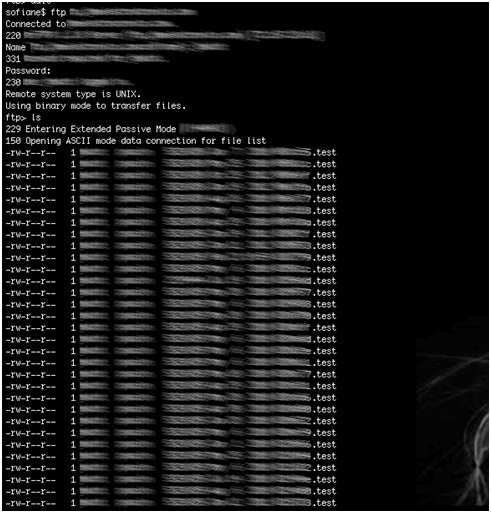

- Perform client and backend fuzzing

- If needed, perform deep analysis with reverse engineering

- Application purpose, type, category, and basic information

- Permissions

- Password protection

- Application intents, exported providers, broadcast services, etc.

- Native code

- Code obfuscation

- Presence of web-based components

- Methods of authentication used to communicate with the backend

- Correctness of operations with sessions, cookies, and tokens

- SSL/TLS connection configuration

- XML parser configuration

- Backend APIs

- Sensitive data handling

- HMI project data handling

- Secure storage

- Other issues

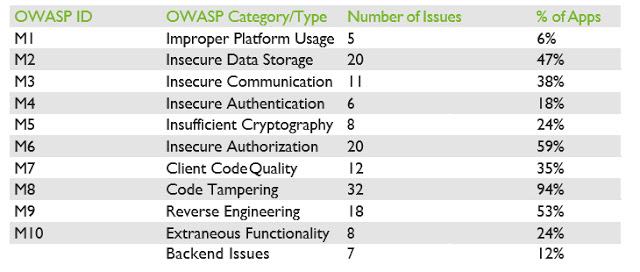

In our white paper, we provide an in-depth analysis of each category, along with examples of the most significant vulnerabilities we identified. Please download the white paper for a deeper analysis of each of the OWASP category findings.

Remediation And Best Practices

In addition to the well-known recommendations covering the OWASP Top 10 and OWASP Mobile Top 10 2016 risks, there are several actions that could be taken by developers of mobile SCADA clients to further protect their applications and systems.

In the following list, we gathered the most important items to consider when developing a mobile SCADA application:

- Always keep in mind that your application is a gateway to your ICS systems. This should influence all of your design decisions, including how you handle the inputs you will accept from the application and, more generally, anything that you will accept and send to your ICS system.

- Avoid all situations that could leave the SCADA operators in the dark or provide them with misleading information, from silent application crashes to full subverting of HMI projects.

- Follow best practices. Consider covering the OWASP Top 10, OWASP Mobile Top 10 2016, and the 24 Deadly Sins of Software Security.

- Do not forget to implement unit and functional tests for your application and the backend servers, to cover at a minimum the basic security features, such as authentication and authorization requirements.

- Enforce password/PIN validation to protect against threats U1-3. In addition, avoid storing any credentials on the device using unsafe mechanisms (such as in cleartext) and leverage robust and safe storing mechanisms already provided by the Android platform.

- Do not store any sensitive data on SD cards or similar partitions without ACLs at all costs Such storage mediums cannot protect your sensitive data.

- Provide secrecy and integrity for all HMI project data. This can be achieved by using authenticated encryption and storing the encryption credentials in the secure Android storage, or by deriving the key securely, via a key derivation function (KDF), from the application password.

- Encrypt all communication using strong protocols, such as TLS 1.2 with elliptic curves key exchange and signatures and AEAD encryption schemes. Follow best practices, and keep updating your application as best practices evolve. Attacks always get better, and so should your application.

- Catch and handle exceptions carefully. If an error cannot be recovered, ensure the application notifies the user and quits gracefully. When logging exceptions, ensure no sensitive information is leaked to log files.

- If you are using Web Components in the application, think about preventing client-side injections (e.g., encrypt all communications, validate user input, etc.).

- Limit the permissions your application requires to the strict minimum.

- Implement obfuscation and anti-tampering protections in your application.

Conclusions

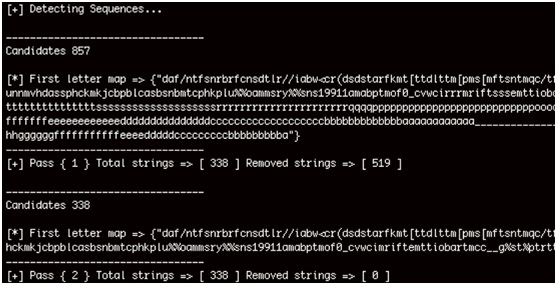

Two years have passed since our previous research, and things have continued to evolve. Unfortunately, they have not evolved with robust security in mind, and the landscape is less secure than ever before. In 2015 we found a total of 50 issues in the 20 applications we analyzed and in 2017 we found a staggering 147 issues in the 34 applications we selected. This represents an average increase of 1.6 vulnerabilities per application.

We therefore conclude that the growth of IoT in the era of “everything is connected” has not led to improved security for mobile SCADA applications. According to our results, more than 20% of the discovered issues allow attackers to directly misinform operators and/or directly/ indirectly influence the industrial process.

In 2015, we wrote:

SCADA and ICS come to the mobile world recently, but bring old approaches and weaknesses. Hopefully, due to the rapidly developing nature of mobile software, all these problems will soon be gone.

We now concede that we were too optimistic and acknowledge that our previous statement was wrong.

Over the past few years, the number of incidents in SCADA systems has increased and the systems become more interesting for attackers every year. Furthermore, widespread implementation of the IoT/IIoT connects more and more mobile devices to ICS networks.

Thus, the industry should start to pay attention to the security posture of its SCADA mobile applications, before it is too late.

For the complete analysis, please download our white paper here.

Acknowledgments