In my five years with IOActive, I’ve had the opportunity to visit some awesome places, often thousands of kilometers from home, so flying has obviously been an integral part of my routine. You might not think that’s such a big deal unless, like me, you’re afraid of flying. I don’t think I can completely get rid of that anxiety; after dozens of flights my hands still sweat during takeoff, but I’ve learned to live with it, even enjoying it sometimes…and spending some flights hacking stuff.

What helped a lot to reduce that fear was understanding how things work in planes and getting used to the noises, bumps, and turbulence. This blog post is about understanding a bit more about how things work aboard an aircraft, specifically the In-Flight Entertainment (IFE) systems developed by Panasonic Avionics.

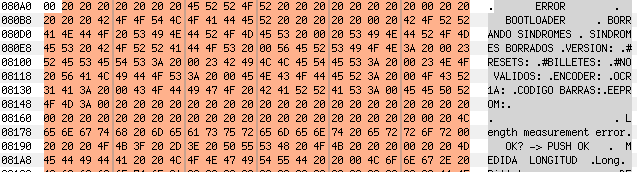

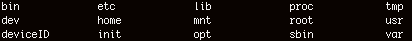

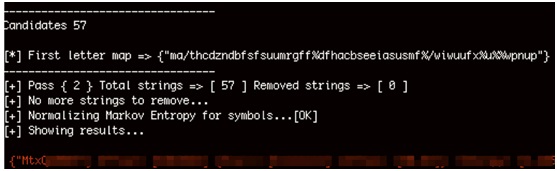

While I was flying from Warsaw to Dubai two years ago, I decided to try my luck and play with the IFE a little bit, touching this and that. Suddenly, after touching a specific point in one of the screen’s upper corners, the device returned this debug information:

These files were obviously being actively updated, so it was possible to gain access to the latest versions deployed on aircraft. Today the files are still there, although the directory listing is no longer available.

I was able to find firmware updates for the following airlines:

- Emirates

- Air France

- Aerolineas Argentinas

- United

- Virgin

- Singapore

- Finnair

- Iberia

- Etihad

- Qatar

- KLM

- American Airlines

- Scandinavian

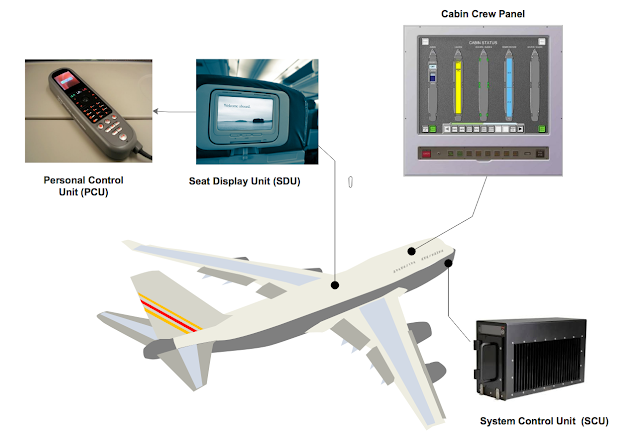

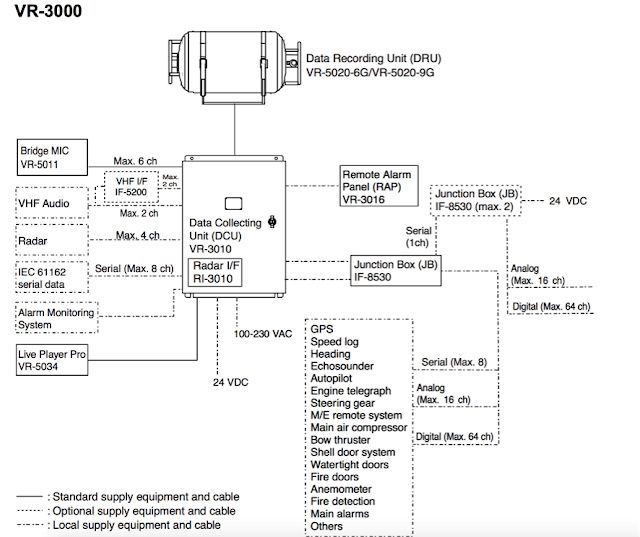

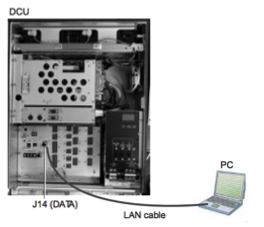

This needs to be a certified airborne server. Passengers can access real-time information about the flight, such as wind speed, latitude, longitude, altitude, and outside temperature. The SCU receives all of these values via the avionics bus (usually ARINC 429) and makes them available for the Seat Display Unit (SDU) through an Ethernet connection.
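To make the data path a little more concrete, here is a minimal sketch of how a single 32-bit ARINC 429 word can be split into its standard fields (label, SDI, data, SSM, and odd parity). The function name and the returned dictionary are hypothetical and chosen only for illustration; nothing here is taken from the Panasonic firmware, and how the SCU actually decodes or forwards these values is not something the analyzed files reveal.

```python
def decode_arinc429(word: int) -> dict:
    """Split a 32-bit ARINC 429 word into its standard fields.

    Bit 1 is treated as the least significant bit of `word`.
    Hypothetical helper for illustration; not code from any IFE.
    """
    if not 0 <= word < 2**32:
        raise ValueError("an ARINC 429 word is exactly 32 bits")

    raw_label = word & 0xFF                  # bits 1-8
    # Bit 1 is the most significant bit of the label, so reverse the
    # eight bits before printing it in the usual octal notation.
    label = int(f"{raw_label:08b}"[::-1], 2)

    return {
        "label_octal": f"{label:03o}",
        "sdi": (word >> 8) & 0x3,            # bits 9-10
        "data": (word >> 10) & 0x7FFFF,      # bits 11-29 (19 bits)
        "ssm": (word >> 29) & 0x3,           # bits 30-31
        # Bit 32 is chosen so the whole word has an odd number of 1s.
        "parity_ok": bin(word).count("1") % 2 == 1,
    }
```

Interpreting the 19-bit data field (BNR, BCD, or discrete bits, plus scaling and units) depends on the label, so a real consumer would also need a per-label table that this sketch leaves out.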

Seat Display Unit (SDU)

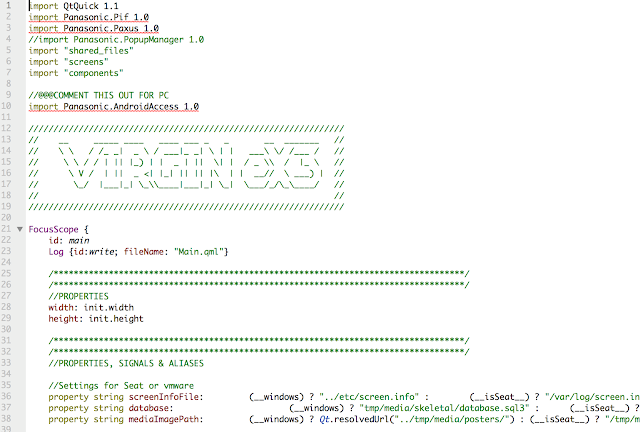

This LRU (Line Replaceable Unit) allows the passenger to use the passive and active functions implemented in the IFE, such as watching movies, buying items, reading articles, or connecting to the internet. It’s basically an embedded device with a touchscreen; the newest are based on Android, while legacy devices mainly use Linux.

This handset is optional. The PCU can control the SDU, and as I’ll cover later, it can also act as a credit card reader.

Cabin Crew Panel

Flight attendants and other crew members use these units to control features of the aircraft such as lights, actuators (including beds), announcements, onboard shopping, or the PA system, in order to attend to passenger needs. The Cabin Management System (CMS) is normally integrated with the IFE. Panasonic Avionics integrates the aircraft’s CMS and inflight entertainment (IFE) systems with the Global Communications Services, featuring shared system functionality for simple operation by the cabin crew (see https://www.panasonic.aero/inflight-systems/cabin-systems/). The IFE CrewApp is accessible from the Cabin Crew Panel.

Panasonic IFE

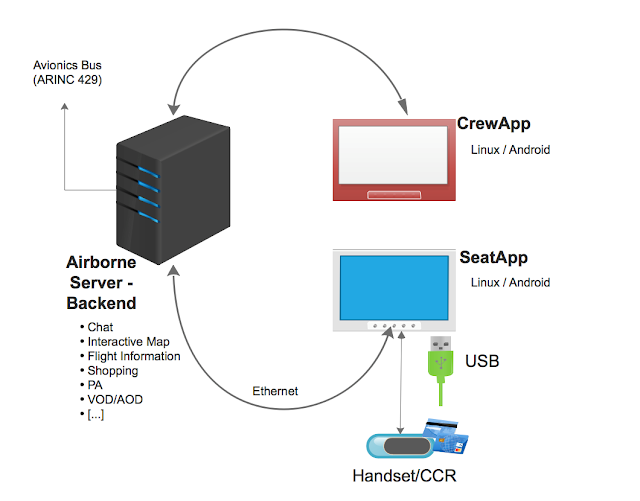

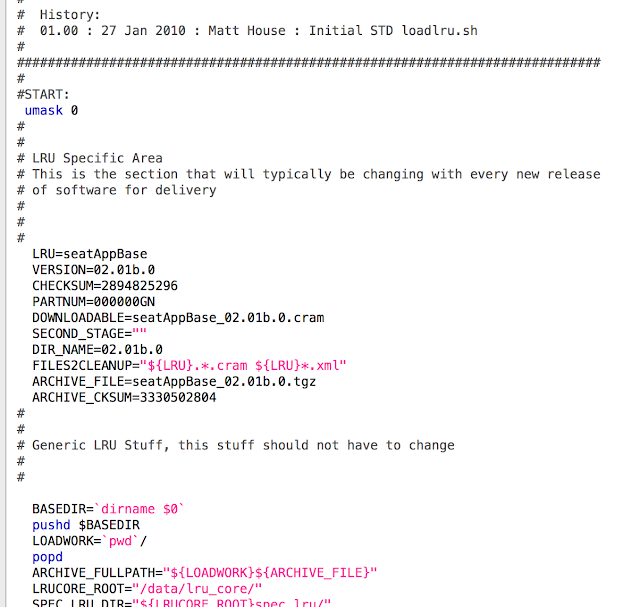

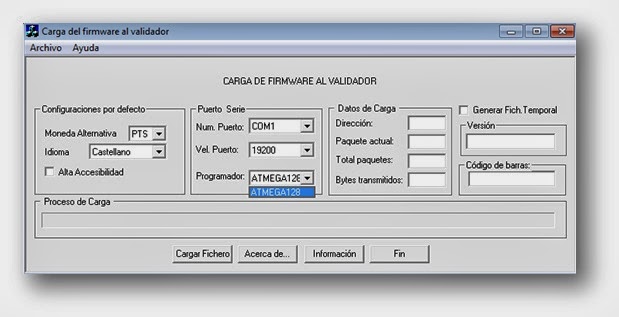

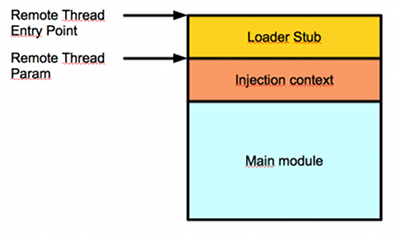

There are multiple Panasonic IFEs: the “legacy” 3000/3000i and the newest XSeries eFX, eX2 and eX3 (Android-based). Hardware may vary among them, but they have a similar architecture and some common features.

You can find more details on these IFEs from the Panasonic Avionics website at https://www.panasonic.aero.

These systems support a large range of personalization features, which allow airlines to deploy tailored IFEs while the code base remains pretty much the same.

My analysis of the firmware files did not clearly reveal the approach used to perform the data loading on the ground. Usually IFEs perform content updates via Wi-Fi ad-hoc networks or high-speed mobile data links once the aircraft touches down. Panasonic Avionics IFEs are mostly updated over “sneakernet.” While in flight, satellite communications or custom mobile data links are also possible. However, in most cases IFEs operate offline, with their contents preloaded. Usually the IFE doesn’t even check credit cards in real time.

The Panasonic Avionics IFE follows a client-server architecture with three main components:

- CrewApp

- SeatApp

- Backend

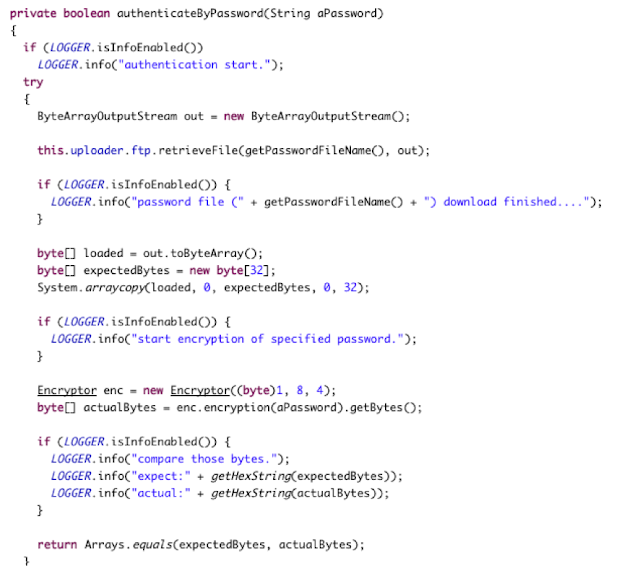

I found multiple versions of the CrewApp and SeatApp on the aforementioned website. When searching in Google for certain keywords, I also found the source code of the backend to be publicly exposed, but on a different .aero website. Although it contains the custom features and data for specific airlines, it still uses the Panasonic backend codebase.

It is impossible to cover all of the variations in a single blog post, as each airline (and other companies) adapts and extends the Panasonic framework to its own needs, so we will focus on specific features.

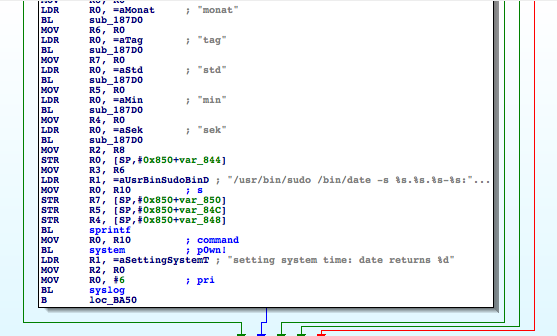

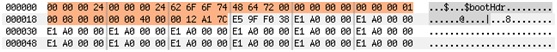

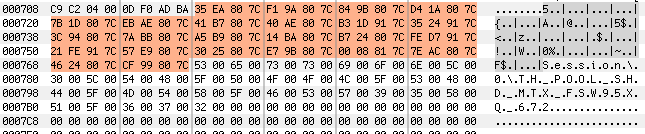

The firmware files (in this case software that runs on a device the user does not maintain) that I could analyze did not contain the complete system, just the files that should be updated. That’s a pity, because otherwise we could acquire more details on the low-level workings of those devices. However, it is possible to get some interesting details by going through the available files.

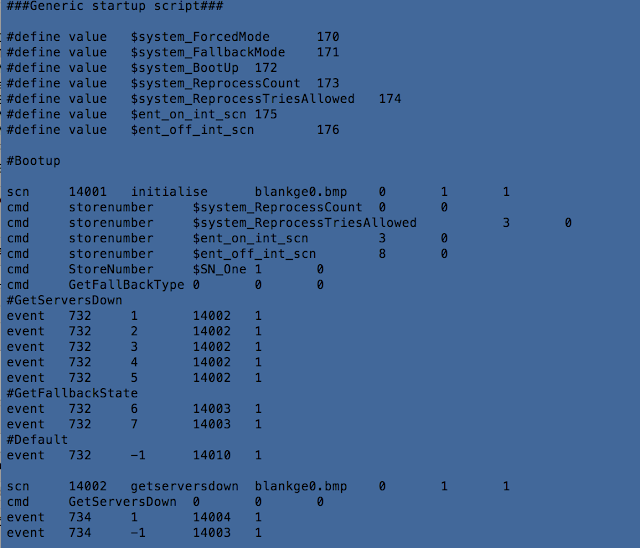

Scripts

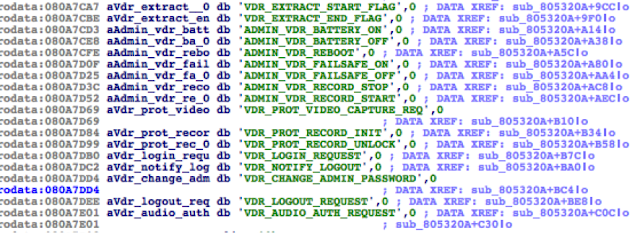

Panasonic Avionics has implemented its own declarative script language, used to extend and interface with the GUI and features of the main application. It supports dozens of commands that cover the entire range of functionalities.

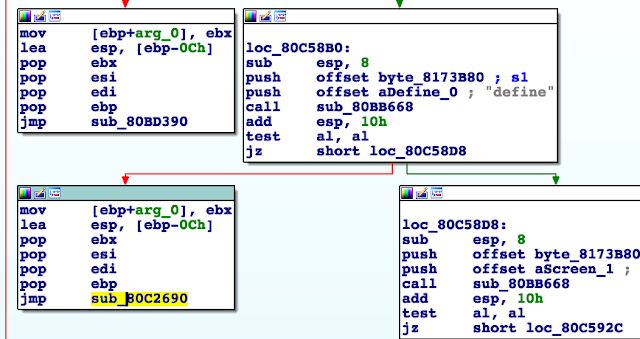

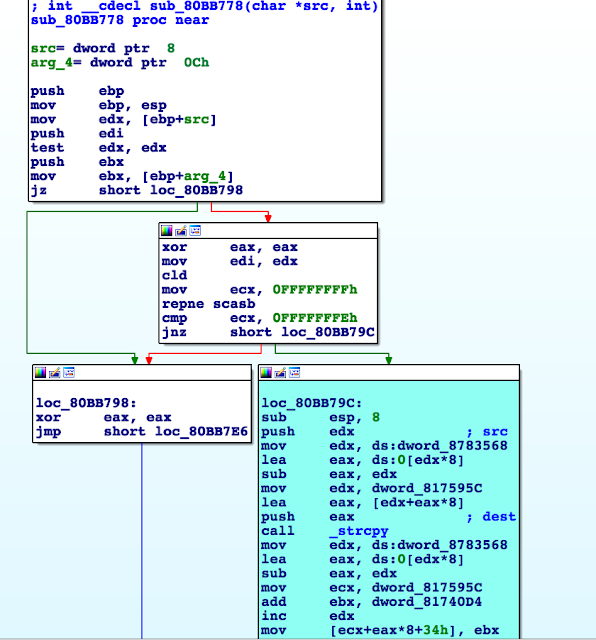

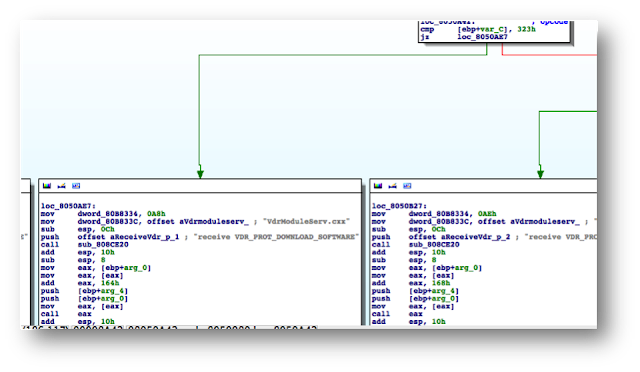

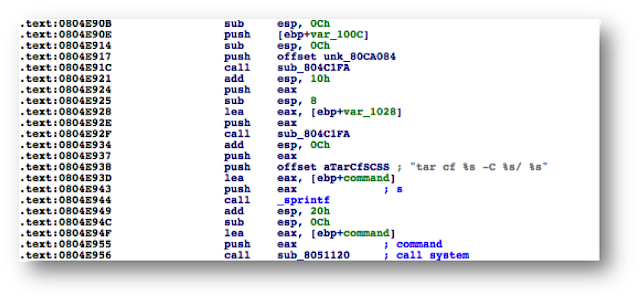

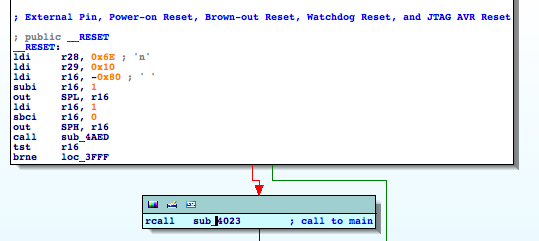

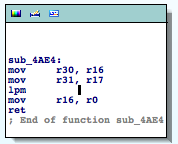

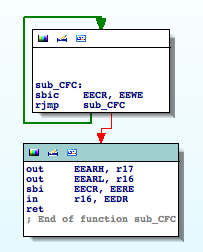

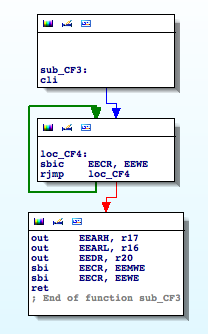

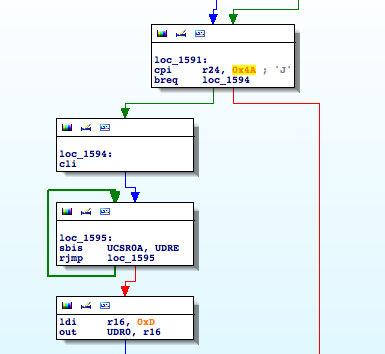

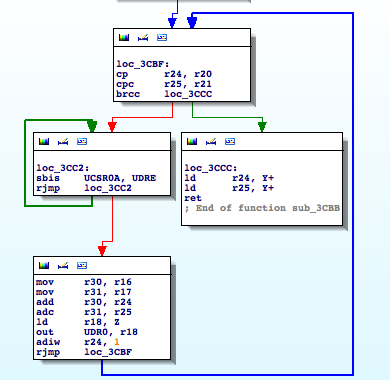

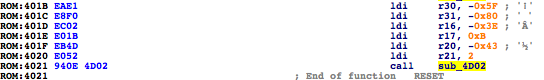

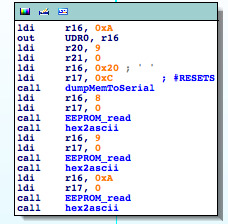

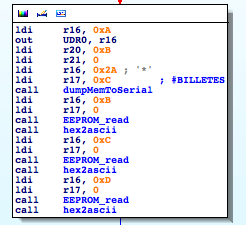

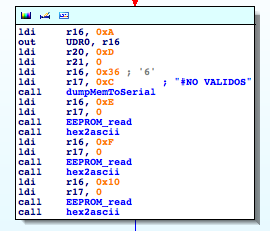

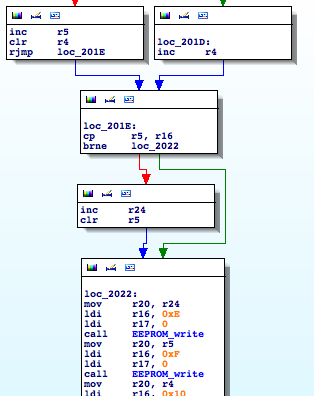

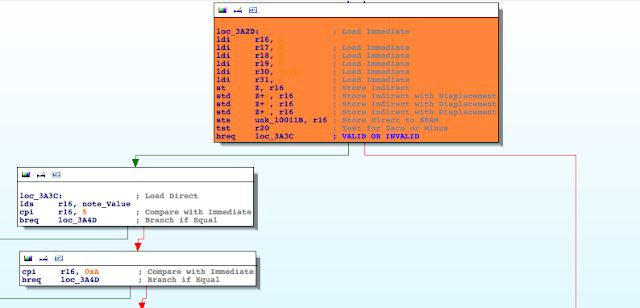

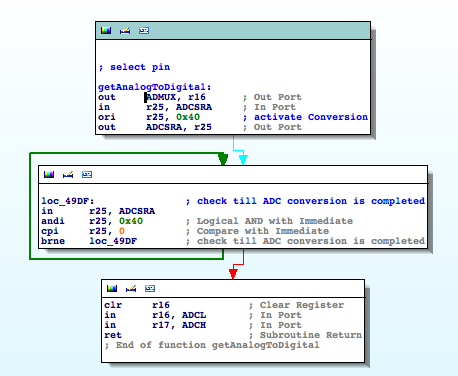

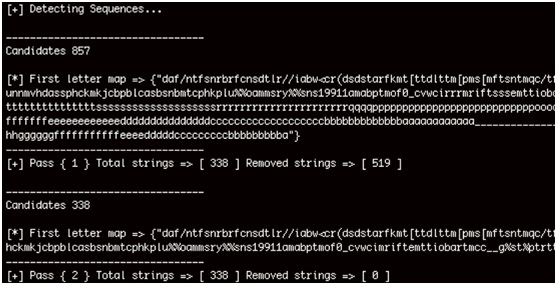

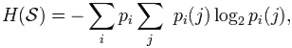

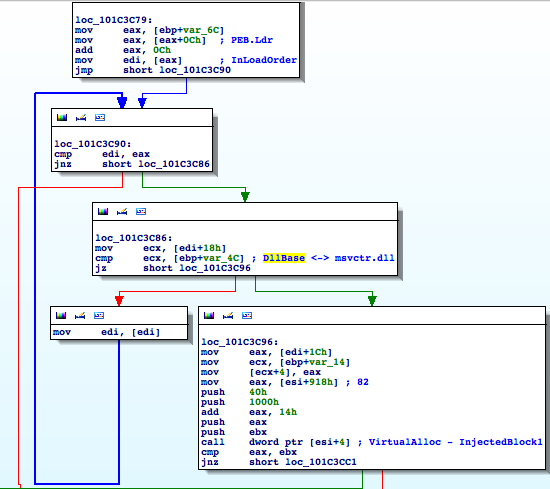

Reverse-engineering the main binary (airsurf), we can figure out how this script format’s parser works. In order to illustrate this approach, let’s look at #define.

The script is processed line-by-line, and when the parser detects a #define statement, it tries to parse the statement at sub_80C2690.

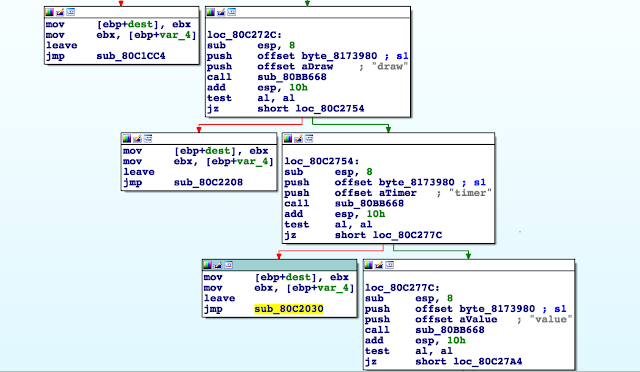

In this function there are five types that can be defined: flash, text, draw, timer, and value.

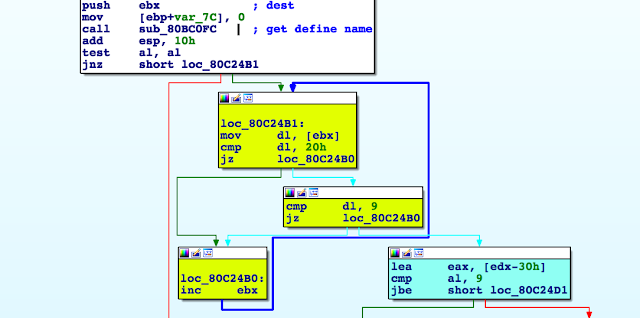

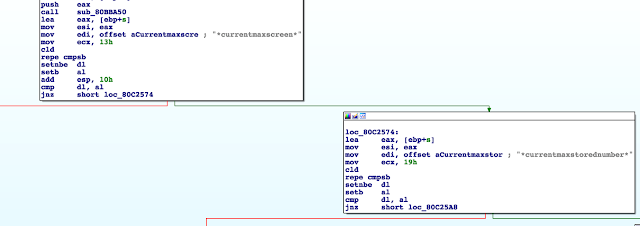

The first line of the script is a #define value, so let’s look at how it’s handled.

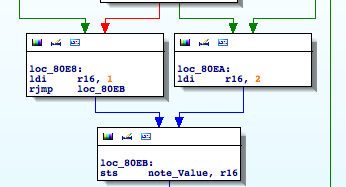

First, the line is parsed and the name of the define extracted. Then the parser checks to see whether the value that comes after (the green basic block below) is a number (blue basic block).
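The flow described above (read the script line by line, recognize #define, extract the type and name, then test whether the value is a number) can be sketched roughly as follows. This is only an illustration of the logic, not a reconstruction of airsurf: the `#define <type> <name> <value>` token order, the function names, and the decision to keep non-numeric values as strings are all assumptions made for the example.

```python
# The five define types named in the analysis of sub_80C2690.
DEFINE_TYPES = {"flash", "text", "draw", "timer", "value"}


def parse_define(line: str):
    """Parse one hypothetical '#define <type> <name> <value>' line.

    Returns (type, name, value), or None if the line is not a #define.
    The token layout is an assumption, not the real script grammar.
    """
    tokens = line.split()
    if len(tokens) < 3 or tokens[0] != "#define":
        return None

    kind, name = tokens[1], tokens[2]
    if kind not in DEFINE_TYPES:
        raise ValueError(f"unknown define type: {kind}")

    raw = " ".join(tokens[3:])
    value = raw
    if kind == "value":
        # Mirror the "is it a number?" check: numeric values become
        # integers, anything else is kept as the raw string.
        try:
            value = int(raw)
        except ValueError:
            value = raw
    return kind, name, value


def parse_script(text: str) -> dict:
    """Process a script line by line and collect its defines by name."""
    defines = {}
    for line in text.splitlines():
        parsed = parse_define(line.strip())
        if parsed is not None:
            kind, name, value = parsed
            defines[name] = (kind, value)
    return defines
```

For example, under these assumptions `parse_define("#define value MAX_ITEMS 42")` yields a `value` define named `MAX_ITEMS` holding the integer 42, while a line that does not start with #define is simply skipped.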

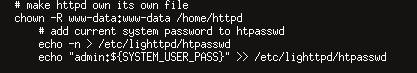

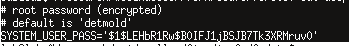

We can also see regular files here, such as shell scripts, configuration files (containing hardcoded credentials), databases, resources, and libraries.

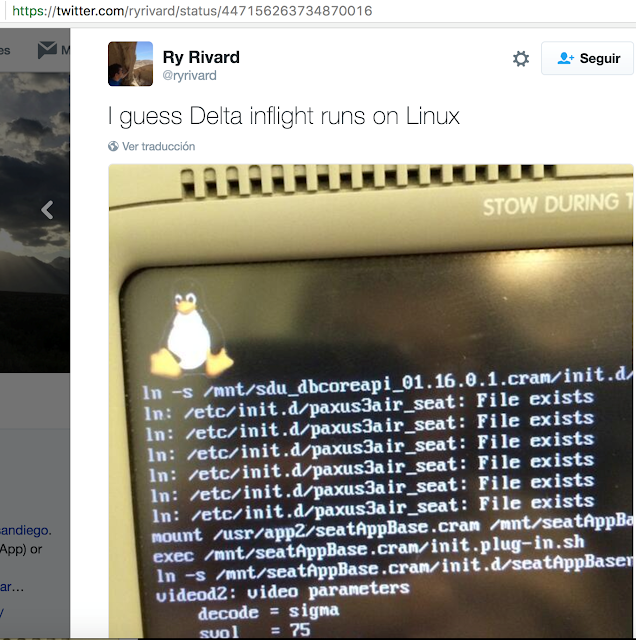

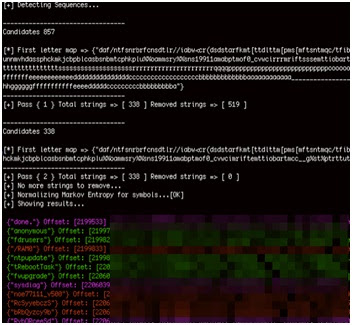

I found the following information in a tweet from someone who captured the Panasonic IFE rebooting. The SeatAppBase files are the same files we are dealing with:

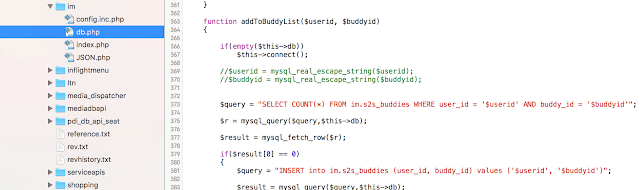

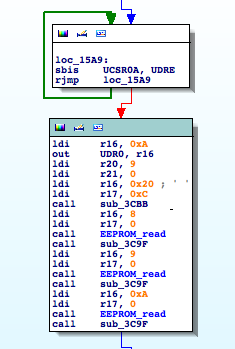

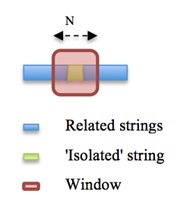

The image above belongs to the “seat-2-seat” chat functionality, where passengers can send messages to others. It shouldn’t take long for you to figure out there is something wrong, and it’s not the only problem.

The following videos show real vulnerability tests performed in such a way that they presented no risk.

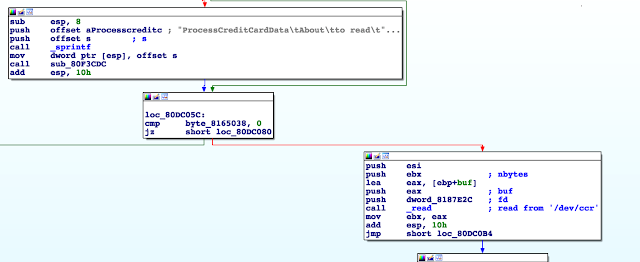

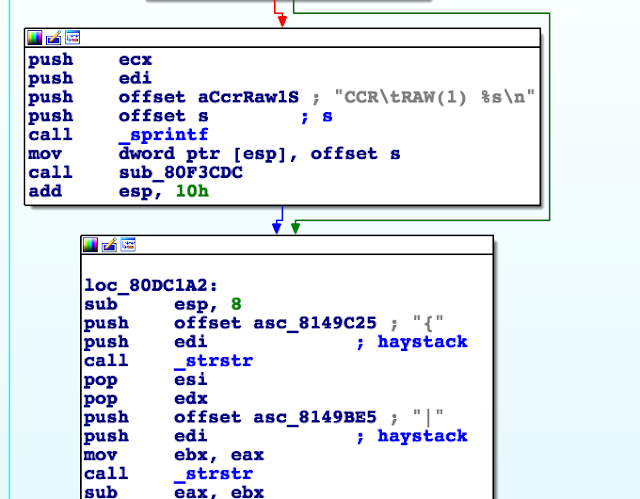

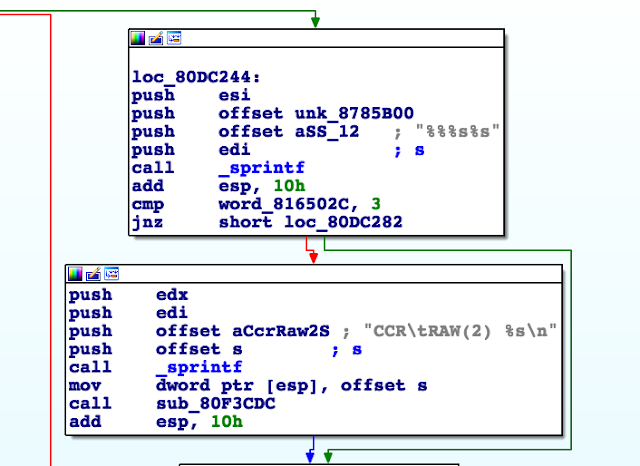

1. Bypass credit card check

2. Arbitrary file access (so /dev/random)

3. SQL injection
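For readers less familiar with the third item, the following is a generic, self-contained sketch of what SQL injection looks like and how parameter binding avoids it. It uses an in-memory SQLite database with a made-up `movies` table; the schema, function names, and payload are hypothetical and are not taken from the IFE backend or from the tests shown in the videos.

```python
import sqlite3


def demo_db() -> sqlite3.Connection:
    """Build a throwaway in-memory database with a made-up schema."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE movies (title TEXT)")
    conn.executemany(
        "INSERT INTO movies VALUES (?)",
        [("Airplane!",), ("Top Gun",)],
    )
    return conn


def find_movies_unsafe(conn: sqlite3.Connection, title: str) -> list:
    # Vulnerable: user input is concatenated into the SQL text, so a
    # quote character in `title` changes the structure of the query.
    query = "SELECT title FROM movies WHERE title = '" + title + "'"
    return [row[0] for row in conn.execute(query)]


def find_movies_safe(conn: sqlite3.Connection, title: str) -> list:
    # Safer: the value is bound as a parameter and never parsed as SQL.
    query = "SELECT title FROM movies WHERE title = ?"
    return [row[0] for row in conn.execute(query, (title,))]
```

With the input `x' OR '1'='1`, the unsafe version returns every row in the table, while the parameterized version treats the whole string as a literal title and returns nothing.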

So how far can an attacker go by chaining and exploiting vulnerabilities in an In-Flight Entertainment system? There’s no generic answer to this, but let’s try to dissect some potential general case scenarios by introducing some additional context (nonspecific to a particular company or system unless stated).

Relying exclusively on the DO-178B standard that defines Software Considerations in Airborne Systems and Equipment Certification, the IFE would technically lie within the D and E levels. Panasonic Avionics’ IFE in particular is certified at Level E. This basically means that even if the entire system fails, the impact would be something between no effect at all and passenger discomfort.

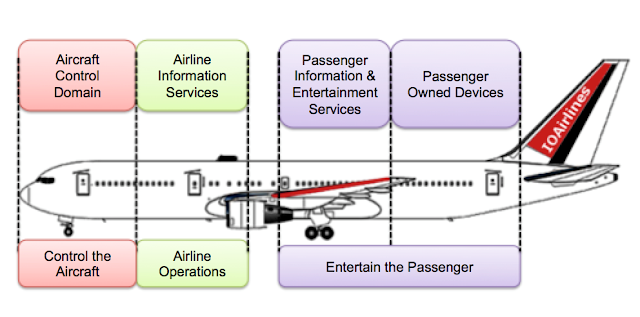

Also, I should mention that an aircraft’s data networks are divided into four domains, depending on the kind of data they process: passenger entertainment, passenger-owned devices, airline information services, and finally aircraft control.

Physical control systems should be located in the Aircraft Control domain, which should be physically isolated from the passenger domains; however, this doesn’t always happen. Some aircraft use optical data diodes, while others rely upon electronic gateway modules. This means that as long as there is a physical path that connects both domains, we can’t disregard the potential for attack.

In-flight entertainment systems may be an attack vector. In some scenarios such an attack would be physically impossible due to the isolation of these systems, while in others an attack remains theoretically feasible due to the physical connectivity. IOActive has successfully compromised other electronic gateway modules in non-airborne vehicles. The ability to cross the “red line” between the passenger entertainment and passenger-owned devices domains and the aircraft control domain relies heavily on the specific devices, software and configuration deployed on the target aircraft.

In 2014 we presented a series of vulnerabilities in Satellite Communication (SATCOM) devices, including airborne SATCOM terminals. A primary concern is the sharing of these SATCOM devices between different data domains, which could allow an attacker to use this equipment to pivot from a compromised IFE to certain avionics.

On the IT side, compromising the IFE means an attacker can control how passengers are informed aboard the plane. For example, an attacker might spoof flight information values such as altitude or speed, and show a bogus route on the interactive map. An attacker might compromise the CrewApp unit, controlling the PA, lighting, or actuators for upper classes. If all of these attacks are chained, a malicious actor may create a baffling and disconcerting situation for passengers.

The capture of personal information, including credit card details, while not in scope of this research, would also be technically possible if backends that sometimes provide access to specific airlines’ frequent-flyer/VIP membership data were not configured properly.

On the bright side, while not in scope of the Panasonic Avionics IFE systems research, I believe in-flight Wi-Fi itself is not a problem because it can be implemented securely without safety and security risks.

What all of this means is that after initial analysis we do not believe these systems can resist solid attacks from skilled malicious actors. Airlines must be vigilant when it comes to their In-Flight Entertainment Systems, ensuring that these and other systems are properly segregated and each aircraft’s security posture is carefully analyzed case by case. The responsibility for security does not solely rest with an IFE manufacturer, an aircraft manufacturer, or the fleet operator. Each plays an important role in assuring a secure environment.

Responsible disclosure

We reported these findings to Panasonic Avionics in March 2015. We believe that has been enough time to produce and deploy patches, at least for the most prominent vulnerabilities. That said, we believe that in such a heterogeneous environment, with dozens of airlines involved and hundreds of versions of the software available, it’s difficult to say whether these issues have been completely resolved.

As always, we would love to hear from other security types who might have a differing opinion. All of our positions are subject to change through exposure to compelling arguments and/or data.