Tag: mobile security

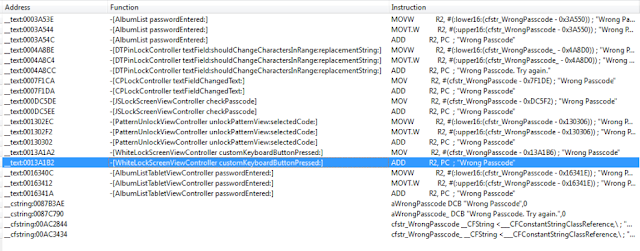

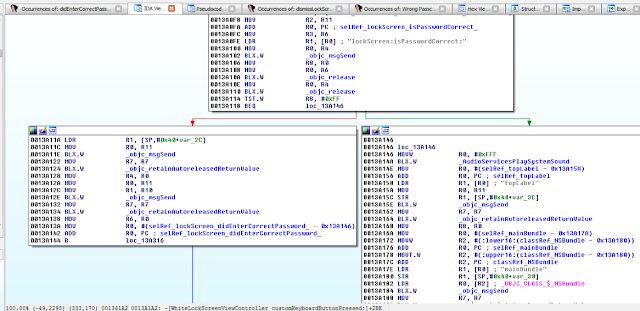

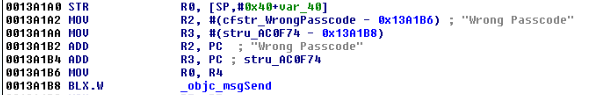

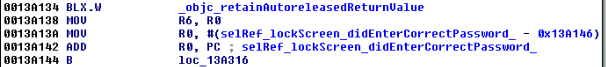

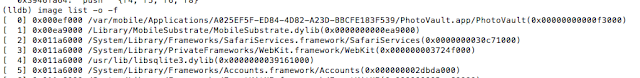

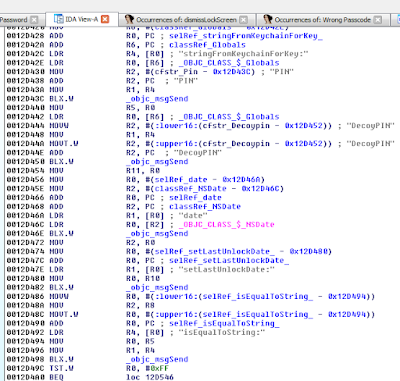

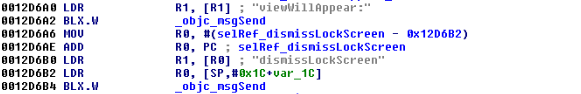

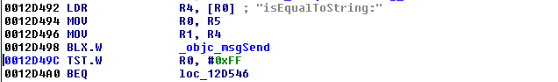

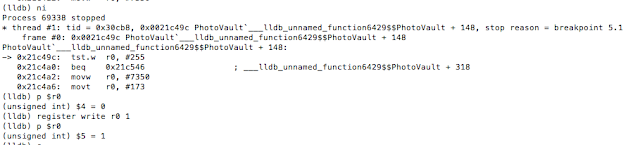

Breaking into and Reverse Engineering iOS Photo Vaults

Every so often we hear stories of people losing their mobile phones, often with sensitive photos on them. Additionally, people may lend their phones to friends only to have those friends start going through their photos. For whatever reason, a lot of people store risqué pictures on their devices. Why they feel the need to do that is left for another discussion. This behavior has fueled a desire to protect photos on mobile devices.

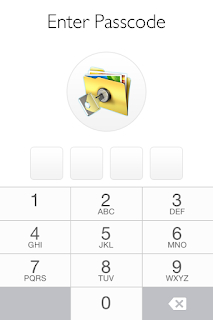

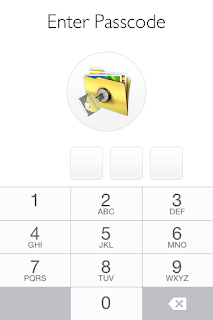

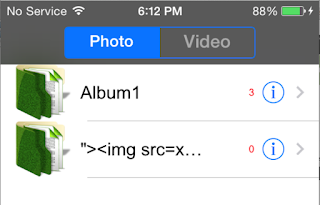

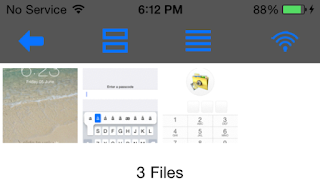

Photo vault applications are one popular option. These applications claim to protect your photos, videos, and other media. In general, they create albums within their application containers and limit access with a passcode, a pattern, or, on newer devices, Touch ID.

- Jailbroken iPhone 4S (iOS 7.1.2)

- BurpSuite Pro

- Hex editor of choice

- Cycript

- Private Photo Vault

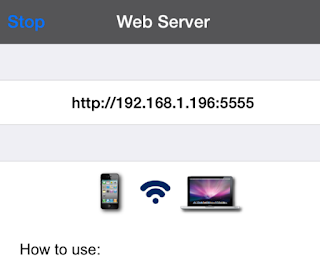

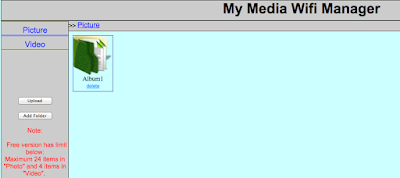

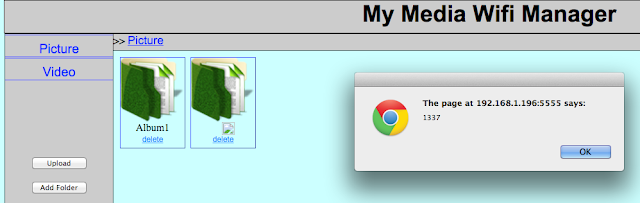

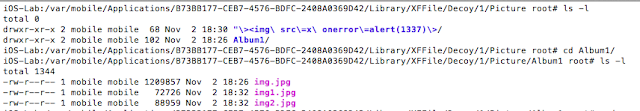

- Photo+Video Vault Keep Safe (My Media)

- KeepSafe

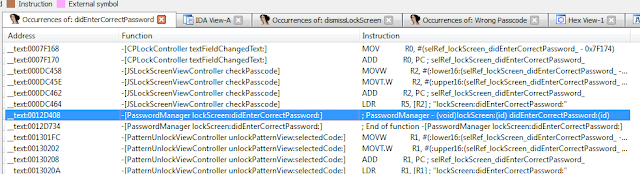

- IDA Pro/Hopper

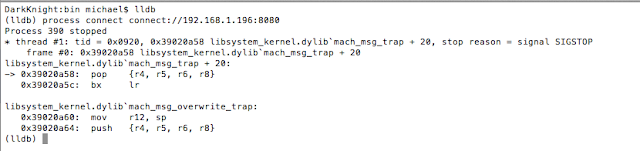

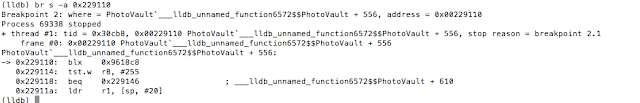

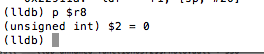

- LLDB

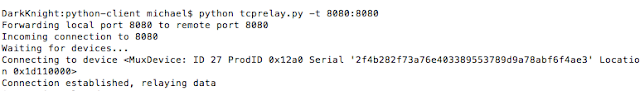

- Usbmuxd

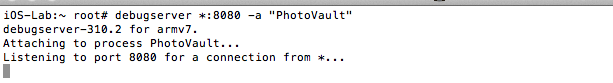

- Debugserver

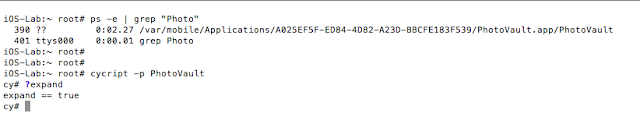

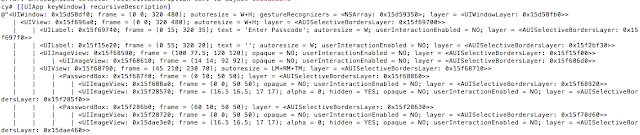

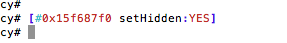

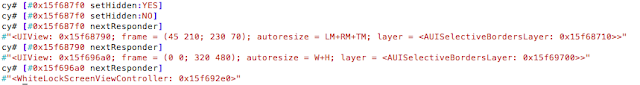

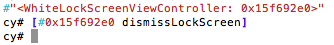

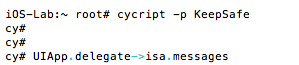

- Cycript to bypass the lock screens

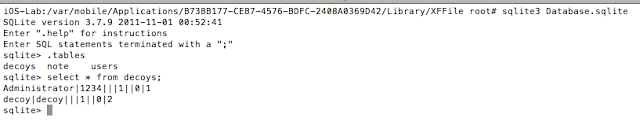

- sqlite to extract sensitive information from the application databases

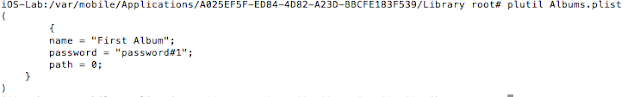

- plutil to read plist files and access sensitive information

- BurpSuite Pro to intercept traffic from the application

- IDA Pro to reverse engineer the binary and achieve results similar to Cycript

- No jailbreak detection routines

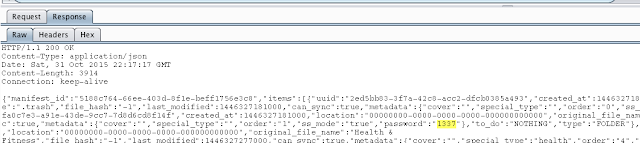

- Insecure storage of credentials

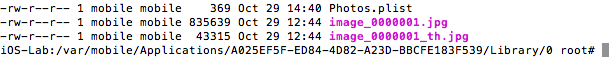

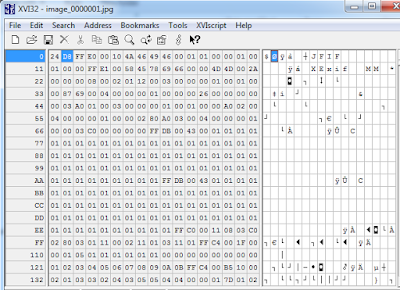

- Photos stored unencrypted

- Lock screens are easy to bypass

- Common web application vulnerabilities
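The insecure credential storage finding comes from reading plist files and SQLite databases in the app container, as the plutil and sqlite steps above describe. Below is a minimal Python sketch of that search. It assumes you have already copied the container off the device. The substring hints are hypothetical heuristics and are not key names taken from any of the tested apps, so expect some false positives.

```python
import plistlib
import sqlite3

# Hypothetical heuristics: real apps use their own key/column names.
SENSITIVE_HINTS = ("pass", "pin", "secret")


def looks_sensitive(name):
    return any(hint in str(name).lower() for hint in SENSITIVE_HINTS)


def find_sensitive_plist_keys(plist_path):
    """Return (key_path, value) pairs from a plist whose key names look sensitive."""
    with open(plist_path, "rb") as fh:
        root = plistlib.load(fh)  # auto-detects XML and binary plists
    hits = []

    def walk(node, prefix):
        if isinstance(node, dict):
            for key, value in node.items():
                path = f"{prefix}/{key}"
                if looks_sensitive(key):
                    hits.append((path, value))
                walk(value, path)
        elif isinstance(node, list):
            for i, item in enumerate(node):
                walk(item, f"{prefix}[{i}]")

    walk(root, "")
    return hits


def find_sensitive_sqlite_columns(db_path):
    """Return {table: [column, ...]} for columns whose names look sensitive."""
    conn = sqlite3.connect(db_path)
    try:
        tables = [row[0] for row in conn.execute(
            "SELECT name FROM sqlite_master WHERE type='table'")]
        result = {}
        for table in tables:
            # PRAGMA table_info rows are (cid, name, type, notnull, default, pk)
            cols = [row[1] for row in conn.execute(f'PRAGMA table_info("{table}")')]
            flagged = [c for c in cols if looks_sensitive(c)]
            if flagged:
                result[table] = flagged
        return result
    finally:
        conn.close()
```

After the columns are flagged, a plain `SELECT` on them shows whether the values are stored in cleartext.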

- http://www.zdziarski.com/blog/?p=3951

- Hacking and Securing iOS Applications: Stealing Data, Hijacking Software, and How to Prevent It

- http://www.cycript.org/

- http://cgit.sukimashita.com/usbmuxd.git/snapshot/usbmuxd-1.0.8.tar.gz

- http://resources.infosecinstitute.com/ios-application-security-part-42-lldb-usage-continued/

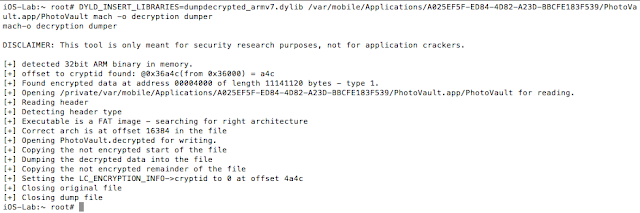

- https://github.com/stefanesser/dumpdecrypted

- https://developer.apple.com/library/ios/technotes/tn2239/_index.html#//apple_ref/doc/uid/DTS40010638-CH1-SUBSECTION34

- https://developer.apple.com/library/ios/documentation/UIKit/Reference/UIResponder_Class/#//apple_ref/occ/instm/UIResponder/nextResponder

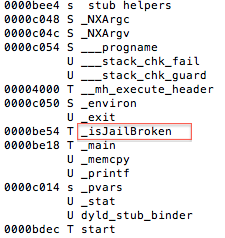

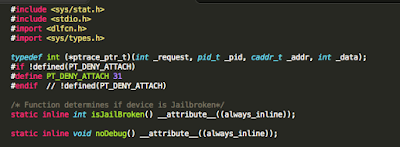

The iOS Get out of Jail Free Card

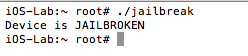

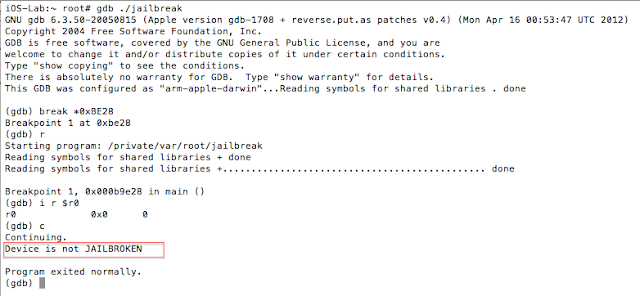

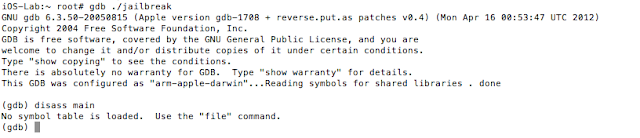

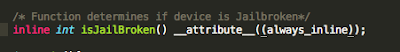

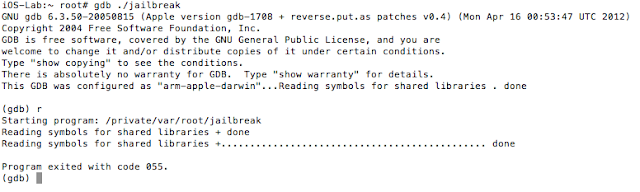

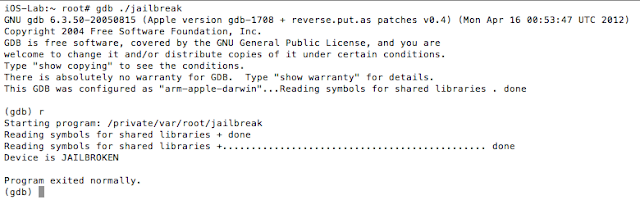

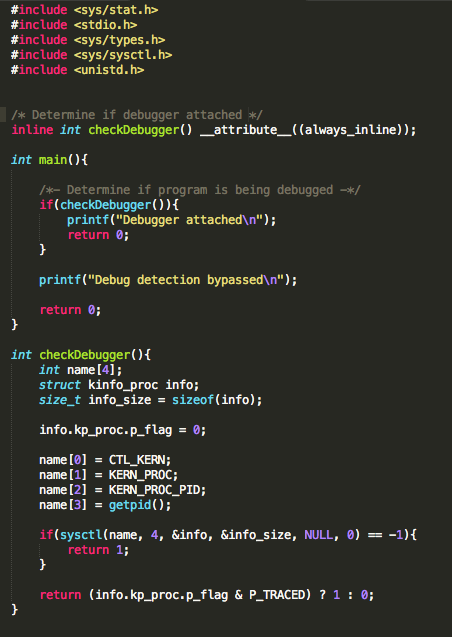

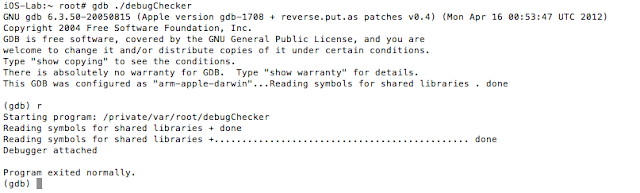

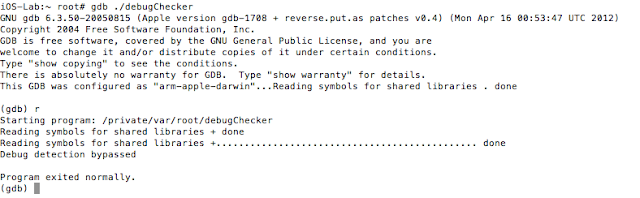

iOS mobile application assessments usually occur on jailbroken devices, and application developers often implement measures that seek to thwart this activity. The tester often has to come up with clever ways of bypassing detection and breaking free from this restriction, a.k.a. “getting out of jail”. This blog post will walk you through the steps required to identify and bypass frequently recommended detection routines. It is intended for readers who are just getting started in reverse engineering mobile platforms. It is not aimed at advanced users.

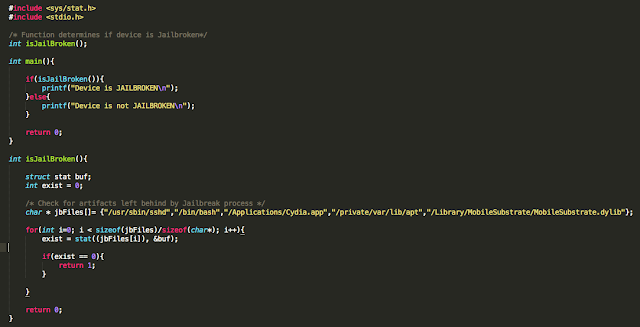

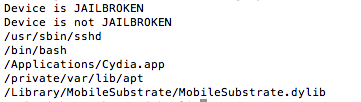

- Known file paths

- Services listening on ports that are closed by default, such as port 22 (OpenSSH), which is often used to connect to and administer the device

- Symbolic links to various directories (e.g., /Applications)

- Integrity of the sandbox (i.e., a call to fork() should fail, returning a negative value, in a properly functioning sandbox)
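The four checks above are easier to spot in a disassembly once you know what they look like. Below is a rough Python sketch of each one. Real apps implement these checks in Objective-C or C with the same POSIX calls. The path list contains commonly cited jailbreak artifacts and is not taken from a specific app. The /Applications symlink check reflects how older jailbreaks relocated that directory.

```python
import os
import socket

# Commonly cited examples only; real detection lists are longer and app-specific.
KNOWN_JAILBREAK_PATHS = (
    "/Applications/Cydia.app",
    "/Library/MobileSubstrate/MobileSubstrate.dylib",
    "/usr/sbin/sshd",
    "/etc/apt",
)


def existing_paths(paths=KNOWN_JAILBREAK_PATHS):
    """Known file paths: return the listed paths that exist on the device."""
    return [p for p in paths if os.path.exists(p)]


def symlinked_dirs(dirs=("/Applications",)):
    """Symbolic links: return the listed directories that are actually symlinks."""
    return [d for d in dirs if os.path.islink(d)]


def port_open(port=22, host="127.0.0.1", timeout=0.5):
    """Open ports: True if something accepts TCP connections on host:port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
        s.settimeout(timeout)
        return s.connect_ex((host, port)) == 0


def fork_allowed():
    """Sandbox integrity: True if fork() succeeds, which a sandboxed app should not allow."""
    try:
        pid = os.fork()
    except OSError:
        return False  # fork failed (C code sees -1): the expected sandboxed result
    if pid == 0:
        os._exit(0)  # child: exit immediately
    os.waitpid(pid, 0)  # parent: reap the child
    return True
```

Each check reduces to a boolean that the app combines and acts on. That final decision is usually the easiest place to bypass detection.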

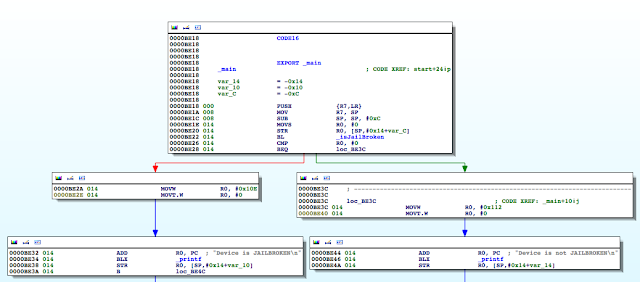

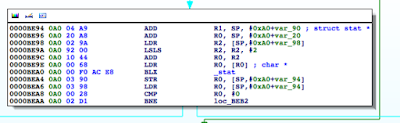

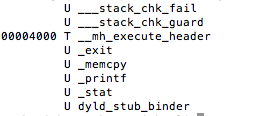

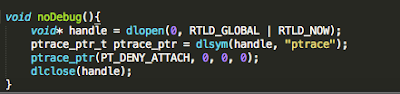

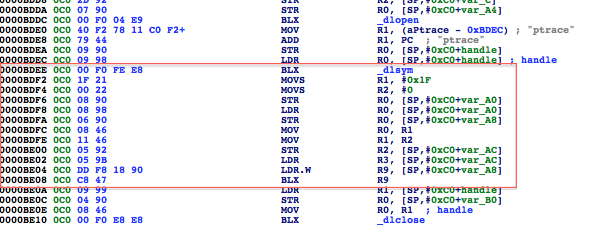

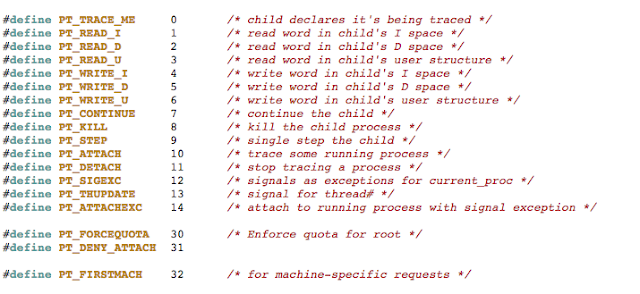

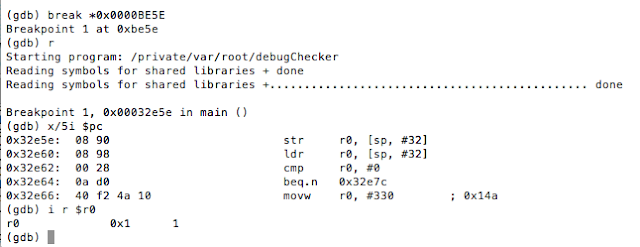

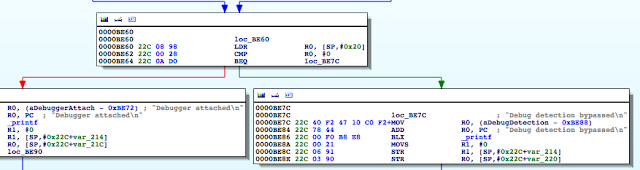

In addition to the above, developers will often try to prevent us from debugging the process by calling ptrace() with the PT_DENY_ATTACH request. Once a process has made that call, a debugger can no longer attach to it through ptrace(), the system call that debuggers rely on to debug iOS applications. The point is, there are a multitude of ways developers try to thwart our efforts as pen testers. That discussion, however, is beyond the scope of this post. You are encouraged to check out the resources included in the references section. Of the resources listed, The Mobile Application Hacker's Handbook does a great job covering the topic.

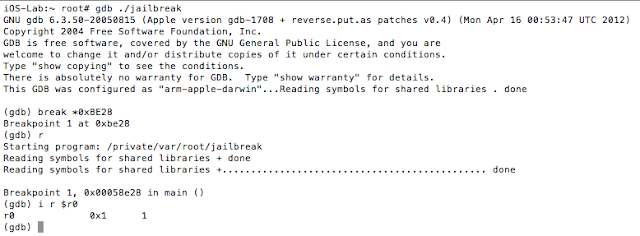

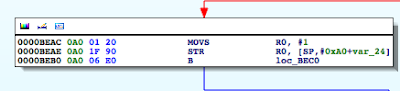

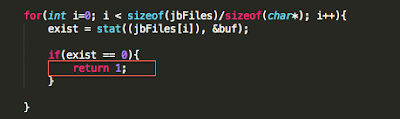

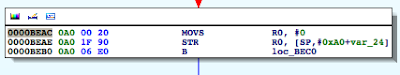

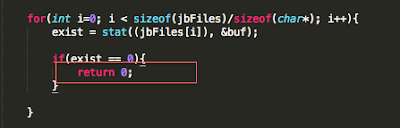

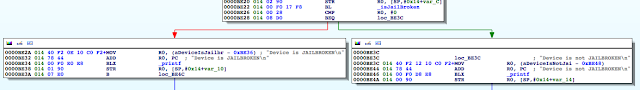

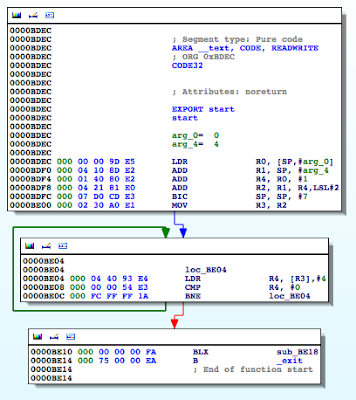

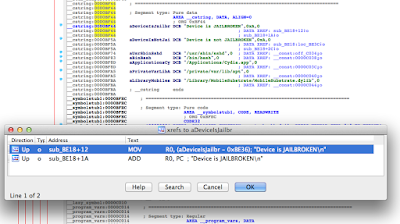

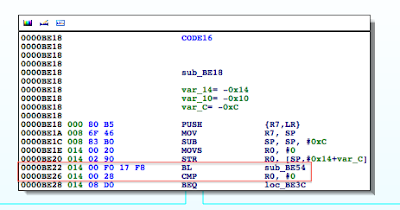

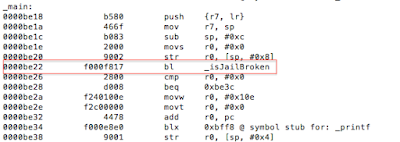

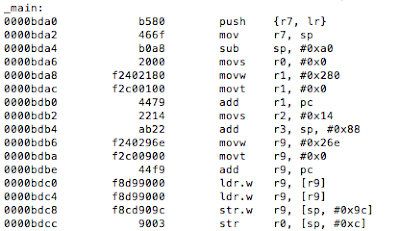

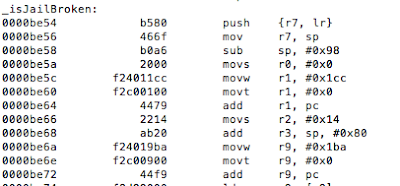

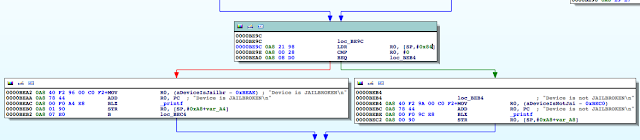

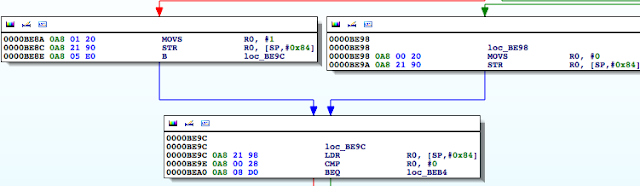

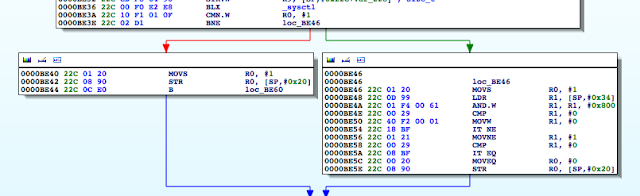

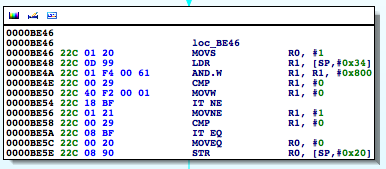

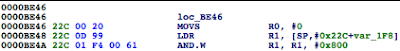

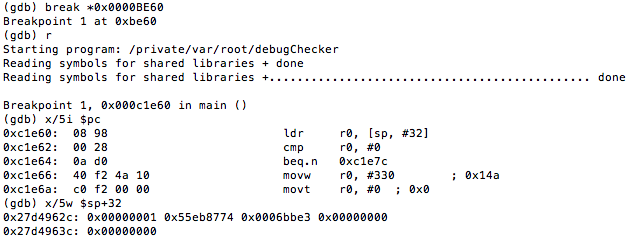

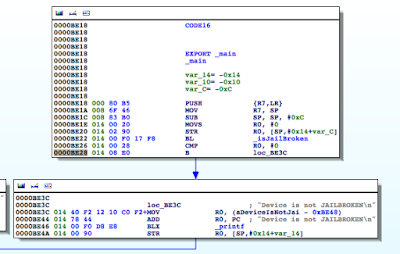

Figure 5: Examining the contents of the R0 register

Figure 6: isJailBroken function analysis

Figure 7: Determining where R0 register is set
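The figures trace where isJailBroken sets R0, which holds a function's return value on 32-bit ARM. Besides overwriting R0 in LLDB at runtime, a common static alternative is to make the function return 0 (NO) immediately. Below is a minimal Python sketch of that patch. It makes several assumptions: the function is compiled as Thumb code (typical for armv7 devices such as the iPhone 4S), the binary has already been decrypted, and `func_offset` is a file offset you worked out in IDA Pro/Hopper. That offset is not a virtual address. A patched binary also has to be re-signed or run where code signing is relaxed.

```python
# Thumb encodings, stored little-endian: MOVS R0, #0 = 0x2000 ; BX LR = 0x4770
RETURN_ZERO_THUMB = bytes.fromhex("00207047")


def patch_return_zero(binary: bytes, func_offset: int) -> bytes:
    """Return a copy of `binary` whose Thumb function at `func_offset` returns 0 at once."""
    end = func_offset + len(RETURN_ZERO_THUMB)
    if func_offset < 0 or end > len(binary):
        raise ValueError("function offset lies outside the binary")
    patched = bytearray(binary)
    patched[func_offset:end] = RETURN_ZERO_THUMB  # overwrite the prologue
    return bytes(patched)
```

Forcing the return value leaves every individual check in place while neutralizing the final verdict. This is usually less work than defeating each check separately.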

- http://www.amazon.com/The-Mobile-Application-Hackers-Handbook/dp/1118958500

- http://www.amazon.com/Practical-Reverse-Engineering-Reversing-Obfuscation/dp/1118787315

- http://www.amazon.com/Hacking-Securing-iOS-Applications-Hijacking/dp/1449318746

- https://www.owasp.org/index.php/IOS_Application_Security_Testing_Cheat_Sheet

- https://www.theiphonewiki.com/wiki/Bugging_Debuggers

- http://www.opensource.apple.com/source/xnu/xnu-792.13.8/bsd/sys/ptrace.h

Bad Crypto 101

This post is part of a series about bad cryptography usage. We all rely heavily on cryptographic algorithms for data confidentiality and integrity, and although most commonly used algorithms are secure, they need to be used carefully and correctly. Just as holding a hammer backwards won’t yield the expected result, using cryptography badly won’t yield the expected results either.

To refresh my Android skillset, I decided to take apart a few Android applications that offer to encrypt personal files and protect them from prying eyes. I headed off to the Google Play Store and downloaded the first free application it recommended to me. I decided to only consider free applications, since most end users would prefer a cheap (free) solution compared to a paid one.

I stumbled upon the Encrypt File Free application by MobilDev. It seemed like the average file manager/encryption solution a user would want. I downloaded the application and ran it through my set of home-grown Android scanning scripts to look for possible evidence of bad crypto. I was quite surprised to see that no cryptographic algorithms were mentioned anywhere in the source code, apart from MD5. This looked suspicious, so I decided to take a closer look.

Looking at the JAR/DEX file’s structure, one class immediately got my attention: the Crypt class in the com.acr.encryptfilefree package. Somewhere halfway into the class, you find an interesting initializer routine.

This is basically a pretty bad substitution cipher. While looking through the code, I noticed that the values set in the init() function aren’t really used in production; they’re just test values, likely left over from testing the code while it was being written. Since signedness is handled manually, it is reasonable to assume that the initial code didn’t work as expected and that the developer poked at it until it gave the right output. Further evidence of this can be found in one of the encrypt() overloads in the same class, which contains a preliminary version of the file encryption routines.
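To see why a byte substitution cipher is so weak, it helps to build one. Below is a minimal Python sketch of the general technique. It is not the app's code: the table generation and the function names are hypothetical. Every plaintext byte value always maps to the same ciphertext byte, so any known file header reveals part of the table. For example, JPEG files start with FF D8. Python bytes are already unsigned (0 to 255). Java bytes are signed, which is why Java code has to mask with `& 0xFF` before using a byte as a table index, and this is the kind of manual signedness handling mentioned above.

```python
import random


def make_table(seed):
    """Hypothetical key: a random permutation of the 256 byte values."""
    table = list(range(256))
    random.Random(seed).shuffle(table)
    return table


def encode(data: bytes, table) -> bytes:
    return bytes(table[b] for b in data)  # same input byte -> same output byte


def decode(data: bytes, table) -> bytes:
    inverse = [0] * 256
    for plain, cipher in enumerate(table):
        inverse[cipher] = plain
    return bytes(inverse[b] for b in data)


def recover_entries(known_plain: bytes, observed: bytes):
    """Known-plaintext attack: each aligned byte pair reveals one table entry."""
    return dict(zip(known_plain, observed))
```

Because the mapping never changes, frequency analysis or a few known headers are usually enough to reconstruct the whole table, with no brute force needed.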

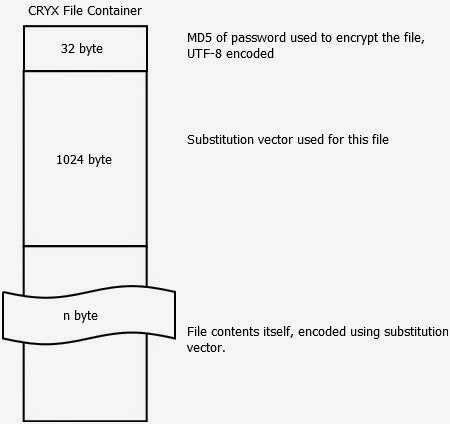

Digging further into the application reveals that the actual encryption logic lives in the Main$UpdateProgress.class file, along with more information about the file format itself. Halfway through the doInBackground(Void[] a) function, you discover that the file format is basically the following:

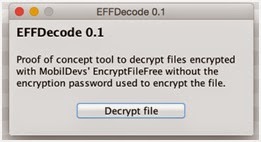

The password check on the files themselves turns out to be just a branch instruction, which can be located in Main$15$1.class and various other locations. Using this knowledge, an attacker could patch a copy of the application to gain unrestricted access to all files encoded with any password. Apart from rolling your own crypto, this is one of the worst offenses in password-protecting sensitive data: make sure you use the password as part of the key for the data, not as part of a branch instruction. Branches can be patched; keys need to be brute forced or, in the case of a weak algorithm, calculated.
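Using the password as part of the key usually means running it through a key derivation function. Below is a minimal Python sketch using the standard library's PBKDF2. The iteration count is an illustrative assumption, not a recommendation taken from this post. The derived key would then go to a vetted authenticated cipher, such as AES-GCM from a maintained library. A wrong password then simply produces a wrong key, and there is no branch to patch.

```python
import hashlib
import os


def derive_key(password: str, salt: bytes, iterations: int = 200_000) -> bytes:
    """Derive a 256-bit key from a password with PBKDF2-HMAC-SHA256."""
    # The iteration count is an assumed example value; tune it for the target device.
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, iterations, dklen=32)


# A random per-file salt is stored alongside the ciphertext; it does not need to be secret.
salt = os.urandom(16)
key = derive_key("correct horse battery staple", salt)
```

The high iteration count is deliberate. It makes each password guess more expensive for an attacker who brute-forces the key.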

The substitution vector in the file is remarkably long: it appears to be 1024 bytes. But don’t be fooled; this is a bug. Only the first 256 bytes are actually used, and the rest are simply ignored during processing. If we forget for a moment that a weak encoding is being used as a substitute for encryption, and assume this were a real algorithm, reducing the key from 1024 bytes to 256 bytes would be a serious compromise. Algorithms have ended up in the “do not use” corner for lesser offenses.

Silly Bugs That Can Compromise Your Social Media Life

After a quick review, I determined that the request was sent when I clicked on the Facebook Friends button, which allows users to search for friends from their Facebook account.

- installed

- basic_info

- public_profile

- create_note

- photo_upload

- publish_actions

- publish_checkins

- publish_stream

- status_update

- share_item

- video_upload

- user_friends
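A quick way to judge a request like this is to compare the permissions it asks for with what the feature plausibly needs. Below is a minimal Python sketch that makes two assumptions. First, the permissions travel in a comma-separated `scope` query parameter, as in Facebook's OAuth login dialog. Second, a friend search needs only `public_profile` and `user_friends`. That second set is a hypothetical judgment call, and the URL in the usage example is a placeholder.

```python
from urllib.parse import parse_qs, urlparse


def excess_permissions(login_url: str, needed: set) -> list:
    """Return permissions in the URL's `scope` parameter that are not in `needed`."""
    query = parse_qs(urlparse(login_url).query)
    requested = []
    for value in query.get("scope", []):
        requested.extend(p.strip() for p in value.split(",") if p.strip())
    return sorted(set(requested) - needed)


# Placeholder URL; APP_ID stands in for the real client id.
url = ("https://www.facebook.com/dialog/oauth?client_id=APP_ID"
       "&scope=user_friends,publish_actions,photo_upload")
print(excess_permissions(url, {"public_profile", "user_friends"}))
```

With the list above, most of the publish and upload permissions would show up as excess for a feature that only searches for friends.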

One Mail to Rule Them All

Now for the gold: his Facebook. Using the same method there, I gained access to his Facebook; he also had a Flickr account set to log in with Facebook. How convenient. I now own his whole online “life”. There’s also an account at an online electronics store; nice, and it’s been approved for credit.

In this case, it was easy.

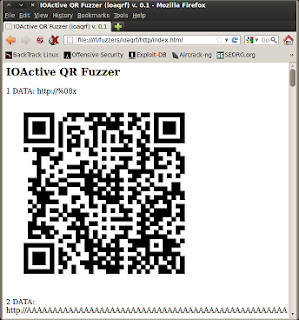

QR Fuzzing Fun

QR codes [1] have become quite popular due to their fast readability and large storage capacity. These days it is very easy to find QR codes almost anywhere, encoding information such as a URL, a phone number, or vCard data, and there are many smartphone apps that can read/scan them.

| Platform | Popular QR Apps / Libraries |
| --- | --- |
| Android | Google Goggles, ZXing, QRDroid |
| iOS | ZXing, ZBar |
| BlackBerry | App World |
| Windows Phone | Bing Search App, ZXlib |

QR codes are very interesting to attackers because they can store a large quantity of information, from under 1,000 up to about 7,000 characters, which is plenty for a malicious payload. QR codes can also be encrypted and used for security purposes. Malicious QR codes can abuse overly permissive app permissions to compromise the system and user data; this attack is known as “attagging”. QR codes can also serve as an attack vector for DoS, SQL injection, Cross-Site Scripting (XSS), and information-stealing attacks, among others.
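Fuzzing a QR reader starts with a corpus of payloads that still fit in a single code. Below is a minimal Python sketch that builds such a corpus. The seed payloads are illustrative examples of the XSS, SQL injection, and oversized-input classes mentioned above. The limit used is 2,953 bytes, the byte-mode capacity of the largest QR symbol (version 40) at error-correction level L. It assumes each string would afterwards be rendered to an image with a QR generator library (not shown) and scanned by the app under test.

```python
# Byte-mode capacity of a version 40 QR code at error-correction level L.
QR_V40_L_BYTE_CAPACITY = 2953

# Illustrative seeds for the attack classes above (not taken from a real campaign).
BASE_PAYLOADS = [
    "<script>alert(1)</script>",         # XSS if the reader renders text as HTML
    "' OR '1'='1' --",                   # SQL injection if the text reaches a query
    "http://example.com/" + "A" * 2000,  # oversized URL for parser/DoS testing
]


def fuzz_cases(base_payloads, capacity=QR_V40_L_BYTE_CAPACITY):
    """Yield each payload plus simple length mutations that still fit in one QR code."""
    for payload in base_payloads:
        for candidate in (payload, payload * 2, payload + "A" * 1000):
            if len(candidate.encode("utf-8")) <= capacity:
                yield candidate
```

Mutations that exceed the capacity are dropped rather than truncated. A truncated payload would no longer exercise the intended input class.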