Artificial Intelligence (AI) is transforming industries and everyday life, driving innovations once relegated to the realm of science fiction into modern reality. As AI technologies grow more integral to complex systems like autonomous vehicles, healthcare diagnostics, and automated financial trading platforms, the imperative for robust security measures increases exponentially.

Securing AI is not only about safeguarding data but also about ensuring the core systems — in particular, the trained models that really put the “intelligence” in AI — function as intended without malicious interference. Historical lessons from earlier technologies offer some guidance and can be used to inform today’s strategies for securing AI systems. Here, we’ll explore the evolution, current state, and future direction of AI security, with a focus on why it’s essential to learn from the past, secure the present, and plan for a resilient future.

AI: The Newest Crown Jewel

Security in the context of AI is paramount precisely because AI systems increasingly handle sensitive data, make important, autonomous decisions, and operate with limited supervision in critical environments where safety and confidentiality are key. As AI technologies burrow further into sectors like healthcare, finance, and national security, the potential for misuse or harmful consequences due to security shortcomings rises to concerning levels. Several factors drive the criticality of AI security:

- Data Sensitivity: AI systems process and learn from large volumes of data, including personally identifiable information, proprietary business information, and other sensitive data types. Ensuring the security of enterprise training data as it passes to and through AI models is crucial to maintaining privacy, regulatory compliance, and the integrity of intellectual property.

- System Integrity: The integrity of AI systems themselves must be well defended in order to prevent malicious alterations or tampering that could lead to bogus outputs and incorrect decisions. In autonomous vehicles or medical diagnosis systems, for example, instructions issued by compromised AI platforms could have life-threatening consequences.

- Operational Reliability: AI is increasingly finding its way into critical infrastructure and essential services. Therefore, ensuring these systems are secure from attacks is vital for maintaining their reliability and functionality in critical operations.

- Matters of Trust: For AI to be widely adopted, users and stakeholders must trust that the systems are secure and will function as intended without causing unintended harm. Security breaches or failures can undermine public confidence and hinder the broader adoption of emerging AI technologies over the long haul.

- Adversarial Activity: AI systems are uniquely susceptible to attacks in which slight manipulations of inputs deceive a model into making incorrect decisions or producing malicious output. Examples include small perturbations to images or sensor readings and, for language models, crafted prompts (sometimes called prompt hacking or prompt injection). Understanding the capabilities of malicious actors and building robust defenses against such input-based attacks is crucial for the secure deployment of AI technologies.

In short, security in AI isn’t just about protecting data. It’s also about ensuring safe, reliable, and ethical use of AI technologies across all applications. These tightly interlinked requirements continue to drive research and ongoing development of advanced security measures tailored to the unique challenges posed by AI.
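As one concrete illustration of the system integrity point above, a deployment can refuse to load a model file whose cryptographic digest does not match a value recorded when the model was released. The following is a minimal sketch using only the Python standard library; the function names and the idea of a separately stored trusted digest are assumptions made for illustration, not a prescribed process.

```python
import hashlib
import hmac
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the hex SHA-256 digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def model_is_untampered(path: Path, expected_digest: str) -> bool:
    """Check a model file against a trusted digest (hypothetical helper).

    The expected digest is assumed to come from a source the attacker
    cannot modify, such as a signed release manifest.
    """
    actual = sha256_of_file(path)
    # compare_digest avoids leaking information through comparison timing.
    return hmac.compare_digest(actual, expected_digest.lower())
```

A check like this only detects changes to the stored artifact. It says nothing about whether the model was trained on trustworthy data in the first place.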

Looking Back: Historical Security Pitfalls

We don’t have to turn the clock back very far to witness new, vigorously hyped technology solutions wreaking havoc on the global cybersecurity risk register. Consider the peer-to-peer recordkeeping database mechanism known as blockchain. When blockchain exploded into the zeitgeist circa 2008, alongside the equally disruptive concept of cryptocurrency, its introduction brought great excitement thanks to its potential for decentralized data management and its promise of enhanced data security. Within a decade, however, events such as the 2016 DAO hack, an exploitation of smart contract vulnerabilities that led to substantial, if temporary, financial losses, demonstrated the risk of adopting new technologies without diligent security vetting.

As a teaching moment, the DAO incident highlights two issues: the complex interplay of software immutability and coding mistakes, and the disastrous consequences of security oversights in decentralized systems. The case study teaches us that with every innovative leap, a thorough understanding of the new security landscape is crucial, especially as we integrate similar technologies into AI-enabled systems.

Historical analysis of other emerging technology failures over the years reveals other common themes, such as overreliance on untested technologies, misjudgment of the security landscape, and underestimation of cyber threats. These pitfalls are exacerbated by hype-cycle-powered rapid adoption that often outstrips current security capacity and capabilities. For AI, these themes underscore the need for a security-first approach in development phases, continuous vulnerability assessments, and the integration of robust security frameworks from the outset.

Current State of AI Security

With AI solutions now pervasive, each use case introduces unique security challenges. Be it predictive analytics in finance, real-time decision-making in manufacturing, or something else entirely, each application requires a tailored security approach that takes into account the specific data types and operational environments involved. It’s a complex landscape where rapid technological advancements run headlong into evolving security concerns. Key features of this challenging infosec environment include:

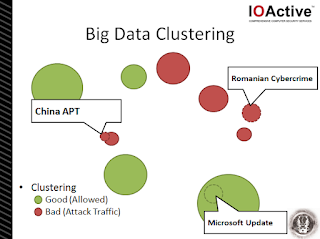

- Advanced Threats: AI systems face a range of sophisticated threats, including data poisoning, which can skew an AI’s learning and reinforcement processes, leading to flawed outputs; model theft, in which proprietary intellectual property is exposed; and other adversarial actions that can manipulate AI perceptions and decisions in unexpected and harmful ways. These threats are unique to AI and demand specialized security responses that go beyond traditional cybersecurity controls.

- Regulatory and Compliance Issues: With statutes such as GDPR in Europe, CCPA in California, and similar data security and privacy mandates worldwide, technology purveyors and end users alike are under increased pressure to prioritize safe data handling and processing. On top of existing privacy rules, the Biden administration in the U.S. issued a comprehensive executive order in October 2023 establishing new standards for AI safety and security. In Europe, meanwhile, the EU’s newly adopted Artificial Intelligence Act provides granular guidelines for dealing with AI-related risk. These new rules can clash with AI-enabled applications that demand ever more access to data without much regard for its origin or sensitivity.

- Integration Challenges: As AI becomes more integrated into critical systems across a wide swath of vertical industries, ensuring security coherence across different platforms and blended technologies remains a significant challenge. Rapid adoption and integration expose modern AI systems to traditional threats and legacy network vulnerabilities, compounding the risk landscape.

- Explainability: As adoption grows, the matter of AI explainability, or the ability to understand and interpret the decisions made by AI systems, becomes increasingly important. This concept is crucial in building trust, particularly in sensitive fields like healthcare where decisions can have profound impacts on human lives. Consider an AI system used to diagnose disease from medical imaging. If such a system identifies potential tumors in a scan, clinicians and patients must be able to understand the basis of these conclusions to trust in their reliability and accuracy. Without clear explanations, clinicians may hesitate to accept the AI’s recommendations, leading to delays in treatment or disregard of useful AI-driven insights. Explainability not only enhances trust, it also ensures AI tools can be effectively integrated into clinical workflows, providing clear guidance that healthcare professionals can evaluate alongside their own expertise.

Addressing such risks requires a deep understanding of AI operations and the development of specialized security techniques such as differential privacy, federated learning, and robust adversarial training methods. The good news here: In response to AI’s risk profile, the field of AI security research and development is on a steady growth trajectory. Over the past 18 months the industry has witnessed increased investment aimed at developing new methods to secure AI systems, such as encryption of AI models, robustness testing, and intrusion detection tailored to AI-specific operations.
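To make the idea of robustness testing more tangible, the sketch below checks whether a tiny linear classifier keeps its prediction when every input feature is nudged by at most `epsilon` in the most damaging direction. It follows the intuition behind the fast gradient sign method: for a linear model, the gradient of the score with respect to the input is simply the weight vector. The weights, bias, and function names are made up for illustration; production models and test harnesses are far more involved.

```python
from typing import List, Sequence


def score(weights: Sequence[float], bias: float, x: Sequence[float]) -> float:
    """Linear decision score: positive means class 1, otherwise class 0."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def predict(weights: Sequence[float], bias: float, x: Sequence[float]) -> int:
    return 1 if score(weights, bias, x) > 0 else 0


def sign(value: float) -> int:
    return (value > 0) - (value < 0)


def worst_case_input(
    weights: Sequence[float], bias: float, x: Sequence[float], epsilon: float
) -> List[float]:
    """Move each feature by at most epsilon toward the opposite class."""
    # Push the score down if the model currently says 1, up if it says 0.
    direction = -1 if predict(weights, bias, x) == 1 else 1
    return [xi + direction * epsilon * sign(w) for w, xi in zip(weights, x)]


def is_robust(
    weights: Sequence[float], bias: float, x: Sequence[float], epsilon: float
) -> bool:
    """True if no perturbation within the epsilon budget flips the prediction."""
    adversarial = worst_case_input(weights, bias, x, epsilon)
    return predict(weights, bias, adversarial) == predict(weights, bias, x)


# Hypothetical model and input, chosen only to demonstrate the check.
WEIGHTS = [2.0, -1.0, 0.5]
BIAS = -0.25
SAMPLE = [0.5, 0.4, 0.3]
```

With these illustrative numbers, `is_robust(WEIGHTS, BIAS, SAMPLE, 0.1)` holds while `is_robust(WEIGHTS, BIAS, SAMPLE, 0.2)` does not, which shows how a small change in the perturbation budget can separate a stable prediction from a flipped one.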

At the same time, there’s also rising awareness of AI security needs beyond the boundaries of cybersecurity organizations and infosec teams. That’s led to better education and training for application developers and users, for example, on the potential risks and best practices for securing AI-powered systems.

Overall, enterprises at large have made substantial progress in identifying and addressing AI-specific risk, but significant challenges remain, requiring ongoing vigilance, innovation, and adaptation in AI defensive strategies.

Data Classification and AI Security

One area getting a fair bit of attention in the context of safeguarding AI-capable environments is effective data classification. The ability to earmark data (public, proprietary, confidential, etc.) is essential for good AI security practice. Data classification ensures that sensitive information is handled appropriately within AI systems. Proper classification aids in compliance with regulations and prevents sensitive data from being used — intentionally or unintentionally — in training datasets that can be targets for attack and compromise.

The inadvertent inclusion of personally identifiable information (PII) in model training data, for example, is a hallmark of poor data management in an AI environment. A breach in such systems not only compromises privacy but also exposes organizations to profound legal and reputational damage. Organizations adopting AI to further their business strategies must be ever aware of the need for stringent data management protocols and advanced data anonymization techniques before data enters the AI processing pipeline.
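The sketch below shows one way classification labels and redaction could gate what reaches a training set: records above an allowed sensitivity level are dropped, and obvious PII in the remaining text is masked. The `Sensitivity` levels mirror the public, proprietary, and confidential labels mentioned above; the `Record` type, the function names, and the two regular expressions are assumptions for illustration and would miss many real-world PII formats.

```python
import re
from dataclasses import dataclass
from enum import IntEnum
from typing import Iterable, List


class Sensitivity(IntEnum):
    PUBLIC = 0
    PROPRIETARY = 1
    CONFIDENTIAL = 2


@dataclass(frozen=True)
class Record:
    text: str
    label: Sensitivity


# Deliberately simple patterns; real PII detection needs far broader coverage.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
US_PHONE = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")


def redact(text: str) -> str:
    """Mask email addresses and US-style phone numbers."""
    text = EMAIL.sub("[EMAIL]", text)
    return US_PHONE.sub("[PHONE]", text)


def prepare_training_set(
    records: Iterable[Record],
    max_label: Sensitivity = Sensitivity.PROPRIETARY,
) -> List[str]:
    """Drop records above max_label, then redact the text that remains."""
    return [redact(r.text) for r in records if r.label <= max_label]
```

Filtering on the label before redacting means that confidential records never enter the pipeline at all, rather than relying on pattern matching to catch everything sensitive inside them.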

The Future of AI Security: Navigating New Horizons

As AI continues to evolve and tunnel its way further into every facet of human existence, securing these systems from potential threats, both current and future, becomes increasingly critical. Peering into AI’s future, it’s clear that any promising new developments in AI capabilities must be accompanied by robust strategies to safeguard systems and data against the sophisticated threats of tomorrow.

The future of AI security will depend heavily on our ability to anticipate potential security issues and tackle them proactively before they escalate. Here are some ways security practitioners can prevent future AI-related security shortcomings:

- Continuous Learning and Adaptation: AI systems can be designed to learn from past attacks and adapt to prevent similar vulnerabilities in the future. This involves using machine learning algorithms that evolve continuously, enhancing their detection capabilities over time.

- Enhanced Data Privacy Techniques: As data is the lifeblood of AI, employing advanced and emerging data privacy technologies such as differential privacy and homomorphic encryption will ensure that data can be used for training without exposing sensitive information.

- Robust Security Protocols: Establishing rigorous security standards and protocols from the initial phases of AI development will be crucial. This includes implementing secure coding practices, regular security audits, and vulnerability assessments throughout the AI lifecycle.

- Cross-Domain Collaboration: Sharing knowledge and strategies across industries and domains can lead to a more robust understanding of AI threats and mitigation strategies, fostering a community approach to AI security.
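To give a feel for how differential privacy works in practice, here is a minimal sketch of the Laplace mechanism applied to a counting query, one of the basic building blocks the technique rests on. Noise scaled to `1 / epsilon` is added to the true count so that the presence or absence of any single individual has only a bounded effect on the released value. The function names and sample data are hypothetical, and private model training relies on more elaborate methods than this.

```python
import random
from typing import Callable, Iterable


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample zero-mean Laplace noise as the difference of two exponentials."""
    if scale <= 0:
        raise ValueError("scale must be positive")
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)


def private_count(
    values: Iterable[float],
    matches: Callable[[float], bool],
    epsilon: float,
    rng: random.Random,
) -> float:
    """Release a noisy count of the values that satisfy `matches`."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for v in values if matches(v))
    # A count changes by at most 1 when one person is added or removed,
    # so its sensitivity is 1 and the noise scale is 1 / epsilon.
    return true_count + laplace_noise(1.0 / epsilon, rng)
```

A smaller `epsilon` adds more noise and gives stronger privacy at the cost of accuracy, so choosing it is a policy decision as much as a technical one.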

Looking Further Ahead

Beyond the immediate horizon, the field of AI security is set to witness several meaningful advancements:

- Autonomous Security: AI systems capable of self-monitoring and self-defending against potential threats will soon become a reality. These systems will autonomously detect, analyze, and respond to threats in real time, greatly reducing the window for attacks.

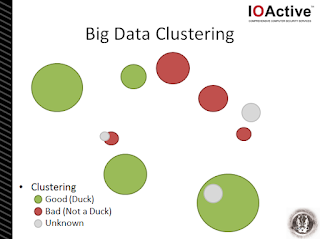

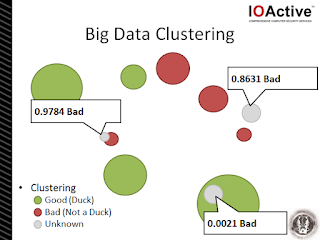

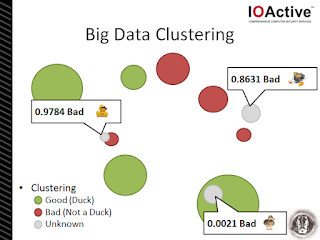

- Predictive Security Models: Leveraging big data and predictive analytics, AI can forecast potential security threats before they manifest. This proactive approach will allow organizations to implement defensive measures in advance.

- AI in Cybersecurity Operations: AI will increasingly become both weapon and shield. AI is already being used to enhance cybersecurity operations, providing the ability to sift through massive amounts of data for threat detection and response at a speed and accuracy unmatchable by humans. The technology and its underlying methodologies will only get better with time. This ability for AI to remove the so-called “human speed bump” in incident detection and response will take on greater importance as the adversaries themselves increasingly leverage AI to generate malicious attacks that are at once faster, deeper, and potentially more damaging than ever before.

- Decentralized AI Security Frameworks: With the rise of blockchain technology, decentralized approaches to AI security will likely develop. These frameworks can provide transparent and tamper-proof systems for managing AI operations securely.

- Ethical AI Development: As part of securing AI, initiatives are gaining momentum to ensure that AI systems are developed with ethical considerations in mind. Such efforts aim to reduce bias and promote fairness, enhancing security by aligning AI operations with human values.

As with any rapidly evolving technology, the journey toward a secure AI-driven future is complex and fraught with challenges. But with concerted effort and prudent innovation, it’s entirely within our grasp to anticipate and mitigate these risks effectively. As we advance, the integration of sophisticated AI security controls will not only protect against potential threats, it will foster trust and promote broader adoption of this transformative technology. The future of AI security is not just about defense but about creating a resilient, reliable foundation for the growth of AI across all sectors.

Charting a Path Forward in AI Security

Few technologies in the past generation have held the promise for world-altering innovation in the way AI has. Few would quibble with AI’s immense potential to disrupt and benefit human pursuits from healthcare to finance, from manufacturing to national security and beyond. Yes, Artificial Intelligence is revolutionary. But it’s not without cost. AI comes with its own inherent collection of vulnerabilities that require vigilant, innovative defenses tailored to their unique operational contexts.

As we’ve discussed, embracing sophisticated, proactive, ethical, collaborative AI security and privacy measures is the only way to ensure we’re not only safeguarding against potential threats but also fostering trust to promote the broader adoption of what most believe is a brilliantly transformative technology.

The journey towards a secure AI-driven future is indeed complex and fraught with obstacles. However, with concerted effort, continuous innovation, and a commitment to ethical practices, successfully navigating these impediments is well within our grasp. As AI continues to evolve, so too must our strategies for defending it.