The great annual experience of Black Hat and DEF CON starts in just a few days, and we here at IOActive have a lot to share. This year we have several groundbreaking hacking talks and fun activities that you won’t want to miss!

For Fun

Join IOActive for an evening of dancing

Our very own DJ Alan Alvarez is back – coming all the way from Mallorca to turn the House of Blues RED. Because no one prefunks like IOActive.

Wednesday, August 5th

6–9PM

House of Blues

Escape to the IOAsis – DEF CON style!

We invite you to escape the chaos and join us in our luxury suite at Bally’s for some fun and great networking.

Friday, August 7th

12–6PM

Bally’s penthouse suite

· Unwind with a massage

· Enjoy spectacular food and drinks

· Participate in discussions on the hottest topics in security

· Challenge us to a game of pool!

FREAKFEST 2015

After a two-year hiatus, we’re bringing back the party and taking it up a few notches. Join DJs Stealth Duck, Alan Alvarez, and Keith Myers as they get our booties shaking. All are welcome! This is a chance for the entire community to come together to dance, swim, laugh, relax, and generally FREAK out!

And what’s a FREAKFEST without freaks? We invite the community to go beyond just dancing and get their true freak on.

Saturday, August 8th

10PM till you drop

Bally’s BLU Pool

For Hacks

Escape to the IOAsis – DEF CON style!

Join the IOActive research team for an exclusive sneak peek into the world of IOActive Labs.

Friday, August 7th

12–6PM

Bally’s penthouse suite

Enjoy Lightning Talks with IOActive Researchers:

Straight from our hardware labs, our brilliant researchers will talk about their latest findings in a group of sessions we like to call IOActive Labs Presents:

· Mike Davis & Michael Milvich: Lunch & Lab – an overview of the IOActive Hardware Lab in Seattle

· Robert Erbes: Little Jenny is Export Controlled: When Knowing How to Type Turns 8th-graders into Weapons

· Vincent Berg: The PolarBearScan

· Kenneth Shaw: The Grid: A Multiplayer Game of Destruction

· Andrew Zonenberg: The Anti Taco Device: Because Who Doesn’t Go to All This Trouble?

· Sofiane Talmat: The Dark Side of Satellite TV Receivers

· Fernando Arnaboldi: Mathematical Incompetence in Programming Languages

· Joseph Tartaro: PS4: General State of Hacking the Console

· Ilja Van Sprundel: An Inside Look at the NIC Minifilter

RSVP at https://www.eventbrite.com/e/ioactive-las-vegas-2015-tickets-17604087299

Black Hat/DEF CON

Speaker: Chris Valasek

Remote Exploitation of an Unaltered Passenger Vehicle

Black Hat: 3PM Wednesday, August 5, 2015

DEF CON: 2PM Saturday, August 8, 2015

In case you haven’t heard, Dr. Charlie Miller and I will be speaking at Black Hat and DEF CON about our remote compromise of a 2014 Jeep Cherokee (http://www.wired.com/2015/07/hackers-remotely-kill-jeep-highway/). While you may have seen media regarding the project, our presentation will examine the research at a much more granular level. Additionally, we have a few small bits that haven’t been talked about yet, including some unseen video, along with a 90-page white paper that will provide you with an abundance of information regarding vehicle security assessments. I hope to see you all there!

Speaker: Colin Cassidy

Switches get Stitches

Black Hat: 3PM Wednesday, August 5, 2015

DEF CON: 4PM Saturday, August 8, 2015

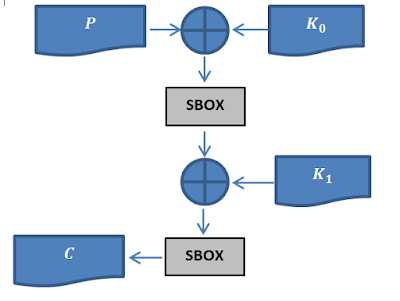

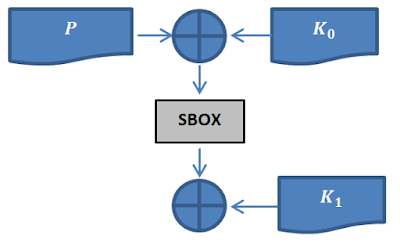

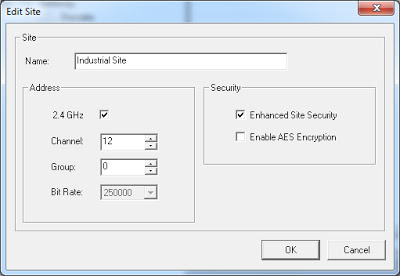

Have you ever stopped to think about the network equipment between you and your target? Yeah, we did too. In this talk we will be looking at the network switches that are used in industrial environments, like substations, factories, refineries, ports, or other homes of industrial automation. In other words: DCS, PCS, ICS, and SCADA switches.

We’ll be attacking the management plane of these switches because we already know that most ICS protocols lack authentication and cryptographic integrity. By compromising the switches, an attacker can perform MITM attacks on live processes.

Not only will we reveal new vulnerabilities, along with the methods and techniques for finding them, we will also share defensive techniques and mitigations that can be applied now, to protect against the average 1-3 year patching lag (or even worse, “forever-day” issues that are never going to be patched).

Speaker: Damon Small

Beyond the Scan: The Value Proposition of Vulnerability Assessment

DEF CON: 2PM Thursday, August 6, 2015

It is a privilege to have been chosen to present at DEF CON 23. My presentation, “Beyond the Scan: The Value Proposition of Vulnerability Assessment”, is not about how to scan a network; rather, it is about how to consume the data you gather effectively and how to transform it into useful information that will effect meaningful change within your organization.

As I state in my opening remarks, scanning is “Some of the least sexy capabilities in information security”. So how do you turn such a base activity into something interesting? Key points I will make include:

· Clicking “scan” is easy. Making sense of the data is hard and requires skilled professionals. The tools you choose are important, but the people using them are critical.

· Scanning a few specific hosts once a year is a compliance activity and useful only within the context of the standard or regulation that requires it. I advocate for longitudinal studies where large numbers of hosts are scanned regularly over time. This reveals trends that allow the information security team not only to identify missing patches and configuration issues, but also to validate processes, strengthen asset management practices, and support both strategic and tactical initiatives.

I illustrate these concepts using several case studies. In each, the act of assessing the network revealed information to the client that was unexpected, and valuable, “beyond the scan.”

Speaker: Fernando Arnaboldi

Abusing XSLT for Practical Attacks

Black Hat: 3:50PM Thursday, August 6, 2015

DEF CON: 2PM Saturday, August 8, 2015

XML and XML schemas (e.g., DTD) are an interesting target for attackers. They may allow an attacker to retrieve internal files and abuse applications that rely on these technologies. Alongside these technologies, there is a specific language created to manipulate XML documents that has gone unnoticed by attackers so far: XSLT.

XSLT is used to manipulate and transform XML documents. Since its definition, it has been implemented in a wide range of software (standalone parsers, programming language libraries, and web browsers). In this talk I will expose some security implications of using the most widely deployed version of XSLT.

Speaker: Jason Larsen

Remote Physical Damage 101 – Bread and Butter Attacks

Black Hat: 9AM Thursday, August 6, 2015

Speaker: Jason Larsen

Rocking the Pocket Book: Hacking Chemical Plants for Competition and Extortion

DEF CON: 6PM Friday, August 7, 2015

Speaker: Kenneth Shaw

The Grid: A Multiplayer Game of Destruction

DEF CON: 12PM Sunday, August 9, 2015, IoT Village, Bronze Room

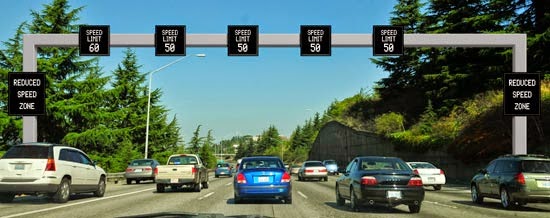

Kenneth will host a table in the IoT Village at DEF CON where he will present a demo and explanation of vulnerabilities in the US electric grid.

Speaker: Sofiane Talmat

Subverting Satellite Receivers for Botnet and Profit

Black Hat: 5:30PM Wednesday, August 5, 2015

Security and the New Generation of Set Top Boxes

DEF CON: 2PM Saturday, August 8, 2015, IoT Village, Bronze Room

New satellite TV receivers are revolutionary. One of the devices used in this research is much more powerful than my graduation computer, running a Linux OS and featuring a 32-bit RISC processor at 450 MHz with 256MB RAM.

Satellite receivers are joining the IoT en masse and are used to decrypt pay TV through card-sharing attacks. However, they are far from secure. In this upcoming session we will discuss their weaknesses, focusing on a specific attack that exploits both technical and design vulnerabilities, including the human factor, to build a botnet of Linux-based satellite receivers.

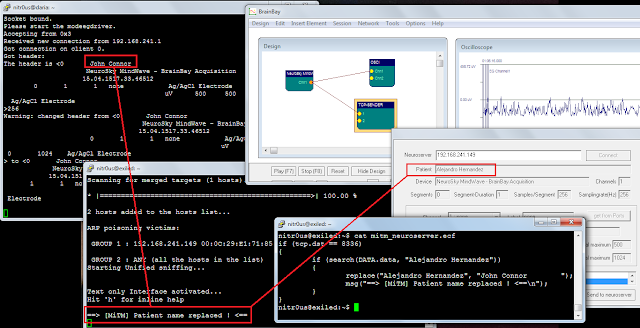

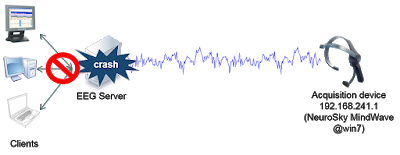

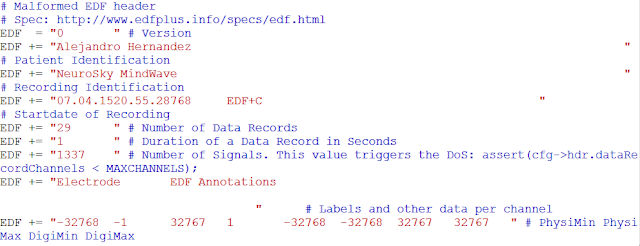

Speaker: Alejandro Hernandez

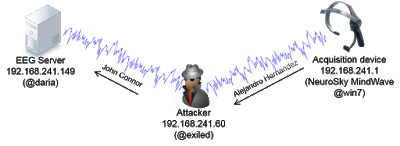

Brain Waves Surfing – (In)security in EEG (Electroencephalography) Technologies

DEF CON: 7-7:50PM Saturday, August 8, 2015, BioHacking Village, Bronze 4, Bally’s

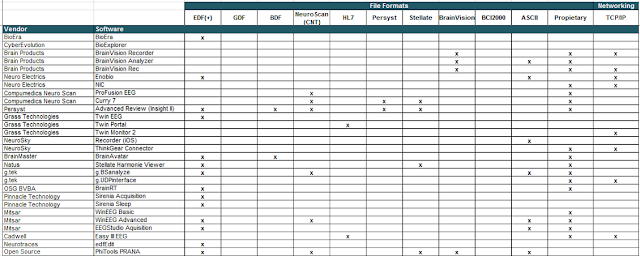

Electroencephalography (EEG) is a non-invasive method for recording and studying electrical activity (synapse between neurons) of the brain. It can be used to diagnose or monitor health conditions such as epilepsy, sleep disorders, seizures, and Alzheimer’s disease, among other clinical uses. Brain signals are also being used for many other research and entertainment purposes, such as neurofeedback, arts, and neurogaming.

I wish this were a talk on how to become Johnny Mnemonic, so you could store terabytes of data in your brain, but, sorry to disappoint you, I will only cover non-invasive EEG. I will cover 101-level issues that we have all known about since the ’90s and that still affect this 21st-century technology.

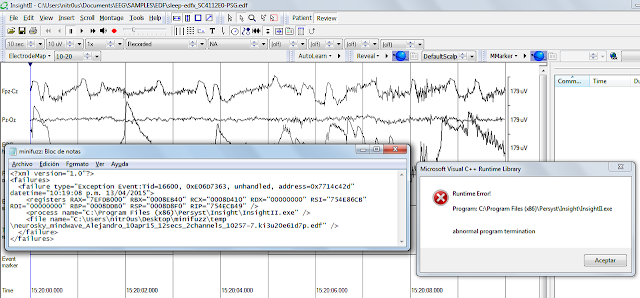

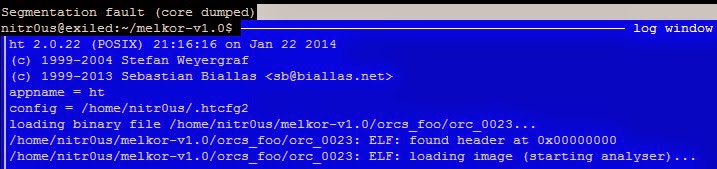

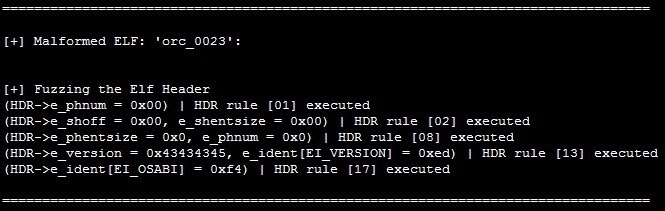

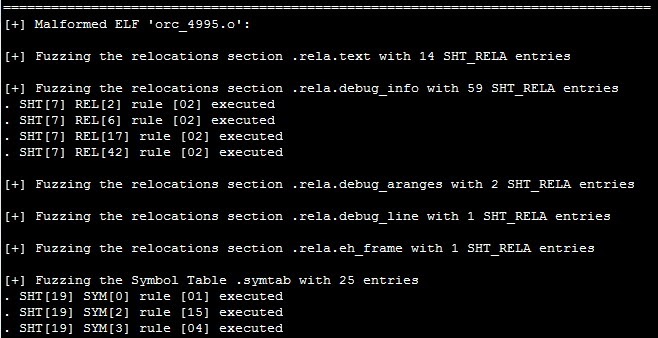

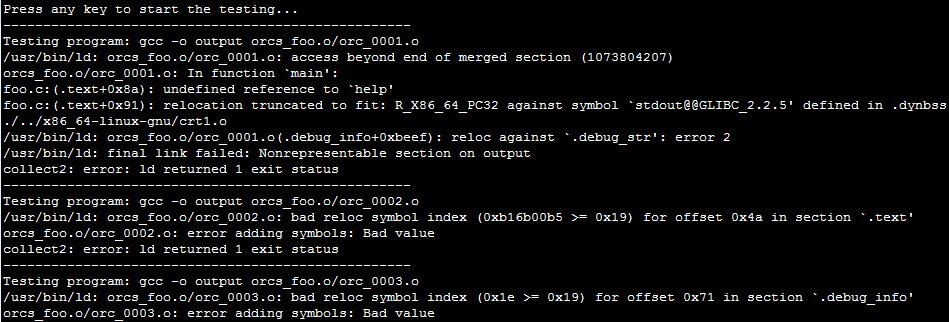

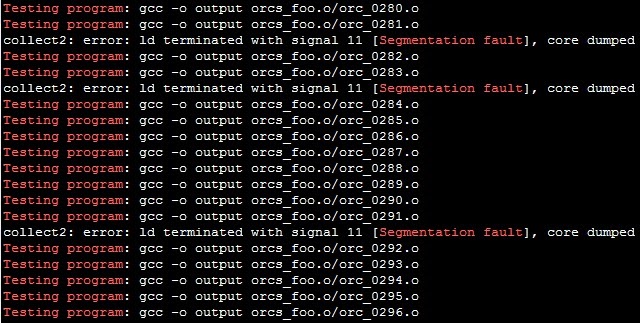

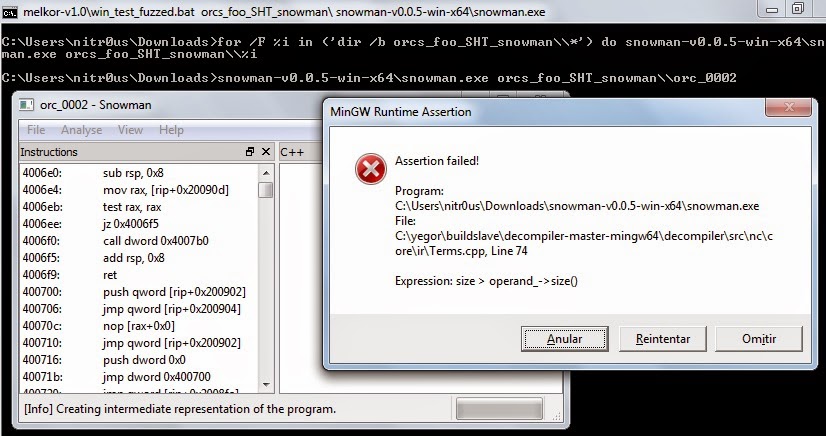

I will give a brief introduction to Brain-Computer Interfaces and EEG in order to explain the risks involved in brain signal processing, storage, and transmission. I will cover common (in)security aspects, such as encryption and authentication, as well as (in)security in design. I will also perform some live demos, such as the visualization of live brain activity, sniffing of brain signals over TCP/IP, and software bugs in well-known EEG applications when dealing with corrupted brain activity files. This talk is a first step toward demonstrating that many EEG technologies are vulnerable to common network and application attacks.