Recently, I ventured into the crazy world of differential cryptanalysis purely to find out what the heck it was all about. In this post, I hope to reassure you that this strange and rather cool technique is not as scary as it seems. Hopefully, you’ll be attacking some ciphers of your own in no time!

A differential cryptanalysis attack is a method of abusing pairs of plaintext and corresponding ciphertext to learn about the secret key that encrypted them, or, more precisely, to reduce the amount of time needed to find the key. It’s what is called a chosen plaintext attack; the attacker has access to plaintext and corresponding ciphertext.

We’re going to attack a simple block cipher I stole using another explanation of the technique (links are available at the bottom of the post). This cipher is meant to exhibit a few rudimentary elements of a Substitution-Permutation Network (SPN) (minus some common elements for simplicity), while highlighting the power of the differential technique. So, let’s get started.

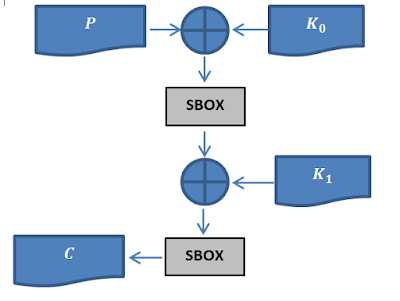

Where:

- C is the cipher-text

- K_0, K_1 are the first and second round keys, respectively

- P is the plaintext

- SBOX is the substitution box.

As you can probably guess from looking at the schematic,: the cipher takes in two 2 sub-keys (K_0,K_1) and a piece of plain-text. It, it then XORs the plain-text with the first key, K_0, then, afterwards pulls the plain-text through a substitution box (SBOX) (we will talk about this little gadget soon). From there, it then takes the output of the SBOX, and XORs it with K_01, and then pulls it through the SBOX again to produce the file piece of final cipher-text. So now that we know how the cipher works, and we can begin formulating our attack.!

To start off with, let’s try writing down this cipher in an algebraic form:

C=SBOX(SBOX(P⨁K_0 )⨁K_1 )

(1)

Great! We can start talking about some of the weird properties of this cipher.

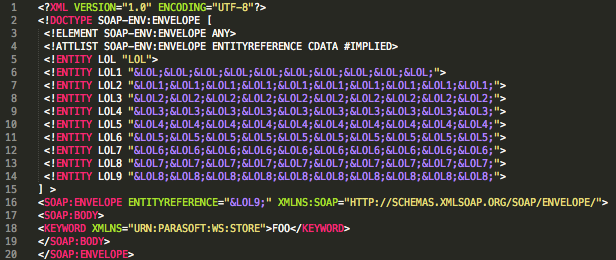

We know that when it comes to the cryptographic security of an encryption function the only thing that should be relied on for Confidentiality is your secret key (these are the words of a great Dutch cryptographer a long time ago [https://en.wikipedia.org/wiki/Auguste_Kerckhoffs]). This means the algorithm, including the workings of the SBOXs, will be known (or rather, in our analysis, we should always assume it will be known) by our attacker. Anything secret in our algorithm cannot be relied on for security. More specifically, any operation that is completely reversible (requiring only knowledge of the ciphertext) is useless!

When it comes to the use of substitution boxes, unless something we don’t know meaningfully obscures the output of the SBOX, using it will not add any security. It will be reduced to a mere reversible “encoding” of its input.

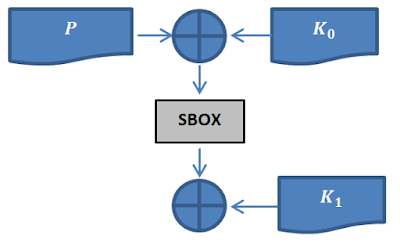

Looking at the algebraic form in (1), we can see the cipher pulls the input through the SBOX again in its last operation before returning it as ciphertext. This operation adds no security at all, especially in the context of a differential attack, because it is trivial to reverse the effect of the last SBOX. To make this clear, imagine knowing the output of the SBOX, but not being capable of knowing the input. Given the context of the last SBOX, this is impossible if you know the ciphertext. We can reduce the encryption function and focus on what actually adds security. The function becomes:

Ironically, the SBOX in this expression (2), is now the only thing that adds security, so it will be the target of our analysis. Consider what would happen if we could know the output of the SBOX, including the plaintext/ciphertext pairs. The entire security of the cipher would fly out the window. After a few XOR operations, we would be able to work out the second key, and by extrapolation, the first key.

But how would we know the SBOX output? Well, what about analyzing the way the SBOX behaves by observing how differences between inputs map to differences in outputs. This insight is at the heart of differential cryptanalysis! Why choose this property? The only thing we have access to is plaintext/ciphertext pairs in a differential cryptanalysis attack (thems the rules), so we need to derive our information from this data. Also, XOR differences have favorable properties under XOR, namely they are unaffected by them. If we look at how the SBOX maps these differences, this is what we get:

|

∆x

|

∆y

|

count

|

[(x,x^’), (y,y^’)]

|

|

15

|

4

|

1

|

[(0, 15), (3, 7)]

|

|

15

|

7

|

1

|

[(3, 12), (10, 13)]

|

|

15

|

6

|

2

|

[(4, 11), (4, 2)] [(5, 10), (9, 15)]

|

|

5

|

15

|

1

|

[(3, 6), (10, 5)]

|

|

5

|

10

|

2

|

[(0, 5), (3, 9)] [(1, 4), (14, 4)]

|

|

3

|

15

|

1

|

[(1, 2), (14, 1)]

|

|

3

|

12

|

2

|

[(5, 6), (9, 5)] [(13, 14), (12, 0)]

|

|

3

|

10

|

2

|

[(8, 11), (8, 2)] [(12, 15), (13, 7)]

|

|

5

|

2

|

1

|

[(11, 14), (2, 0)]

|

|

5

|

4

|

1

|

[(8, 13), (8, 12)]

|

|

5

|

7

|

1

|

[(2, 7), (1, 6)]

|

|

5

|

6

|

1

|

[(9, 12), (11, 13)]

|

|

5

|

8

|

1

|

[(10, 15), (15, 7)]

|

|

4

|

15

|

1

|

[(10, 14), (15, 0)]

|

|

4

|

12

|

1

|

[(3, 7), (10, 6)]

|

|

1

|

7

|

1

|

[(14, 15), (0, 7)]

|

|

1

|

1

|

1

|

[(12, 13), (13, 12)]

|

- ∆x = x⊕x^’

- ∆y = y⊕y^’

- x^’,x is the input pair to the SBOX

- y’,y is the output pair of from the SBOX

- And ∆x→ ∆y is a differential characteristic

- Count is the number of times the differential characteristic appears

This mapping of the XOR difference in the input to the XOR difference in the output is called a differential characteristic. Hence the name, “differential” cryptanalysis.! We can now use this data to steal the secret key.! Given the cipher-text/plain-text pairs, we need to find one encrypted under our target key that satisfies one of the differential characteristics. To find that pair you need to do the following:

- Choose (or generate) one plain-text P, and produce another plain-text P^’=P⨁a , where a is an input differential (notice that the XOR difference of P and P’ is a!)

- Encrypt the two plain-texts (P,P’) to get (C,C’)

- If C⨁C’ = b, then you have a good pair!

If you know that a →b is the differential that suits your text pair, you now know what the potential input/output pairs are for the SBOX, because of thanks to the analysis we did earlier on. ! So now given these pairs, you can try different calculations for the key. You will likely have a couple possible keys, but one of them will definitely be the correct one.

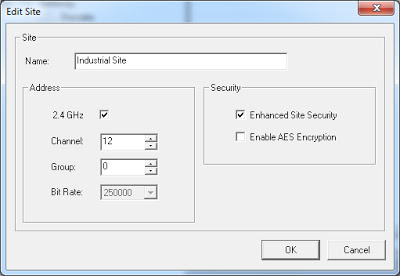

For example, let’s say we have the following setup:

The script that generated this output is available at the end of the post.

We need to select a plain-text/cipher-text pair and use the information associated to with them the pair to calculate the key. So for example let’s use, using (3,12) => (11,12), we can then calculate the K_0, K_1 in the following way:

Given then that (from the last operation of the cipher):

C=K_1⊕ S_o

We know that (because of how XOR works):

K_1=C⊕ S_o