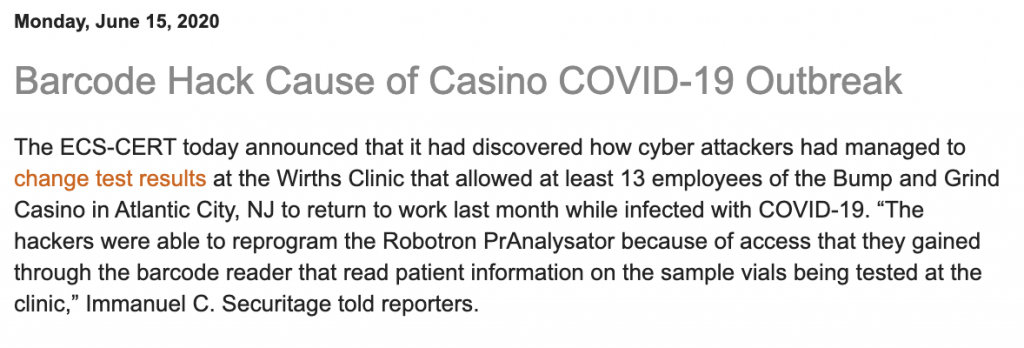

Several days ago I came across an interesting entry in the curious ‘ICS Future News’ blog run by Patrick Coyle.

Before anyone becomes alarmed, the description of this blog is crystal clear about its contents:

“News about control system security incidents that you might see in the not too distant future. Any similarity to real people, places or things is purely imaginary.”

IOActive provides research-fueled security services, so when we analyze cutting-edge technologies the goal is to stay one step ahead of malicious actors in order to reduce current and future risk.

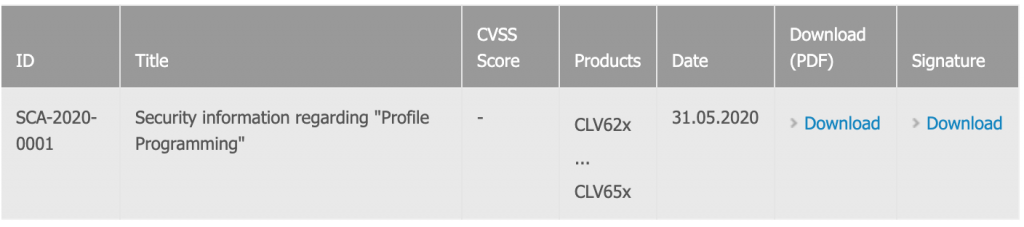

Although the casino barcode hack is made up, it turns out IOActive reported similar vulnerabilities to SICK months ago, resulting in the following advisory:

This blog post provides an introductory analysis of real attack vectors where custom barcodes could be leveraged to remotely attack ICS, including critical infrastructure.

Introduction

Barcode scanning is ubiquitous across sectors and markets. From retail companies to manufacturing, utilities to access control systems, barcodes are used every day to identify and track millions of assets at warehouses, offices, shops, supermarkets, industrial facilities, and airports.

Depending on the purpose and the nature of the items being scanned, there are several types of barcode scanners available on the market: CCD, laser, and image-based to name a few. Also, based on the characteristics of the environment, there are wireless, corded, handheld, or fixed-mount scanners.

In general terms, people are used to seeing this technology as part of their daily routine. The security community has also paid attention to these devices, mainly focusing on the fact that regular handheld barcode scanners are usually configured to act as HID keyboards. Under certain circumstances, it is possible to inject keystroke combinations that can compromise the host computer where the barcode scanner is connected. However, the barcode scanners in the industrial world have their own characteristics.

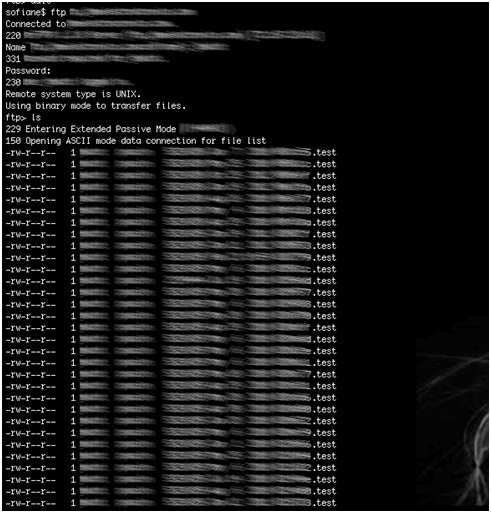

From the security perspective, the cyber-physical systems at manufacturing plants, airports, and retail facilities may initially look as though they are physically isolated systems. We all know that this is not entirely true, but reaching ICS networks should ideally involve several hops from other systems. In some deployments there is a direct path from the outside world to those systems: the asset being handled.

Depending on the industrial process where barcode scanners are deployed, we can envision assets that contain untrusted inputs (barcodes) from malicious actors, such as luggage at airports or parcels in logistics facilities.

In order to illustrate the issue, I will focus on the specific case I researched: smart airports.

Attack Surface of Baggage Handling Systems

Airports are really complex facilities where interoperability is a key factor. There is a plethora of systems and devices shared between different stakeholders. Passengers are also constrained in what they are allowed to carry.

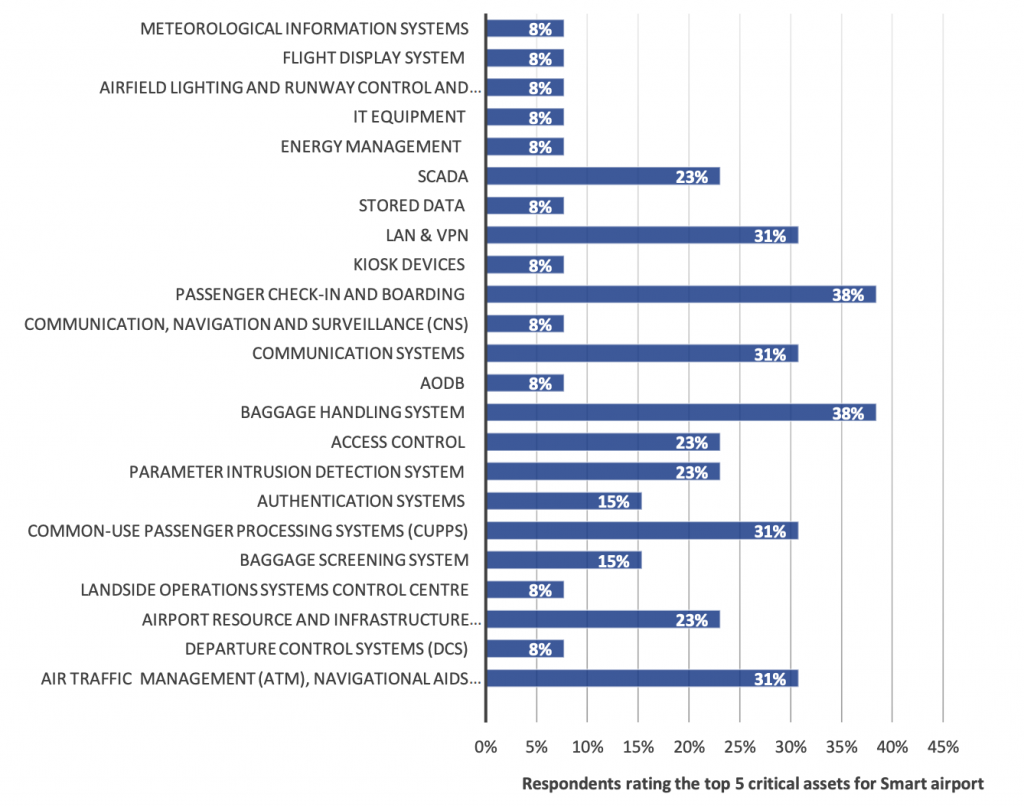

In 2016, ENISA published a comprehensive paper on securing smart airports. Their analysis provided a plethora of interesting details; among which was a ranking of the most critical systems for airport resilience.

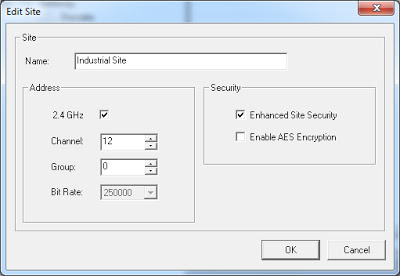

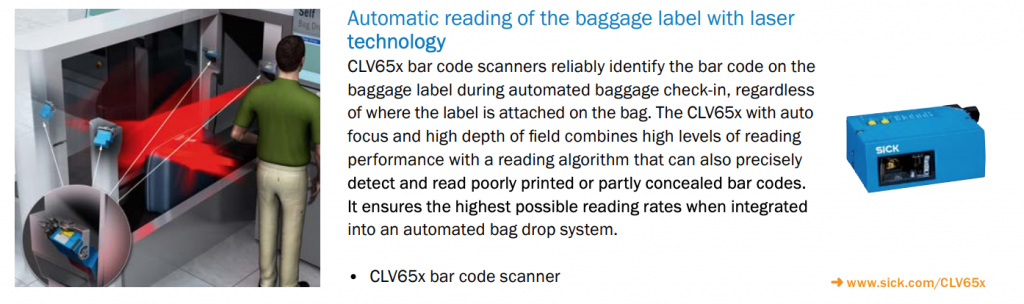

Most modern baggage handling systems, including self-service bag drop, rely on fixed-mount laser scanners to identify and track luggage tickets. Devices such as the SICK CLV65X are typically deployed at the automatic baggage handling systems and baggage self-drop systems operating at multiple airports.

The Baggage Connection

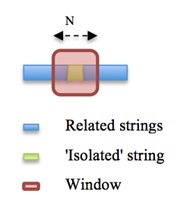

More specifically, SICK CLV62x-65x barcode scanners support ‘profile programming’ barcodes. These are custom barcodes that, once scanned, directly modify settings in a device, without involving a host computer. This functionality relies in custom CODE128 barcodes that trigger certain actions in the device and can be leveraged to change configuration settings.

A simple search on YouTube results in detailed walkthroughs of some of the largest airport’s baggage handling systems, which are equipped with SICK devices.

Custom CODE128 barcodes do not implement any kind of authentication, so once they are generated they will work on any SICK device that supports them. As a result, an attacker that is able to physically present a malicious profile programming barcode to a device can either render it inoperable or change its settings to facilitate further attacks.

We successfully tested this attack against a SICK CLV650 device.

Technical Details

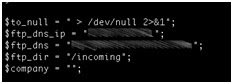

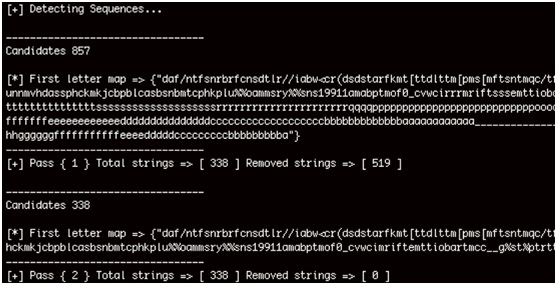

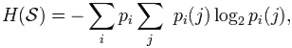

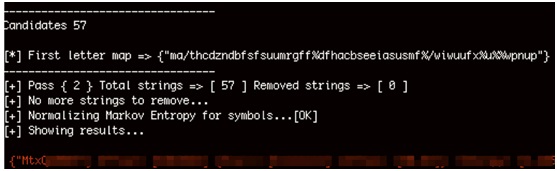

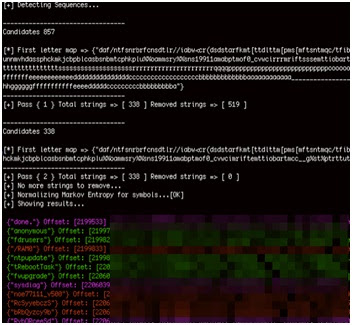

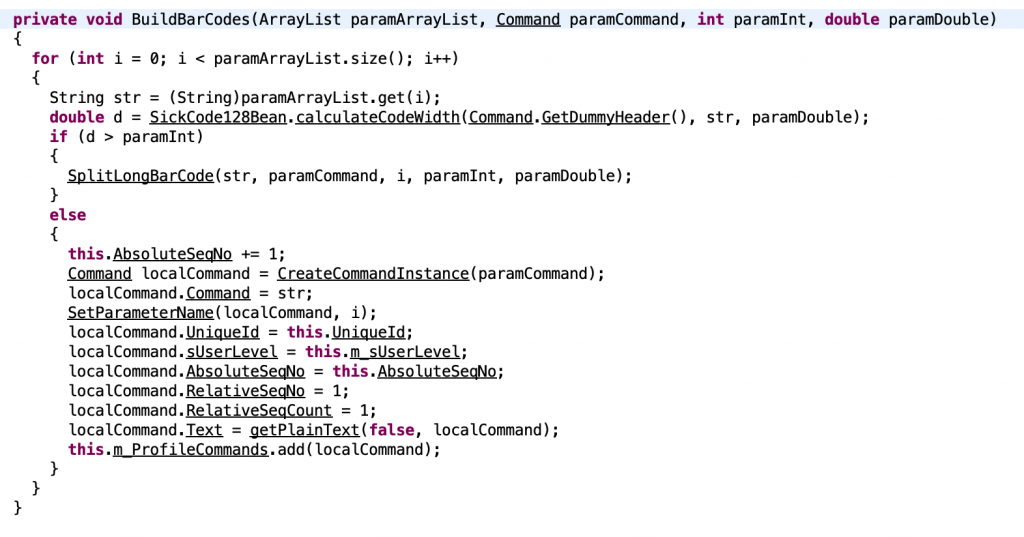

IOActive reverse engineered the logic used to generate profile programming barcodes (firmware versions 6.10 and 3.10) and verified that they are not bound to specific devices.

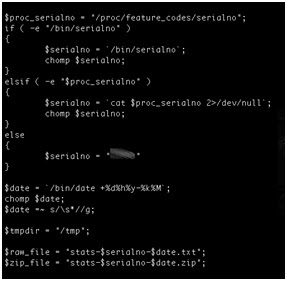

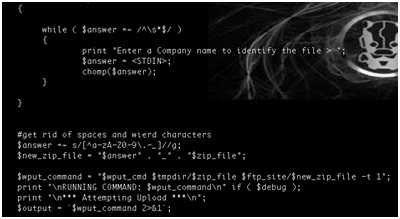

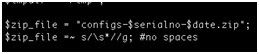

The following method in ProfileCommandBuilder.class demonstrates the lack of authentication when building profile programming barcodes.

[caption id="attachment_6882" align="alignnone" width="686"]

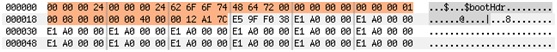

Analyzing CLV65x_V3_10_20100323.jar and CLV62x_65x_V6.10_STD

Analyzing CLV65x_V3_10_20100323.jar and CLV62x_65x_V6.10_STDConclusion

The attack vector described in this blog post can be exploited in various ways, across multiple industries and will be analyzed in future blog posts. Also, according to IOActive’s experience, it is likely that similar issues affect other barcode manufacturers.

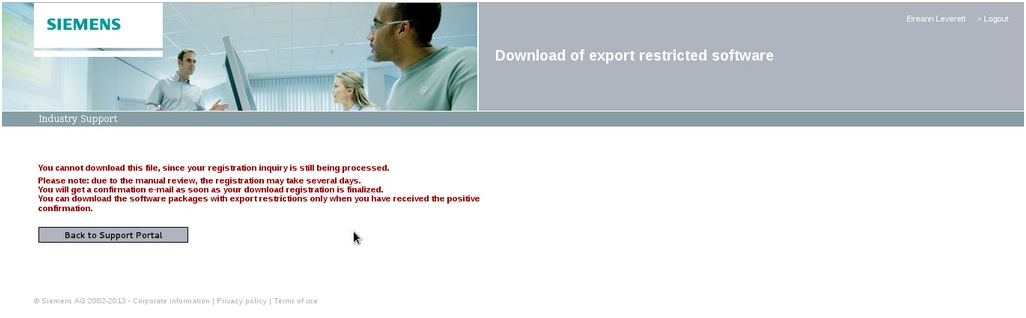

IOActive reported these potential issues to SICK via its PSIRT in late February 2020. SICK handled the disclosure process in a diligent manner and I would like to publicly thank SICK for its coordination and prompt response in providing its clients with proper mitigations.