Ransomware attacks have boomed during the last few years, becoming a preferred method for cybercriminals to profit by encrypting victim information and demanding a ransom to get the information back. The primary ransomware target has always been information. Victims who have no backup of that information panic and feel forced to pay for its return.


Tag: cyber attack

Security Theater and the Watch Effect in Third-party Assessments

Before the facts were in, nearly every journalist and salesperson in infosec was thinking about how to squeeze lemonade from the Equifax breach. Let’s be honest – it was and is a big breach. There are lessons to be learned, but people seemed to have the answers before the facts were available.

At IOActive we guard against making on-the-spot assumptions. We consider and analyze the actual threats, ever mindful of the “Watch Effect.” The Watch Effect can be simply explained: you wear a watch long enough, you can’t even feel it.

The industry-wide point here is: Everyone is asking everyone else for proof that they’re secure.

Well, sure, they do mean something. In the case of questionnaires, you are asking a company to perform a massive amount of tedious work, and, if they respond with those questions filled in, and they don’t make gross errors or say “no” where they should have said “yes”, that probably counts for something.

But the question is how much do we really know about a company’s security by looking at their responses to a security questionnaire?

The answer is, “not much.”

At IOActive we conduct full, top-down security reviews of companies that include business risk, crown-jewel defense, and every layer that these pieces touch. Because we know how attackers get in, we measure and test how effective the company is at detecting and responding to cyber events – and use this comprehensive approach to help companies understand how to improve their ability to prevent, detect, and ever so critically, RESPOND to intrusions. Part of that approach includes a series of interviews with everyone from the C-suite to the people watching logs. What we find is frightening.

We are often days or weeks into an assessment before we discover a thread to pull that uncovers a major risk, whether that thread comes from a technical assessment or a person-to-person interview or both.

That’s days—or weeks—of being onsite with full access to the company as an insider.

Here’s where the Watch Effect comes in. Many of the companies have no idea what we’re uncovering or how bad it is because of the Watch Effect. They’re giving us mostly standard answers about their day-to-day, the controls they have in place, etc. It’s not until we pull the thread and start probing technically – as an attacker – that they realize they’re wearing a broken watch.

Then they look down at a set of catastrophic vulnerabilities on their wrist and say, “Oh. That’s a problem.”

So, back to the questionnaire…

If it takes days or weeks for an elite security firm to uncover these vulnerabilities onsite with full cooperation during an INTERNAL assessment, how do you expect to uncover those issues with a form?

You can’t. And you should stop pretending you can. Questionnaires depend far too much upon the capability and knowledge of the person or team filling them out, and are often completed with only partial knowledge. How would one know if a firewall rule were updated improperly to “any/any” in the last week if it is not tested and verified?
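As a rough illustration of the kind of check a questionnaire cannot substitute for, the sketch below scans an exported rule set for allow rules whose source and destination are both unrestricted. The `Rule` fields, the rule names, and the set of "any" tokens are assumptions made for this example; real firewall exports differ by vendor and would need their own parser.

```python
from dataclasses import dataclass

# Tokens treated as "unrestricted" in this sketch (an assumption; vendors vary).
ANY_TOKENS = {"any", "*", "0.0.0.0/0", "::/0"}


@dataclass(frozen=True)
class Rule:
    """Hypothetical, vendor-neutral view of one firewall rule."""
    name: str
    action: str        # "allow" or "deny"
    source: str
    destination: str
    service: str = "any"


def is_any_any(rule: Rule) -> bool:
    """True when an allow rule leaves both source and destination unrestricted."""
    return (
        rule.action.lower() == "allow"
        and rule.source.lower() in ANY_TOKENS
        and rule.destination.lower() in ANY_TOKENS
    )


def flag_permissive(rules):
    """Return the names of rules that need a human to look at them."""
    return [rule.name for rule in rules if is_any_any(rule)]


# Illustrative rule set, not taken from any real device.
rules = [
    Rule("web-in", "allow", "any", "10.0.1.10/32", "tcp/443"),
    Rule("temp-debug", "allow", "any", "any"),
    Rule("default-deny", "deny", "any", "any"),
]

print(flag_permissive(rules))
```

Run on a schedule against the live configuration, a check like this catches a rule that changed last week; a form filled in last quarter does not.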

To be clear, the problem isn’t that third-party assessments only give 2/10 in security assessment value. The problem is that executives THINK they’re getting 6/10, or 9/10.

It’s that disconnect that’s causing the harm.

Eventually, companies will figure this out. In the meantime, the breaches won’t stop.

Until then, we as technical practitioners can do our best to convince our clients and prospects to understand the value these types of cursory, external glances at a company provide. Very little. So, let’s prioritize appropriately.

Cryptocurrency and the Interconnected Home

Several aspects of cryptocurrency are not getting the attention they deserve. To start, the very thing that attracts people to cryptocurrency is also the thing that is overlooked as a challenge. Cryptocurrencies are not backed by governments or institutions. The transactions allow the trader or investor to operate with anonymity. In the last year we have seen a massive increase in cyber bad guys hiding behind these inconspicuous transactions – ransomware demanding payment in bitcoin; bitcoin ATMs being used by various dealers to effectively launder money.

Because there are few regulations governing crypto trading, we cannot see if cryptocurrency is being used to fund criminal or terrorist activity. There is an ancient funds transfer system called Hawala, designed to avoid banks and ledgers. Hawala is believed to be the method by which terrorists are able to move money, anonymously, across borders with no governmental controls. Sound like what’s happening with cryptocurrency? There’s an old saying in law enforcement – follow the money. Good luck with that one.

Many people don’t realize that cryptocurrencies depend on multiple miners. This allows the processing to be spread out and decentralized. Miners validate the integrity of the transactions and, as a result, receive a “block reward” for their efforts. But these rewards are cut in half every 210,000 blocks. The bitcoin block reward was 50 BTC when it first started in 2009; today it’s 12.5. There are about 1.5 million bitcoins left to mine before the reward halves again.
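The halving schedule is simple enough to work through in a few lines. The sketch below computes the block reward at a given block height and sums the rewards across all halving eras. It assumes rewards are tracked in whole satoshis (1 BTC = 100,000,000 satoshis) and halved with integer division; the function and constant names are made up for this example.

```python
SATOSHIS_PER_BTC = 100_000_000
HALVING_INTERVAL = 210_000               # blocks between halvings
INITIAL_REWARD = 50 * SATOSHIS_PER_BTC   # 50 BTC, in satoshis


def block_reward(height: int) -> int:
    """Block reward in satoshis for the block at the given height."""
    halvings = height // HALVING_INTERVAL
    # Integer right shift halves the reward once per era and rounds down.
    return INITIAL_REWARD >> halvings


def total_supply() -> int:
    """Sum of all block rewards, in satoshis, until the reward rounds to zero."""
    total = 0
    halvings = 0
    while (reward := INITIAL_REWARD >> halvings) > 0:
        total += reward * HALVING_INTERVAL
        halvings += 1
    return total


print(block_reward(0) / SATOSHIS_PER_BTC)        # first era
print(block_reward(420_000) / SATOSHIS_PER_BTC)  # third era
print(total_supply() / SATOSHIS_PER_BTC)         # just under 21 million
```

Because each era pays out half as much as the one before, the total is a geometric series that converges just below 21 million BTC rather than reaching it exactly.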

This limit on total bitcoins leads to an interesting issue – as the reward decreases, miners will switch their attention from bitcoin to other cryptocurrencies. This will reduce the number of miners, therefore making the network more centralized. This centralization creates greater opportunity for cyber bad guys to “hack” the network and wreak havoc, or for the remaining miners to monopolize the mining.

At some point, and we are already seeing the early stages of this, governments and banks will demand to implement more control. They will start to produce their own cryptocurrency. Would you trust these cryptos? What if your bank offered loans in Bitcoin, Ripple or Monero? Would you accept and use this type of loan?

Because it’s a limited resource, what happens when we reach the 21 million bitcoin limit? Unless we change the protocols, this event is estimated to happen by 2140. My first response – I don’t think bitcoins will be at the top of my concerns list in 2140.

The Interconnected Home

So what does crypto-mining malware or mineware have to do with your home? It’s easy enough to notice if your laptop is being overused – the device slows down, the battery runs down quickly. How can you tell if your fridge or toaster is compromised? With your smart home now interconnected, what happens if the cyber bad guys operate there? All a cyber bad guy needs is electricity, internet and CPU time. Soon your fridge will charge your toaster a bitcoin for bread and butter. How do we protect our unmonitored devices from this mineware? Who is responsible for ensuring the right level of security on your home devices to prevent this?

Smart home vulnerabilities present a real and present danger. We have already seen baby monitors, robots, and home security products, to name a few, all compromised. Most by IOActive researchers. There can be many risks that these compromises introduce to the home, not just around cryptocurrency. Think about how the interconnected home operates. Any device that’s SMART now has the three key ingredients to provide the cyber bad guy with everything he needs – internet access, power and processing.

Firstly, I can introduce my mineware via a compromised mobile phone and start to exploit the processing power of your home devices to mine bitcoin. How would you detect this? When could you detect this? At the end of the month when you get an electricity bill. Instead of 50 pounds a month, it’s now 150 pounds. But how do you diagnose the issue? You complain to the power company. They show you the usage. It’s correct. Your home IS consuming that power.

IOActive has proven these attack vectors over and over. We know this is possible and we know this is almost impossible to detect. Remember, a cyber bad guy makes several assessments when deciding on an attack – the risk of detection, the reward for the effort, and the penalty for capture. The risk of detection is low, like very low. The reward? Well, you could be mining blocks for months without stopping; that’s tens of thousands of dollars. And the penalty… what’s the penalty for someone hacking your toaster? The impact is measurable to the homeowner. This is real, and who’s to say it’s not happening already? Ask your fridge!!

What’s the Answer – Avoid Using Smart Home Devices Altogether?

In the meantime, consider the entry point for most cyber bad guys. Generally, this is your desktop, laptop or mobile device. Therefore, ensure you have suitable security products running on these devices, make sure they are patched to the correct levels, be conscious of the websites you are visiting. If you control the available entry points, you will go a long way to protecting your home.

WannaCry vs. Petya: Keys to Ransomware Effectiveness

With WannaCry and now Petya we’re beginning to see how and why new strains of ransomware worms are evolving and growing far more effective than previous versions.

I think there are 3 main factors: Propagation, Payload, and Payment.*

- Propagation: You ideally want to be able to spread using as many different types of techniques as you can.

- Payload: Once you’ve infected the system you want to have a payload that encrypts properly, doesn’t have any easy bypass to decryption, and clearly indicates to the victim what they should do next.

- Payment: You need to be able to take in money efficiently and then actually decrypt the systems of those who pay. This piece is crucial, otherwise people will quickly learn they can’t get their files back even if they do pay and be inclined to just start over.

WannaCry vs. Petya

WannaCry used SMB as its main spreading mechanism, and its payment infrastructure lacked the ability to scale. It also had a kill switch, which was famously triggered and halted further propagation.

Petya, on the other hand, appears to be much more effective at spreading since it’s using both EternalBlue and credential sharing / PSEXEC to infect more systems. This means it can harvest working credentials and spread even if the new targets aren’t vulnerable to an exploit.

[NOTE: This is early analysis so some details could turn out to be different as we learn more.]

What remains to be seen is how effective the payload and payment infrastructures are on this one. It’s one thing to encrypt files, but it’s something else entirely to decrypt them.

The other important unknown at this point is if Petya is standalone or a component of a more elaborate attack. Is what we’re seeing now intended to be a compelling distraction?

There have been some reports indicating these exploits were utilized by a sophisticated threat actor against the same targets prior to WannaCry. So it’s possible that WannaCry was poorly designed on purpose. Either way, we’re advising clients to investigate if there is any evidence of a more strategic use of these tools in the weeks leading up to Petya hitting.

*Note: I’m sure there are many more thorough ways to analyze the efficacy of worms. These are just three that came to mind while reading about Petya and thinking about it compared to WannaCry.

Post #WannaCry Reaction #127: Do I Need a Pen Test?

In the wake of WannaCry and other recent events, everyone from the Department of Homeland Security to my grandmother is recommending penetration tests as a silver bullet to prevent falling victim to the next cyberattack. But a penetration test is not a silver bullet, nor is it universally what is needed for improving the security posture of an organization. There are several key factors to consider. So I thought it might be good to review the difference between a penetration test and a vulnerability assessment since this is a routine source of confusion in the market. In fact, I’d venture to say that while there is a lot of good that comes from a penetration test, what people actually more often need is a vulnerability assessment.

First, let’s get the vocabulary down:

Vulnerability Assessments

Vulnerability Assessments are designed to yield a prioritized list of vulnerabilities and are generally best for organizations that understand they are not where they want to be in terms of security. The customer already knows they have issues and need help identifying and prioritizing them.

With a vulnerability assessment, the more issues identified the better, so naturally, a white box approach should be embraced when possible. The most important deliverable of the assessment is a prioritized list of vulnerabilities identified (and often information on how best to remediate).

Penetration Tests

Penetration Tests are designed to achieve a specific, attacker-simulated goal and should be requested by organizations that are already at their desired security posture. A typical goal could be to access the contents of the prized customer database on the internal network or to modify a record in an HR system.

The deliverable for a penetration test is a report on how security was breached in order to reach the agreed-upon goal (and often information on how best to remediate).

No organization has an unlimited budget for security. Every security dollar spent is a trade-off. For organizations that do not have a highly developed security program in place, vulnerability assessments will provide better value in terms of knowing where you need to improve your security posture even though pen tests are generally a less expensive option. A pen test is great when you know what you are looking for or want to test whether a remediation is working and has solved a particular vulnerability.

Here is a quick chart to help determine what your organization may need.

| | VULNERABILITY ASSESSMENT | PENETRATION TEST |
| --- | --- | --- |
| Organizational Security Program Maturity Level | Low to Medium. Usually requested by organizations that already know they have issues, and need help getting started. | High. The organization believes their defenses to be strong, and wants to test that assertion. |
| Goal | Attain a prioritized list of vulnerabilities in the environment so that remediation can occur. | Determine whether a mature security posture can withstand an intrusion attempt from an advanced attacker with a specific goal. |
| Focus | Breadth over depth. | Depth over breadth. |

So what now?

Most security programs benefit from utilizing some combination of security techniques. These can include any number of tasks, including penetration tests, vulnerability assessments, bug bounties, white/grey/black box testing, code review, and/or red/blue/purple team exercises.

We’ll peel back the different tools and how you might use them in a future post. Until then, take a look at your needs and make sure the steps you take in the wake of WannaCry and other security incidents are more than just reacting to the crisis of the week.

Hacking Robots Before Skynet

Our research uncovered both critical- and high-risk cybersecurity problems in many robot features. Some of them could be directly abused, and others introduced severe threats. Examples of some of the common robot features identified in the research as possible attack threats are as follows:

- Microphones and Cameras

- External Services Interaction

- Remote Control Applications

- Modular Extensibility

- Network Advertisement

- Connection Ports

Remotely Disabling a Wireless Burglar Alarm

Maritime Security: Hacking into a Voyage Data Recorder (VDR)

There are multiple facilities, devices, and systems located on ports and vessels and in the maritime domain in general, which are crucial to maintaining safe and secure operations across multiple sectors and nations.

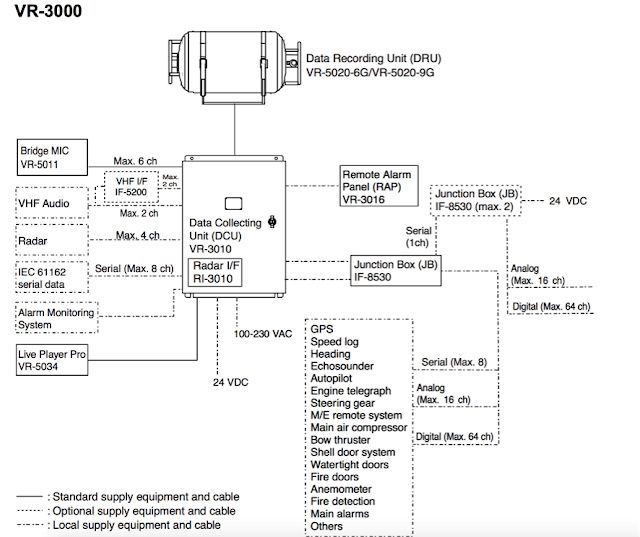

This blog post describes IOActive’s research related to one type of equipment usually present in vessels, Voyage Data Recorders (VDRs). In order to understand a little bit more about these devices, I’ll detail some of the internals and vulnerabilities found in one of these devices, the Furuno VR-3000.

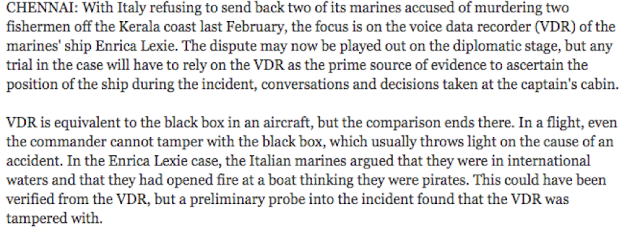

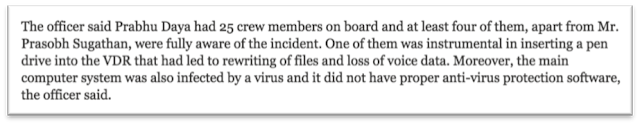

A VDR is equivalent to an aircraft’s ‘black box’ (http://www.imo.org/en/OurWork/Safety/Navigation/Pages/VDR.aspx). These devices record crucial data, such as radar images, position, speed, audio in the bridge, etc. This data can be used to understand the root cause of an accident.

Several years ago, piracy acts were on the rise. Multiple cases were reported almost every day. As a result, nation-states along with fishing and shipping companies decided to protect their fleet, either by sending in the military or hiring private physical security companies.

Curiously, Furuno was the manufacturer of the VDR that was corrupted in this incident. A Kerala High Court document covers this fact: http://indiankanoon.org/doc/187144571/ However, we cannot say whether the model the Enrica Lexie was equipped with was the VR-3000. Just as a side note, the vessel was built in 2008 and the Furuno VR-3000 was apparently released in 2007.

During that process, an interesting detail was reported in several Indian newspapers.

http://www.thehindu.com/news/national/tamil-nadu/voyage-data-recorder-of-prabhu-daya-may-have-been-tampered-with/article2982183.ece

From a security perspective, it seems clear VDRs pose a really interesting target. If you either want to spy on a vessel’s activities or destroy sensitive data that may put your crew in a difficult position, VDRs are the key.

Understanding a VDR’s internals can provide authorities, or third-parties, with valuable information when performing forensics investigations. However, the ability to precisely alter data can also enable anti-forensics attacks, as described in the real incident previously mentioned.

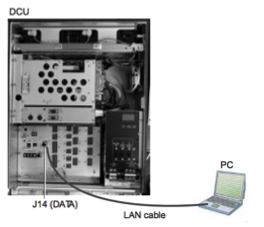

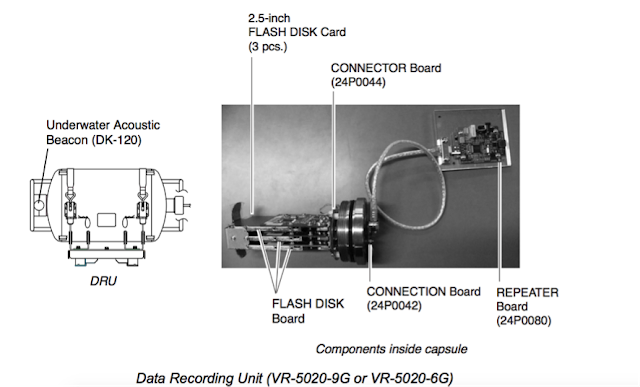

Basically, inside the Data Collecting Unit (DCU) is a Linux machine with multiple communication interfaces, such as USB, IEEE1394, and LAN. Also inside the DCU is a backup HDD that partially replicates the data stored on the Data Recording Unit (DRU). The DRU is hardened against physical damage in order to survive an accident. It also contains a Flash disk to store data for a 12-hour period. This unit stores all essential navigation and status data, such as bridge conversations, VHF communications, and radar images.

The International Maritime Organization (IMO) recommends that all VDR and S-VDR systems installed on or after 1 July 2006 be supplied with an accessible means for extracting the stored data from the VDR or S-VDR to a laptop computer. Manufacturers are required to provide software for extracting data, instructions for extracting data, and cables for connecting between a recording device and computer.

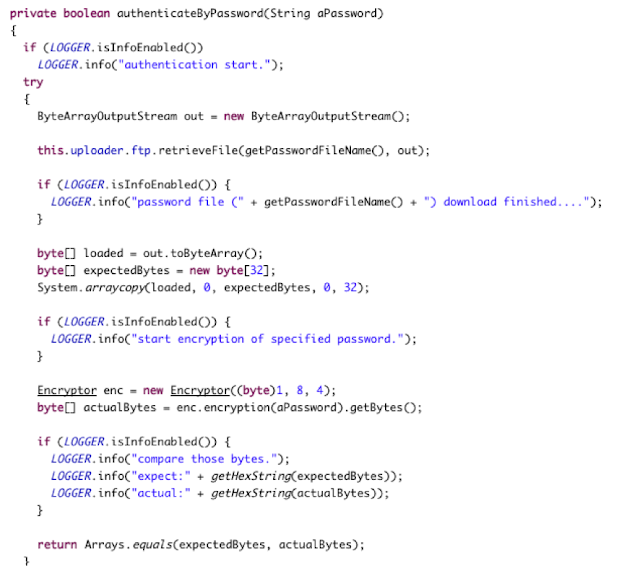

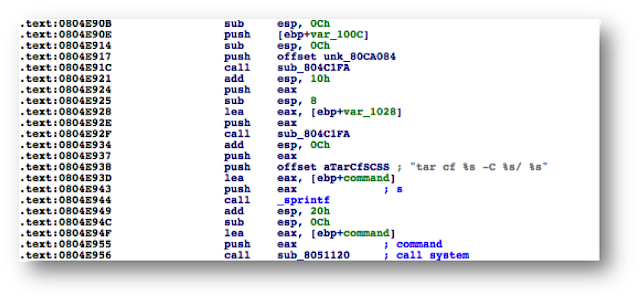

Take this function, extracted from the Playback software, as an example of how not to perform authentication. For those who are wondering what ‘Encryptor’ is, just a word: Scytale.

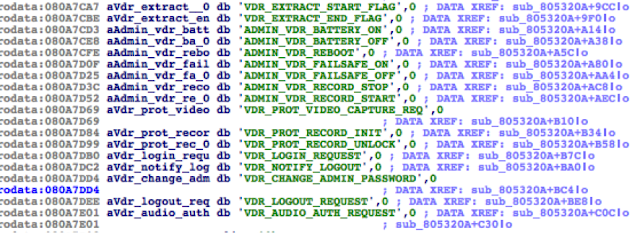

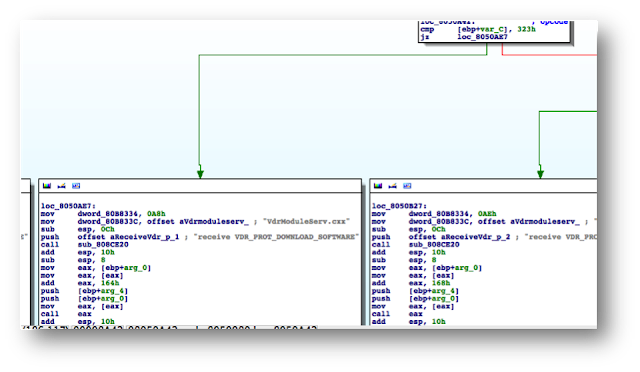
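For readers who have not met the scytale mentioned above: it is a classical transposition cipher in which the only "key" is the number of columns the text is written across. The sketch below is a textbook version, not a reconstruction of the Playback software's routine (which is not reproduced here); the function names and the padding character are choices made for this example. It shows why the scheme offers little protection: trying every plausible column count recovers the plaintext.

```python
def scytale_encrypt(plaintext: str, cols: int, pad: str = "_") -> str:
    """Write the text in rows of `cols` characters, then read it column by column."""
    remainder = len(plaintext) % cols
    if remainder:
        plaintext += pad * (cols - remainder)  # fill the last row
    return "".join(plaintext[c::cols] for c in range(cols))


def scytale_decrypt(ciphertext: str, cols: int) -> str:
    """Invert scytale_encrypt for a ciphertext whose length is a multiple of cols."""
    rows = len(ciphertext) // cols
    return "".join(ciphertext[r::rows] for r in range(rows))


def brute_force(ciphertext: str) -> dict:
    """Try every column count that divides the ciphertext length."""
    return {
        cols: scytale_decrypt(ciphertext, cols)
        for cols in range(2, len(ciphertext))
        if len(ciphertext) % cols == 0
    }


secret = scytale_encrypt("ATTACKATDAWN", 4)
print(secret)
for cols, guess in brute_force(secret).items():
    print(cols, guess)
```

The key space is no larger than the message length, so an attacker holding the ciphertext needs only a handful of guesses.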

VR-3000’s firmware can be updated with the help of Windows software known as ‘VDR Maintenance Viewer’ (client-side), which is proprietary Furuno software.

The VR-3000 firmware (server-side) contains a binary that implements part of the firmware update logic: ‘moduleserv’

This service listens on 10110/TCP.

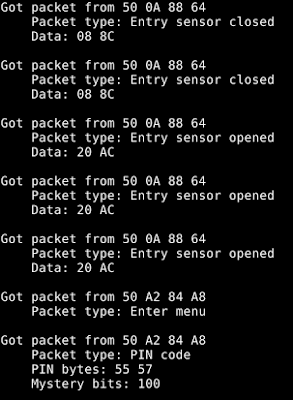
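Operators who want to know whether equipment on their own network exposes this service can start with a plain TCP connection test. The sketch below is a minimal reachability check using Python's standard `socket` module; the function name is made up for this example, and any address passed to it should be a device you are authorized to test.

```python
import socket


def is_tcp_port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False
```

For example, `is_tcp_port_open("192.0.2.10", 10110)` would report whether the port is reachable on that host (the address is a documentation placeholder). A successful connection only shows that something is listening; it does not identify the service.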

Internally, both server (DCU) and client-side (VDR Maintenance Viewer, LivePlayer, etc.) use a proprietary session-oriented, binary protocol. Basically, each packet may contain a chain of ‘data units’, which, according to their type, will contain different kinds of data.
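To make the idea of a chain of typed data units concrete, here is a generic sketch of how such a chain can be parsed. The layout used (a 1-byte type, a 2-byte big-endian length, then the payload) is purely an assumption for illustration and is not the Furuno wire format, which is not documented here; the type values in the example are likewise invented.

```python
import struct

# Hypothetical unit header: 1-byte type, 2-byte big-endian payload length.
UNIT_HEADER = struct.Struct(">BH")


def pack_unit(unit_type: int, payload: bytes) -> bytes:
    """Serialize one data unit in the hypothetical layout."""
    return UNIT_HEADER.pack(unit_type, len(payload)) + payload


def parse_units(packet: bytes):
    """Walk a packet and return a list of (type, payload) tuples."""
    units = []
    offset = 0
    while offset < len(packet):
        if len(packet) - offset < UNIT_HEADER.size:
            raise ValueError("truncated data unit header")
        unit_type, length = UNIT_HEADER.unpack_from(packet, offset)
        offset += UNIT_HEADER.size
        # Never trust the declared length beyond what was actually received.
        if len(packet) - offset < length:
            raise ValueError("data unit length exceeds packet size")
        units.append((unit_type, packet[offset:offset + length]))
        offset += length
    return units


packet = pack_unit(0x01, b"session") + pack_unit(0x02, b"")
print(parse_units(packet))
```

The two bounds checks matter in any parser of this shape: a server that trusts a declared length without comparing it to the bytes it actually holds is a common source of memory-safety and logic bugs.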

At this point, attackers could modify arbitrary data stored on the DCU in order to, for example, delete certain conversations from the bridge, delete radar images, or alter speed or position readings. Malicious actors could also use the VDR to spy on a vessel’s crew as VDRs are directly connected to microphones located, at a minimum, in the bridge.

Before IMO’s resolution MSC.233(90) [3], VDRs did not have to comply with security standards to prevent data tampering. Taking into account that we have demonstrated these devices can be successfully attacked, any data collected from them should be carefully evaluated and verified to detect signs of potential tampering.

A Wake-up Call for SATCOM Security

- Aerospace

- Maritime

- Military and governments

- Emergency services

- Industrial (oil rigs, gas, electricity)

- Media

Practical and cheap cyberwar (cyber-warfare): Part I

The idea of this post is to raise awareness. I want to show how vulnerable some industrial, oil, and gas installations currently are and how easy it is to attack them. Another goal is to pressure vendors to produce more secure devices and to speed up the patching process once vulnerabilities are reported.