To know if your system is compromised, you need to find everything that could run or otherwise change state on your system and verify its integrity (that is, check that the state is what you expect it to be).

“Finding everything” is a bold statement, particularly in the realm of computer security, rootkits, and advanced threats. Is it possible to find everything? Sadly, the short answer is no, it’s not. Strangely, the long answer is yes, it is.

By defining the execution environment at any point in time, predominantly through the use of hardware-based hypervisor or virtualization facilities, you can verify the integrity of that specific environment using cryptographically secure hashing.
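As a rough illustration of the hashing step, here is a minimal sketch that hashes a memory image page by page and reports which pages differ from a known-good baseline. The 4 KiB page size, the function names, and the idea of holding the image as a `bytes` object are assumptions made for this example; it is not the implementation used by any particular tool.

```python
import hashlib

PAGE_SIZE = 4096  # assumed page size; real systems may also use larger pages


def hash_pages(image: bytes, page_size: int = PAGE_SIZE) -> list[str]:
    """Return one SHA-256 hex digest per page of a memory image."""
    return [
        hashlib.sha256(image[offset:offset + page_size]).hexdigest()
        for offset in range(0, len(image), page_size)
    ]


def modified_pages(baseline: list[str], current: list[str]) -> list[int]:
    """Return the indices of pages whose digest differs from the baseline."""
    changed = [i for i, (old, new) in enumerate(zip(baseline, current)) if old != new]
    # Pages present on only one side also count as changes.
    shorter, longer = sorted((len(baseline), len(current)))
    changed.extend(range(shorter, longer))
    return changed
```

Comparing per-page digests rather than one digest for the whole image narrows a mismatch down to the page that changed, which is usually where the investigation starts.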

“DKOM is one of the methods commonly used and implemented by Rootkits, in order to remain undetected, since this the main purpose of a rootkit.” – Harry Miller

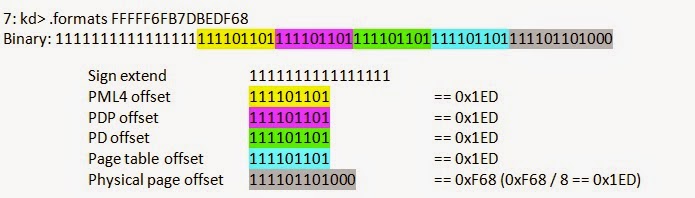

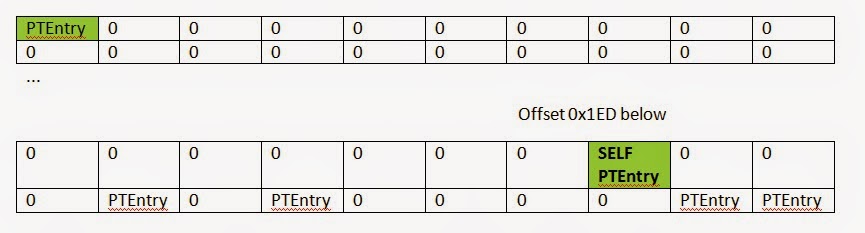

Even if an attacker makes significant modifications and hides from standard logical object scanning, there is no way to evade page-table detection without significantly patching the OS fault handler. A major benefit of a DKOM rootkit is that it avoids code patches; that level of modification is easily detected by integrity checks and runs counter to the goal of DKOM. DKOM is a codeless rootkit technique: it runs code without patching the OS to hide itself, and it patches only data pointers.

IOActive released several versions of this process detection technique. We also built it into our memory integrity checking tools, BlockWatch™ and The Memory Cruncher™.

The magic of this technique comes from the tendency of operating systems (at least Windows, Linux, and BSD) to map their page tables into virtual memory. That way they can edit PTEs through virtual addresses instead of physical memory addresses.
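To make the self-mapping idea concrete, here is a small sketch of the address arithmetic for x86-64 four-level paging. It assumes a self-referencing PML4 entry at index 0x1ED, the fixed slot older 64-bit Windows builds used (newer builds randomize it); that index and the function names are assumptions for illustration, not something the text above specifies.

```python
SELF_MAP_INDEX = 0x1ED  # assumption: fixed self-reference slot on older 64-bit Windows
VA_MASK = (1 << 48) - 1  # 48 bits of virtual address under four-level paging


def canonical(address: int) -> int:
    """Sign-extend bit 47 so the result is a canonical 64-bit address."""
    address &= VA_MASK
    if address & (1 << 47):
        address |= 0xFFFF << 48
    return address


def pte_base(self_index: int = SELF_MAP_INDEX) -> int:
    """Start of the virtual window through which all PTEs are visible."""
    return canonical(self_index << 39)


def pte_address(va: int, self_index: int = SELF_MAP_INDEX) -> int:
    """Virtual address of the PTE that maps `va` via the self-referencing entry."""
    page_number = (va & VA_MASK) >> 12  # one 8-byte PTE per 4 KiB page
    return canonical((self_index << 39) | (page_number << 3))
```

Under these assumptions, `pte_address(pte_base())` gives the start of the page directory entries, because applying the same translation to the PTE window itself walks one level up the paging hierarchy.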

Shane Macaulay

| Site | Accounts | % |
| --- | --- | --- |
| Facebook | 308 | 17.26457 |
| Google | 229 | 12.83632 |
| Orbitz | 182 | 10.20179 |
| WashingtonPost | 149 | 8.352018 |
| Twitter | 108 | 6.053812 |
| Plaxo | 93 | 5.213004 |
| LinkedIn | 65 | 3.643498 |
| Garmin | 45 | 2.522422 |
| MySpace | 44 | 2.466368 |
| Dropbox | 44 | 2.466368 |
| NYTimes | 36 | 2.017937 |
| NikePlus | 23 | 1.289238 |
| Skype | 16 | 0.896861 |
| Hulu | 13 | 0.7287 |
| Economist | 11 | 0.616592 |
| Sony Entertainment Network | 9 | 0.504484 |
| Ask | 3 | 0.168161 |
| Gartner | 3 | 0.168161 |
| Travelers | 2 | 0.112108 |
| Naymz | 2 | 0.112108 |
| Posterous | 1 | 0.056054 |

| Name | Email | Found account on site |
| --- | --- | --- |
| Robert Abrams | robert.abrams@us.army.mil | orbitz.com, washingtonpost.com |
| Jamos Boozer | james.boozer@us.army.mil | orbitz.com, facebook.com |
| Vincent Brooks | vincent.brooks@us.army.mil | facebook.com, linkedin.com |
| James Eggleton | james.eggleton@us.army.mil | plaxox.com |
| Reuben Jones | reuben.jones@us.army.mil | plaxo.com, washingtonpost.com |
| David Quantock | david-quantock@us.army.mil | twitter.com, orbitz.com, plaxo.com |
| Dave Halverson | dave.halverson@conus.army.mil | linkedin.com |
| Jo Bourque | jo.bourque@us.army.mil | washingtonpost.com |
| Kev Leonard | kev-leonard@us.army.mil | facebook.com |
| James Rogers | james.rogers@us.army.mil | plaxo.com |
| William Crosby | william.crosby@us.army.mil | linkedin.com |
| Anthony Cucolo | anthony.cucolo@us.army.mil | twitter.com, orbitz.com, skype.com, plaxo.com, washingtonpost.com, linkedin.com |
| Genaro Dellrocco | genaro.dellarocco@msl.army.mil | linkedin.com |
| Stephen Lanza | stephen.lanza@us.army.mil | skype.com, plaxo.com, nytimes.com |
| Kurt Stein | kurt-stein@us.army.mil | orbitz.com, skype.com |

The idea of this post is to raise awareness. I want to show how vulnerable some industrial, oil, and gas installations currently are and how easy it is to attack them. Another goal is to pressure vendors to produce more secure devices and to speed up the patching process once vulnerabilities are reported.

| Version numbers | Hosts Found | Vulnerable (SSH Key) |
| --- | --- | --- |
| 1.8-5a | 1 | Yes |
| 1.8-6 | 2 | Yes |
| 2.0-0 | 110 | Yes |
| 2.0-0_a01 | 1 | Yes |
| 2.0-1 | 68 | Yes |
| 2.0-2 (patched) | 50 | No |

As a penetration tester, I encounter interesting problems with network devices and software. The most common problems that I notice in my work are configuration issues. In today’s security environment, we can accept that a zero-day exploit results in system compromise because details of the vulnerability were unknown earlier. But, what about security issues and problems that have been around for a long time and can’t seem to be eradicated completely? I believe the existence of these types of issues shows that too many administrators and developers are not paying serious attention to the general principles of computer security. I am not saying everyone is at fault, but many people continue to make common security mistakes. There are many reasons for this, but the major ones are:

Here are a number of examples to support this discussion:

The majority of network devices can be accessed using Simple Network Management Protocol (SNMP) and community strings that typically act as passwords to extract sensitive information from target systems. In fact, weak community strings are used in too many devices across the Internet. Tools such as snmpwalk and snmpenum make the process of SNMP enumeration easy for attackers. How difficult is it for administrators to change default SNMP community strings and configure more complex ones? Seriously, it isn’t that hard.
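Checking for weak community strings is easy to automate on the defensive side too. The sketch below audits an inventory of configured community strings against a short list of well-known defaults. The list of weak strings, the minimum-length policy, and the device names in the usage note are all assumptions for illustration; a real audit would pull the strings from your own device configurations.

```python
# Assumption: a short illustrative list, not an exhaustive dictionary.
WEAK_COMMUNITIES = {"public", "private", "admin", "cisco", "snmp", "community"}
MIN_LENGTH = 12  # hypothetical local policy, adjust to taste


def audit_communities(configured: dict[str, str]) -> dict[str, str]:
    """Map each device with a weak community string to the reason it was flagged."""
    findings = {}
    for device, community in configured.items():
        if community.lower() in WEAK_COMMUNITIES:
            findings[device] = "default or well-known community string"
        elif len(community) < MIN_LENGTH:
            findings[device] = "community string shorter than policy minimum"
    return findings
```

Calling `audit_communities({"core-router": "public", "edge-switch": "k3Jx9-vQ2m_Lp7"})` would flag only `core-router` under these assumptions.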

A number of devices, such as routers, use the Network Time Protocol (NTP) over packet-switched data networks to synchronize time services between systems. The basic problem with the default configuration of NTP services is that NTP is enabled on all active interfaces. If any Internet-facing interface exposes UDP port 123, a remote user can easily run internal NTP readvar queries using ntpq to gather information about the target server, including the NTP software version, operating system, peers, and so on.
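For administrators who want to check their own devices, the readvar query is small enough to sketch by hand. The code below builds the 12-byte NTP control-message (mode 6) header with the "read variables" opcode and checks whether a host answers it. The function names and timeout are assumptions for this example, and it only reports whether the service responds; it does not parse the returned variables.

```python
import socket
import struct

NTP_PORT = 123
MODE6_READVAR = 2  # control-message opcode for "read variables"


def build_readvar_request(sequence: int = 1, association_id: int = 0) -> bytes:
    """Build a mode 6 control request: header only, no data payload."""
    first_byte = (0 << 6) | (2 << 3) | 6  # LI=0, version=2, mode=6 (control)
    # Fields: flags, opcode, sequence, status, association ID, offset, count.
    return struct.pack("!BBHHHHH", first_byte, MODE6_READVAR, sequence, 0, association_id, 0, 0)


def answers_readvar(host: str, timeout: float = 2.0) -> bool:
    """Return True if `host` replies to a readvar control query on UDP 123."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        try:
            sock.sendto(build_readvar_request(), (host, NTP_PORT))
            reply, _ = sock.recvfrom(4096)
        except OSError:  # covers timeouts and unreachable hosts
            return False
    # A control reply keeps mode 6 and sets the response bit in the second byte.
    return len(reply) >= 12 and (reply[0] & 0x07) == 6 and bool(reply[1] & 0x80)
```

If `answers_readvar` returns True for an Internet-facing address you manage, the service is disclosing its variables to anyone who asks, and restricting control queries on that interface is worth considering.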

Unauthorized access to multiple components and software configuration files is another big problem. This is not only a device and software configuration problem, but also a byproduct of design chaos, that is, the way different network devices are designed and managed in their default state. Too many devices on the Internet allow guests to obtain potentially sensitive information using anonymous access or direct access. In addition, one has to wonder how well administrators understand and care about security if they simply allow configuration files to be downloaded from target servers. For example, I recently conducted a small test to verify the presence of the file Filezilla.xml, which sets the properties of FileZilla FTP servers, and found that a number of target servers allow this configuration file to be downloaded without any authentication or authorization controls. This would allow attackers to gain direct access to these FileZilla FTP servers because the passwords are embedded in these configuration files.

It is really hard to imagine that anyone would deploy sensitive files containing usernames and passwords on remote servers that are exposed on the network. What results do you expect when you find these types of configuration issues?

Cisco Catalyst – Insecure Interface

How can we forget about backend databases? Default and weak passwords for the MS SQL system administrator account can still be found in the internal networks of many organizations. For example, network proxies that require backend databases are configured with default and weak passwords. In many cases administrators think internal network devices are more secure than external devices. But what happens if the attacker finds a way to penetrate the internal network?

The simple answer is: game over. Integrated web applications with backend databases using default configurations are an “easy walk in the park” for attackers. For example, the following insecure XAMPP installation would be devastating.

XAMPP Security – Web Page

The security community recently encountered an interesting configuration issue on GitHub (http://www.securityweek.com/github-search-makes-easy-discovery-encryption-keys-passwords-source-code), which resulted in the disclosure of SSH keys on the Internet. The point is that when you own an account or repository on public servers, all administrator responsibilities fall on your shoulders. You cannot blame the service provider, GitHub in this case. If users upload their private SSH keys to the repository, then whom can you blame? This is a big problem, and developers continue to expose sensitive information in their public repositories, even though GitHub’s documentation shows how to secure and manage sensitive data.
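A simple pre-publication check catches the most obvious case. The sketch below walks a working tree and lists files that contain a PEM-style private key header. The function name and the set of header variants are assumptions for this example, and it only looks at the files currently on disk, not at the repository history.

```python
import re
from pathlib import Path

# PEM-style headers used by common private key formats.
PRIVATE_KEY_HEADER = re.compile(
    rb"-----BEGIN (?:RSA |DSA |EC |OPENSSH |ENCRYPTED )?PRIVATE KEY-----"
)


def find_private_keys(root: str) -> list[Path]:
    """Return files under `root` that appear to contain a private key."""
    hits = []
    for path in sorted(Path(root).rglob("*")):
        # Skip directories and Git's own object store.
        if not path.is_file() or ".git" in path.parts:
            continue
        try:
            data = path.read_bytes()
        except OSError:
            continue  # unreadable file, nothing to report
        if PRIVATE_KEY_HEADER.search(data):
            hits.append(path)
    return hits
```

Running something like this before every push is no substitute for keeping keys out of the working tree in the first place, but it is a cheap safety net.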

Another interesting finding shows that with Google Dorks (targeted search queries), the Google search engine exposed thousands of HP printers on the Internet (http://port3000.co.uk/google-has-indexed-thousands-of-publicly-acce). The search string “inurl:hp/device/this.LCDispatcher?nav=hp.Print” is all you need to gain access to these HP printers. As I asked at the beginning of this post, “How long does it take to secure a printer?” Although this issue is not new, the amazing part is that it still persists.

The existence of vulnerabilities and patches is a completely different issue than the security issues that result from default configurations and poor administration. Either we know the security posture of the devices and software on our networks but are being careless in deploying them securely, or we do not understand security at all. Insecure and default configurations make the hacking process easier. Many people realize this after they have been hacked and by then the damage has already been done to both reputations and businesses.

This post is just a glimpse into security consciousness, or the lack of it. We have to be more security conscious today because attempts to hack into our networks are almost inevitable. I think we need to be a bit more paranoid and much more vigilant about security.

Throughout the second half of 2012 many security folks have been asking “how much is a zero-day vulnerability worth?” and it’s often been hard to believe the numbers that have been (and continue to be) thrown around. For the sake of clarity though, I do believe that it’s the wrong question… the correct question should be “how much do people pay for working exploits against zero-day vulnerabilities?”

The answer in the majority of cases tends to be “it depends on who’s buying and what the vulnerability is” regardless of the question’s particular phrasing.

On the topic of exploit development, last month I wrote an article for DarkReading covering the business of commercial exploit development, and in that article you’ll probably note that I didn’t discuss the prices the exploits retail for. That’s because of my elusive answer above… I know of some researchers with their own private repository of zero-day remote exploits for popular operating systems seeking $250,000 per exploit, and I’ve overheard hushed bar conversations that certain US government agencies will beat any foreign bid by four times the value.

But that’s only the thin edge of the wedge. The bulk of zero-day (or nearly zero-day) exploit purchases are for popular consumer-level applications – many of which are region-specific. For example, a reliable exploit against Tencent QQ (the most popular instant messenger program in China) may be more valuable than an exploit in Windows 8 to certain US, Taiwanese, Japanese, etc. clandestine government agencies.

More recently some of the conversations about exploit sales and purchases by government agencies have focused on the cyberwar angle – in particular, that some governments are trying to build a “cyber weapon” cache, that unlike kinetic weapons these could expire at any time, and that it’s all a waste of effort and resources.

I must admit, up until a month ago I was leaning a little towards that same opinion. My perspective was that it’s a lot of money to spend on something that will most likely sit on the shelf and expire into uselessness before it can be used. And then I happened to visit the National Museum of Nuclear Science & History on a business trip to Albuquerque.

Museum: Polaris Missile

Museum: Minuteman missile part?

For those of you that have never heard of the place, it’s a museum that plots out the history of the nuclear age and the evolution of nuclear weapon technology (and I encourage you to visit!).

Anyhow, as I literally strolled from one (decommissioned) nuclear missile to another – each lying on its side, rusting and corroding away, having never been used – it finally hit me: governments have been doing the same thing for the longest time, and cyber weapons really are no different!

Perhaps it’s the physical realization of “it’s better to have it and not need it, than to need it and not have it”, but as you trace the billions (if not trillions) of dollars that have been spent by the US government over the years developing each new nuclear weapon delivery platform, deploying it, manning it, eventually decommissioning it, and replacing it with a new and more efficient system… well, it makes sense and (frankly) it’s laughable how little money is actually being spent in the cyber-attack realm.

So what if those zero-day exploits purchased for measly six-figure wads of cash curdle like last month’s milk? That price wouldn’t even cover the cost of painting the inside of a decommissioned missile silo.

No, the reality of the situation is that governments are getting a bargain when it comes to constructing and filling their cyber weapon caches. And, more to the point, the expiry of those zero-day exploits is a well understood aspect of managing an arsenal – conventional or otherwise.

— Gunter Ollmann, CTO – IOActive, Inc.

My last project before joining IOActive was “breaking” 3S Software’s CoDeSys PLC runtime for Digital Bond.

Before the assignment, I had a fellow security nut give me some tips on this project to get me off the ground, but unfortunately this person cannot be named. You know who you are, so thank you, mystery person.