This is the second post in our ongoing series on the troubles posed by high-speed signals in the hardware security lab.

What is a High-speed Signal?

Let’s start by defining “high-speed” a bit more formally:

A signal traveling through a conductor is high-speed if transmission line effects are non-negligible.

That’s nice, but what is a transmission line? In simple terms:

A transmission line is a wire long enough that a signal change at one end takes a nontrivial amount of time to reach the other end.

You may also see this referred to as the wire being “electrically long.”

Exactly how long this is depends on how fast your signal is changing. If your signal takes half a second (500ms) to ramp from 0V to 1V, a 2 ns delay from one end of a wire to the other will be imperceptible. If your rise time is 1 ns, on the other hand, the same delay is quite significant – a nanosecond after the signal reaches its final value at the input, the output will be just starting to rise!

Using the classical “water analogy” of circuit theory, the pipe from the handle on your kitchen sink to the spout is probably not a transmission line – open the valve and water comes out pretty much instantly. A long garden hose, on the other hand, definitely is.

Propagation Delay

The exact velocity of propagation depends on the particular cable or PCB geometry, but it’s usually somewhere between half and two thirds the speed of light, or roughly six to eight inches per nanosecond. You may see this specified in cable datasheets as “velocity factor.” A velocity factor of 0.6 means a propagation velocity of 0.6 times the speed of light.

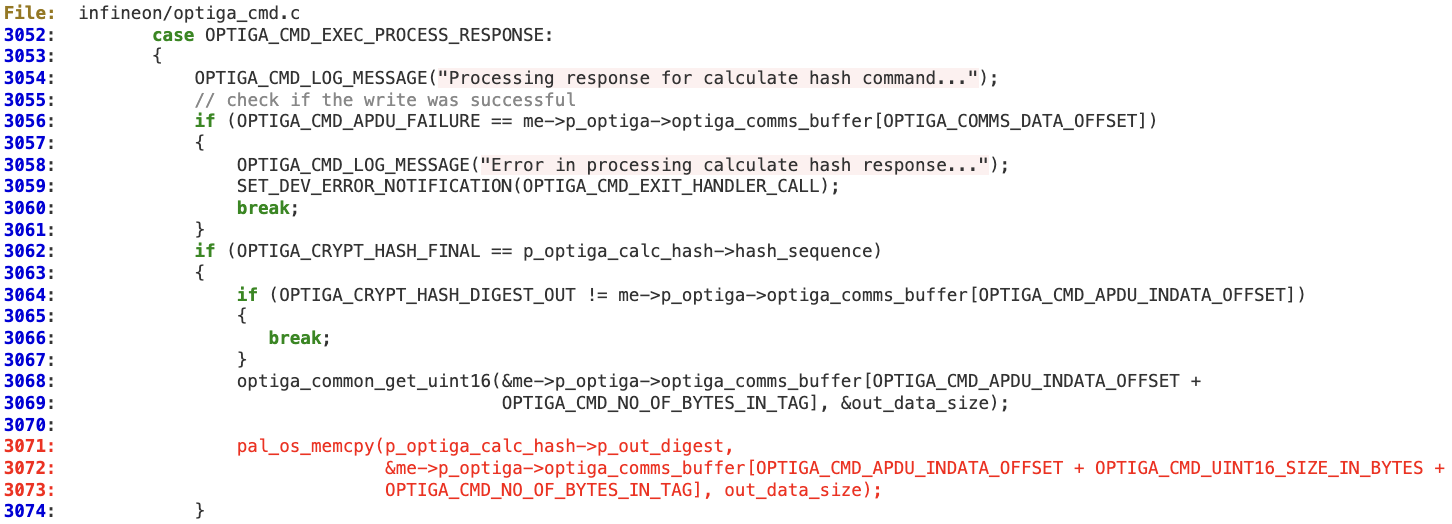

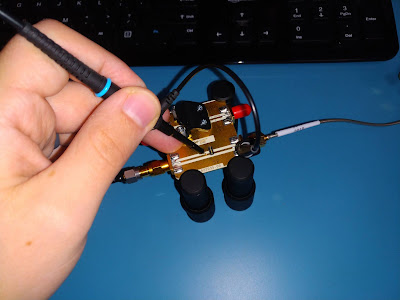

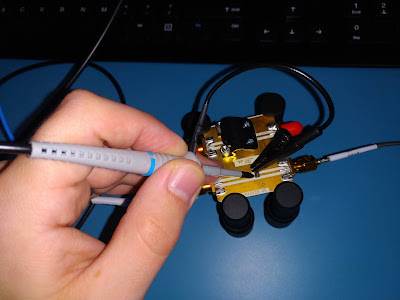

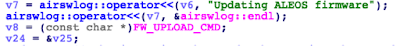

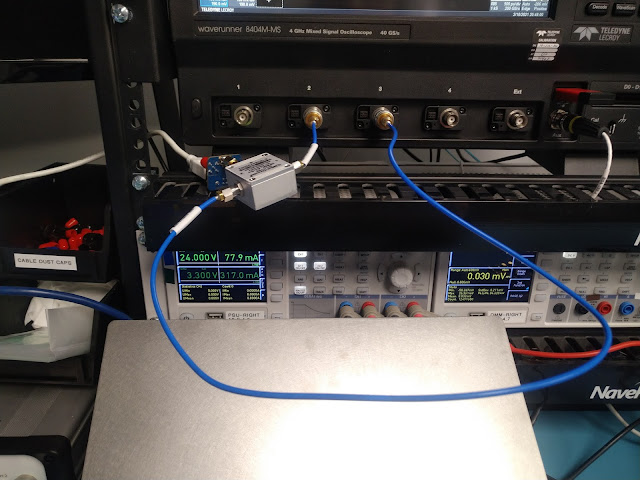

Let’s make this a bit more concrete by running an experiment: a fast rising edge from a signal generator is connected to a splitter (the gray box in the photo below) with one output fed through a 3-inch cable to an oscilloscope input, and the other through a much longer 24-inch cable to another scope input.

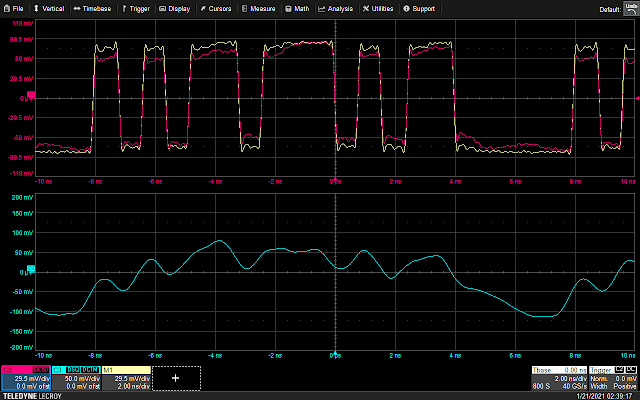

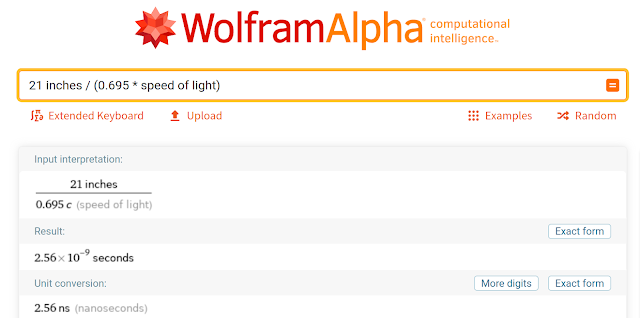

This is a 21-inch difference in length. The cable I used for this test (Mini-Circuits 086 series Hand-Flex) uses PTFE dielectric and has a velocity factor of about 0.695. Plugging these numbers into Wolfram Alpha, we get an expected skew of 2.56 ns:

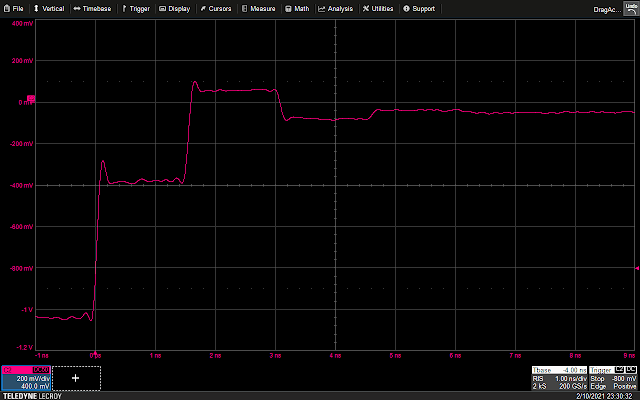

Sure enough, when we set the scope to trigger on the signal coming off the short cable, we see the signal from the longer cable arrives a touch over 2.5 ns later. The math checks out!
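If you’d rather not reach for Wolfram Alpha, the same arithmetic is easy to sketch in a few lines of Python. The function and constant names here are my own, chosen for illustration; the only inputs are the cable lengths and velocity factor quoted above.

```python
# Speed of light in vacuum, converted from m/s to inches per nanosecond.
C_INCHES_PER_NS = 299_792_458 / 0.0254 / 1e9  # about 11.8 in/ns


def propagation_delay_ns(length_inches, velocity_factor):
    """One-way delay through a cable of the given length."""
    velocity = velocity_factor * C_INCHES_PER_NS
    return length_inches / velocity


# Skew between the 24-inch and 3-inch cables at a velocity factor of 0.695.
skew_ns = propagation_delay_ns(24 - 3, 0.695)
print(f"expected skew: {skew_ns:.2f} ns")  # expected skew: 2.56 ns
```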

As a general rule for digital signals, if the expected propagation delay of your cable or PCB trace is more than 1/10 the rise time of the signal in question, it should be treated as a transmission line.
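As a rough sketch, the rule of thumb can be written as a one-line comparison. The helper name is hypothetical, the speed of light is rounded to 11.8 inches per nanosecond, and the two rise times are the illustrative 1 ns and 500 ms values from earlier in this post rather than measurements.

```python
C_INCHES_PER_NS = 11.8  # speed of light in vacuum, rounded


def is_transmission_line(length_inches, velocity_factor, rise_time_ns):
    """Apply the 1/10 rule: delay > rise_time / 10 means electrically long."""
    delay_ns = length_inches / (velocity_factor * C_INCHES_PER_NS)
    return delay_ns > rise_time_ns / 10


# A 24-inch cable carrying a 1 ns edge is electrically long...
print(is_transmission_line(24, 0.695, 1.0))    # True
# ...but the same cable carrying a 500 ms (500e6 ns) ramp is not.
print(is_transmission_line(24, 0.695, 500e6))  # False
```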

Note that the baud rate/clock frequency is unimportant! In the experimental setup above, the test signal has a frequency of only 10 MHz (100 ns period) but since the edges are very fast, cable delay is easily visible and thus it should be treated as a transmission line.

This is a common pitfall with modern electronics. It’s easy to look at a data bus clocked at a few MHz and think “Oh, it’s not that fast.” But if the I/O pins on the device under test (DUT) have sharp edges, as is typical for modern parts capable of high data rates, transmission line effects may still be important to consider.

Impedance, Reflections, and Termination

If you open an electrical engineering textbook and look for the definition of impedance you’re probably going to see pages of math talking about cable capacitance and inductance, complex numbers, and other mumbo-jumbo. The good news is, the basic concept isn’t that difficult to understand.

Imagine applying a voltage to one end of an infinitely long cable. Some amount of current will flow and a rising edge will begin to travel down the line. Since our hypothetical cable never ends, the system never reaches a steady state and this current will keep flowing at a constant level forever. We can then plug this voltage and current into Ohm’s law (V = I × R) and solve for R. The transmission line thus acts as a resistor!

This resistance is known as the “characteristic impedance” of the transmission line, and depends on factors such as the distance from signal to ground, the shape of the conductors, and the dielectric constant of the material between them. Most coaxial cable and high-speed PCB traces are 50Ω. This is a round number and a convenient value for typical material properties at easily manufacturable dimensions, among other reasons. A few applications such as analog television use 75Ω, and other values appear in some specialized applications. The remainder of this discussion assumes 50Ω impedance for all transmission lines unless otherwise stated.

What happens if the line forks? We have the same amount of current flowing in since the line has a 50Ω impedance at the upstream end, but at the split it sees 25Ω (two 50Ω loads in parallel). The signal thus reduces in amplitude downstream of the fork, but otherwise propagates unchanged.

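There’s just one problem: at the point of the split, the incident wave carries X volts on the upstream side, while the same current flowing into 25Ω would produce only X/2 volts on the downstream side! This is obviously not a stable condition, and it is resolved by a second wavefront propagating back upstream. The end result is a mirrored (negative), smaller copy of the incident signal reflecting back: for a 50Ω line forking into 25Ω, the reflection is one third of the incident amplitude, leaving two thirds of X at the split.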

We can easily demonstrate this effect experimentally by placing a T fitting at the end of a cable and attaching additional cables to both legs of the fitting. The two cables coming off the T are then terminated (one at a scope input and the other with a screw-on terminator). We’ll get to why this is important in a bit.

The cable from the scope input to the split is three inches long; using the same 0.695 velocity factor as before gives a propagation delay of 0.365 ns. So for the first 0.365 ns after the signal rises everything is the same as before. Once the edge hits the T the reduced-voltage signal starts propagating down the two legs of the T, but the same reduced voltage also reflects back upstream.

It takes another 0.365 ns for the reflection to reach the scope input so we’d expect to see the voltage dip at around 0.73 ns (plus a bit of additional delay in the T fitting itself) which lines up nicely with the observed waveform.

In addition to an actual T structure in the line, this same sort of negative reflection can be caused by any change to a single transmission line (different dielectric, larger wire diameter, signal closer to ground, etc.) which reduces the impedance of the line at some point.

Up to this point in our analysis, we’ve only considered infinitely long wires (and the experimental setups have been carefully designed to make this a good approximation). What if our line is somewhat long, but eventually ends in an open circuit? At the instant that the rising edge hits the end of the wire, current is flowing. It can’t stop instantaneously, as the current from further up the line is still flowing – the source side of the line has no idea that anything has changed. So the edge goes the only place it can – reflecting back up the line towards the source.

Our experimental setup for this is simple: a six-inch cable with the other end unconnected.

Using the same 0.695 velocity factor, we’d expect our signal to reach the end of the cable in about 0.73 ns and the reflection to hit the scope at 1.46 ns. This is indeed what we see.

The scope shows a reflected copy of the original edge. The difference is that, since the open circuit is a higher-than-matched impedance instead of lower as in the previous experiment, the reflection has a positive sign and the signal amplitude increases rather than dropping.

A closer inspection shows that this pattern of reflections repeats, much weaker, starting at around 3.0 ns. This is caused by the reflection hitting the T fitting where the signal generator connects to the scope input. Since the parallel combination of the scope input and signal generator is not an exact 50Ω impedance, we get another reflection. The signal likely continues reflecting several more times before damping out completely, but these additional reflections are too small to show up with the current scope settings.
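The timing of these repeated bounces is just a multiple of the round-trip delay. Here is a minimal sketch, assuming an ideal six-inch cable with the same 0.695 velocity factor and ignoring the extra delay of the T fitting; the function name is made up for this example.

```python
C_INCHES_PER_NS = 11.8  # speed of light in vacuum, rounded


def reflection_arrival_times_ns(length_inches, velocity_factor, bounces):
    """Times at which successive reflections arrive back at the near end."""
    one_way_ns = length_inches / (velocity_factor * C_INCHES_PER_NS)
    # Each bounce adds one full round trip (out to the open end and back).
    return [2 * one_way_ns * n for n in range(1, bounces + 1)]


times = reflection_arrival_times_ns(6, 0.695, 2)
print([round(t, 2) for t in times])  # [1.46, 2.93]
```

The second value is close to the 3.0 ns where the weaker copy shows up on the scope.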

So if a cable ending in a low impedance results in a negative reflection, and a cable ending in a high impedance results in a positive reflection, what happens if the cable ends in an impedance equal to that of the cable – say, a 50Ω resistor? This results in a matched termination which suppresses any reflections: the incident signal simply hits the resistor and its energy is dissipated as heat. Terminating high-speed lines (via a resistor at the end, or any of several other possible methods) is critical to avoid reflections degrading the quality of the signal.
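All three cases fall out of the standard textbook reflection coefficient, (Z_load - Z0) / (Z_load + Z0), which gives the reflected voltage as a fraction of the incident voltage. This formula is not spelled out above, so treat the snippet as a sketch for purely resistive loads; the function name is my own.

```python
import math


def reflection_coefficient(z_load, z0=50.0):
    """Fraction of the incident voltage reflected at a resistive discontinuity."""
    if math.isinf(z_load):
        return 1.0  # open circuit: the whole edge reflects with positive sign
    return (z_load - z0) / (z_load + z0)


print(reflection_coefficient(25.0))      # fork into two 50 ohm lines: about -0.33
print(reflection_coefficient(math.inf))  # open circuit: 1.0
print(reflection_coefficient(50.0))      # matched termination: 0.0
print(reflection_coefficient(0.0))       # short circuit: -1.0
```

A negative result means the reflection subtracts from the incident edge, a positive one means it adds, and zero means nothing comes back.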

One other thing to be aware of is that the impedance of a circuit may not be constant across frequency. If there is significant inductance or capacitance present, the impedance will have frequency-dependent characteristics. Many instrument inputs and probes will specify their equivalent resistance and capacitance separately, for example “1MΩ || 17 pF” so that the user can calculate the effective impedance at their frequency of interest.
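As a rough illustration of that calculation, the sketch below treats the input as an ideal resistor in parallel with an ideal capacitor and evaluates the impedance magnitude at a few frequencies. The frequencies are arbitrary picks for the example, and a real input will have additional parasitics that this ignores.

```python
import math


def parallel_rc_impedance_ohms(r_ohms, c_farads, freq_hz):
    """Magnitude of the impedance of a resistor in parallel with a capacitor."""
    omega = 2 * math.pi * freq_hz
    # Admittances add in parallel: Y = 1/R + j*omega*C, and Z = 1/Y.
    admittance = complex(1 / r_ohms, omega * c_farads)
    return abs(1 / admittance)


# The "1 Mohm || 17 pF" example at 1 kHz, 1 MHz, and 100 MHz.
for freq_hz in (1e3, 1e6, 100e6):
    z = parallel_rc_impedance_ohms(1e6, 17e-12, freq_hz)
    print(f"{freq_hz:>12,.0f} Hz: {z:,.0f} ohms")
```

With these values the impedance stays close to 1MΩ at 1 kHz, but drops to under 100Ω by 100 MHz.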

Stub Effects and Probing

We can now finally understand why the classic reverse engineering technique of soldering long wires to a DUT and attaching them to a logic analyzer is ill-advised when working with high-speed systems: doing so creates an unterminated “stub” in the transmission line.

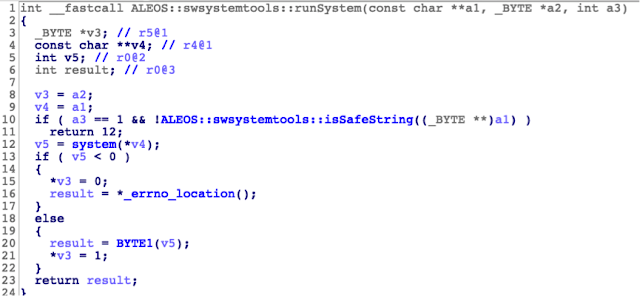

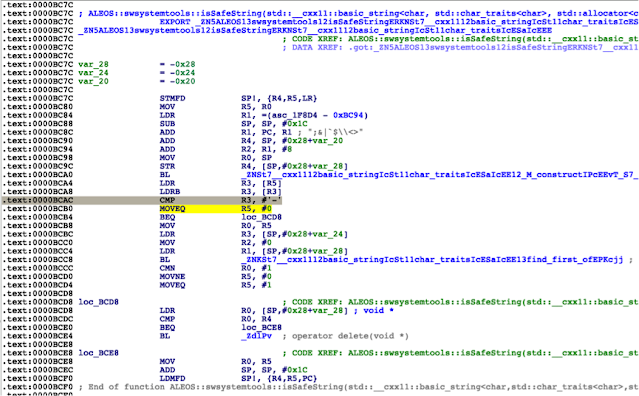

Typical logic analyzer inputs have high input impedances in order to avoid loading down the signals on the DUT. For example, the Saleae Logic Pro 8 has an impedance of 1MΩ || 10 pF and the logic probe for the Teledyne LeCroy WaveRunner 8000-MS series oscilloscopes is 100 kΩ || 5 pF. Although the input capacitance does result in the impedance decreasing at higher frequencies, it remains well over 50Ω for the operating range of the probe.

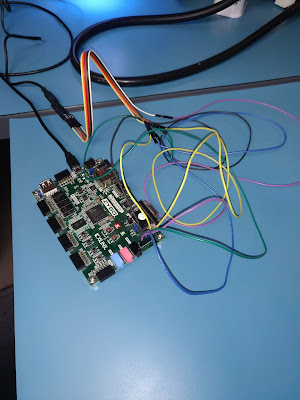

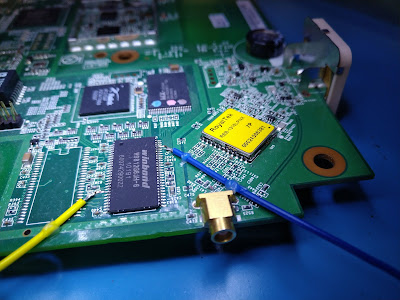

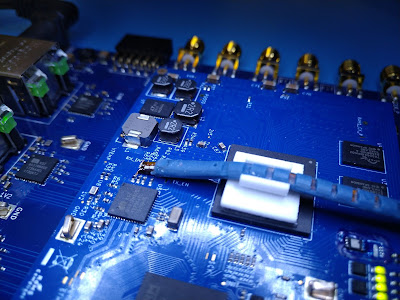

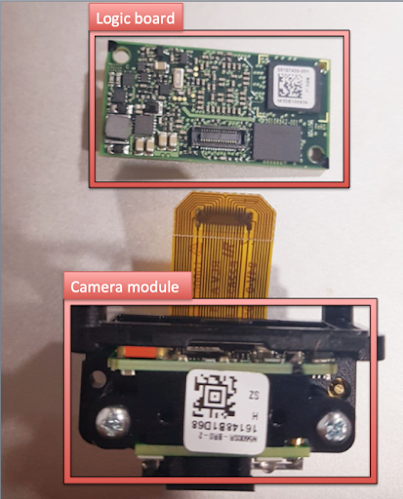

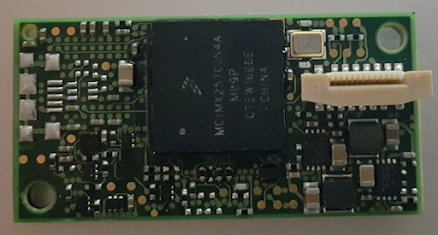

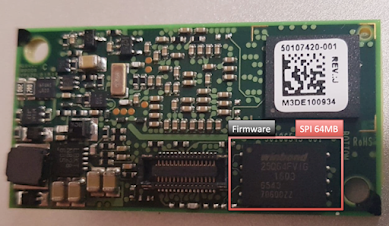

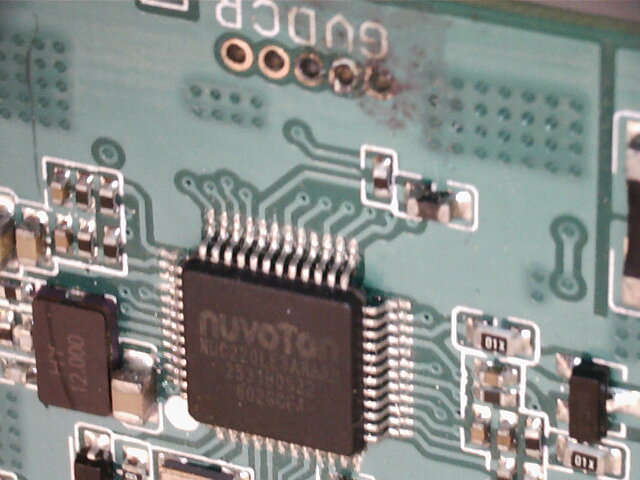

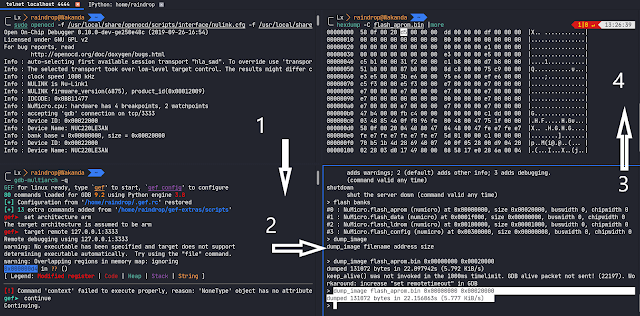

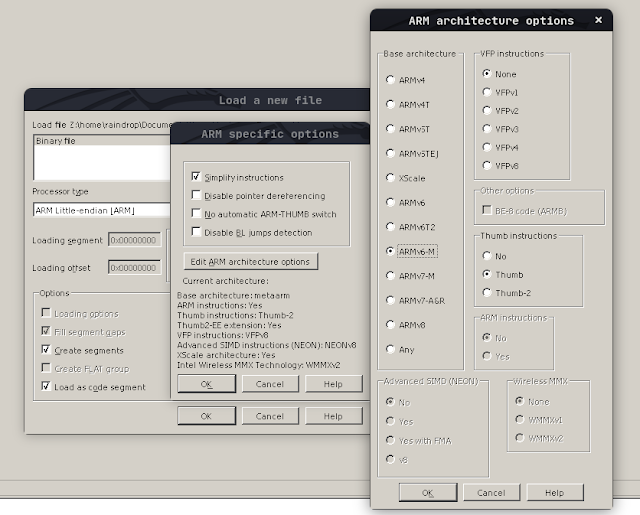

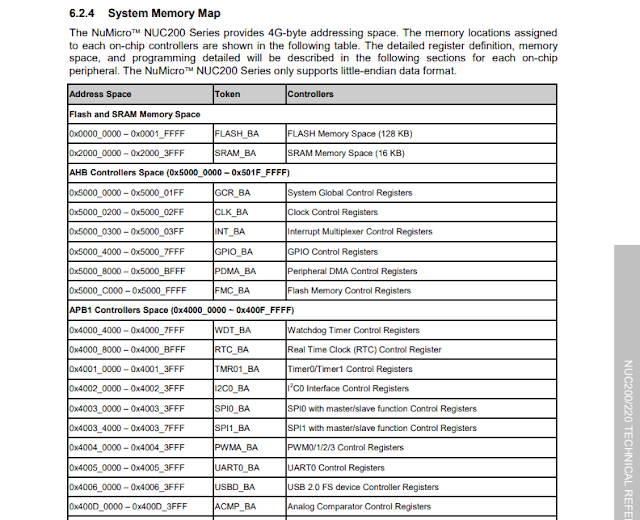

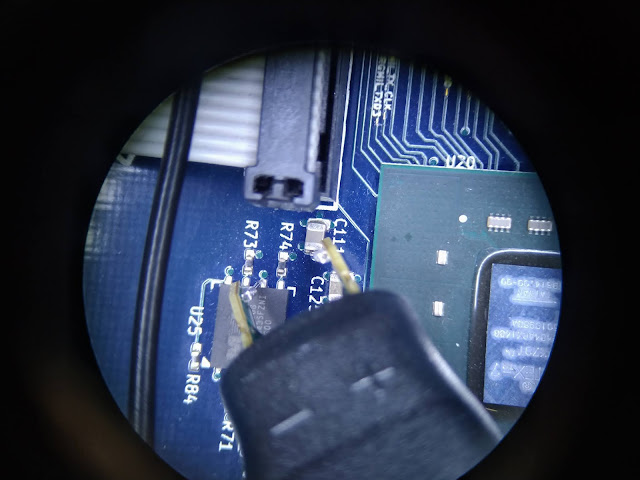

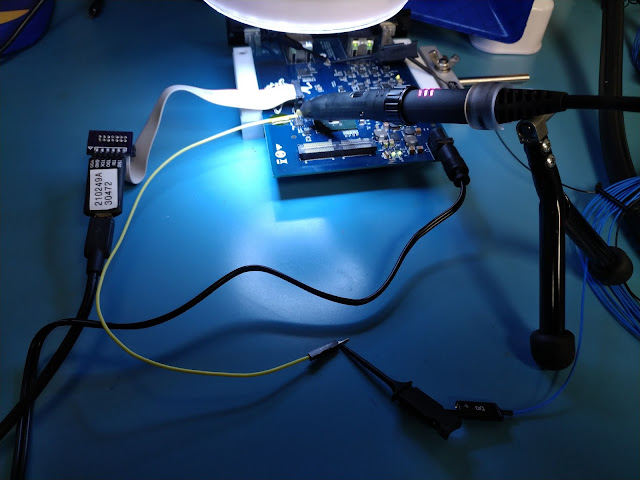

This means that if the wire between the DUT and the logic analyzer is electrically long, the signal will reflect off the analyzer’s input and degrade the signal as seen by the DUT. To see this in action, let’s do an experiment on a real board which boots from an external SPI flash clocked at a moderately fast speed – about 75 MHz.

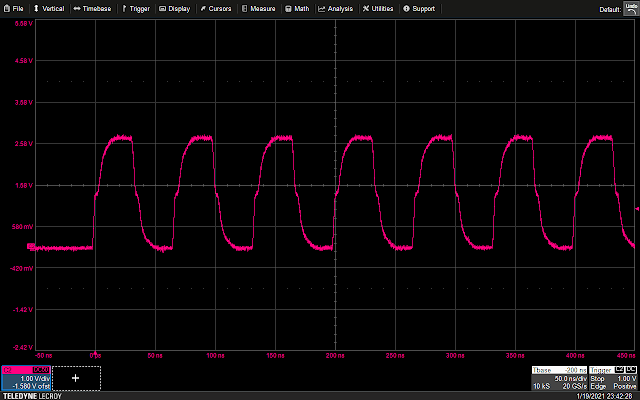

As a control, we’ll first look at the signal using an electrically short probe. I’ll be using a Teledyne LeCroy D400A-AT, a 4 GHz active differential probe. This is a very high performance probe meant for non-intrusive measurements on much faster signals such as DDR3 RAM.

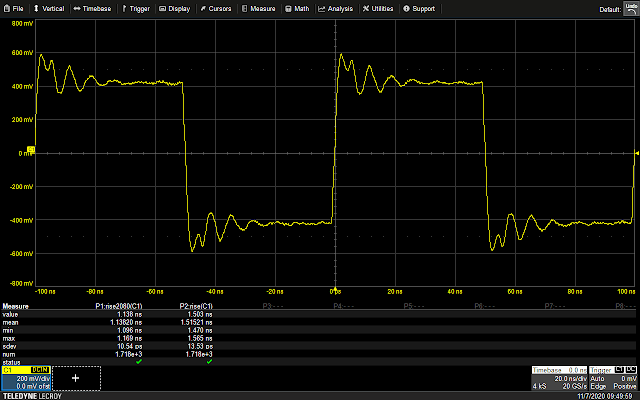

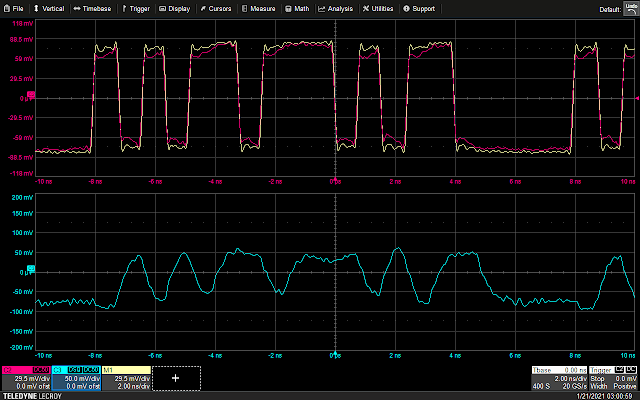

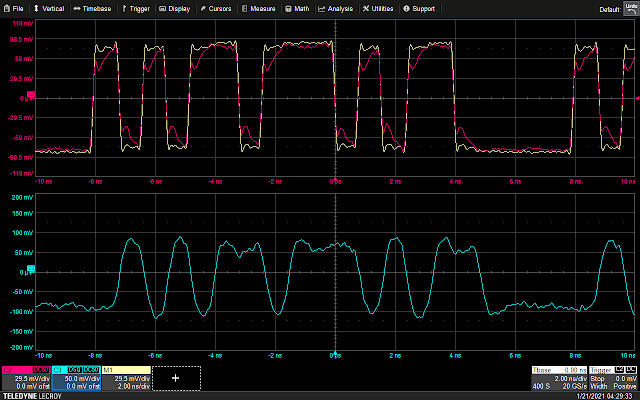

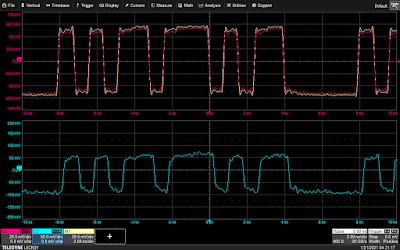

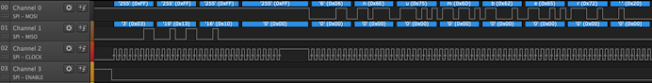

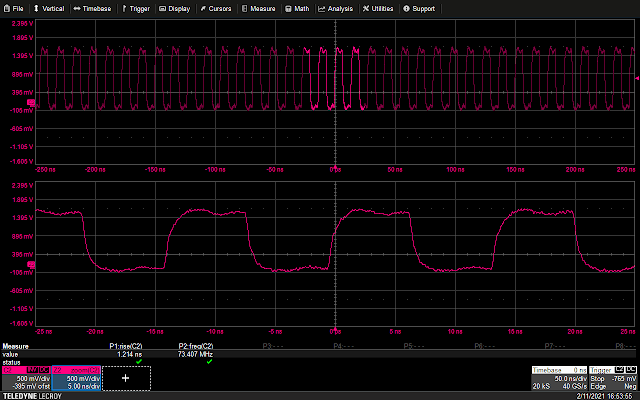

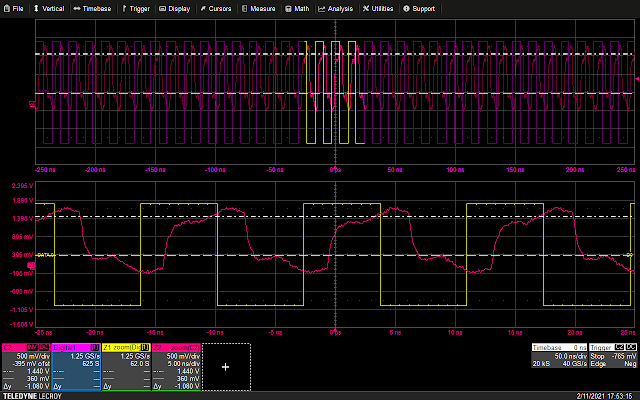

Looking at the scope display, we see a fairly clean square wave on the SPI SCK pin. There’s a small amount of noise and some rounding of the edges, but nothing that would be expected to cause boot failures.

The measured SPI clock frequency is 73.4 MHz and the rise time is 1.2 ns. This means that any stub longer than 120 ps round trip (60 ps one way) will start to produce measurable effects. With a velocity factor of 0.695, this comes out to about half an inch. You may well get away with something a bit longer (“measurable effects” does not mean “guaranteed boot failure”), but at some point the degradation will be sufficient to cause issues.
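The half-inch figure can be reproduced with a short calculation. This is only a sketch of the 1/10 rise time rule applied to the stub’s round trip, with the speed of light rounded to 11.8 inches per nanosecond and a function name invented for the example.

```python
C_INCHES_PER_NS = 11.8  # speed of light in vacuum, rounded


def max_stub_length_inches(rise_time_ns, velocity_factor):
    """Longest stub whose round-trip delay stays within rise_time / 10."""
    round_trip_budget_ns = rise_time_ns / 10  # 120 ps for a 1.2 ns edge
    one_way_ns = round_trip_budget_ns / 2     # 60 ps out, 60 ps back
    return one_way_ns * velocity_factor * C_INCHES_PER_NS


print(f"{max_stub_length_inches(1.2, 0.695):.2f} in")  # 0.49 in
```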

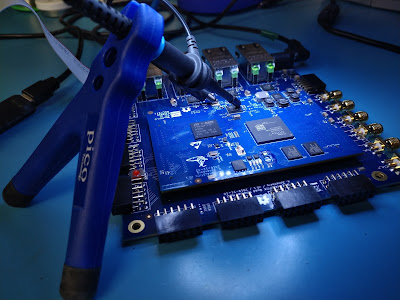

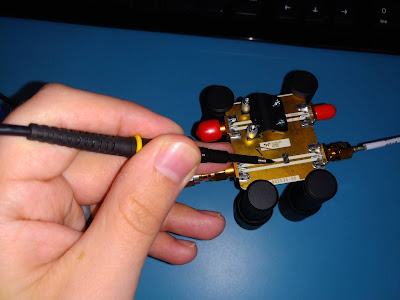

Now that we’ve got our control waveform, let’s build a probing setup more typical of what’s used in lower speed hardware reverse engineering work: a 12-inch wire from a common rainbow ribbon cable bundle, connected via a micro-grabber clip to a Teledyne LeCroy MSO-DLS-001 logic probe. The micro-grabber is about 2.5 inches in length, which when added to the wire comes to a total stub of about 14.5 inches.

(Note that the MSO-DLS-001 flying leads include a probe circuit at the tip, so the length of the flying lead itself does not count toward the stub length. When using lower-end units such as the Saleae Logic that use ordinary wire leads, the logic analyzer’s lead length must be considered as part of the stub.)

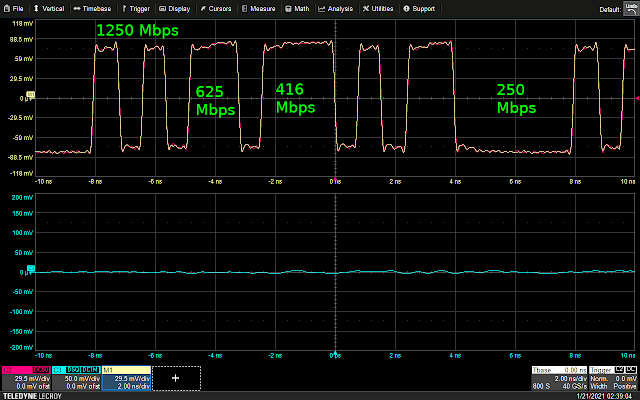

We’d thus expect to see a reflection at around 3.5 ns, although there’s a fair bit of error in this estimate because the velocity factor of the cable is unspecified since it’s not designed for high-speed use. We also expect the reflections to be a bit more “blurry” and less sharply defined than in the previous examples, since the rise time of our test signal is slower.
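To get a feel for how much the unknown velocity factor matters, here is a quick sketch that computes the round-trip time for the 14.5-inch stub at a few velocity factors. The 0.60 and 0.80 values are guesses I picked to bracket the estimate, not properties of this ribbon cable; 0.695 is simply borrowed from the coax used earlier.

```python
C_INCHES_PER_NS = 11.8  # speed of light in vacuum, rounded


def stub_reflection_time_ns(stub_inches, velocity_factor):
    """Round-trip delay: out to the open end of the stub and back."""
    return 2 * stub_inches / (velocity_factor * C_INCHES_PER_NS)


# 0.60 and 0.80 are assumed bounds for illustration only.
for vf in (0.60, 0.695, 0.80):
    print(f"VF {vf:.3f}: {stub_reflection_time_ns(14.5, vf):.2f} ns")
# VF 0.600: 4.10 ns
# VF 0.695: 3.54 ns
# VF 0.800: 3.07 ns
```

Across that assumed range the estimate moves by about a nanosecond, so the prediction is only a ballpark.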

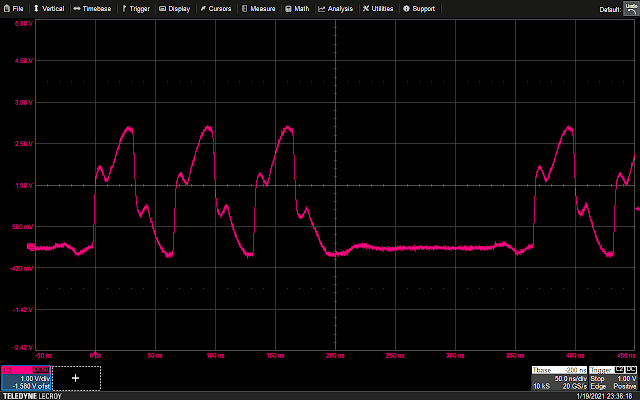

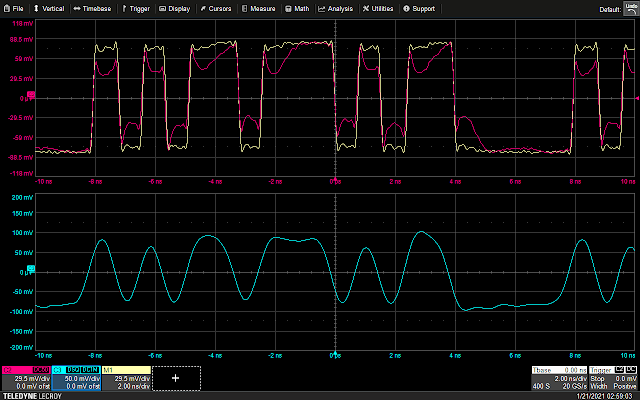

There’s a lot of data to unpack here so let’s go over it piece by piece.

First, we do indeed see the expected reflection. For about the first half of each high phase – close to the 3.5 ns we predicted, and about half of the roughly 6.8 ns that the 73.4 MHz clock spends high assuming a 50% duty cycle – the waveform is at a reduced voltage, then it climbs to the final value for the rest of the phase.

Second, there is significant skew between the waveform seen by the analog probe and the logic analyzer, which is caused by the large difference in length between the paths from the DUT to the two probe inputs.

Third, this level of distortion is very likely to cause the DUT to malfunction. The two horizontal cursors are at 0.2 and 0.8 times the 1.8V supply voltage for the flash device, which are the logic low and high thresholds from the device datasheet. Any voltage between these cursors could be interpreted as either a low or a high, unpredictably, or even cause the input buffer to oscillate.

During the period in which the clock is supposed to be high, more than half the time is spent in this nondeterministic region. Worst case, if everything in this region is interpreted as a logic low, the clock will only appear to be high for about a quarter of the cycle! This would likely act like a glitch and result in failures.

Most of the low period is spent in the safe “logic low” range, but it appears to brush against the nondeterministic region briefly. If other noise is present in the DUT, this reflection could be interpreted as a logic high and also create a glitch.
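The threshold check behind those cursors can be sketched as follows. The 0.2 and 0.8 fractions and the 1.8V supply come from the discussion above; the function name and the sample voltages are made up for illustration and are not readings from the scope capture.

```python
def classify_level(voltage, vdd=1.8, low_frac=0.2, high_frac=0.8):
    """Classify an input voltage against the datasheet logic thresholds."""
    v_il = low_frac * vdd   # maximum guaranteed logic low, about 0.36 V
    v_ih = high_frac * vdd  # minimum guaranteed logic high, about 1.44 V
    if voltage <= v_il:
        return "low"
    if voltage >= v_ih:
        return "high"
    return "indeterminate"  # could be read as either level


# Hypothetical sample voltages, not measurements.
for v in (0.1, 0.9, 1.7):
    print(f"{v:.1f} V -> {classify_level(v)}")
# 0.1 V -> low
# 0.9 V -> indeterminate
# 1.7 V -> high
```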

Conclusions

As electronics continue to get faster, hardware hackers can no longer afford to remain ignorant of transmission line effects. A basic understanding of the underlying physics can go a long way in predicting when a test setup is likely to cause problems.