Fault injection, also known as glitching, is a technique where some form of interference or invalid state is intentionally introduced into a system in order to alter the behavior of that system. In the context of embedded hardware and electronics generally, there are a number of forms this interference might take. Common methods for fault injection in electronics include:

- Clock glitching (errant clock edges are forced onto the input clock line of an IC)

- Voltage fault injection (applying voltages higher or lower than the expected voltage to IC power lines)

- Electromagnetic glitching (introducing EM interference)

This article will focus on voltage fault injection: specifically, the introduction of momentary voltages outside of normal operating conditions on the target device’s power rails. These momentary pulses or drops in input voltage (glitches) can affect device operation, and are directed with the intention of achieving a particular effect. Commonly desired effects include “corrupting” instructions or memory in the processor and skipping instructions. Previous research has shown that these effects can be predictably achieved [1], and has provided some explanation of the EM effects (caused by the glitch) which might be responsible for the various behaviors [2].

However, a gap exists in published research when it comes to correlating glitches (and associated EM effects) with concrete changes in state at the processor level, i.e. what exactly occurs in the processor at the moment of a glitch that causes an instruction to be corrupted or skipped, an incorrect branch to be taken, and so on. This article seeks to quantify and qualify the state of a processor before, during, and after an injected fault, and to describe discrete changes in markers such as general-purpose registers, control registers such as $pc and $lr, memory, and others.

Past Research and Thanks

Special thanks to the folks at Toothless Consulting, whose excellent series of blog posts [3] was my introduction to fault injection, and the inspiration for this project. Additional thanks to Chris Gerlinsky, whose research into embedded device security, and in particular his talk [4] on breaking CRP on the LPC family of chips, was an invaluable resource during this project.

Test Setup

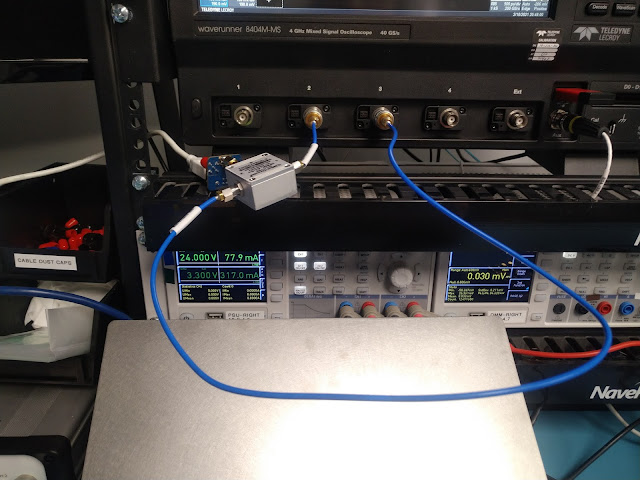

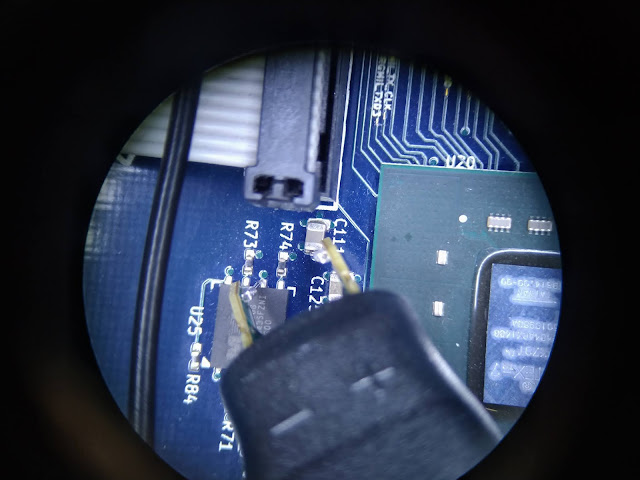

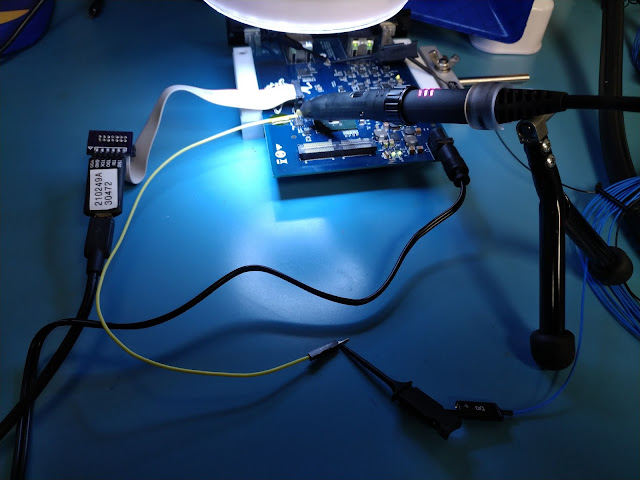

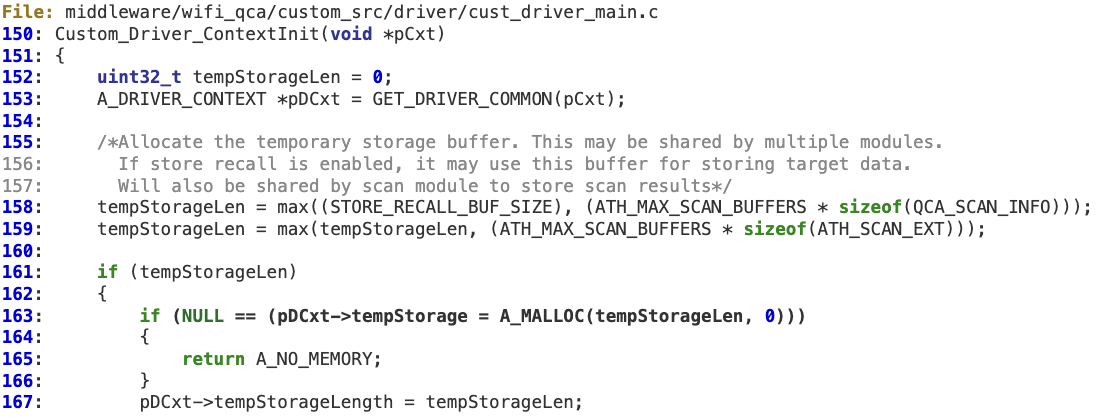

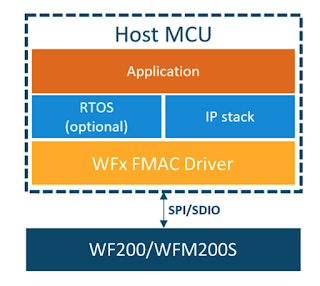

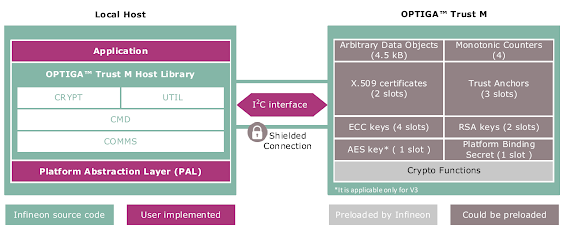

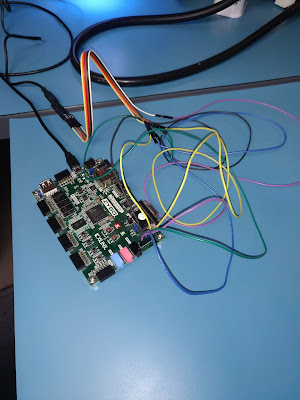

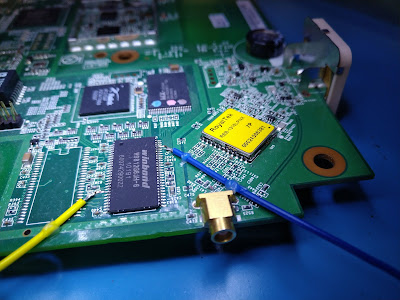

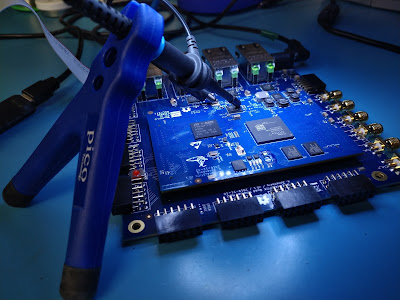

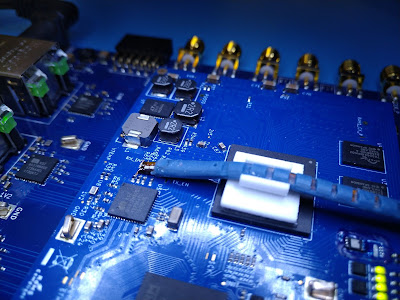

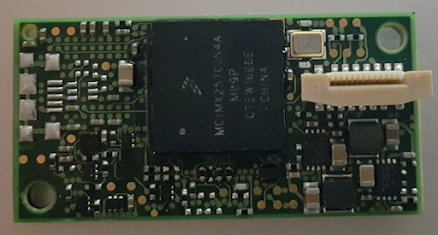

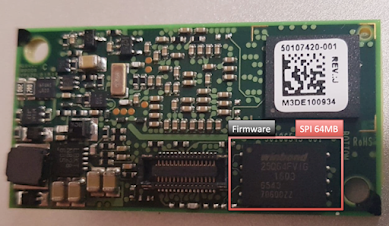

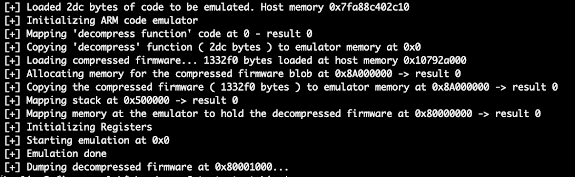

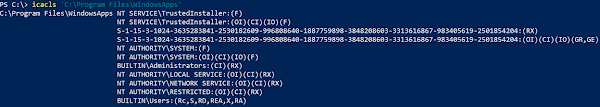

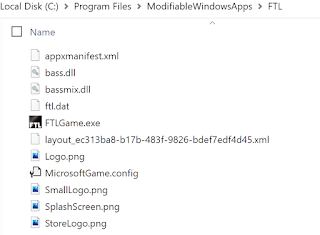

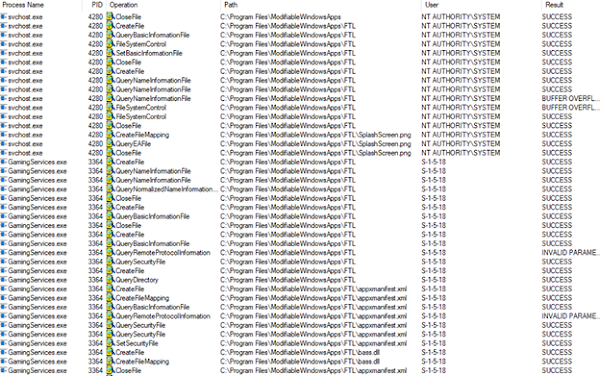

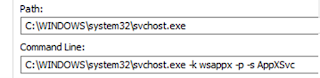

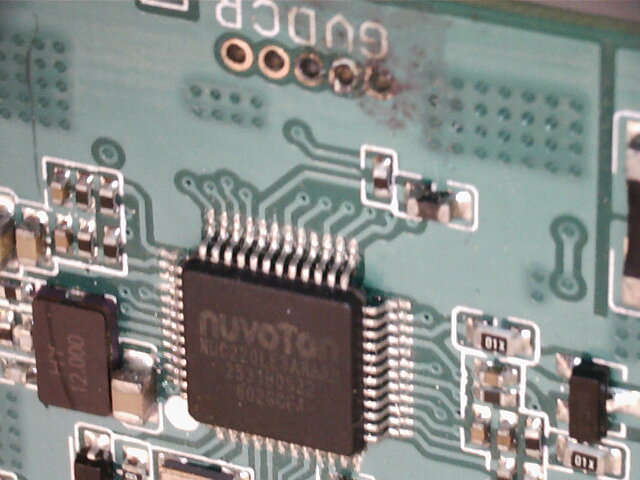

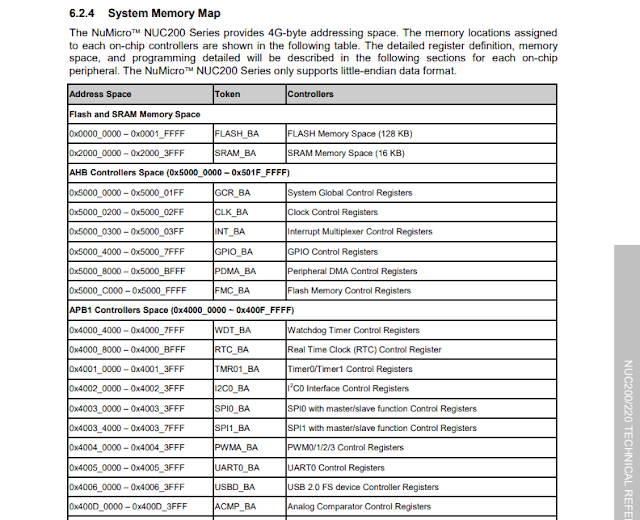

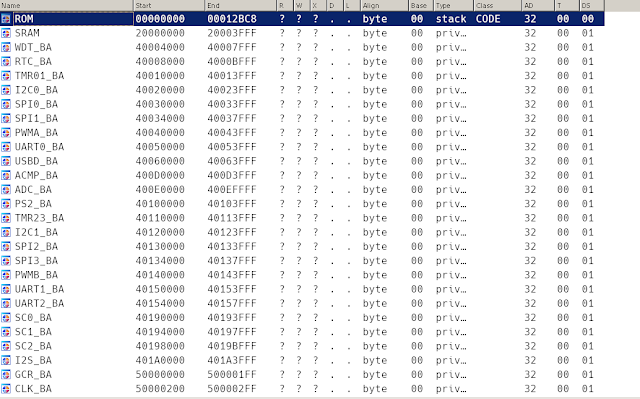

The target device chosen for testing was the NXP LPC1343, an ARM Cortex-M3 microcontroller. In order to control the input target voltage and coordinate glitches, the Digilent Arty A7 development board was used, built around the Xilinx Artix 7 FPGA. Custom gateware was developed for the Arty board, in order to facilitate control and triggering of glitches based on a variety of factors. For the purposes of this article, the two main triggers used are a GPIO line which goes high/low synchronized to certain device operations, and SWD signals corresponding to a “step” event. The source code for the FPGA gateware is available here.

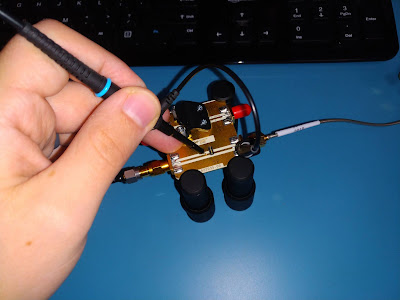

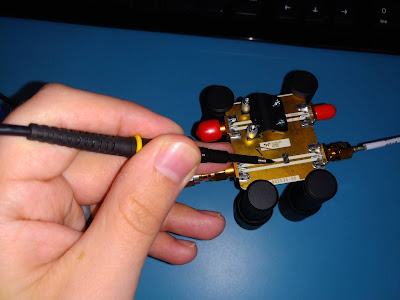

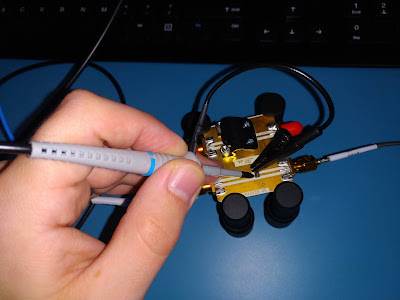

In order to switch between the standard voltage level (Vdd) and the glitch voltage level (Vglitch), a Maxim MAX4617 multiplexer IC was used. It is capable of switching between inputs in as little as 10 ns, and is thus suitable for producing a glitch waveform on the LPC1343 power rails with sufficient accuracy and timing.

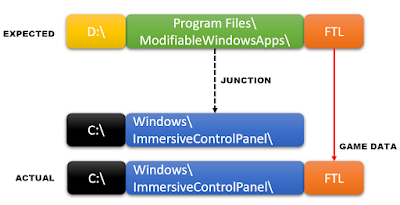

As illustrated in the image above, the Arty A7 monitors a “trigger” line, either a GPIO output from the target or the SWD lines between the target and the debugger, depending on the mode of operation. When the expected condition is met, the A7 will drive the “glitch out” according to a provided waveform specifier, triggering a switch between Vdd and Vglitch via the Power Mux Circuit and feeding that to the target Vcore voltage line. A Segger J-Link was used to provide debug access to the target, and the SWD lines are also fed to the A7 for triggering.

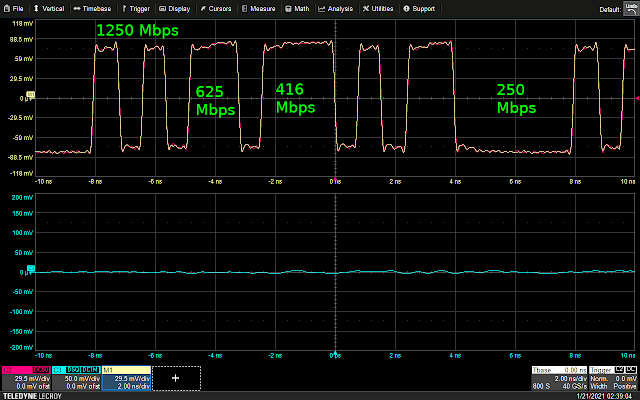

In order to facilitate triggering on arbitrary SWD commands, a barebones SWD receiver was implemented on the A7. The receiver parses SWD transactions sniffed from the bus, and outputs the deserialized header and transaction data, values which can then be compared with a pre-configured target value. This allows for triggering of the glitchOut line based on any SWD data, for example the STEP and RESUME transactions, providing a means of timing glitches for single-stepped instructions.

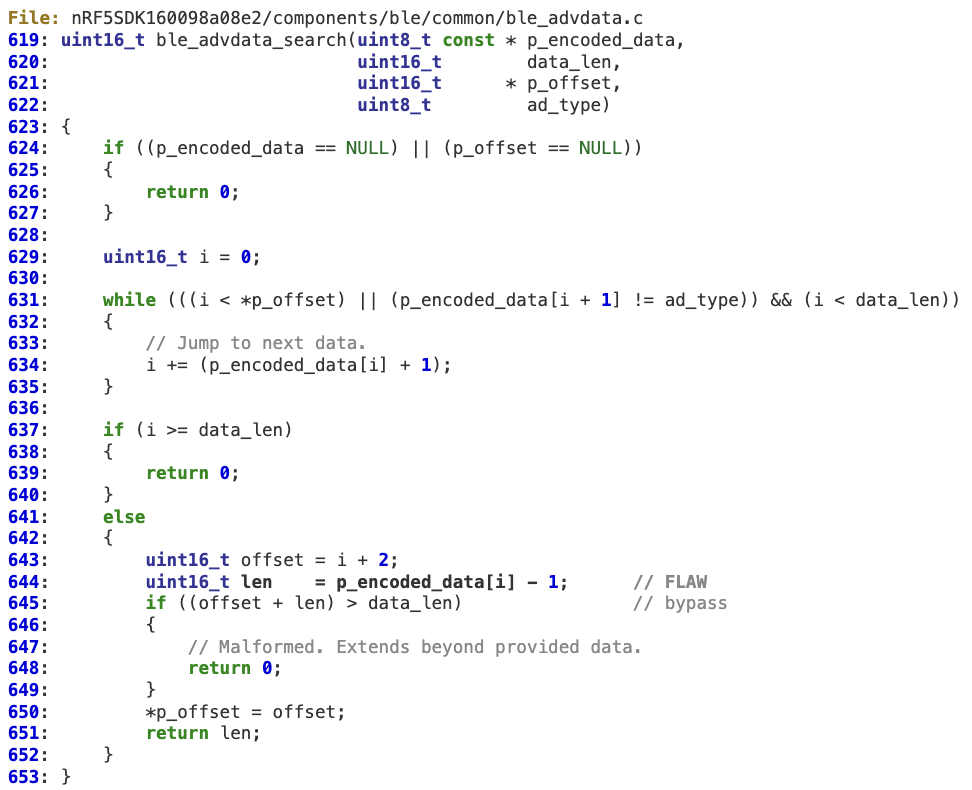

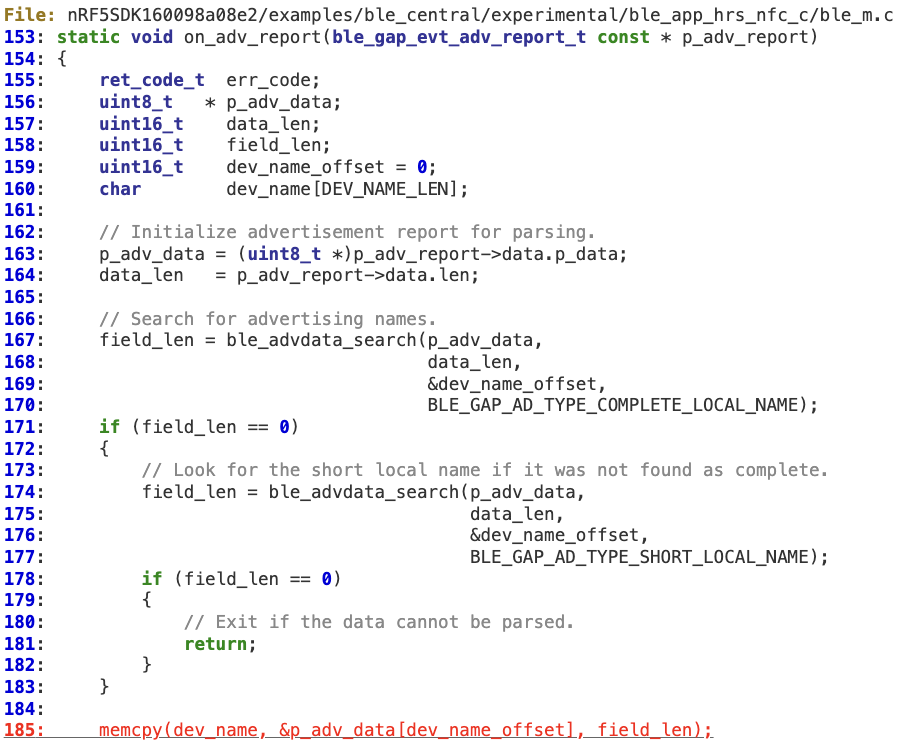

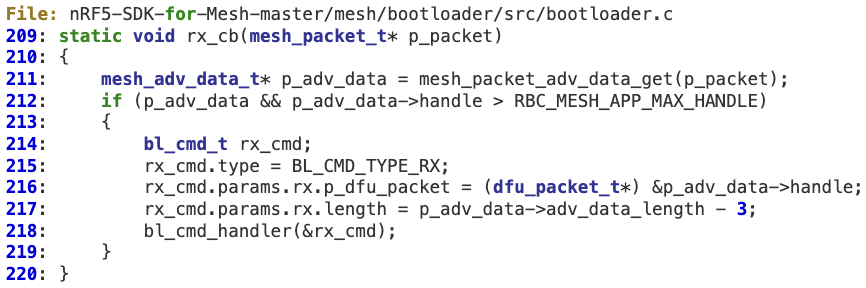

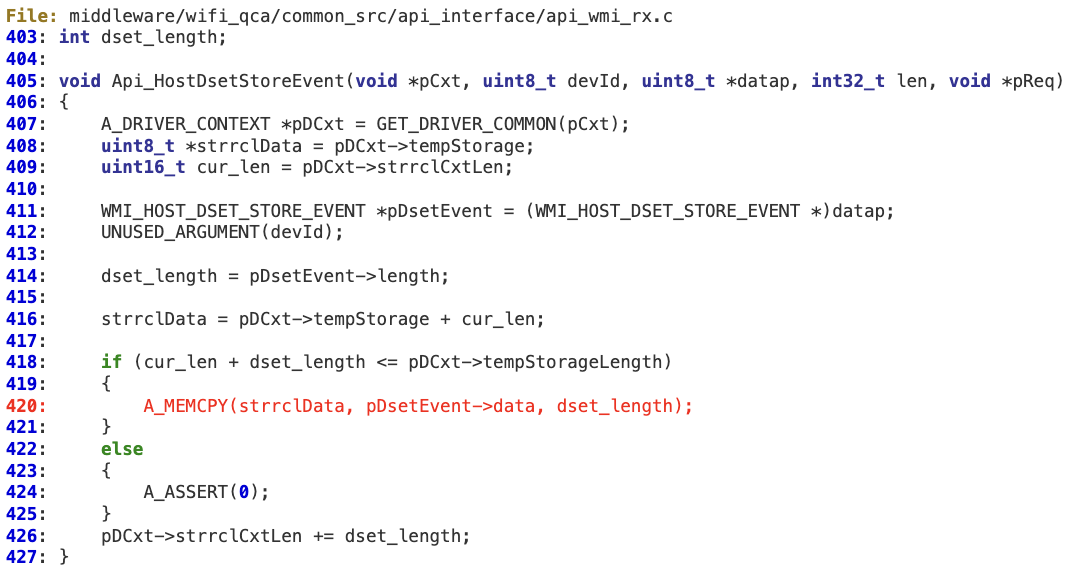

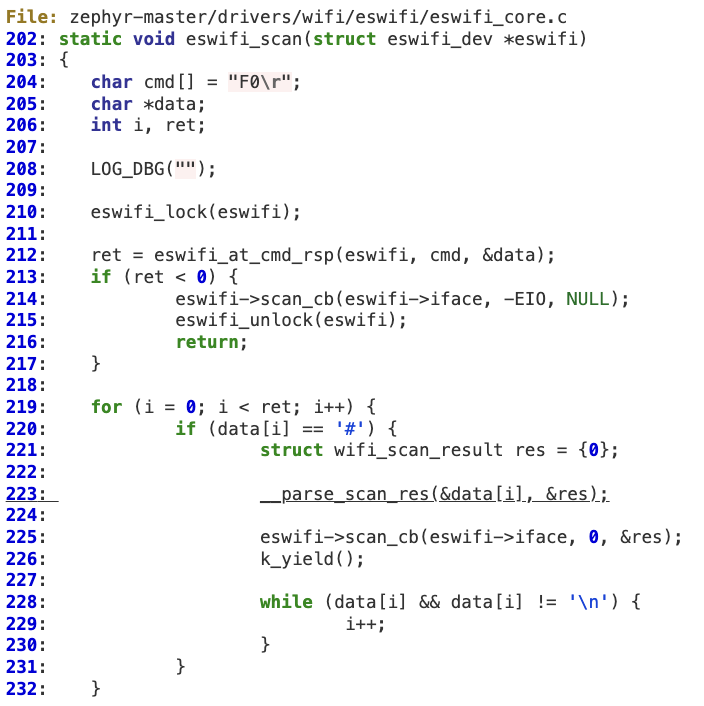

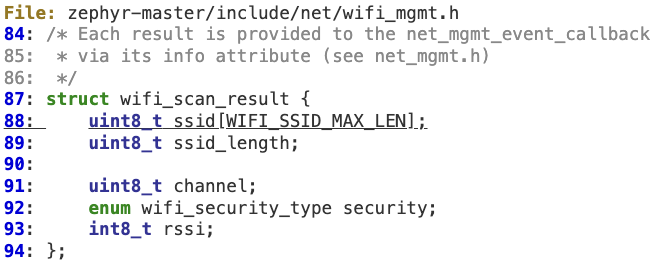

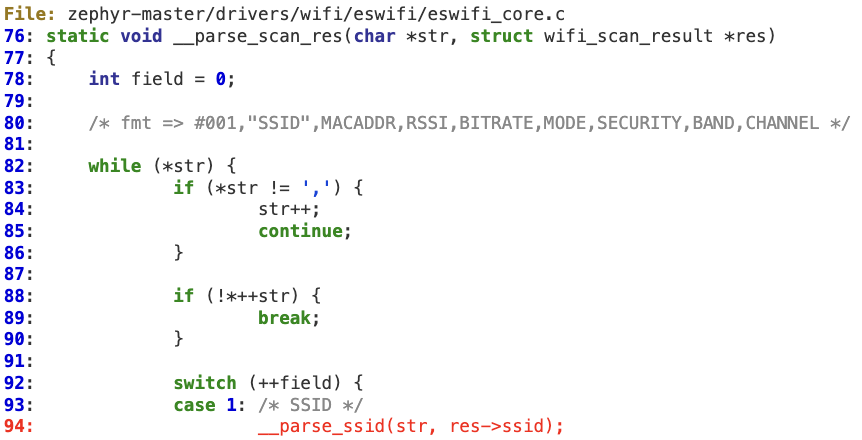

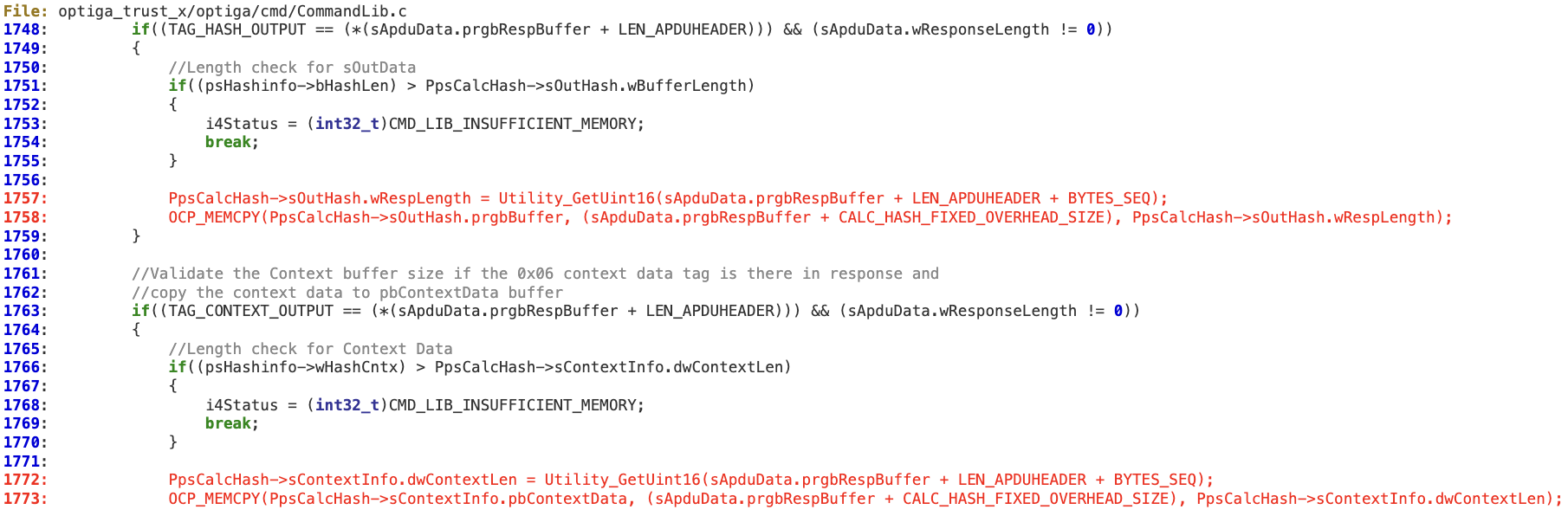

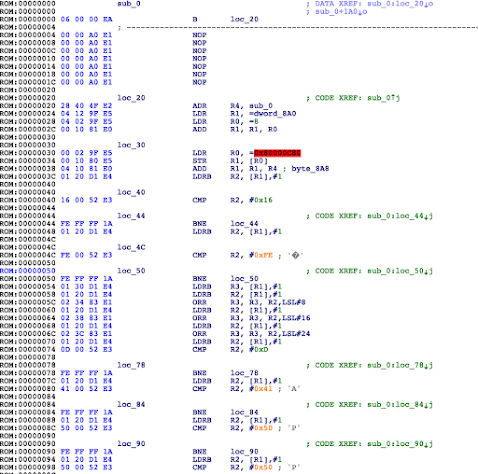

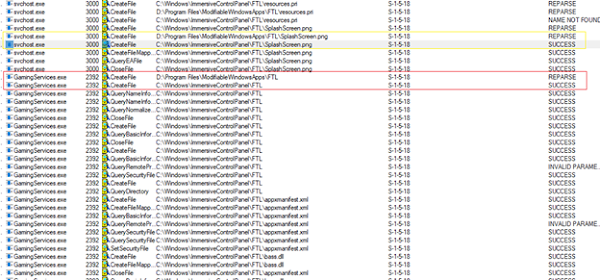

Prior to any direct testing of glitches performed while single-stepping instructions, observing glitches during normal operation and the effects they cause is helpful to provide a base understanding, as well as to provide a platform for making assumptions which can be tested later on. To provide an environment for observing the results of glitches of varied form and duration, program execution consists of a simple loop, incrementing and decrementing two variables. At each iteration, the value of each variable is checked against a known target value, and execution will break out of the loop when either one of the conditions is met. Outside of the loop, the values are checked against expected values and those values are transmitted via UART to the attacking PC if they differ.
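The loop described above can be modeled in a few lines. This is a Python sketch of the logic rather than the firmware itself: the starting values (A at 0, B at 16) are inferred from the results discussed later in the article, and the `skip_increment_at` parameter is a hypothetical stand-in for one possible fault effect.

```python
def run_loop(skip_increment_at=None, iterations=16):
    """Model the target's inner loop; optionally skip one increment of A."""
    a, b = 0, iterations              # assumed starting values
    for i in range(1, iterations + 1):
        if i != skip_increment_at:
            a += 1                    # A counts up
        b -= 1                        # B counts down
        if a == iterations or b == 0:
            break                     # either exit condition ends the loop
    # The firmware only reports over UART when a value is unexpected.
    report = (a, b) if (a, b) != (iterations, 0) else None
    return a, b, report


print(run_loop())                     # unglitched run, nothing reported
print(run_loop(skip_increment_at=6))  # A ends one short and is reported
```

Because either exit condition ends the loop, a fault in one variable does not hang the target: the other variable still terminates the loop, and the mismatch is what gets reported.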

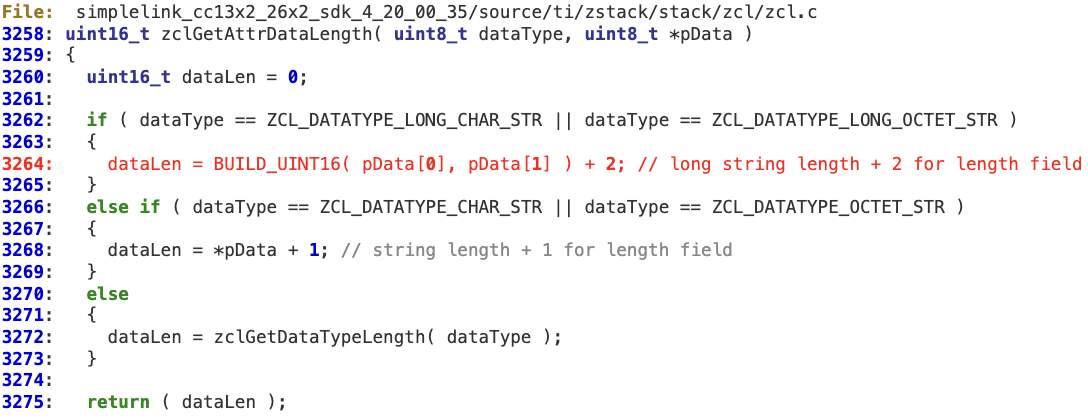

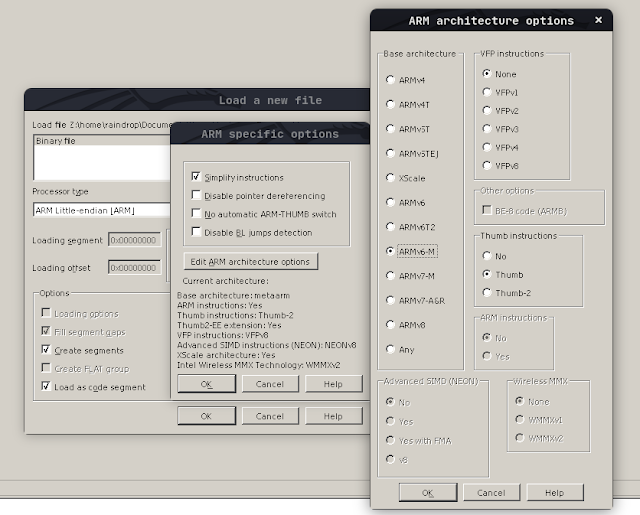

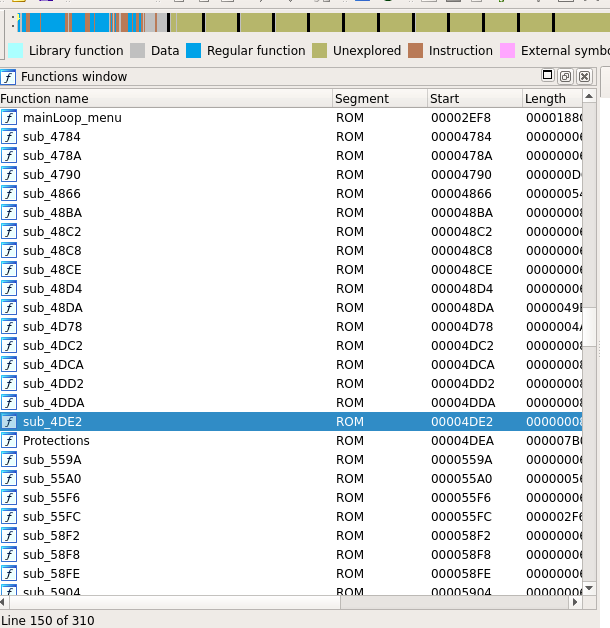

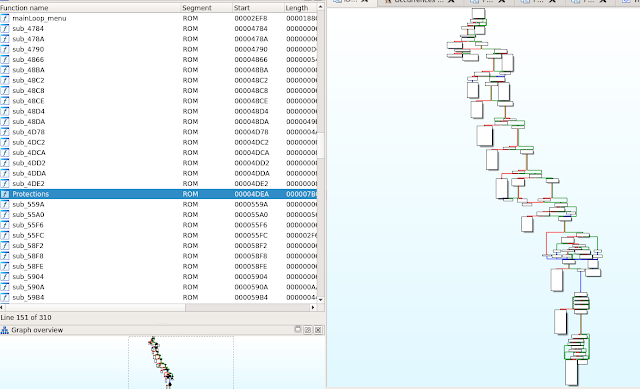

Binary Ninja reverse engineering software was used to provide a visual representation of the compiled C. Because the assembly presented represents the machine code produced after compiling and linking, we can be sure that it matches the behavior of the processor exactly (ignoring concepts like parallel execution, pipelining etc. for now), and lean on that information when making assumptions about timing and processor behavior with regard to injecting faults.

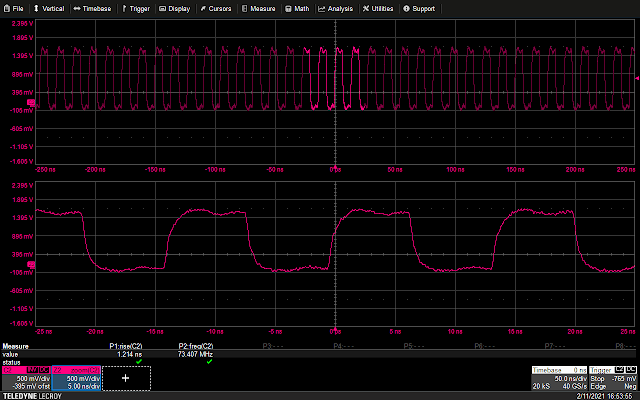

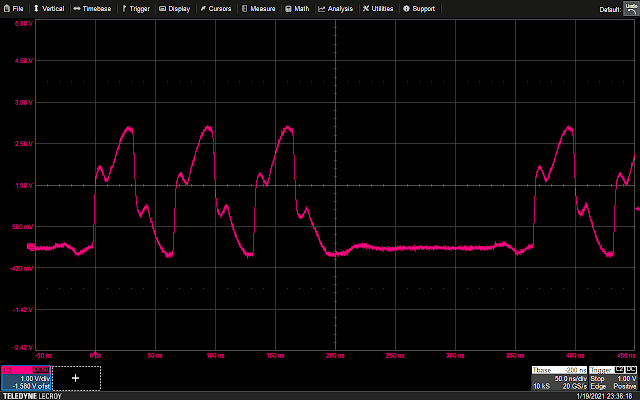

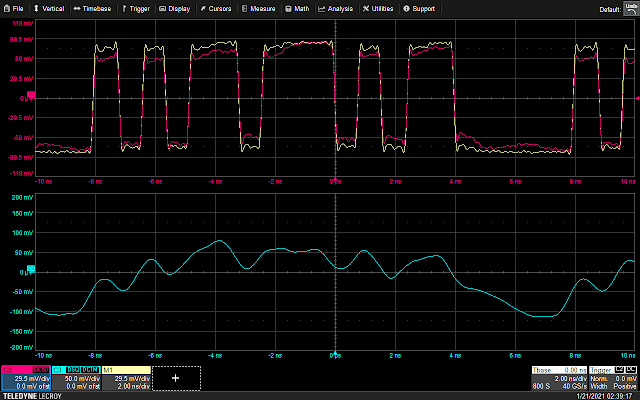

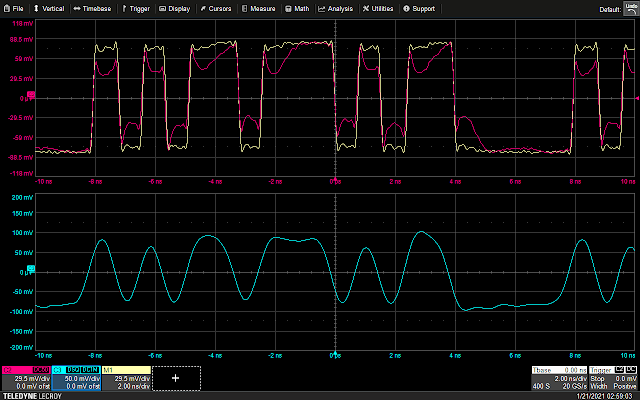

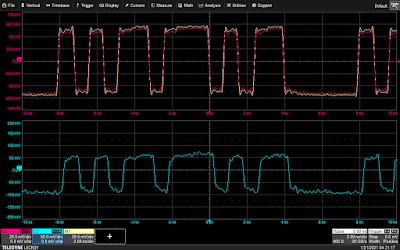

Though simple, this environment provides a number of interesting targets for fault injection. Contained in the loop are memory access instructions (LDR, STR), arithmetic operations (ADDS, SUBS), comparisons, and branching operations. Additionally, the pulse of PIO2_6 provides a trigger for the glitchOut signal from the FPGA – depending on the delay applied to that signal, different areas/instructions in the overall loop may be targeted. By tracing the power consumption of the ARM core with a shunt resistor and transmission line probe, execution can be visualized.

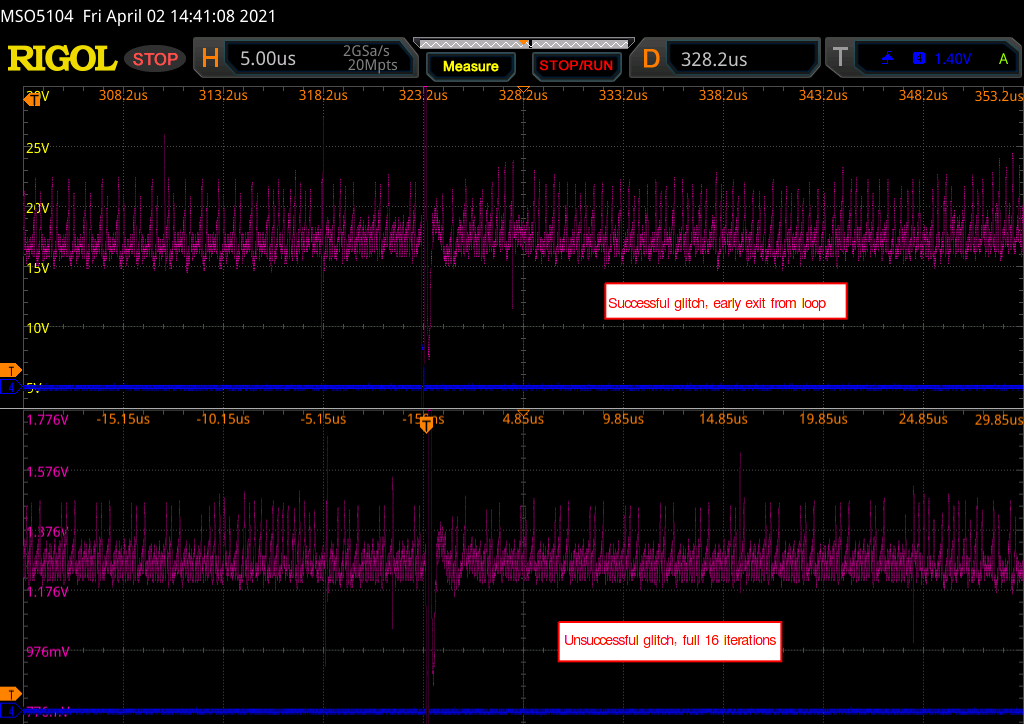

The following waveform shows the GPIO trigger line (blue), and the power trace coming from the LPC (purple). The GPIO line goes high for one cycle then low, signaling the start of the loop. What follows is a pattern which repeats 16 times, representing the 16 iterations of the loop. This is bounded on either side by the power trace corresponding to the code responsible for writing data to the UART, and branching back to the start of the main loop, which is fairly uniform.

We now have:

- A reference of the actual instructions being executed by the processor (the disassembly via Binary Ninja)

- A visual representation of that execution, viewable in real time as the processor executes (via the power trace)

- A means of taking action within the system under test which can be calibrated based on the behavior of the processor (the FPGA glitcher).

Using the above information, it is possible to vary the offset of the glitch from the trigger, and (roughly) correlate that timing to a given instruction or group of instructions being executed. For example, by triggering a glitch sometime during the sixth repetition of the pattern on the power trace, we can observe that that portion of the power trace appears to be cut off early, and the values reported over UART by the target reflect some kind of misbehavior or corruption during the sixth iteration of the loop.

So far, the methodology employed has been in line with traditional fault injection parameter search techniques: optimize for visibility into a system to determine the most effective timing and glitch duration using some behavior baked into device operation (here, a GPIO line pulsing). This provides only coarse insight into the effects of a successfully injected fault. For the above example, we can assume that an operation at some point during the sixth iteration of the loop was altered, but any more specificity is just speculation: it may have been a skipped load instruction, a corrupted store, or a flipped compare, among many other possibilities.

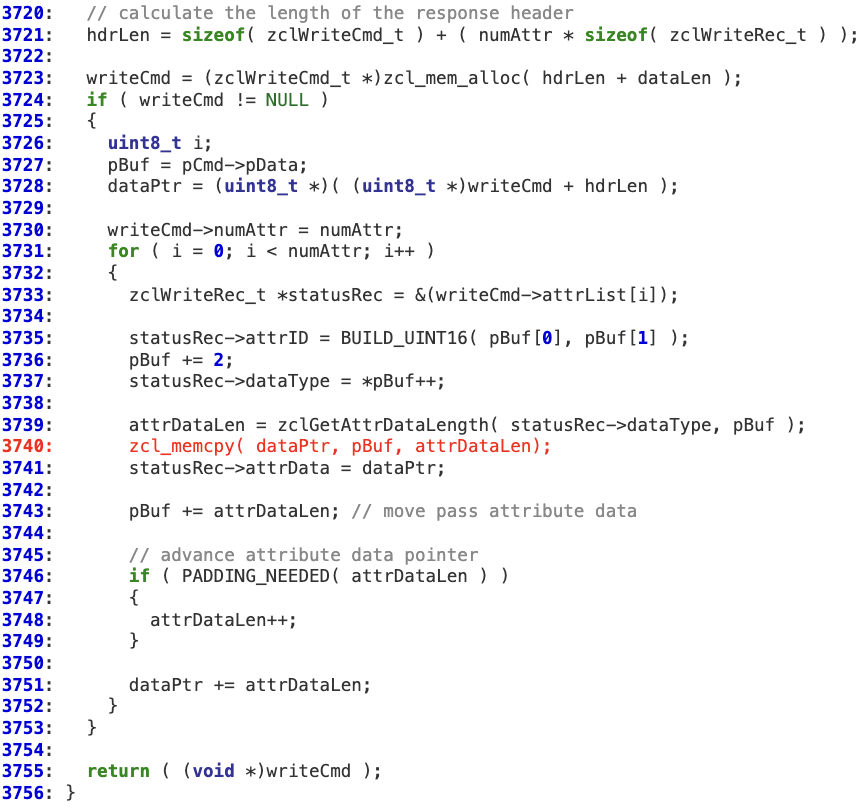

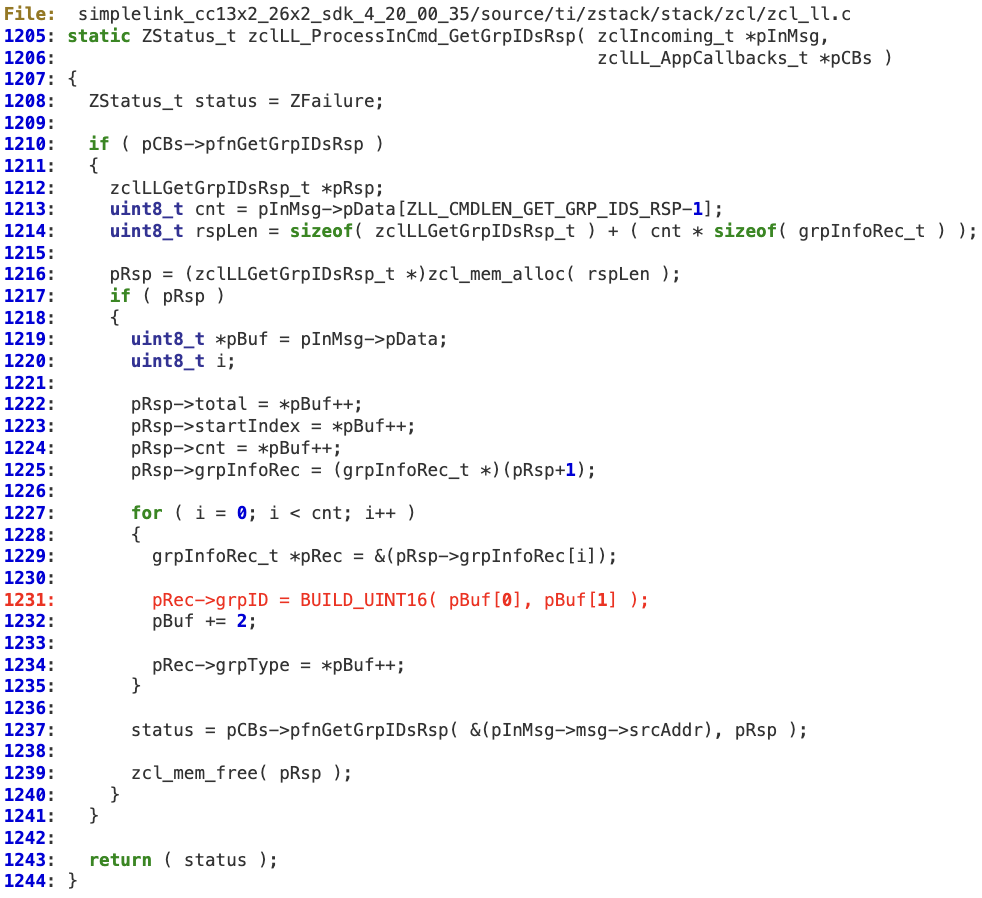

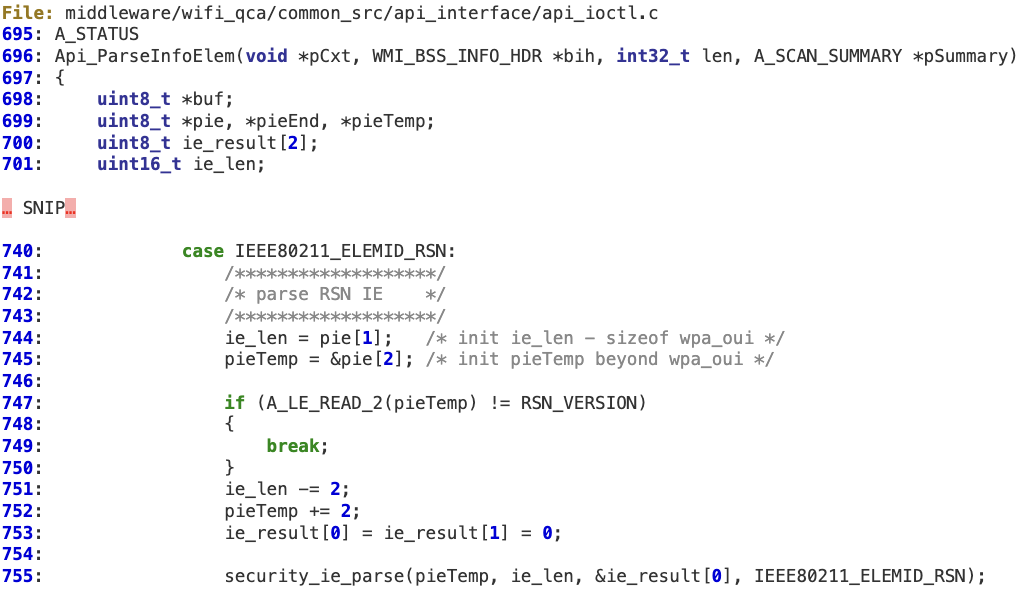

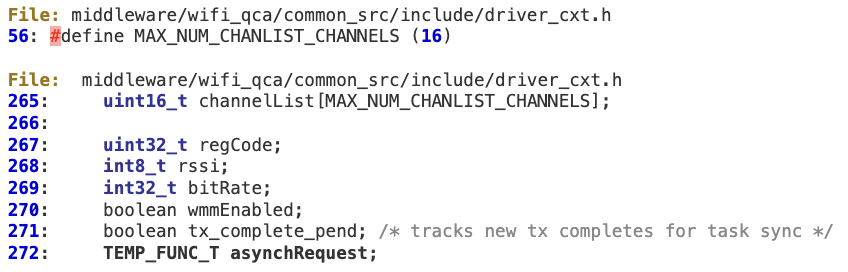

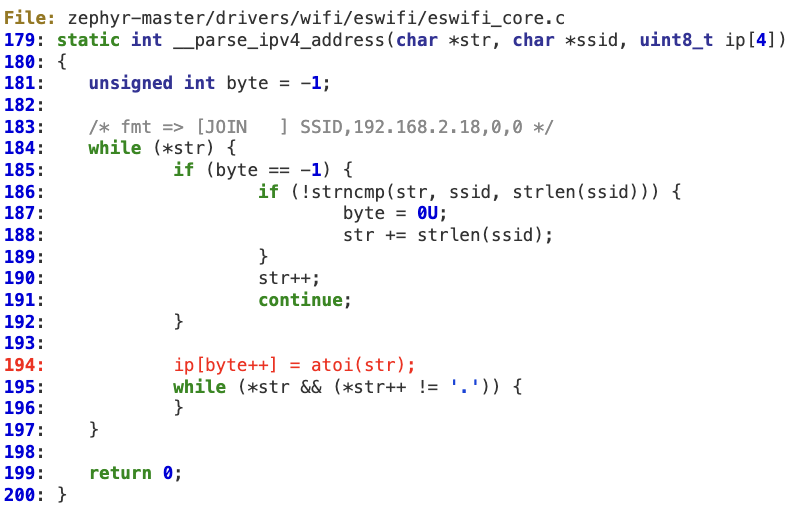

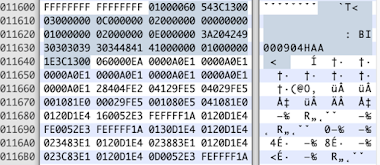

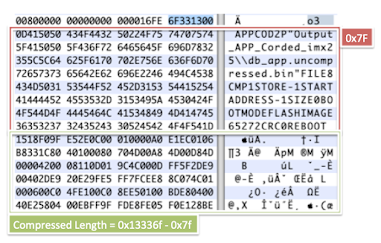

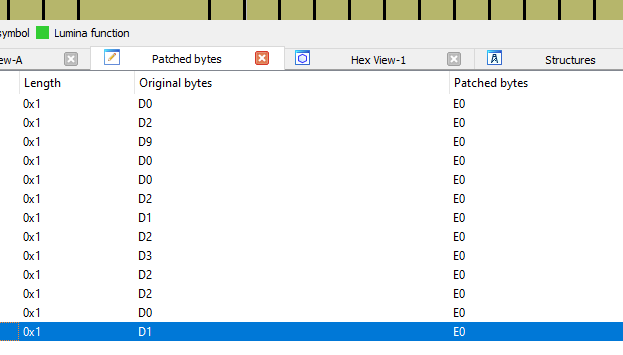

To illustrate this point, the following is the parsed, sorted, and counted output of the UART traffic from the target device, after running the glitch for a few thousand iterations of the outer loop. The glitch delay and duration remained constant, but resulted in a fairly wide spread of discrete effects on the state of the variables at the end of the loop. Some entries are easy to reason about, such as the first and most common result: B is the expected value after six iterations (16 – 6 = 10), but A is 16, and thus a skipped LDR or STR instruction may have left the value 16 in the register, placed there by previous operations. However, other results are harder to reason about, such as the entries containing ASCII text, or entries where the variable with the incorrect value doesn’t appear to correlate to the iteration number of the loop.

This level of vagueness is acceptable in some applications of fault injection, such as breaking out of an infinite loop as is sometimes seen in secure boot bypass techniques. However, for more complex attacks where a particular operation needs to be corrupted in just the right way, greater specificity, and thus a more granular understanding, is a necessity.

And so what follows is the novel portion of the research conducted for this article: creating a methodology for targeting fault injection attacks to single instructions, leveraging debug interfaces such as SWD/JTAG for instruction isolation and timing. In addition to the research value offered by this work, the developed methodology may also have practical applications for devices in the wild under certain, not uncommon circumstances, which will be discussed in a later section.
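For readers who want to produce a similar tally from their own captures, a minimal sketch follows. The `A=... B=...` line format is hypothetical; the actual UART format used by the target is not given here.

```python
from collections import Counter


def tally(lines):
    """Count identical reports and return them most common first."""
    counts = Counter(line.strip() for line in lines if line.strip())
    return counts.most_common()


# Hypothetical captured lines, one report per line.
sample = ["A=16 B=10", "A=16 B=10", "A=5 B=10", "", "A=16 B=10"]
for report, n in tally(sample):
    print(f"{n:5d}  {report}")
```

Sorting by frequency puts the dominant effect of a given delay and duration at the top, which makes the long tail of less explicable results easy to spot.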

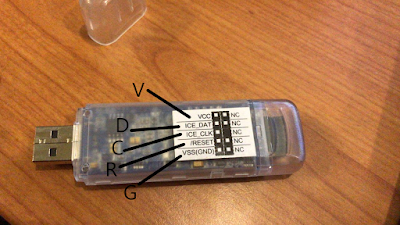

A (Very) Quick Rundown of the SWD protocol

SWD is a debugging protocol developed by ARM and used for debugging many devices, including the Cortex-M3 core in the LPC1343 target board. From the ARM Debug Interface Architecture Specification ADIv5.0 to ADIv5.2:

The Arm SWD interface uses a single bidirectional data connection and a separate clock to transfer data synchronously. An operation on the wire consists of two or three phases: packet request, acknowledgement response, and data transfer.

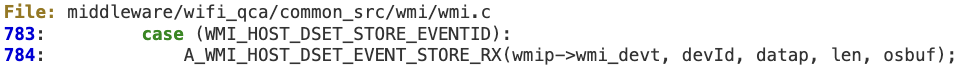

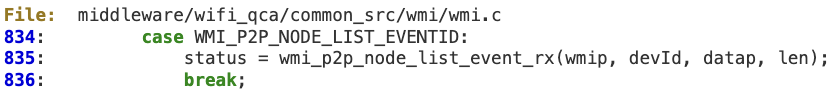

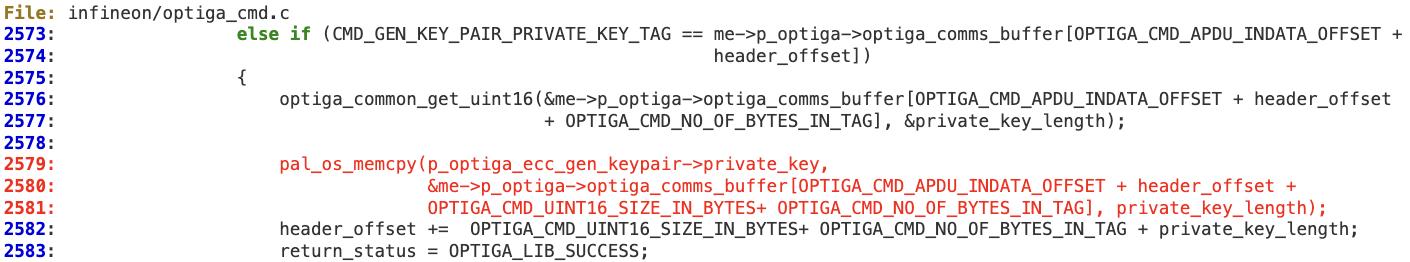

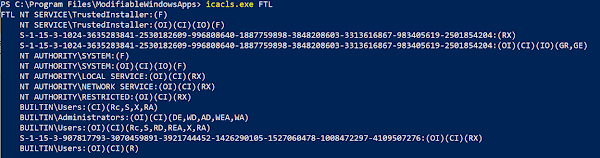

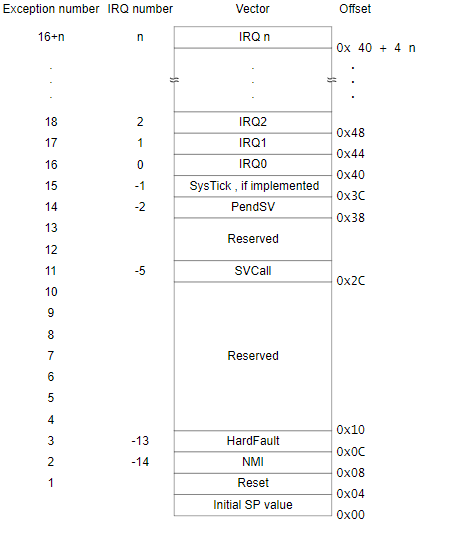

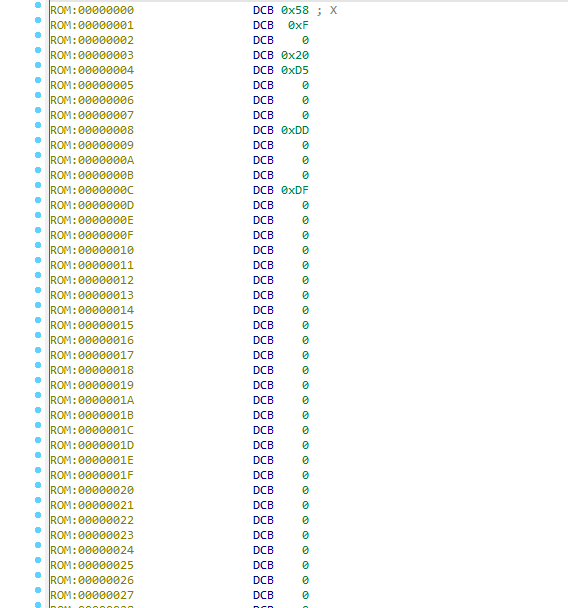

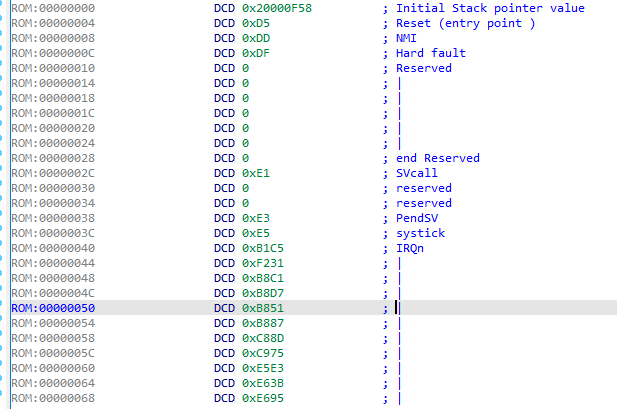

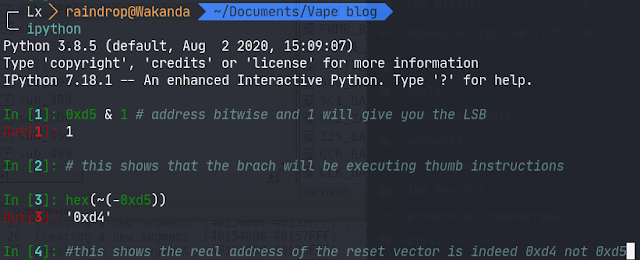

Of course, there’s more to it than that, but for the purposes of this article all we’re really interested in is the data transfer, thanks to a quirk of Cortex-M3 debugging registers: halting, stepping, and continuing execution are all managed by writes to the Debug Halting Control and Status Register (DHCSR). Additionally, writes to this register always carry the key 0xA05F in the upper 16 bits, and only the low 4 bits are used to control the debug state: [MASKINTS, STEP, HALT, DEBUGEN] from high to low. So we can track STEP and RESUME actions by looking for SWD write transactions with the data 0xA05F0001 (RESUME) and 0xA05F000D (STEP).
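The two trigger values can be derived from the bit layout just described. The sketch below is illustrative only: the constant names follow the ARM field names, while `dhcsr_write` and `classify` are hypothetical helpers, not part of the gateware.

```python
DBGKEY = 0xA05F << 16                 # key required in bits [31:16] of a write
C_DEBUGEN = 1 << 0
C_HALT = 1 << 1
C_STEP = 1 << 2
C_MASKINTS = 1 << 3


def dhcsr_write(*bits):
    """Compose a DHCSR write value from the key and the given control bits."""
    value = DBGKEY
    for bit in bits:
        value |= bit
    return value


RESUME = dhcsr_write(C_DEBUGEN)
STEP = dhcsr_write(C_DEBUGEN, C_STEP, C_MASKINTS)


def classify(data):
    """Name the debug action a sniffed 32-bit write payload requests, if any."""
    if data >> 16 != 0xA05F or not (data & C_DEBUGEN):
        return None
    if data & C_HALT:
        return "HALT"
    if data & C_STEP:
        return "STEP"
    return "RESUME"


print(hex(RESUME), hex(STEP), classify(0xA05F000D))
```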

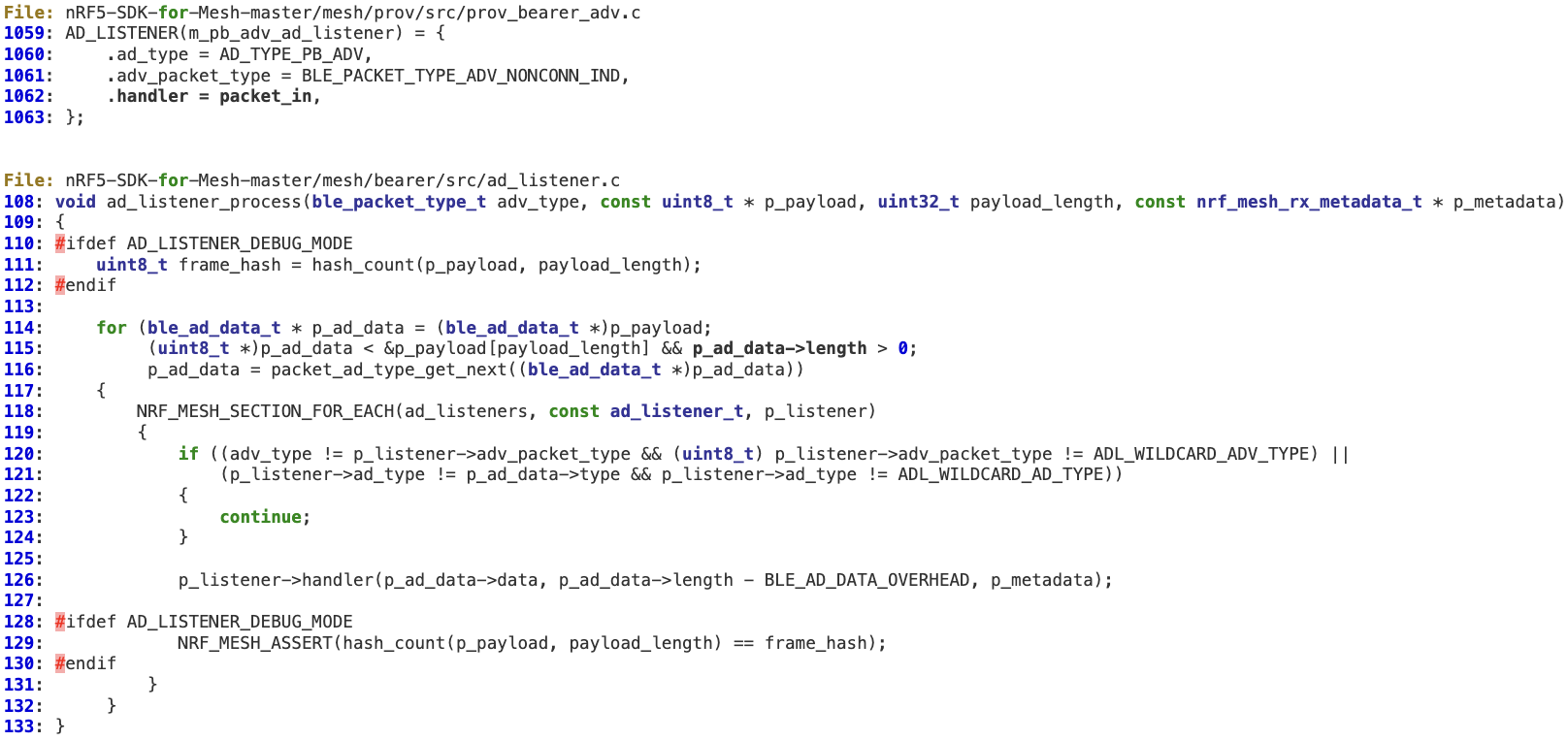

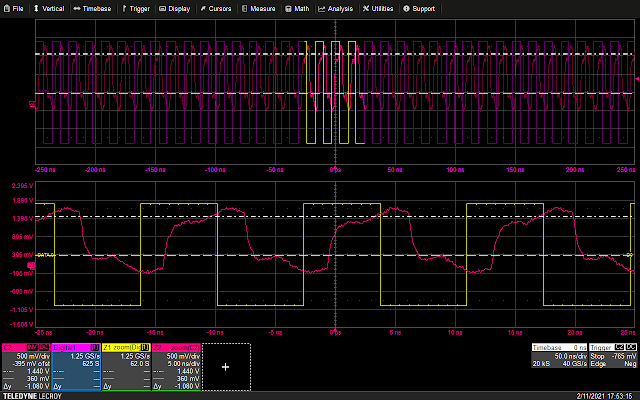

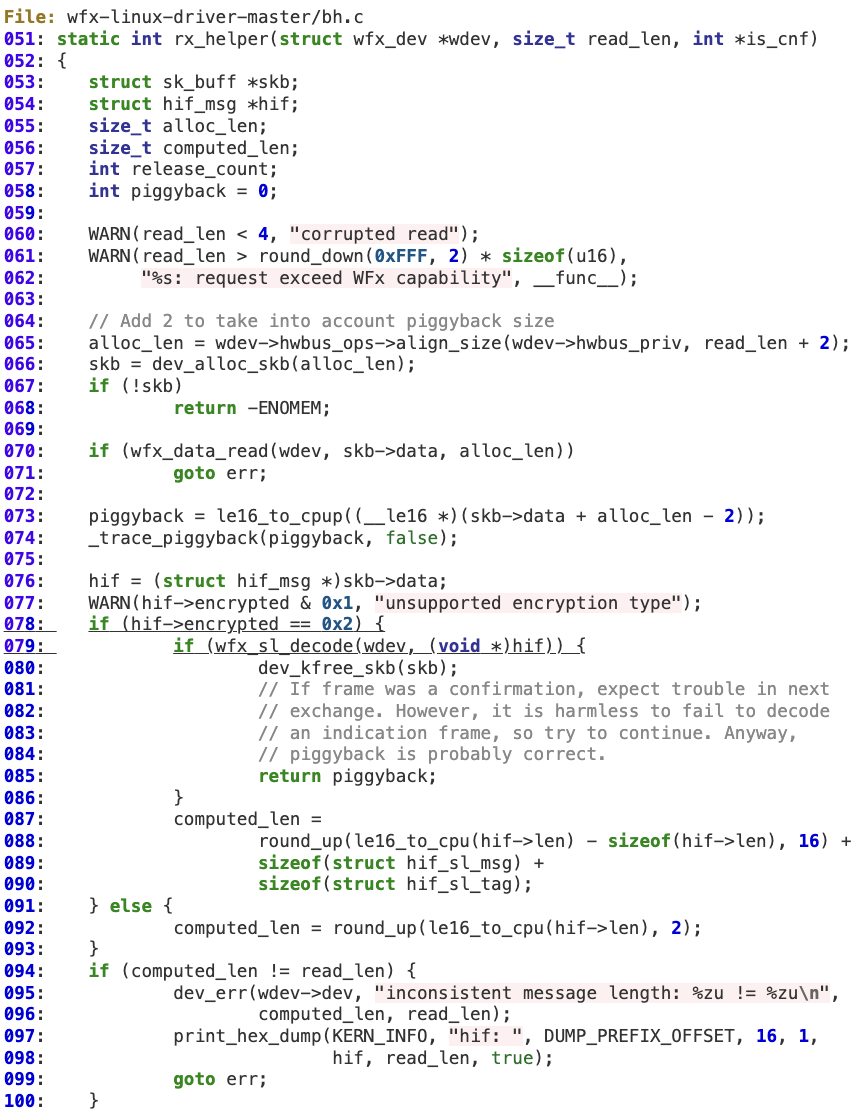

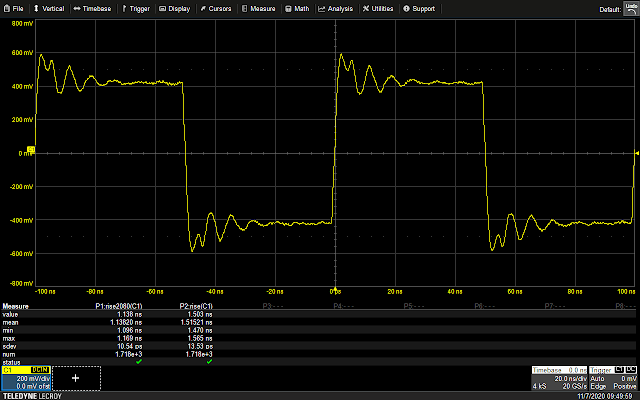

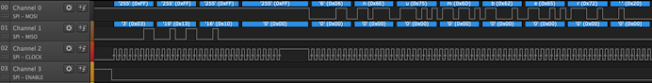

Because of the aforementioned bidirectionality of the protocol, it isn’t as easy as just matching a bit pattern: based on whether a read or write transaction is taking place, and which phase is currently underway, data may be valid on either clock edge. Beyond that, there are also turnaround periods that may or may not be inserted between phases, depending on the transaction. The simplest solution turned out to be just implementing half of the protocol and discarding the irrelevant portions, keeping only the data for comparison. The following is a Vivado ILA trace of the-little-SWD-implementation-that-could successfully parsing the STEP transaction sniffed from the SWD lines.
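To make the phase structure concrete, here is a software model of decoding a single SWD write transaction. It is a sketch and not the gateware: it assumes the bits have already been sampled on the correct clock edges, that turnaround periods are the default single cycle, and that the target answered with an OK acknowledgement. The function names are hypothetical.

```python
def parity(bits):
    """SWD parity bit: 1 when the covered bits contain an odd number of ones."""
    return sum(bits) & 1


def encode_write(apndp, addr, data):
    """Build the wire-order bit list for an SWD write that is ACKed OK."""
    a2, a3 = (addr >> 2) & 1, (addr >> 3) & 1
    # Request: Start, APnDP, RnW (0 = write), A[2], A[3], Parity, Stop, Park
    header = [1, apndp, 0, a2, a3, parity([apndp, 0, a2, a3]), 0, 1]
    ack_ok = [1, 0, 0]                          # ACK = 0b001, sent LSB first
    wdata = [(data >> i) & 1 for i in range(32)]  # payload, LSB first
    # The single 0 entries stand in for undriven turnaround cycles.
    return header + [0] + ack_ok + [0] + wdata + [parity(wdata)]


def decode_write(bits):
    """Return the 32-bit payload of an OK'd write transaction, else None."""
    if len(bits) < 46:
        return None
    start, apndp, rnw, a2, a3, par, stop, park = bits[:8]
    if (start, stop, park) != (1, 0, 1) or rnw != 0:
        return None                             # malformed, or a read
    if par != parity([apndp, rnw, a2, a3]):
        return None
    if bits[9:12] != [1, 0, 0]:                 # skip turnaround at index 8
        return None
    wdata = bits[13:45]                         # skip turnaround at index 12
    if bits[45] != parity(wdata):
        return None
    return sum(bit << i for i, bit in enumerate(wdata))


frame = encode_write(apndp=1, addr=0x0C, data=0xA05F000D)
print(hex(decode_write(frame)))
```

Only the decoded payload is needed for triggering, which is why discarding everything except the data phase is sufficient for matching STEP and RESUME.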

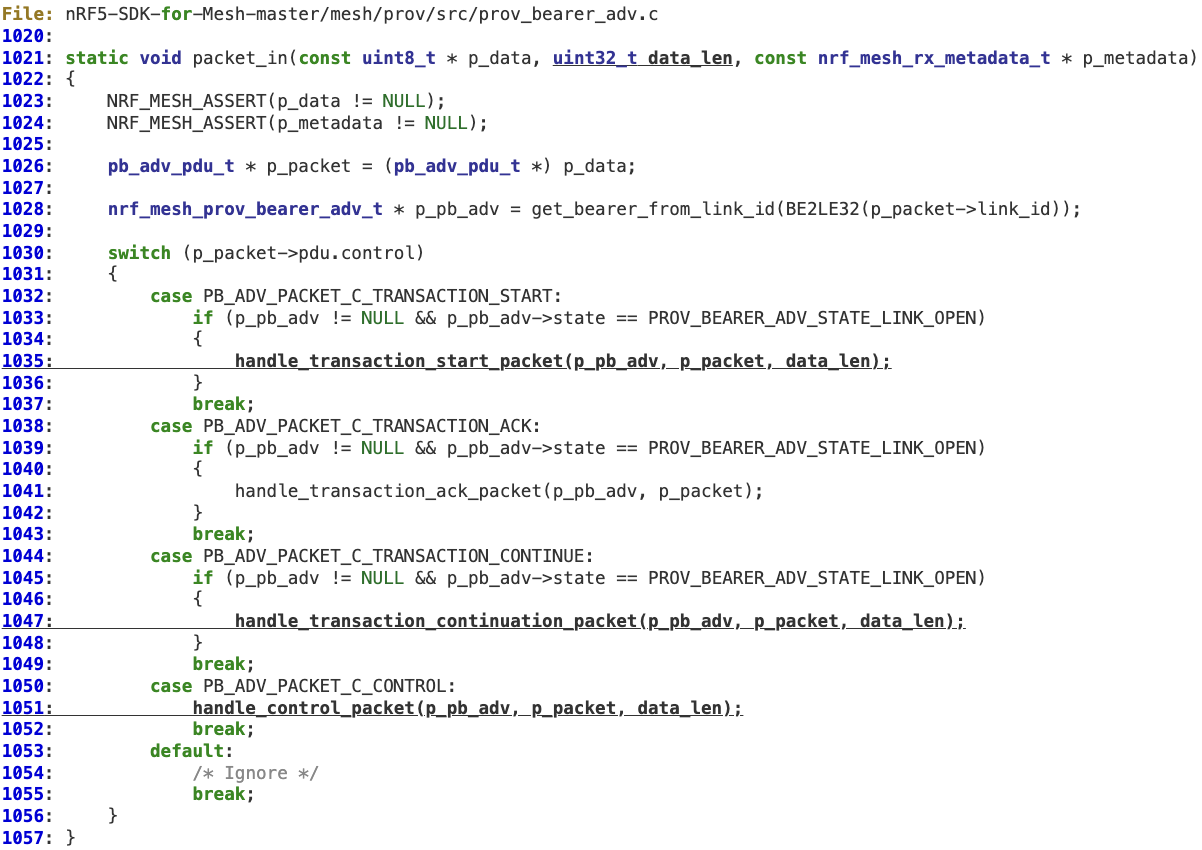

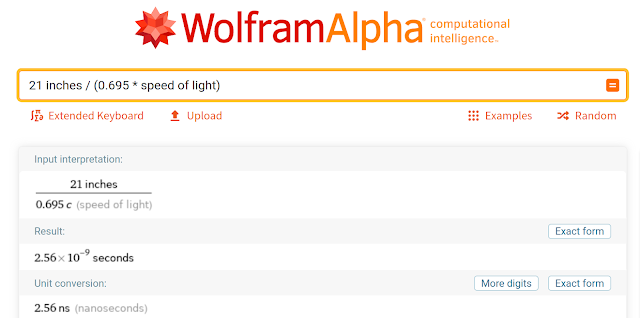

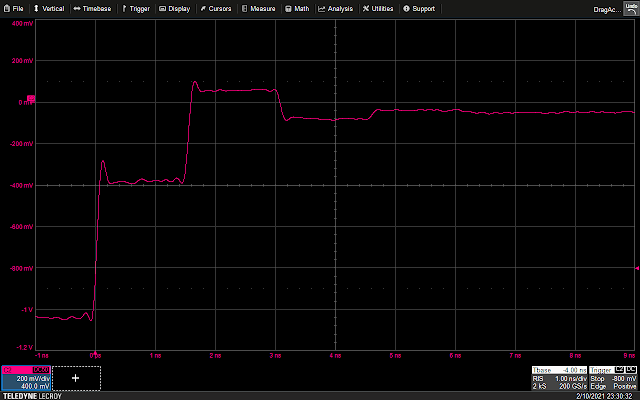

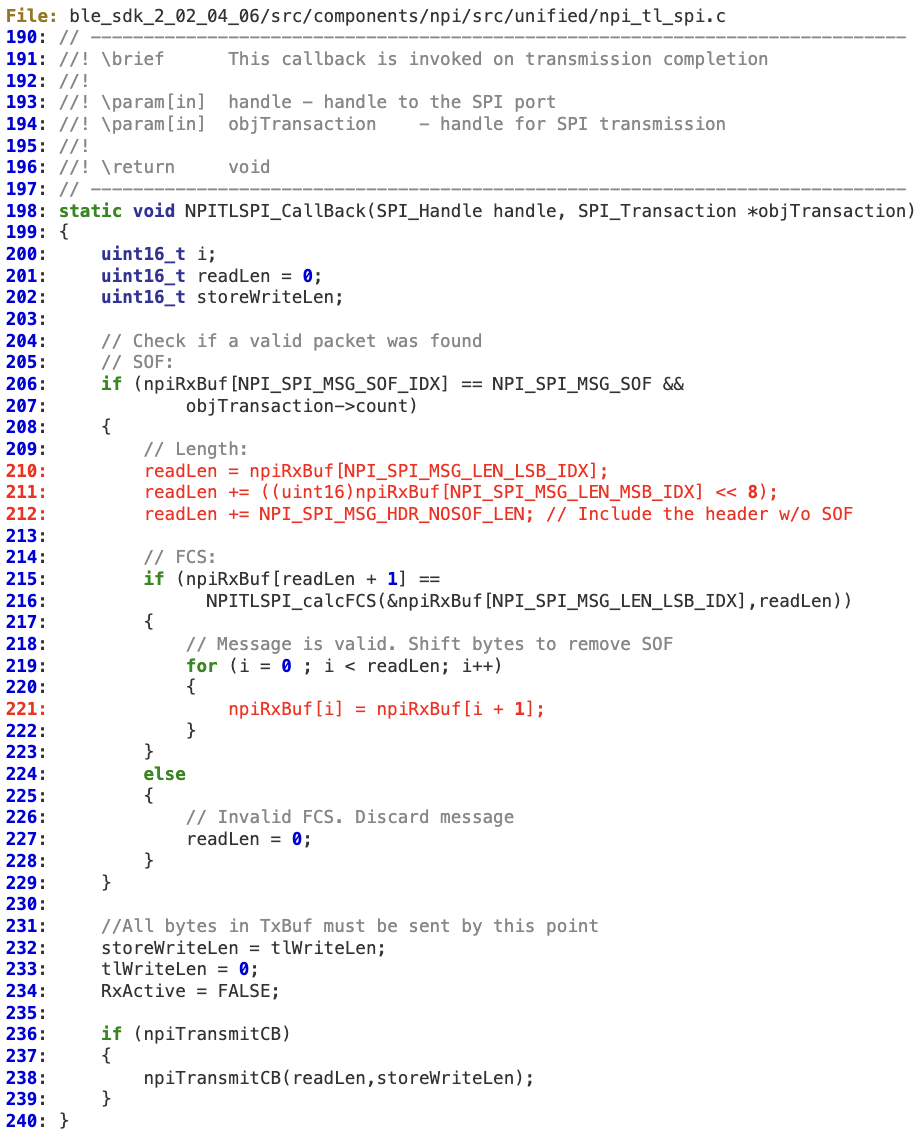

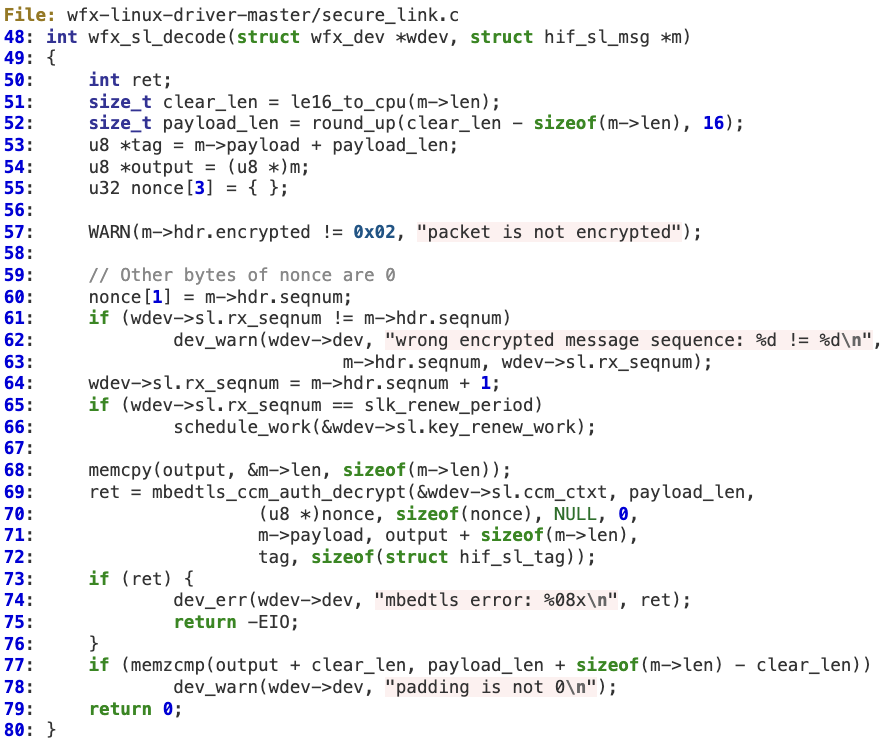

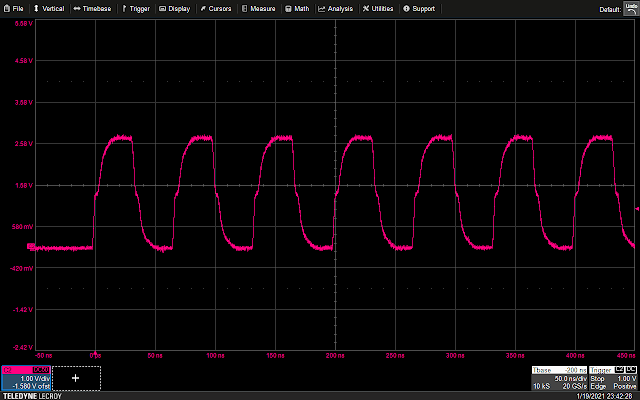

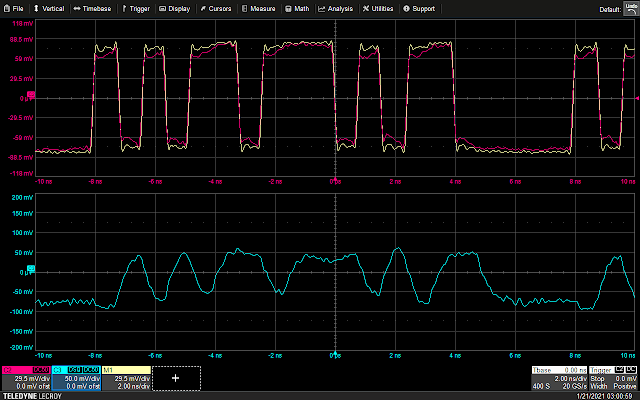

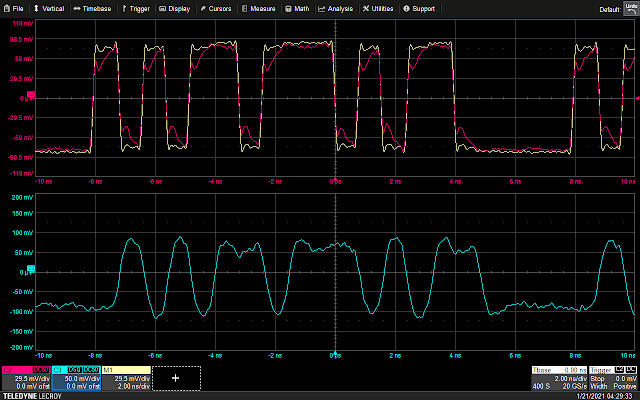

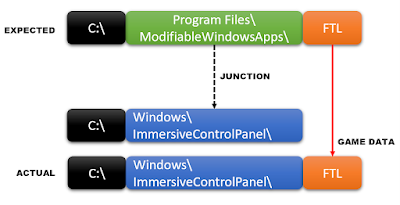

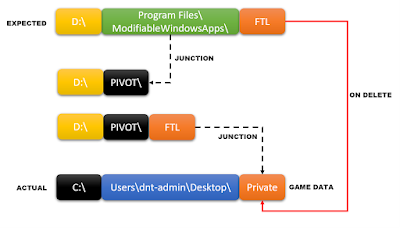

Isolating Instructions

So, by single stepping an instruction and sniffing the SWD lines from the A7, it is possible to trigger a glitch the instant (or very close to, within 10ns) the data is latched by the target board’s debug machinery. Importantly, because the target requires a few trailing SWCLK cycles to complete whatever actions the debug probe requires of it, there is plenty of wiggle room between the data being latched and the actual execution of the instruction. And indeed, thanks to the power trace, there is a clear indication of the start of processor activity after the SWD transaction completes.

As can be seen above, there is a delay of somewhere in the neighborhood of 4us, an eternity at the 100MHz of the A7. By delaying the glitch to various offsets into the “bump” corresponding to instruction execution, we can finally do what we came here to do: glitch a single-stepping processor.
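The arithmetic behind that "eternity" is simple: at 100 MHz each FPGA clock tick is 10 ns, so a 4 µs gap is roughly 400 ticks of delay to work with. The sketch below converts a delay to ticks and generates a sweep of offsets across the bump; the 0.5 µs window and 10-tick step are hypothetical values chosen for illustration.

```python
FPGA_CLK_HZ = 100_000_000   # Arty A7 clock rate cited in the text


def us_to_ticks(microseconds, clk_hz=FPGA_CLK_HZ):
    """Convert a delay in microseconds to a whole number of FPGA clock ticks."""
    return round(microseconds * clk_hz / 1_000_000)


def delay_sweep(start_us, window_us, step_ticks):
    """List glitch delays (in ticks) covering a window after the trigger."""
    first = us_to_ticks(start_us)
    last = us_to_ticks(start_us + window_us)
    return list(range(first, last + 1, step_ticks))


print(us_to_ticks(4))
print(delay_sweep(4.0, 0.5, 10))
```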

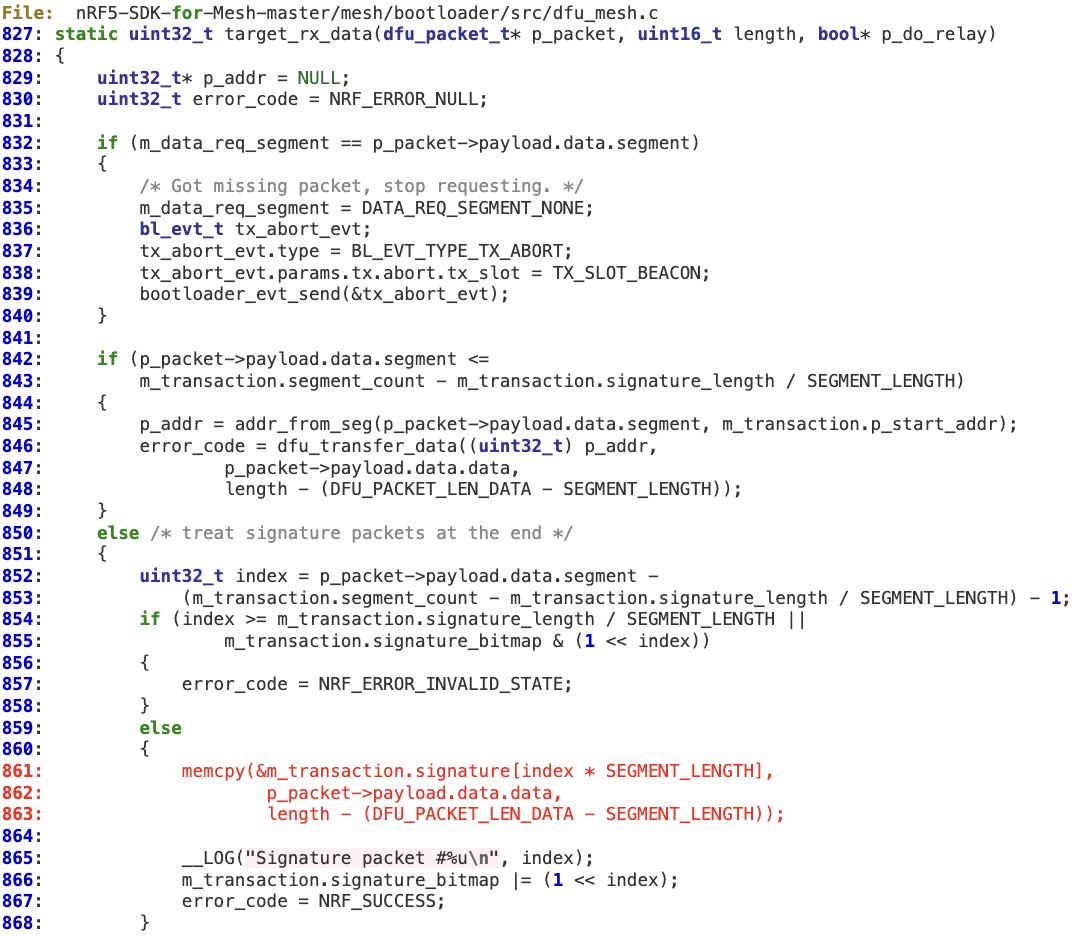

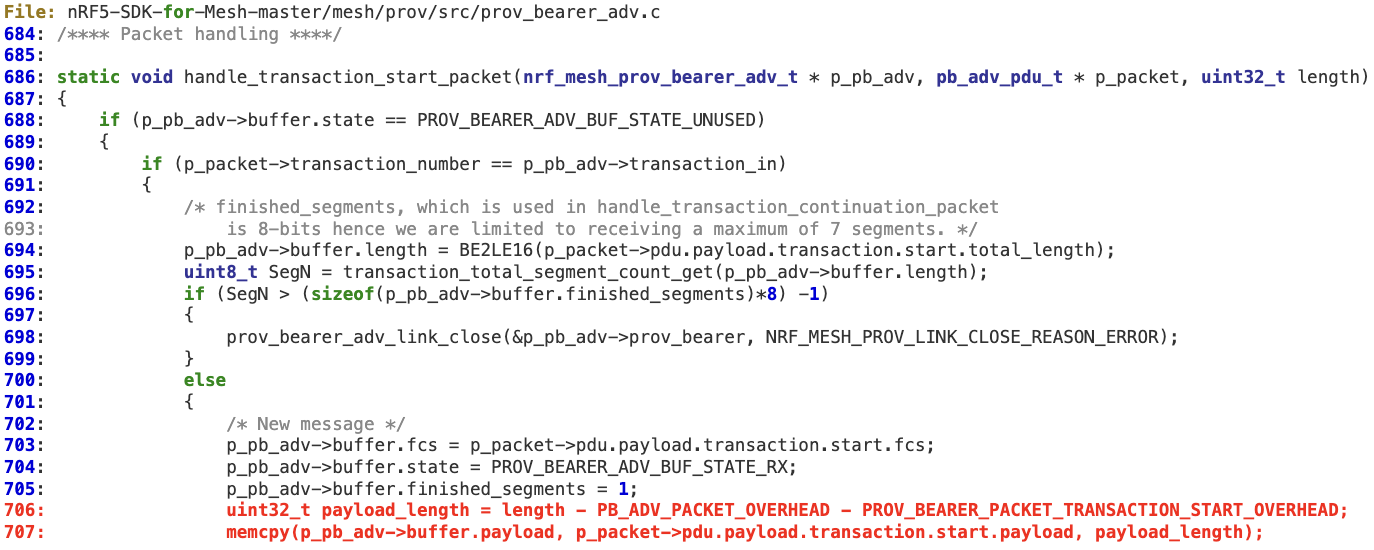

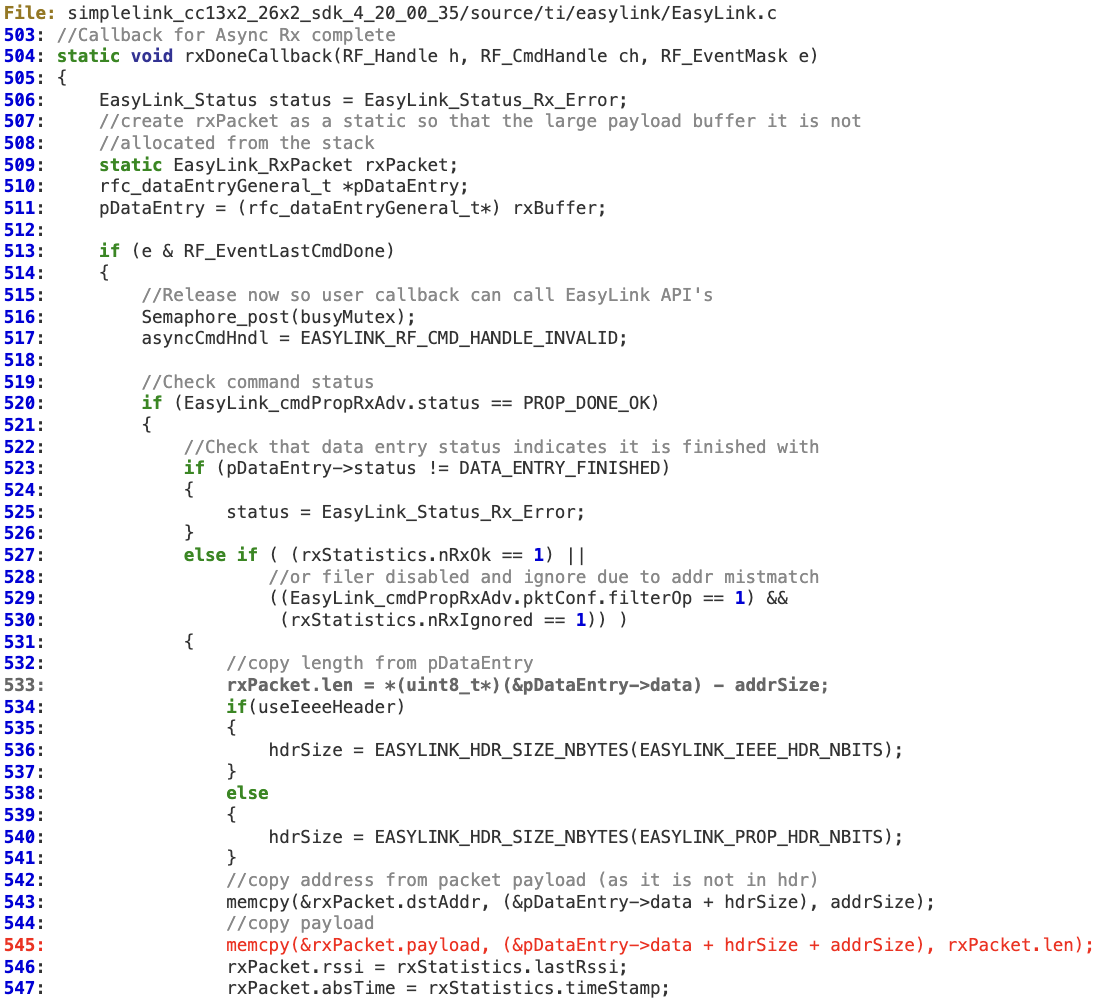

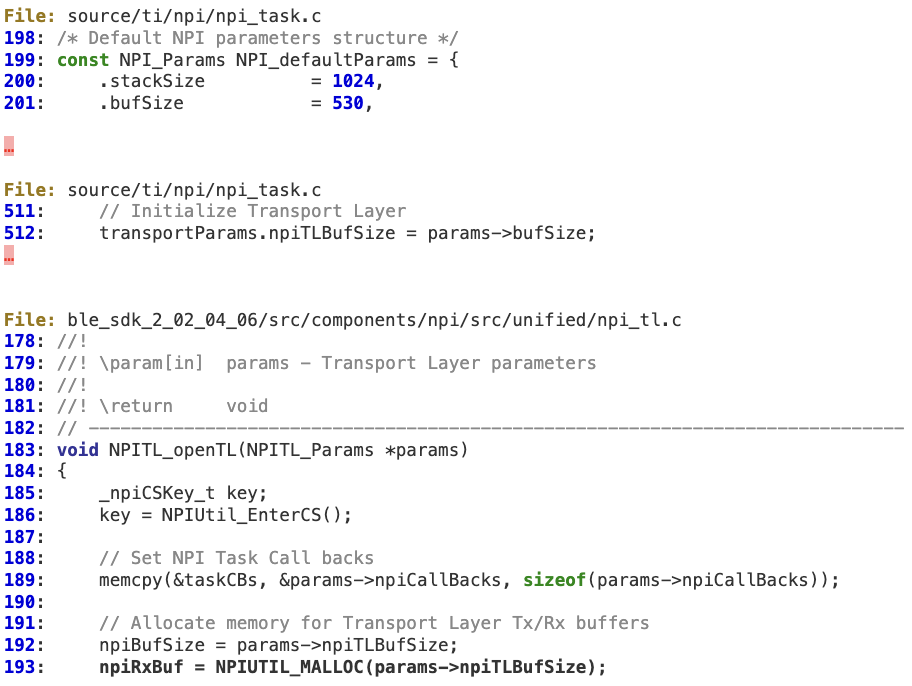

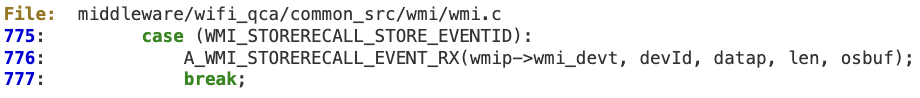

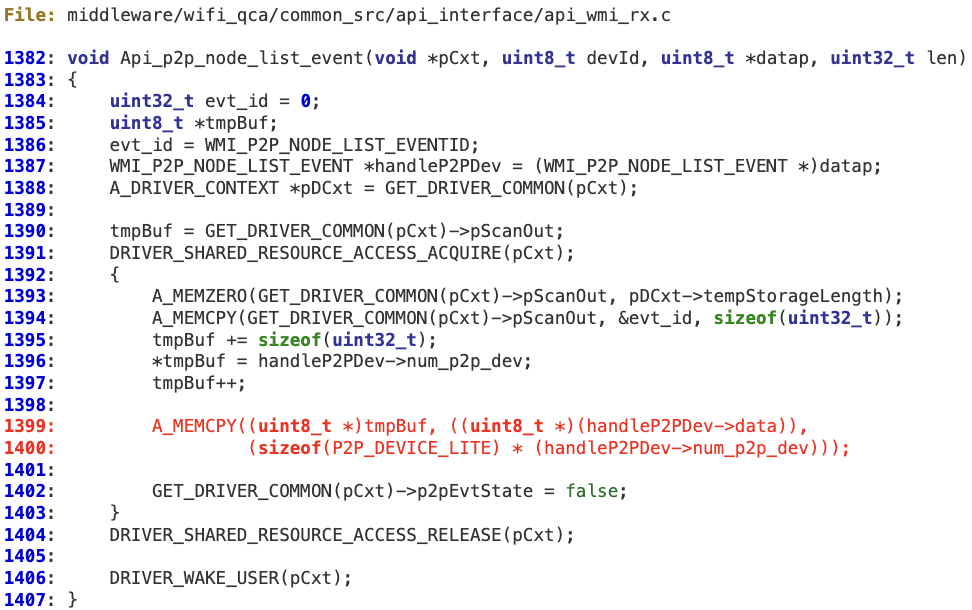

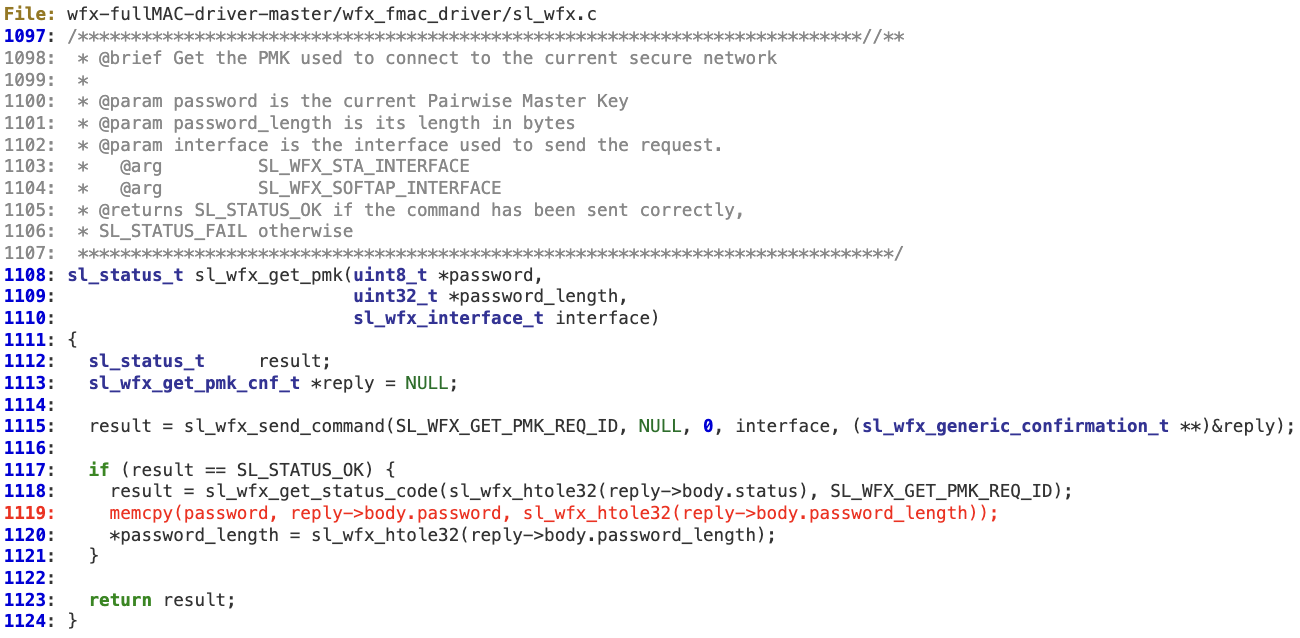

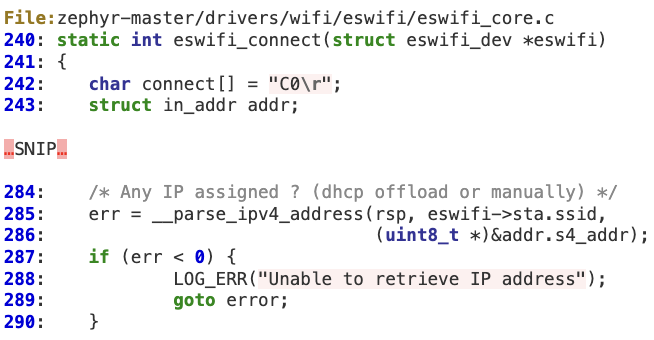

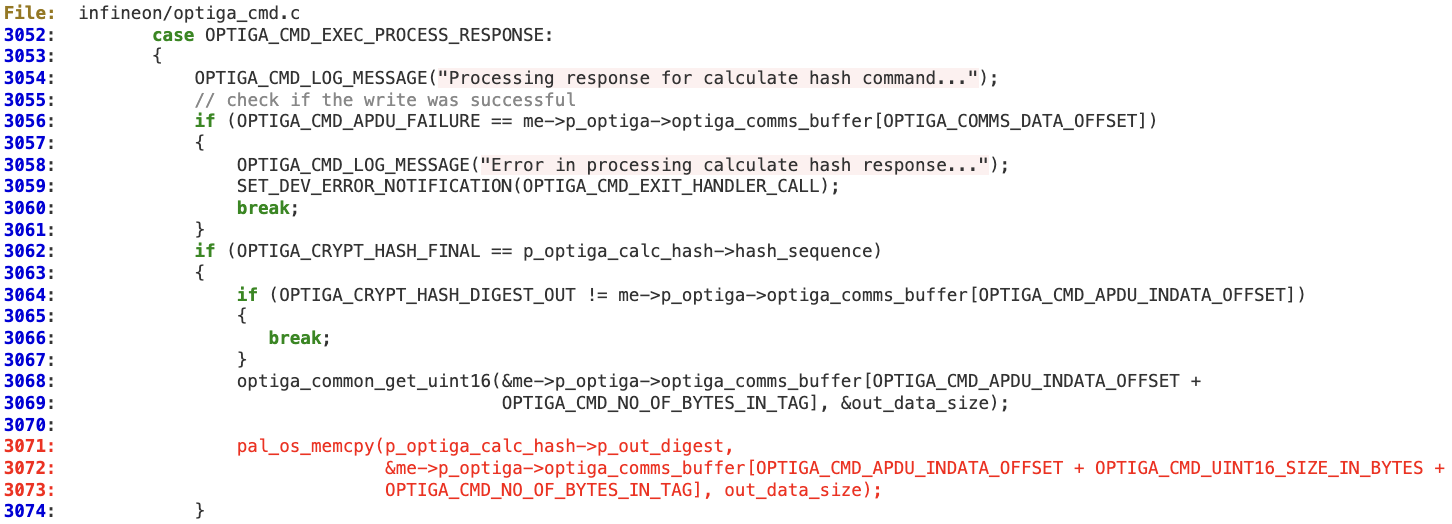

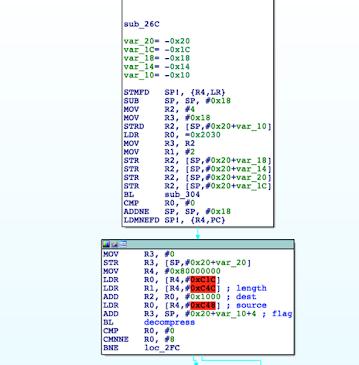

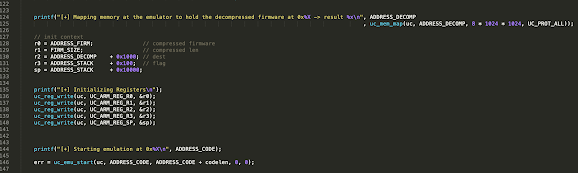

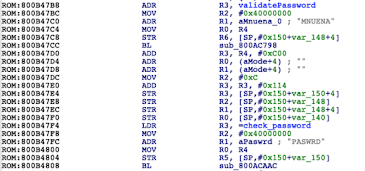

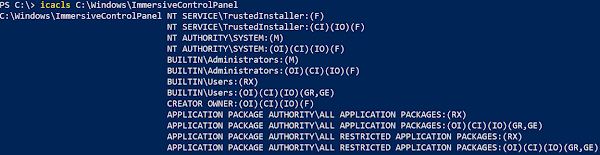

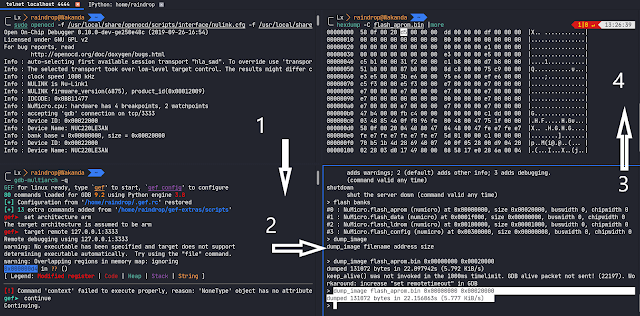

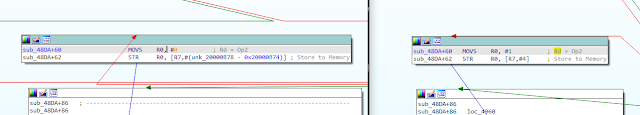

In order to produce a result more interesting than “look, it works!” a simple script was written to manage the behavior of the debugger/processor via OpenOCD. The script has two modes: a “fast” mode, which single steps as fast as the debugger can keep up and is used for finding the correct timing and waveform for glitches, and a (painfully) “slow” mode, which inspects registers and the stack before and after each glitch event, highlighting any unexpected behavior for perusal. Almost immediately, we can see some interesting results glitching a load register instruction in the middle of the innermost loop: in this case, a LDR r3, [sp] which loads the previous value of the A variable into r3, to be incremented in the next instruction.

We can see that nothing has changed, suggesting that the operations simply didn’t occur or finish: a skipped instruction. This reliably leads to an off-by-one discrepancy in the UART output from the device: either A or B ends up one less or one greater than it should be at the end of the loop, because one of the inc/dec operations was acting on data which is not actually associated with the state of the A variable.
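The before/after comparison performed in the “slow” mode can be sketched as a simple dictionary diff. This is not the author's script: how the register values are read from the debugger is left out, and the register names and values in the example are hypothetical.

```python
def diff_state(before, after):
    """Return {name: (old, new)} for every register whose value changed."""
    return {r: (before[r], after[r]) for r in before if before[r] != after[r]}


def classify_step(before, after, expected_changes):
    """Label one single-step as skipped, suspicious, or as expected."""
    changed = diff_state(before, after)
    if not changed:
        return "no change (possible skipped instruction)"
    unexpected = sorted(set(changed) - set(expected_changes))
    if unexpected:
        return "unexpected change: " + ", ".join(unexpected)
    return "as expected"


# Hypothetical snapshots around a glitched LDR r3, [sp].
before = {"r1": 0x0, "r3": 0x5, "pc": 0x2D0}
print(classify_step(before, dict(before), {"r3", "pc"}))
print(classify_step(before, {"r1": 0x10, "r3": 0x5, "pc": 0x2D2}, {"r3", "pc"}))
```

The sketch only flags registers that changed when they should not have; an expected change that failed to happen alongside other changes would need an extra check.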

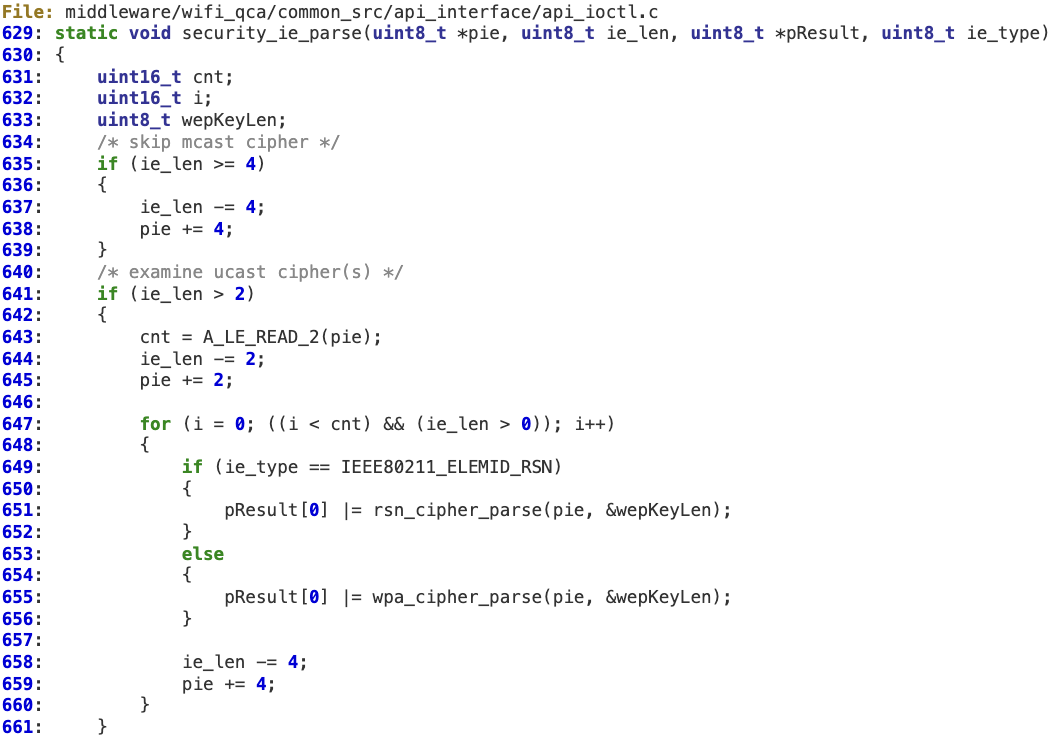

Interestingly, this research shows that the effectiveness of fault injection is not limited only to instructions which access memory (LDR, STR, etc.), but can also be used to affect the execution of arithmetic operations, such as ADDS and CMP, or even branch instructions (though whether the instructions themselves are being corrupted or the corruption is occurring in the APSR flags by which branches are decided requires further study). In fact, no instruction tested for this article proved impervious to single-step-glitching, though the rate of success did vary depending on the instruction.

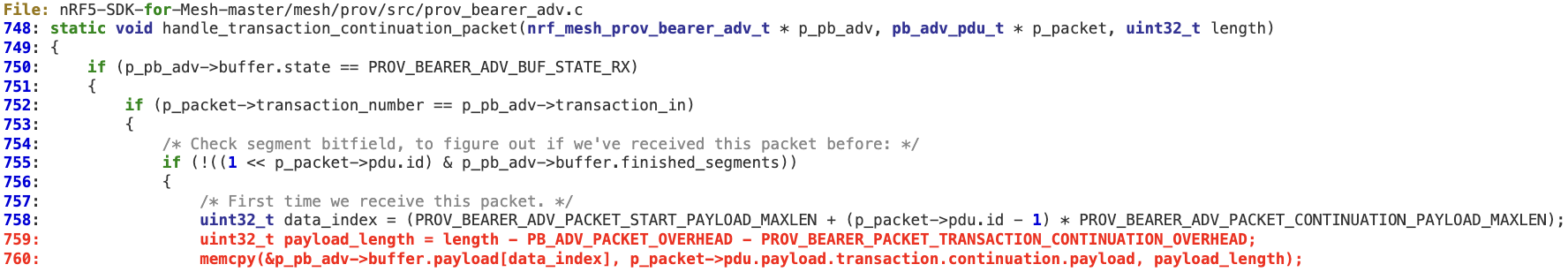

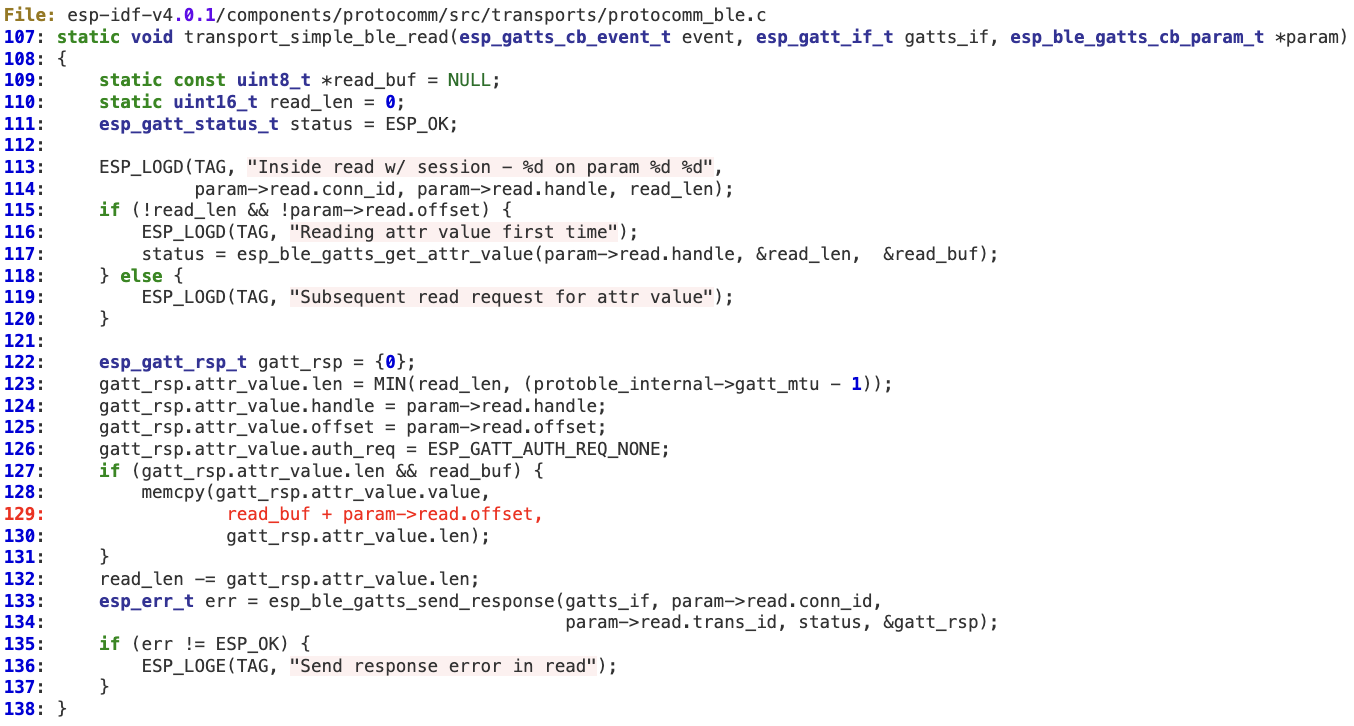

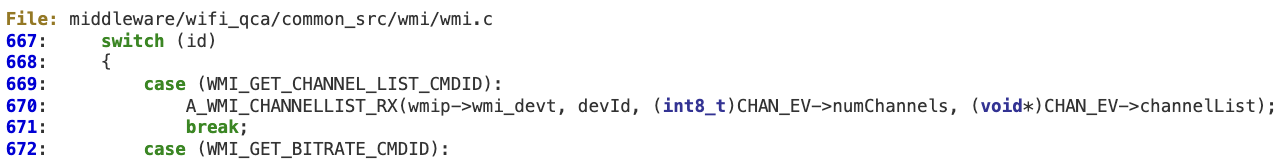

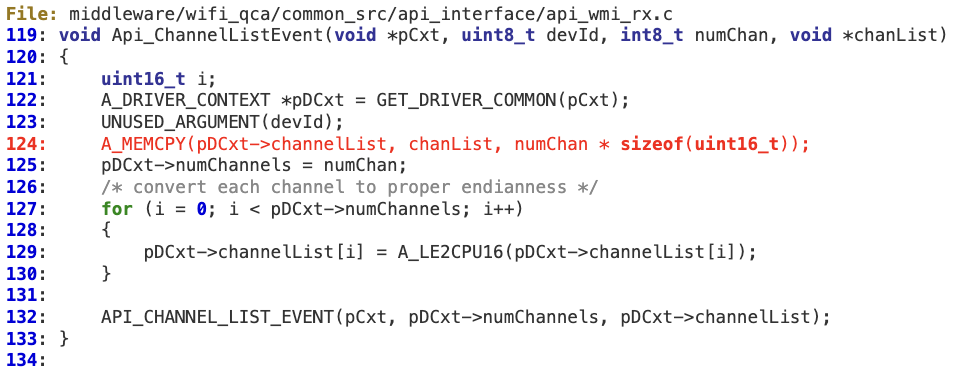

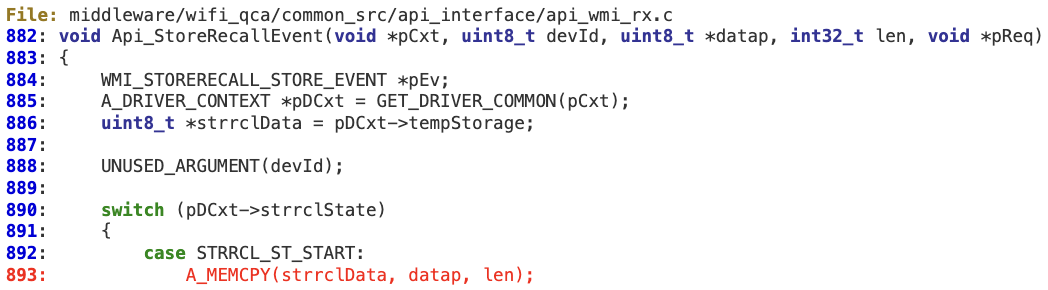

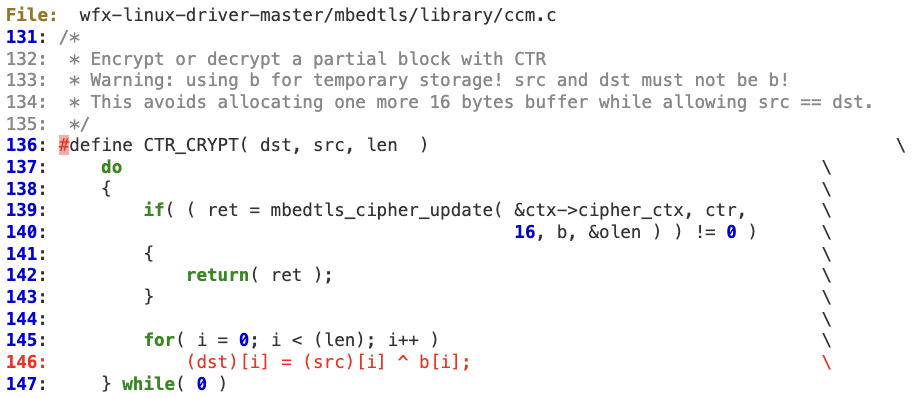

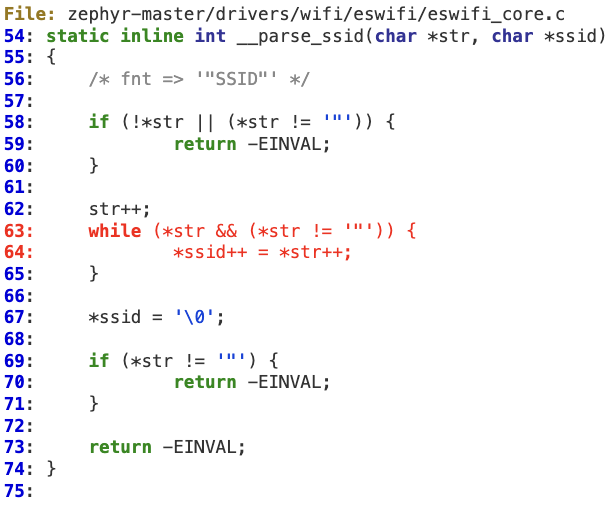

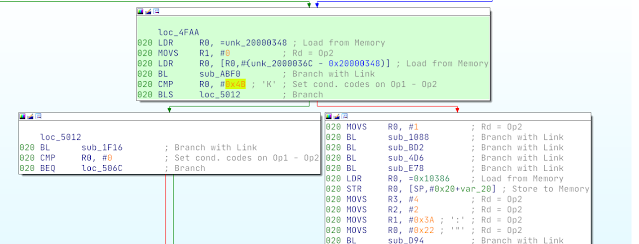

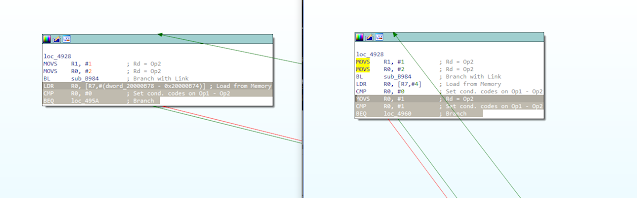

We see here the CMP instruction being targeted, which determines whether or not A matches the expected 0x10. We see that the xPSR is not updated, meaning the zero flag is not set and, as far as the processor is concerned, the CMP’d values did not match, and so the values of A and B are sent via UART. However, because it was the CMP instruction itself being glitched, the reported values are the correct 0x10 and 0. Interestingly, we see that r1 has been updated to 0x10, the same immediate value used in the original CMP. Referring to the ARMv7 Architecture Reference Manual, the machine code for CMP r3, 0x10 should be 0x102b (the bytes as they appear in little-endian memory, i.e. the halfword 0x2b10). Considering possible explanations for the observed behavior, one might consider an instruction like LDR or MOVS, which could have moved the value into the r1 register. And as it turns out, the machine code for MOVS r1, 0x10 is 0x1021, just two bits away from the original 0x102b!

While that isn’t the definitive answer as to the cause of the observed behavior, it’s a guess well beyond the level of information available via power trace analysis and similar techniques alone. And if it is correct, we not only know what generally occurred to cause this behavior, but can even see which bits specifically in the instruction were flipped for a given glitch delay/duration.
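The bit-level comparison can be checked with a short sketch. It uses the 16-bit Thumb encodings for CMP and MOVS with an 8-bit immediate (a 5-bit opcode, a 3-bit register, and the immediate); the helper names are hypothetical.

```python
def thumb_cmp_imm(rn, imm8):
    """16-bit Thumb CMP Rn, #imm8: 00101 | Rn | imm8."""
    return (0b00101 << 11) | (rn << 8) | imm8


def thumb_movs_imm(rd, imm8):
    """16-bit Thumb MOVS Rd, #imm8: 00100 | Rd | imm8."""
    return (0b00100 << 11) | (rd << 8) | imm8


def flipped_bits(a, b):
    """Bit positions (0 = least significant) that differ between halfwords."""
    diff = a ^ b
    return [i for i in range(16) if (diff >> i) & 1]


cmp_insn = thumb_cmp_imm(3, 0x10)
movs_insn = thumb_movs_imm(1, 0x10)
print(cmp_insn.to_bytes(2, "little").hex())   # bytes in memory order
print(movs_insn.to_bytes(2, "little").hex())
print(flipped_bits(cmp_insn, movs_insn))
```

The two differing bits are bit 11 (the lowest opcode bit, turning CMP into MOVS) and bit 9 (in the register field, turning r3 into r1). Under this hypothesis, both flips are from 1 to 0.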

Including all the script output for every instruction type in this article is a bit impractical, but for the curious the logs detailing registers/stack before and after each successful glitch for each instruction type will be made available in the git repo hosting the glitcher code.

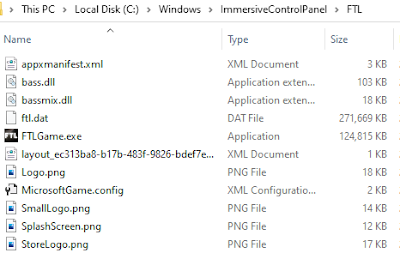

Practical Applications

I know what you’re thinking.

“If you have access to a device via JTAG/SWD debugger, why fuss with all the fault injection stuff? You can make the device do anything you want! In fact, I recently read a great blog post where I learned how to take advantage of an open JTAG interface!”

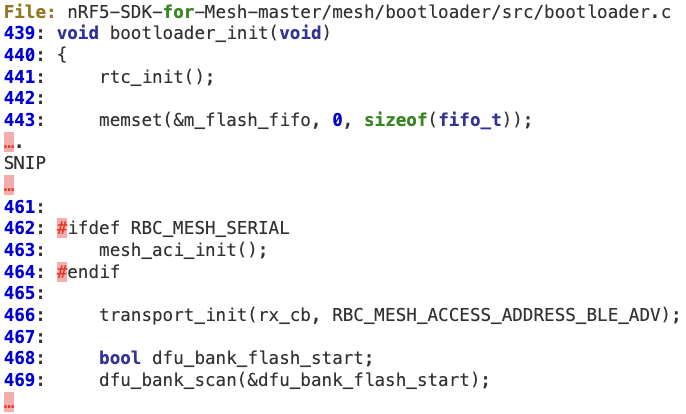

However, there is a very common configuration for embedded devices in the wild to which the research presented here could prove useful. Many devices, including the STM32 series, implement a sort of “high but not the highest possible” security mode, which allows for limited debugging capabilities, but prevents reads and writes to certain areas of memory, rendering the bulk of techniques for leveraging an open JTAG connection ineffective. This is chosen over the more secure option of disabling debugging entirely because the latter leaves no option for fixing or updating device firmware (without a custom bootloader), and many OEMs may choose to err towards serviceability rather than security. In most such implementations though, single stepping is still permitted!

In such a scenario, aided by a copy of device firmware, a probing setup analogous to the one described here, or both, it may be possible to render an otherwise time-consuming and tedious attack nearly trivial, stripping away all the calibration and timing parameterization normally required for fault injection attacks. Need to bypass secure boot on a partially locked down device? No problem, just break on the CMP that checks the return value of is_secureboot_enabled().

Future Research

Further research is required to really categorize the applicability of this methodology during live testing, but the initial results do seem promising. Additional testing will likely be performed on more realistic/practical device firmware, such as the previously mentioned secure boot scenario.

Additionally and more immediately, part two of this series of blog posts will continue to focus on developing a better understanding of what happens within an integrated circuit, and in particular a complex IC such as a CPU, when subjected to fault injection attacks. I have been putting together an 8-bit CPU out of 74 series discrete components in my spare time over the last few months, and once complete it will make the perfect target for this research: the clock is controllable/steppable externally, and each individual module (the bus, ALU, registers, etc.) is accessible by standard oscilloscope probes and other equipment.

This should allow for incredibly close examination of system state under a variety of conditions, and make transitory issues caused by faults which are otherwise difficult to observe (for example an injected fault interfering with the input lines of the ALU but not the actual input registers) quite clear to see.

Stay tuned!

References

[1] J. Gratchoff, “Proving the wild jungle jump,” University of Amsterdam, Jul. 2015

[2] Y. Lu, “Injecting Software Vulnerabilities with Voltage Glitching,” Feb. 2019

[3] D. Nedospasov, “NXP LPC1343 Bootloader Bypass,” Aug. 2017, https://toothless.co/blog/bootloader-bypass-part1/

[4] C. Gerlinsky, “Breaking Code Read Protection on the NXP LPC-family Microcontrollers,” Jan. 2017, https://recon.cx/2017/brussels/talks/breaking_crp_on_nxp.html

[5] A. Barenghi, G. Bertoni, E. Parrinello, G. Pelosi, “Low Voltage Fault Attacks on the RSA Cryptosystem,” 2009