Platform security is one of the specialized service lines IOActive offers and we have worked with many vendors across the industry. Lately, we have been conducting research on various targets while developing tooling that we believe will help the industry make platform security improvements focused on AMD systems.

SecSMIFlash

In early October 2022, IOActive reported a number of security issues to ASUS and AMI in an SMM module called SecSMIFlash (GUID 3370A4BD-8C23-4565-A2A2-065FEEDE6080). SecSMIFlash is included in BIOS image G513QR.329 for the ASUS Rog Strix G513QR. This module garnered some attention after Alexander Matrosov (BlackHat USA 2017) demonstrated how the SMI handlers failed to check input pointers with SmmIsBufferOutsideSmmValid(), resulting in CVE-2017-11315.

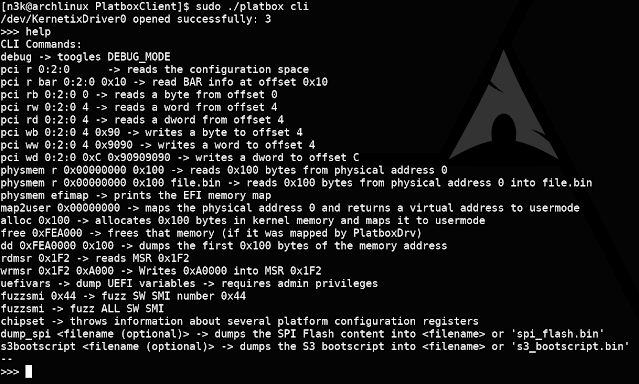

IOActive discovered issues on one of our target platforms, a fully updated ASUS Rog Strix G513QR, while running an internally developed dumb SW SMI fuzzer. Almost immediately, the system appeared to hang on SMI handler 0x1D.

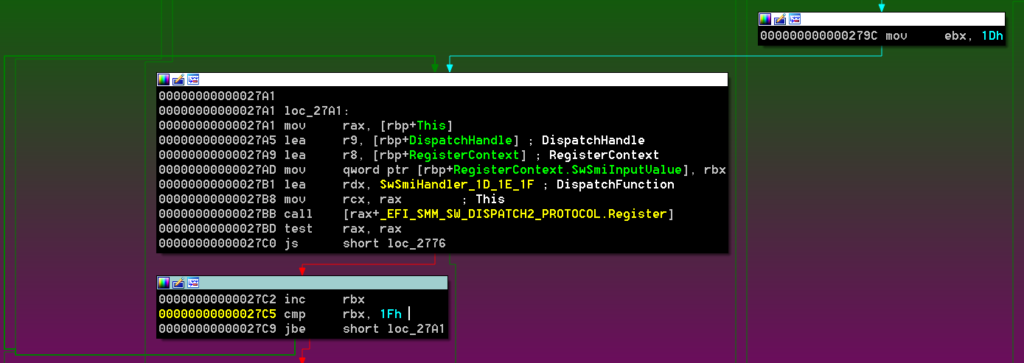

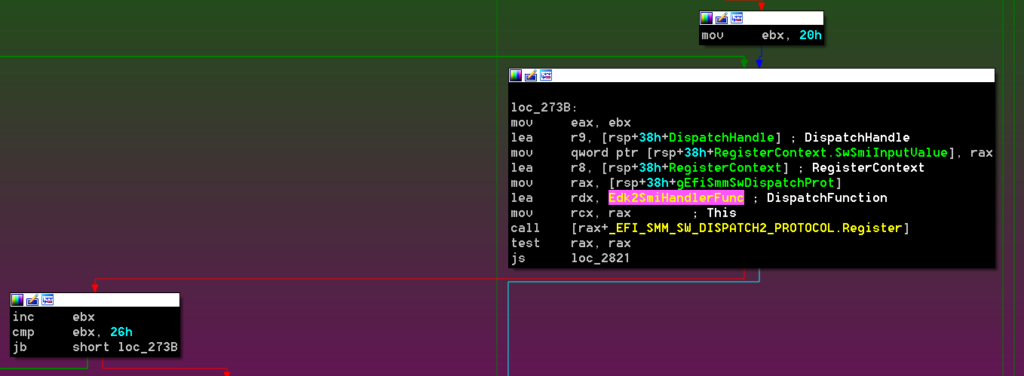

This module registers a single SW SMI handler for three different SwSmiInputs (0x1D, 0x1E, 0x1F) via EFI_SMM_SW_DISPATCH2_PROTOCOL:

Based on public information about this module, the above operations map to:

– 0x1D – LOAD_IMAGE

– 0x1E – GET_POLICY

– 0x1F – SET_POLICY

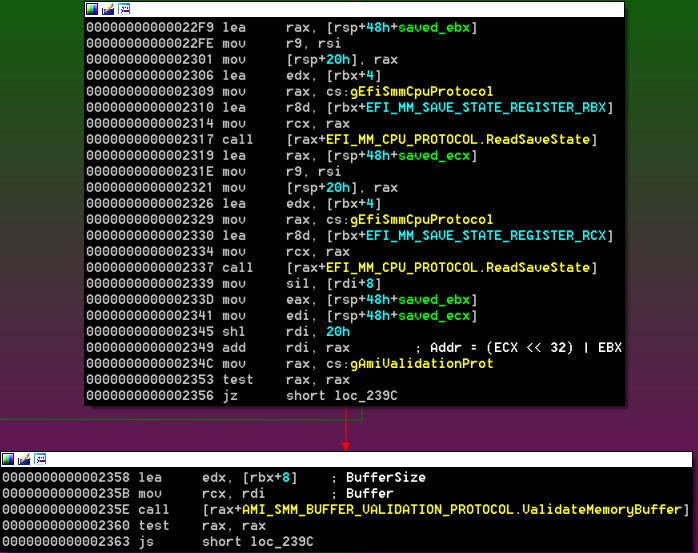

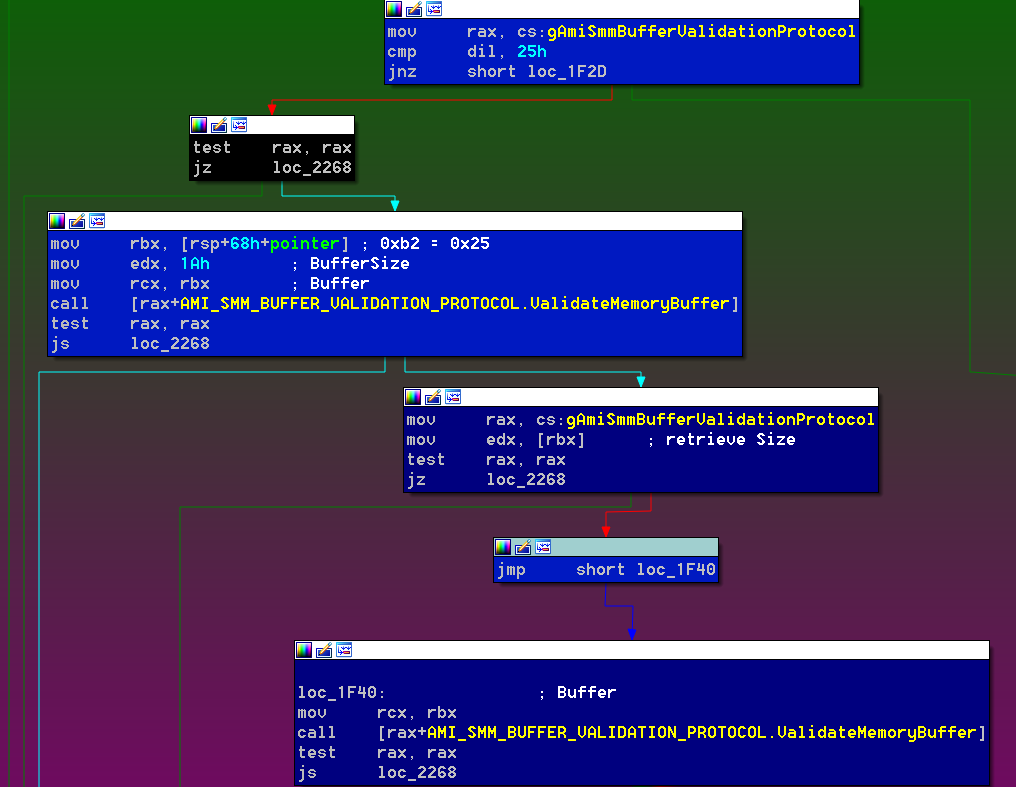

The handler uses EFI_MM_CPU_PROTOCOL to read the contents of the saved ECX and EBX registers and create a 64-bit pointer. This constructed buffer is verified to be exactly 8 bytes long and outside SMRAM using AMI_SMM_BUFFER_VALIDATION_PROTOCOL:

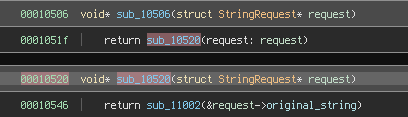

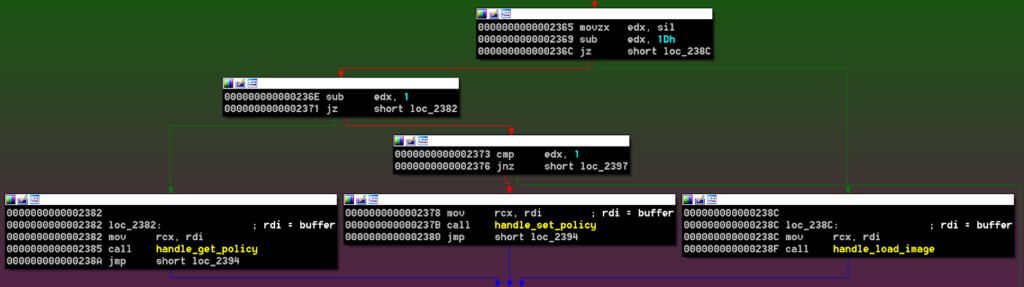

Depending on the value written on the SW-SMI triggering port (SwSmiInput), the execution continues in handle_load_image (0x1D), handle_get_policy (0x1E), or handle_set_policy (0x1F). These three functions receive a single argument which is the constructed pointer from the previous step:

The three operations have security issues.

Let’s start with handle_load_image. As part of its initialization, SecSMIFlash allocates 0x1001 pages of memory (g_pBufferImage) that are going to be used to store the BIOS image file.

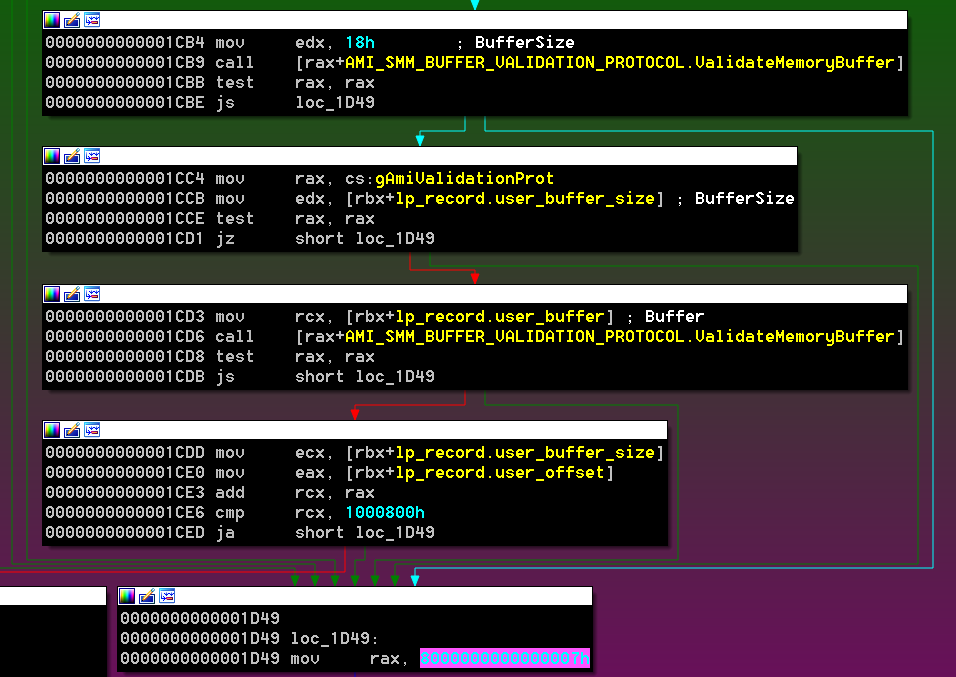

The buffer address is put into RBX and then validated again but this time it checks that the size is at least 0x18 bytes (outside SMRAM).

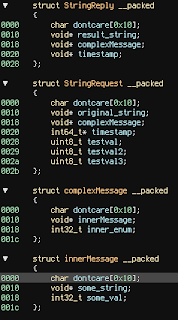

The buffer is used as a record defined as follows:

| typedef struct { /* 0x00 */ void * user_buffer; /* 0x08 */ unsigned int user_offset; /* 0x0C */ unsigned int user_buffer_size; /* 0x10 */ unsigned int status; /* 0x14 */ unsigned int unk; } lp_record; |

Another pointer is extracted from memory (the user_buffer member), which is validated to be user_buffer_size bytes, followed by a check that attempts to make sure that the provided offset and size are within the allocated bounds of g_pBufferImage.

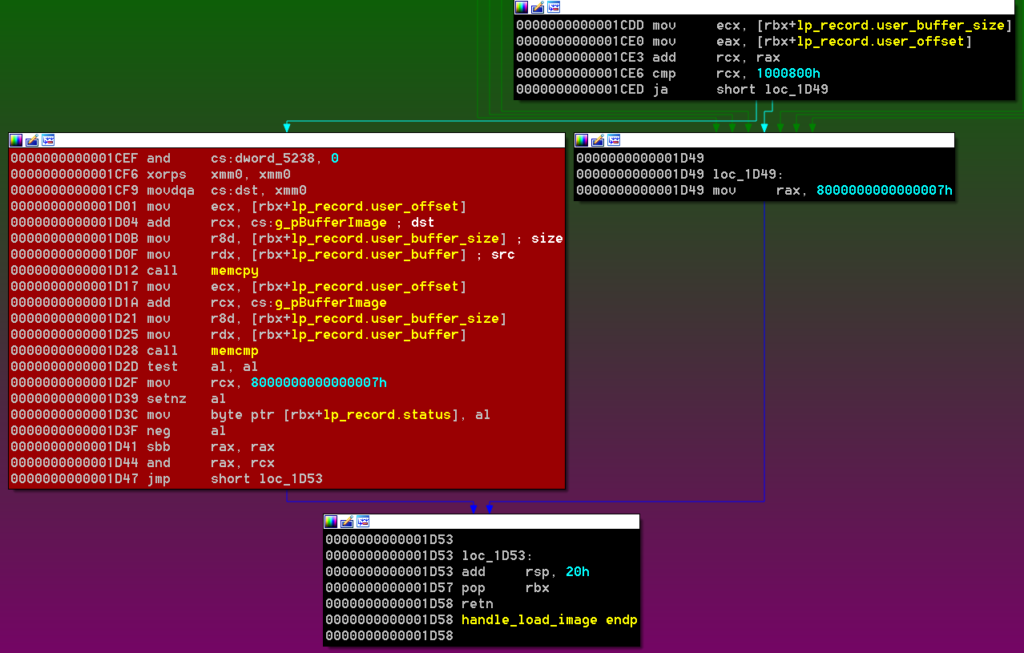

The problem is that there is a time-of-check to time-of-use (TOCTOU) condition that can be abused:

The block of code that performs the checks does not make local copies of the values into SMRAM. The values are retrieved again from user-controlled memory when the copy is done, which means the values could have changed.

Exploitation of this issue requires the use of a DMA agent.

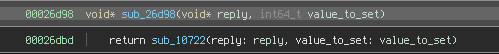

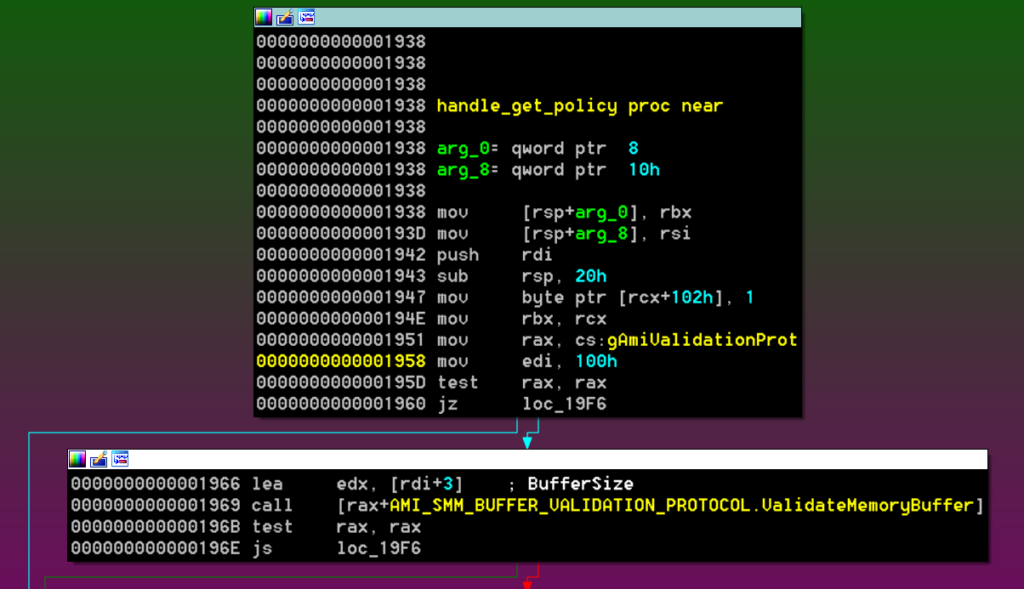

In the case of the handle_get_policy operation, the code presents a vulnerability in the first basic block:

Previously, the buffer was verified to only 8 bytes outside SMRAM, but here the code writes the value 1 at offset +102h and the ValidateMemoryBuffer check happens afterwards. Moreover, if ValidateMemoryBuffer fails, the handler simply bails out without doing anything else.

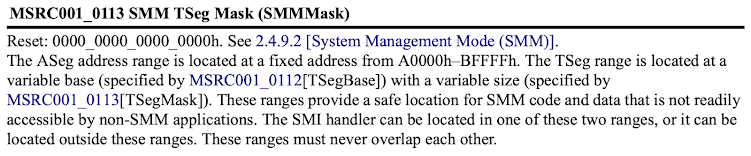

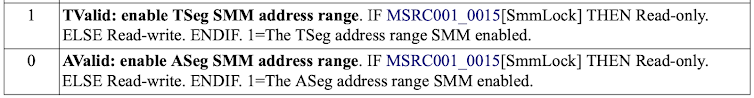

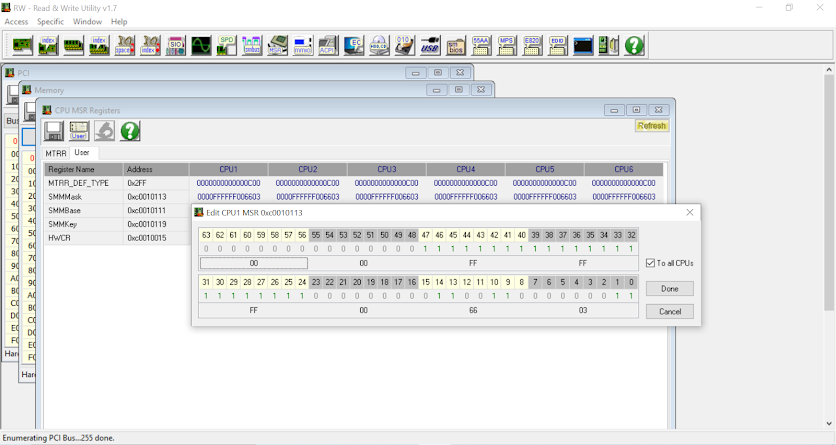

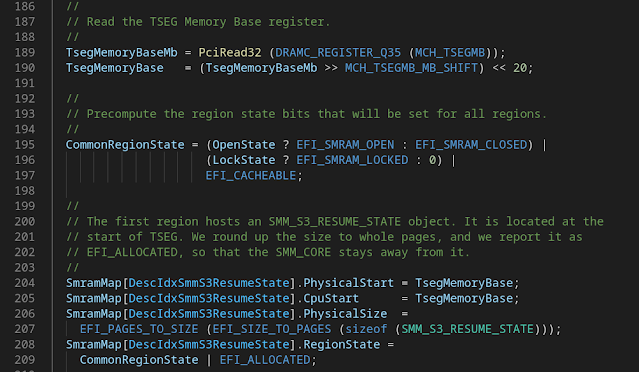

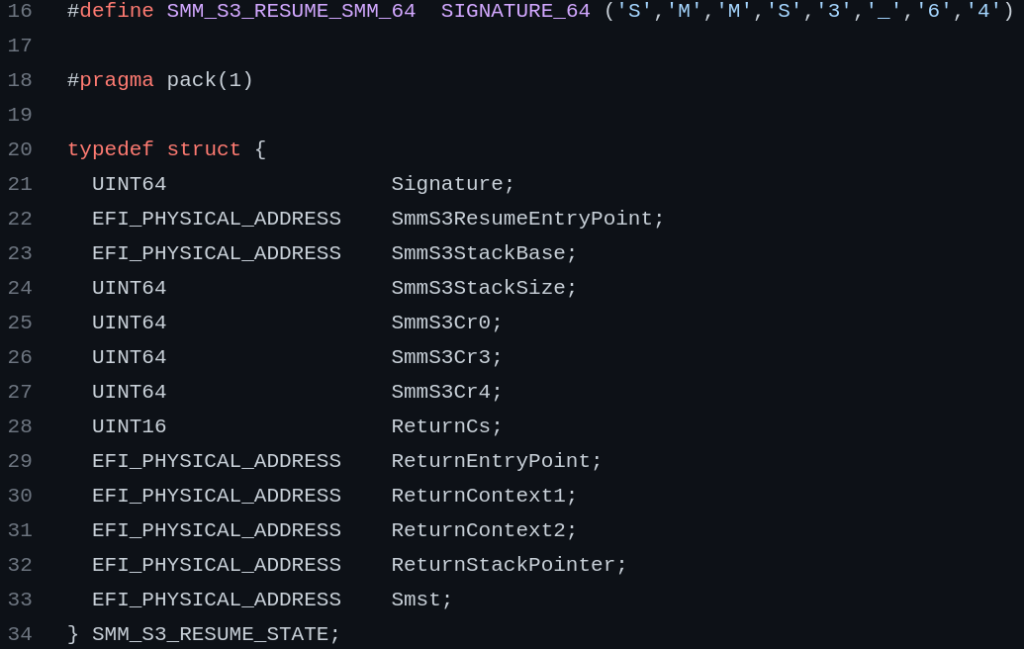

This out-of-bounds write condition allows the first 250 bytes (102h – 8) of the TSEG region to be written. The bottom of the TSEG region contains the SMM_S3_RESUME_STATE structure:

There are several EFI_PHYSICAL_ADDRESS pointers that could be targeted to achieve arbitrary SMM code execution.

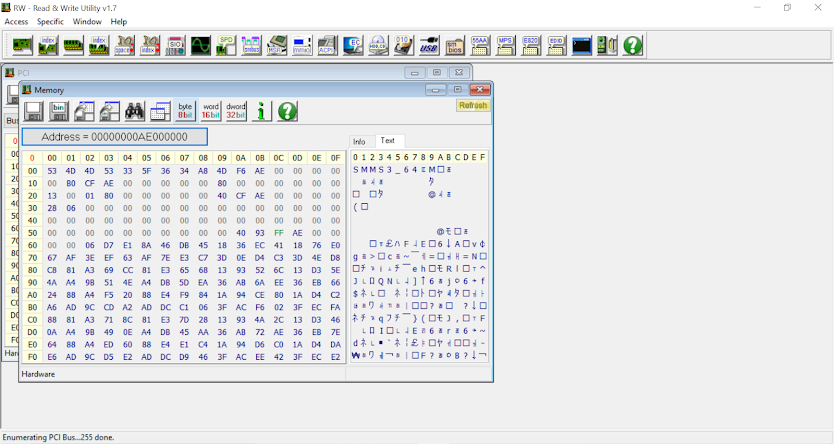

The following PoC code uses Platbox and the above primitive to write ones to the first 250 bytes of the TSEG region (0xef000000 in the machine used for testing):

Finally, for the handle_set_policy operation, the code suffers from a combination of the issues described above.

Responsible Disclosure Attempt

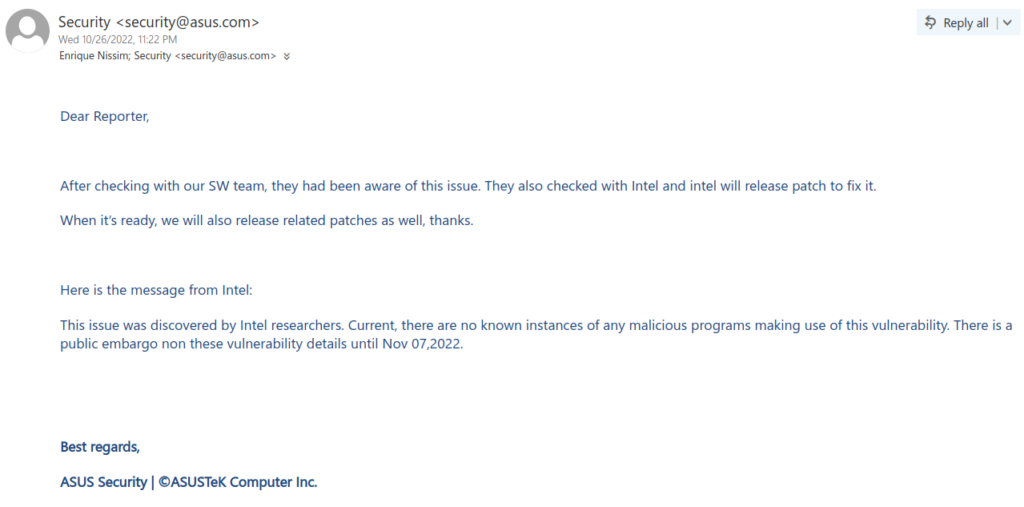

IOActive drafted a technical document and sent it over to ASUS. After a few weeks, ASUS replied with the following:

The response left us with some concerns:

1. They claimed all the reported issues were known by the team.

2. There was no ETA for the patch.

3. The issues were discovered by Intel and there was an embargo?

4. Is there a CVE assigned to track these?

We replied with a few questions along these lines and their response made it clear that the module is entirely handled by AMI and that Intel researchers may or may not apply a CVE number.

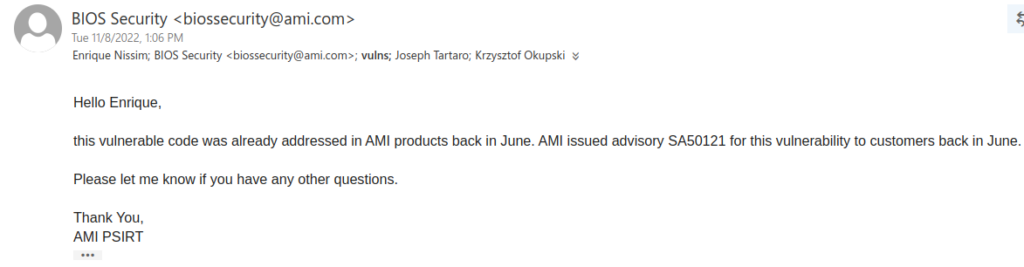

On the other hand, AMI provided a much quicker response, although quite unexpected:

Thursday, February 16, 2023

Adventures in the Platform Security Coordinated Disclosure Circus

by Enrique Nissim, Krzysztof Okupski and Joseph Tartaro

Platform security is one of the specialized service lines IOActive offers and we have worked with many vendors across the industry. Lately, we have been conducting research on various targets while developing tooling that we believe will help the industry make platform security improvements focused on AMD systems.

SecSMIFlash

In early October 2022, IOActive reported a number of security issues to ASUS and AMI in an SMM module called SecSMIFlash (GUID 3370A4BD-8C23-4565-A2A2-065FEEDE6080). SecSMIFlash is included in BIOS image G513QR.329 for the ASUS ROG Strix G513QR. This module garnered some attention after Alexander Matrosov (Black Hat USA 2017) demonstrated how the SMI handlers failed to check input pointers with SmmIsBufferOutsideSmmValid(), resulting in CVE-2017-11315.

IOActive discovered issues on one of our target platforms, a fully updated ASUS ROG Strix G513QR, while running an internally developed dumb SW SMI fuzzer. Almost immediately, the system appeared to hang on SMI handler 0x1D.

This module registers a single SW SMI handler for three different SwSmiInputs (0x1D, 0x1E, 0x1F) via EFI_SMM_SW_DISPATCH2_PROTOCOL:

Based on public information about this module, the above operations map to:

– 0x1D – LOAD_IMAGE

– 0x1E – GET_POLICY

– 0x1F – SET_POLICY

The handler uses EFI_MM_CPU_PROTOCOL to read the contents of the saved ECX and EBX registers and create a 64-bit pointer. This constructed buffer is verified to be exactly 8 bytes long and outside SMRAM using AMI_SMM_BUFFER_VALIDATION_PROTOCOL:
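As a minimal sketch of that step, the snippet below builds the pointer and performs a non-overlap check against an SMRAM range. It assumes EBX carries the low dword and ECX the high dword, which the disassembly would need to confirm; `make_pointer` and `is_outside_smram` are hypothetical names for illustration, not the AMI implementation.

```c
#include <stdbool.h>
#include <stdint.h>

/* Assumption: EBX holds the low 32 bits and ECX the high 32 bits. */
static uint64_t make_pointer(uint32_t ebx, uint32_t ecx)
{
    return ((uint64_t)ecx << 32) | ebx;
}

/* True when [addr, addr + size) neither wraps around nor overlaps
 * [smram_base, smram_base + smram_size). */
static bool is_outside_smram(uint64_t addr, uint64_t size,
                             uint64_t smram_base, uint64_t smram_size)
{
    if (size == 0 || addr > UINT64_MAX - size)
        return false;                       /* empty or wrapping range */

    uint64_t end = addr + size;             /* exclusive end of the buffer */
    uint64_t smram_end = smram_base + smram_size;

    return end <= smram_base || addr >= smram_end;
}
```

Note that a check like this only covers the number of bytes it is given: validating 8 bytes says nothing about what lies at larger offsets from the same pointer.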

Depending on the value written on the SW-SMI triggering port (SwSmiInput), the execution continues in handle_load_image (0x1D), handle_get_policy (0x1E), or handle_set_policy (0x1F). These three functions receive a single argument which is the constructed pointer from the previous step:
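The dispatch itself can be pictured roughly as follows. This is only an illustrative sketch: the enum and the `decode_sw_smi` function are hypothetical names, and the real handler calls the three functions directly rather than returning a code.

```c
#include <stdint.h>

/* Hypothetical operation codes for the three SwSmiInput values. */
typedef enum {
    OP_LOAD_IMAGE,
    OP_GET_POLICY,
    OP_SET_POLICY,
    OP_UNKNOWN
} sec_op;

/* Map the value written to the SW SMI port to an operation. */
static sec_op decode_sw_smi(uint8_t sw_smi_input)
{
    switch (sw_smi_input) {
    case 0x1D: return OP_LOAD_IMAGE;   /* handle_load_image */
    case 0x1E: return OP_GET_POLICY;   /* handle_get_policy */
    case 0x1F: return OP_SET_POLICY;   /* handle_set_policy */
    default:   return OP_UNKNOWN;      /* not handled by this module */
    }
}
```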

The three operations have security issues.

Let’s start with handle_load_image. As part of its initialization, SecSMIFlash allocates 0x1001 pages of memory (g_pBufferImage) that are going to be used to store the BIOS image file.

The buffer address is placed in RBX and validated again, this time checking that at least 0x18 bytes lie outside SMRAM.

The buffer is used as a record defined as follows:

    typedef struct {
        /* 0x00 */ void *       user_buffer;
        /* 0x08 */ unsigned int user_offset;
        /* 0x0C */ unsigned int user_buffer_size;
        /* 0x10 */ unsigned int status;
        /* 0x14 */ unsigned int unk;
    } lp_record;
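To sanity-check that this layout matches the 0x18 bytes validated by the handler, the offsets can be pinned down at compile time. The sketch below uses fixed-width types (the pointer is 8 bytes in long mode) and a hypothetical type name, `lp_record_layout`, so that it does not depend on the host compiler's pointer size.

```c
#include <stddef.h>
#include <stdint.h>

/* Same fields as lp_record, expressed with fixed-width types. */
typedef struct {
    uint64_t user_buffer;       /* 0x00: pointer to the caller's data */
    uint32_t user_offset;       /* 0x08 */
    uint32_t user_buffer_size;  /* 0x0C */
    uint32_t status;            /* 0x10 */
    uint32_t unk;               /* 0x14 */
} lp_record_layout;

/* Compile-time checks: the build fails if any offset drifts. */
_Static_assert(offsetof(lp_record_layout, user_offset) == 0x08, "user_offset");
_Static_assert(offsetof(lp_record_layout, user_buffer_size) == 0x0C, "user_buffer_size");
_Static_assert(offsetof(lp_record_layout, status) == 0x10, "status");
_Static_assert(offsetof(lp_record_layout, unk) == 0x14, "unk");
_Static_assert(sizeof(lp_record_layout) == 0x18, "record size");
```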

Another pointer is extracted from memory (the user_buffer member), which is validated to be user_buffer_size bytes, followed by a check that attempts to make sure that the provided offset and size are within the allocated bounds of g_pBufferImage.

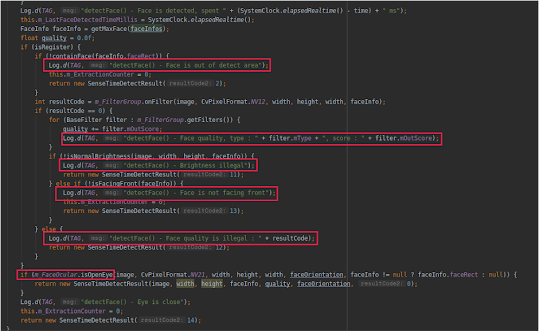

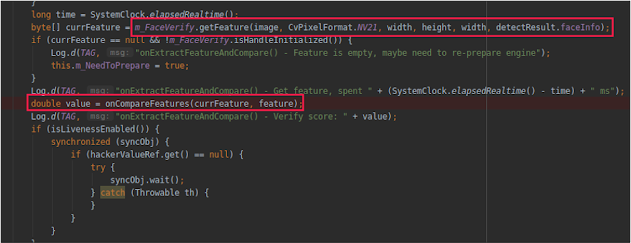

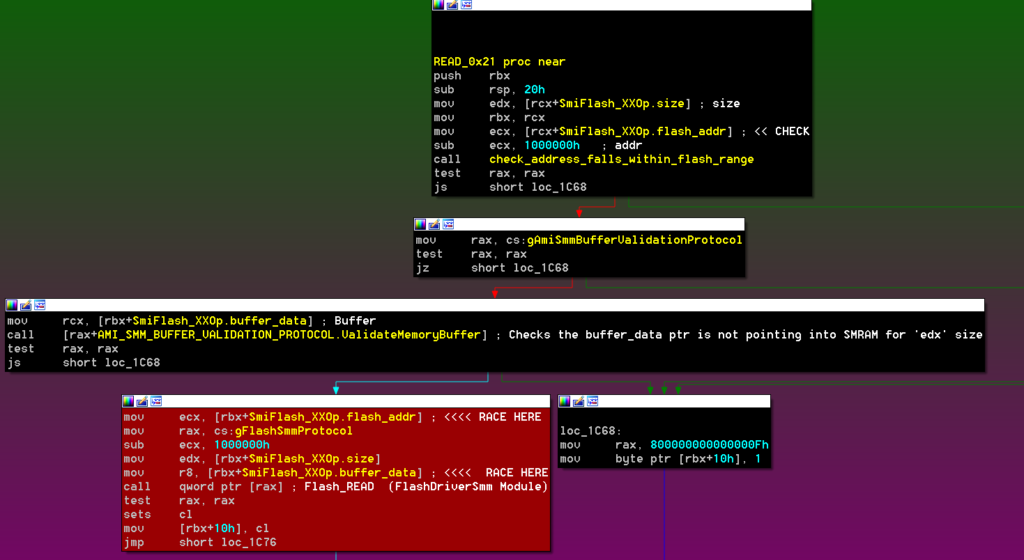

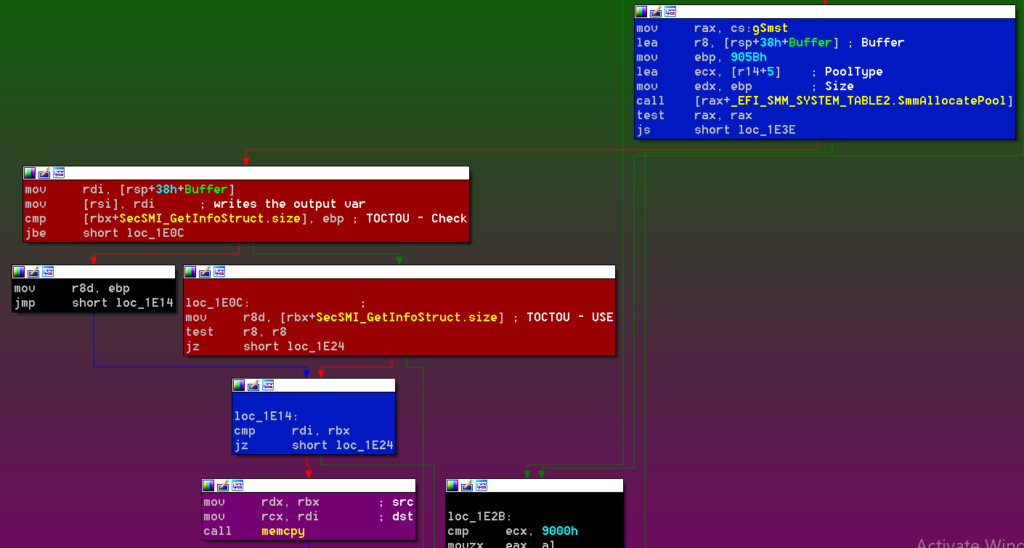

The problem is that there is a time-of-check to time-of-use (TOCTOU) condition that can be abused:

The block of code that performs the checks does not make local copies of the values into SMRAM. The values are retrieved again from user-controlled memory when the copy is done, which means the values could have changed.
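The usual remedy is to snapshot the record once and to check and use only the snapshot. The following is a rough sketch of that pattern, not the vendor's fix: `load_image_chunk` and its `capacity` parameter are hypothetical, the image buffer stands in for g_pBufferImage, and the outside-SMRAM validation of user_buffer is omitted for brevity.

```c
#include <stdbool.h>
#include <stdint.h>
#include <string.h>

typedef struct {
    uint8_t *user_buffer;
    uint32_t user_offset;
    uint32_t user_buffer_size;
    uint32_t status;
    uint32_t unk;
} lp_record;

/* Copy one chunk into the image buffer using a private snapshot of the
 * caller's record, so the checked values are the values that get used. */
static bool load_image_chunk(uint8_t *image, uint32_t capacity,
                             const volatile lp_record *shared)
{
    /* Read each field from shared memory exactly once. */
    uint8_t *src    = shared->user_buffer;
    uint32_t offset = shared->user_offset;
    uint32_t size   = shared->user_buffer_size;

    /* Overflow-safe bounds check: offset + size must fit in the image. */
    if (offset > capacity || size > capacity - offset)
        return false;

    /* A real handler would also validate [src, src + size) here. */
    memcpy(image + offset, src, size);
    return true;
}
```

Because `offset` and `size` live in handler-local storage after the first read, a DMA agent that rewrites the shared record afterwards no longer influences the copy.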

Exploitation of this issue requires the use of a DMA agent.

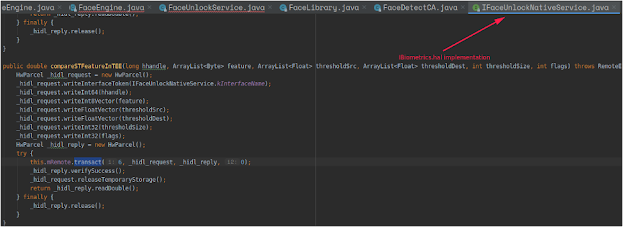

In the case of the handle_get_policy operation, the code presents a vulnerability in the first basic block:

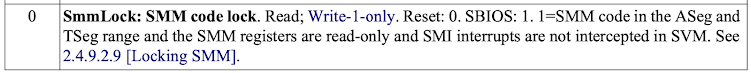

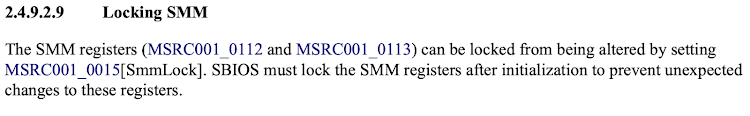

Previously, only 8 bytes of the buffer were verified to be outside SMRAM, but here the code writes the value 1 at offset +102h before the ValidateMemoryBuffer check takes place. Moreover, if ValidateMemoryBuffer fails, the handler simply bails out without undoing the write.

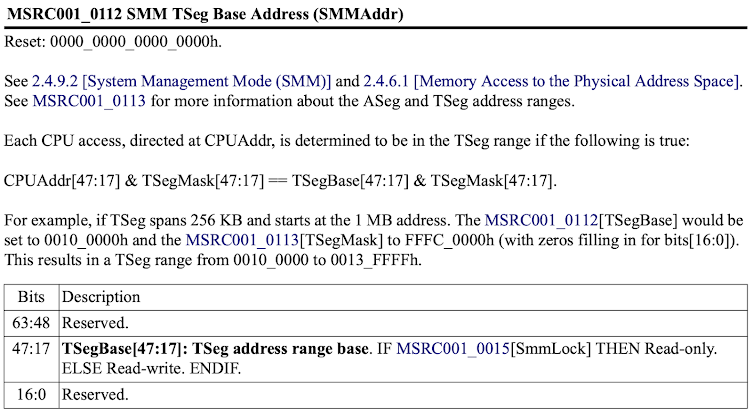

This out-of-bounds write condition allows the first 250 bytes (102h – 8) of the TSEG region to be written. The bottom of the TSEG region contains the SMM_S3_RESUME_STATE structure:

There are several EFI_PHYSICAL_ADDRESS pointers that could be targeted to achieve arbitrary SMM code execution.
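The address arithmetic behind this primitive can be sketched as follows. The helper names are hypothetical and only the two constants (the +102h write offset and the 8 validated bytes) come from the analysis above.

```c
#include <stdint.h>

#define WRITE_OFFSET   0x102u  /* offset the handler writes to before validating */
#define VALIDATED_SIZE 8u      /* bytes checked by the common entry code */

/* Highest buffer pointer that still passes the 8-byte outside-SMRAM check. */
static uint64_t highest_valid_pointer(uint64_t tseg_base)
{
    return tseg_base - VALIDATED_SIZE;
}

/* Address the premature write lands on for a given buffer pointer. */
static uint64_t write_target(uint64_t buffer_ptr)
{
    return buffer_ptr + WRITE_OFFSET;
}

/* Buffer pointer that makes the write land on tseg_base + tseg_offset. */
static uint64_t pointer_for_offset(uint64_t tseg_base, uint32_t tseg_offset)
{
    return tseg_base + tseg_offset - WRITE_OFFSET;
}
```

With the TSEG base of the test machine (0xef000000), the highest pointer that passes validation is 0xeefffff8, and the write then lands at 0xef0000fa, which is offset 102h – 8 into TSEG. Lower pointers reach lower offsets.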

The following PoC code uses Platbox and the above primitive to write ones to the first 250 bytes of the TSEG region (0xef000000 in the machine used for testing):

Finally, for the handle_set_policy operation, the code suffers from a combination of the issues described above.

Responsible Disclosure Attempt

IOActive drafted a technical document and sent it over to ASUS. After a few weeks, ASUS replied with the following:

The response left us with some concerns:

1. They claimed all the reported issues were known by the team.

2. There was no ETA for the patch.

3. The issues were discovered by Intel and there was an embargo?

4. Is there a CVE assigned to track these?

We replied with a few questions along these lines and their response made it clear that the module is entirely handled by AMI and that Intel researchers may or may not apply a CVE number.

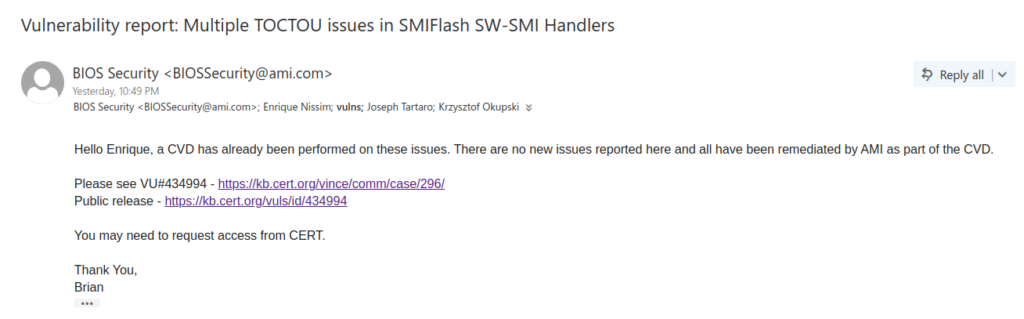

On the other hand, AMI provided a much quicker response, although quite unexpected:

We attempted to look for advisory SA50121 but did not find anything. It is probably only available to vendors. What is surprising, though, is that they say a fix was released in June.

SMIFlash

At this point, we decided to look at the other related module from Alex Matrosov’s presentation: SMIFlash.efi. SMIFlash (GUID BC327DBD-B982-4F55-9F79-056AD7E987C5) is one of the SMM modules included in BIOS image G513QR.330 for the ASUS ROG Strix G513QR. The module installs six SW-SMI handlers that are prone to double fetches (TOCTOU) that, if successfully exploited, could be leveraged to execute arbitrary code in System Management Mode (ring -2).

There are two public CVEs related to this module from 2017:

- CVE-2017-3753

- CVE-2017-11316

CVE-2017-3753 states that the module lacks proper input pointer sanitization and only mentions Lenovo as affected; however, this module is also part of our target ASUS ROG Strix BIOS, and after a bit of reverse engineering, we were able to identify three race conditions (TOCTOU) with different levels of impact.

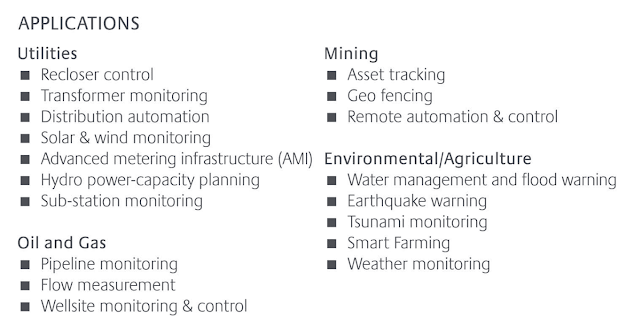

This module registers a single SW-SMI handler for six different SwSmiInputs (0x20, 0x21, 0x22, 0x23, 0x24, and 0x25) via EFI_SMM_SW_DISPATCH2_PROTOCOL:

Based on public information about this module, the above operations map to:

– 0x20 – ENABLE

– 0x21 – READ

– 0x22 – ERASE

– 0x23 – WRITE

– 0x24 – DISABLE

– 0x25 – GET_INFO

The handler uses EFI_MM_CPU_PROTOCOL to read the content of the saved ECX and EBX registers and create a 64-bit pointer. For all operations except for GET_INFO, this constructed address is verified to be exactly 18h bytes outside SMRAM using AMI_SMM_BUFFER_VALIDATION_PROTOCOL. 18h is therefore the size of the basic input record this module needs to work with. Reverse engineering the structure led to the following layout:

Depending on the value written on the SW-SMI triggering port (SwSmiInput), the execution continues in one of the previously listed operations (ENABLE, READ, WRITE, etc.).

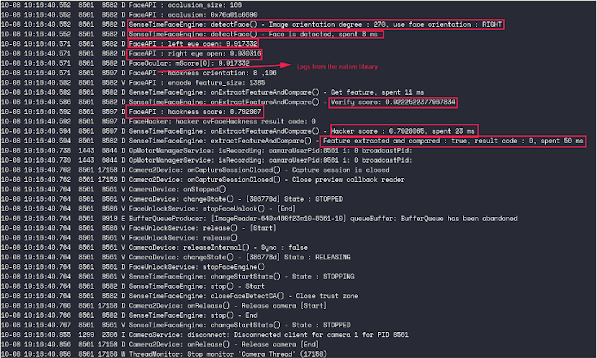

The READ and WRITE operations receive the pointer to the record as an argument and both are prone to the same TOCTOU vulnerabilities. Let’s look at the READ implementation:

RCX holds the controlled pointer and is copied into RBX. The function starts by checking that the flash_addr value falls within the intended flash range MMIO (0xFF000000-0xFFFFFFFF). It continues by using AMI_SMM_BUFFER_VALIDATION_PROTOCOL to ensure that the buffer_data pointer resides outside SMRAM. This is interesting because the reported issues in CVE-2017-3753 and CVE-2017-11316 seem to be related to the lack of validation over the input parameters. If the input pointers are not properly verified using SmmIsBufferOutsideSmmValid() (in this case ValidateMemoryBuffer()), an attacker can pass a pointer with an SMRAM address value and have the ability to read and/or write to SMRAM. In our current version, this is not the case and we can see verification is there.
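A range check of this kind might look like the sketch below. It assumes flash_addr and size are 32-bit fields and that the whole requested span, not just its start, must stay inside the window; the function name `flash_range_ok` is hypothetical.

```c
#include <stdbool.h>
#include <stdint.h>

#define FLASH_MMIO_BASE 0xFF000000u  /* start of the flash MMIO window */
#define FLASH_MMIO_LAST 0xFFFFFFFFu  /* last byte of the window */

/* True when [flash_addr, flash_addr + size) lies inside the flash window. */
static bool flash_range_ok(uint32_t flash_addr, uint32_t size)
{
    if (flash_addr < FLASH_MMIO_BASE || size == 0)
        return false;

    /* Bytes left between flash_addr and the end of the window, computed in
     * 64 bits so the +1 cannot wrap. */
    uint64_t remaining = (uint64_t)FLASH_MMIO_LAST - flash_addr + 1;

    return size <= remaining;
}
```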

Nevertheless, the code retrieves the values from memory twice for all three members (flash_addr, size, and buffer_data). This means that the checked values do not necessarily correspond to the ones being passed to the FlashDriverSmm module. This is a race condition that an attacker can exploit through a DMA attack. Such an attack can easily be performed with a custom PCI device (e.g. PCILeech – https://github.com/ufrisk/pcileech).

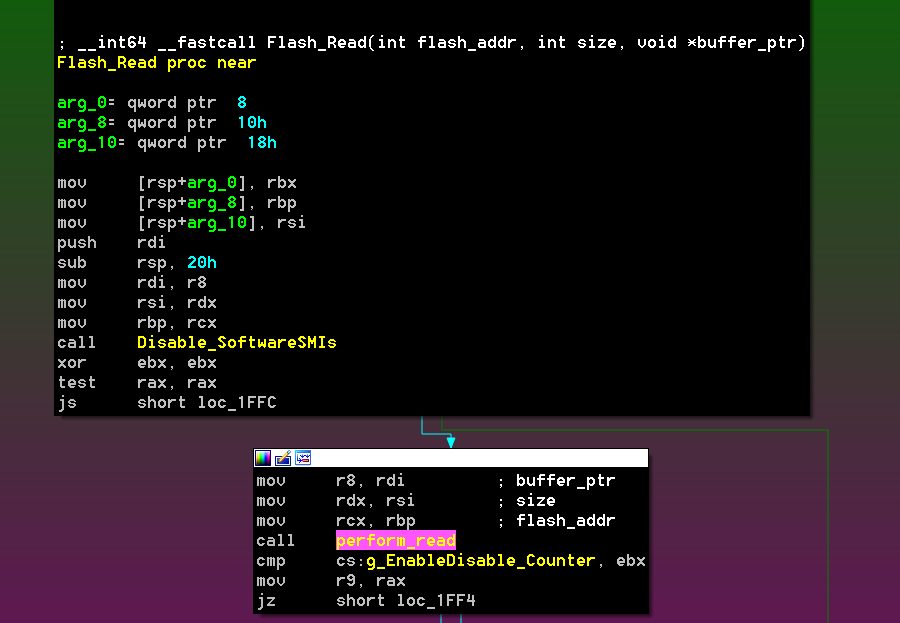

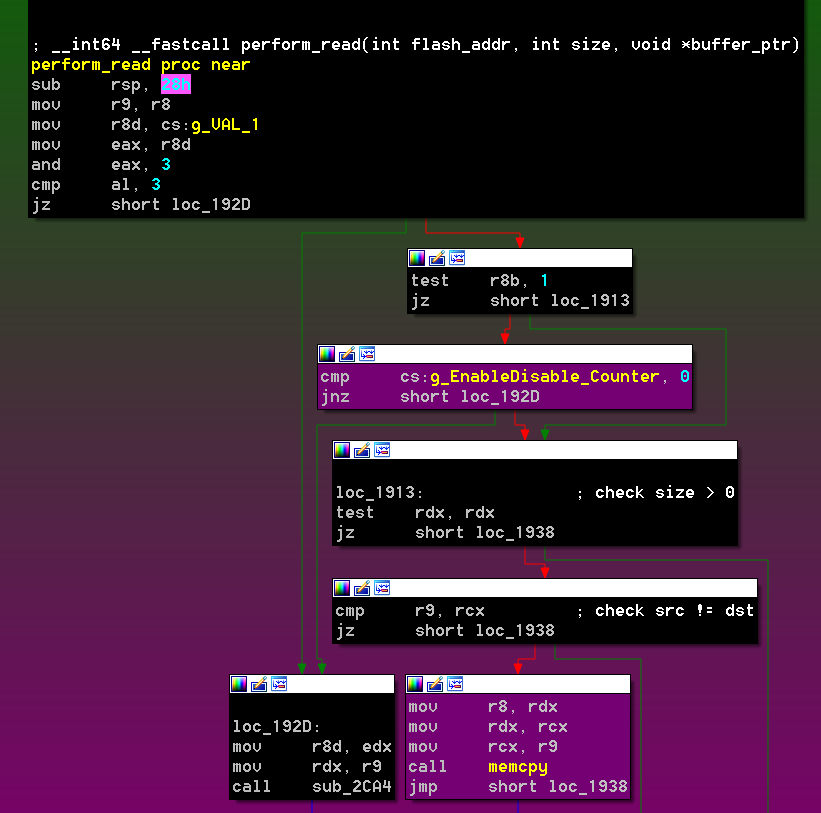

Winning the race for the READ operation leads to writing to SMRAM with values retrieved from flash; however, if the flash is first disabled with the DISABLE operation, the underlying implementation of FLASH_SMM_PROTOCOL (which resides in the FlashDriverSmm module) uses a simple memcpy to fulfill the request:

This is interesting because it provides an easier way to control all the bytes being copied.

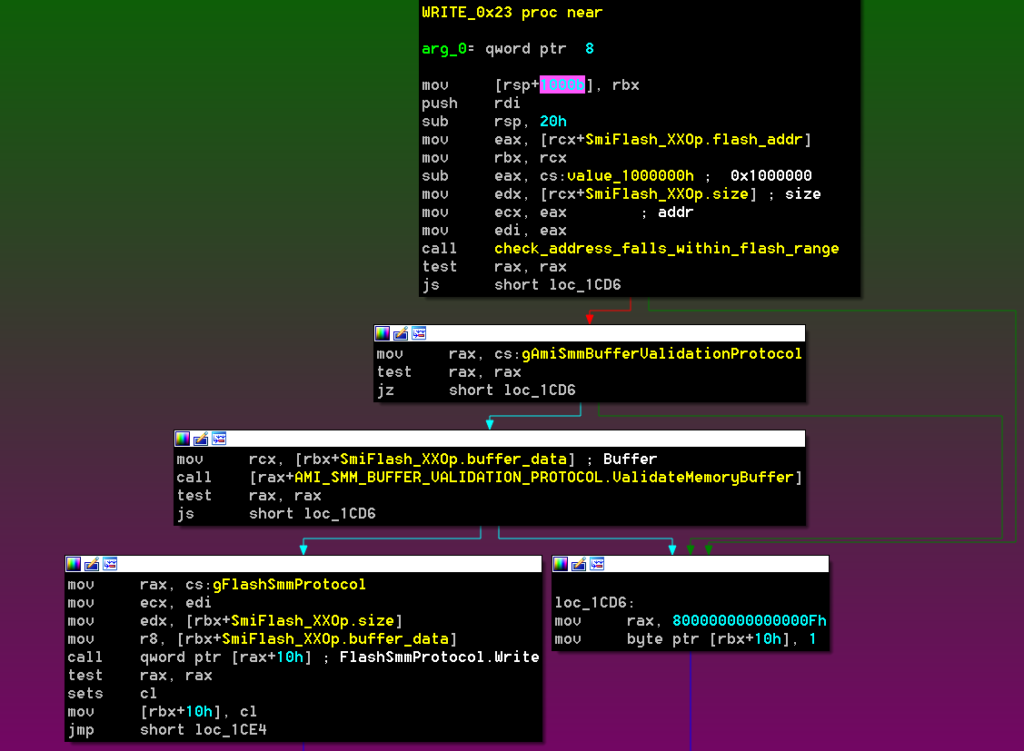

The WRITE operation has the exact same condition, although in this case, winning the race means leaking content from SMRAM into the Flash:

In summary, for both cases, the block of code performing the checks does not make local copies of the values into SMRAM. The values are retrieved again from user-controlled memory when they are about to be used, which means the values could have changed.

The GET_INFO (0x25) operation is affected by the same condition, although in a different way. In this case, as soon as the user pointer is constructed, the code verifies it is at least 1Ah bytes outside of SMRAM. Then, it retrieves the value of the first dword and uses it to further check the length of the provided region:

The reverse-engineered structure looks as follows:

The code continues by calling into a function that allocates 905Bh bytes of SMRAM and attempts to copy the data into it. RBX is the pointer to the record, and the double-fetch is clear:

The code is trying to enforce 905Bh bytes as an upper limit for the copy but because the memory is fetched twice, the value could have changed after the check passed. As a result, SMRAM will be corrupted.
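The effect of the double fetch can be simulated in user space. In the sketch below, `racy_dword` is a hypothetical stand-in for attacker-controlled memory whose length field changes between the first and later reads, as a DMA agent could arrange; none of these names come from the module itself.

```c
#include <stdint.h>

#define INFO_LIMIT 0x905Bu  /* upper bound the handler tries to enforce */

/* Simulated shared memory: reads as `first` once, then as `later`. */
typedef struct {
    uint32_t first;
    uint32_t later;
    unsigned fetches;
} racy_dword;

static uint32_t fetch(racy_dword *m)
{
    return m->fetches++ == 0 ? m->first : m->later;
}

/* Vulnerable shape: one fetch is checked, a second fetch is used.
 * Returns the length that would be copied, or 0 if rejected. */
static uint32_t length_double_fetch(racy_dword *m)
{
    if (fetch(m) > INFO_LIMIT)
        return 0;
    return fetch(m);            /* may differ from the checked value */
}

/* Safer shape: fetch once into a local and use only that local. */
static uint32_t length_single_fetch(racy_dword *m)
{
    uint32_t len = fetch(m);
    if (len > INFO_LIMIT)
        return 0;
    return len;
}
```

With a field that reads 0x100 on the first access and 0x20000 afterwards, the double-fetch variant ends up copying 0x20000 bytes despite the check, while the single-fetch variant copies 0x100.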

Responsible Disclosure Attempt

Q4 is always busy, and as a consequence, our team did not immediately report these issues to ASUS or AMI. Instead, four months passed after our initial report on SecSMIFlash.efi. On February 2, 2023, after verifying the issues were still present in the latest available BIOS for our target ASUS laptop, we documented the findings and reported them to AMI.

This time AMI’s response only took two hours:

The CERT link was very helpful because it allowed us to better understand the full picture. The most important sections of the vulnerability note are reproduced below:

Multiple race conditions due to TOCTOU flaws in various UEFI Implementations

Vulnerability Note VU#434994

Original Release Date: 2022-11-08 | Last Revised: 2023-01-25

Overview

Multiple Unified Extensible Firmware Interface (UEFI) implementations are vulnerable to code execution in System Management Mode (SMM) by an attacker who gains administrative privileges on the local machine. An attacker can corrupt the memory using Direct Memory Access (DMA) timing attacks that can lead to code execution. These threats are collectively referred to as RingHopper attacks.

Description

The UEFI standard provides an open specification that defines a software interface between an operating system (OS) and the device hardware on the system. UEFI can interface directly with hardware below the OS using SMM, a high-privilege CPU mode. SMM operations are closely managed by the CPU using a dedicated portion of memory called the SMRAM. The SMM can only be entered through System Management Interrupt (SMI) Handlers using a communication buffer. SMI Handlers are essentially a system-call to access the CPU’s SMRAM from its current operating mode, typically Protected Mode.

A race condition involving the access and validation of the SMRAM can be achieved using DMA timing attacks that rely on time-of-use (TOCTOU) conditions. An attacker can use well-timed probing to try and overwrite the contents of SMRAM with arbitrary data, leading to attacker code being executed with the same elevated-privileges available to the CPU (i.e., Ring -2 mode). The asynchronous nature of SMRAM access via DMA controllers enables the attacker to perform such unauthorized access and bypass the verifications normally provided by the SMI Handler API.

The Intel-VT and Intel VT-d technologies provide some protection against DMA attacks using Input-Output Memory Management Unit (IOMMU) to address DMA threats. Although IOMMU can protect from DMA hardware attacks, SMI Handlers vulnerable to RingHopper may still be abused. SMRAM verification involving validation of nested pointers adds even more complexity when analyzing how various SMI Handlers are used in UEFI.

Impact

An attacker with either local or remote administrative privileges can exploit DMA timing attacks to elevate privileges beyond the operating system and execute arbitrary code in SMM mode (Ring -2). These attacks can be invoked from the OS using vulnerable SMI Handlers. In some cases, the vulnerabilities can be triggered in the UEFI early boot phases (as well as sleep and recovery) before the operating system is fully initialized.

[..]

Acknowledgements

Thanks to the Intel iStare researchers Jonathan Lusky and Benny Zeltser who discovered and reported this vulnerability.

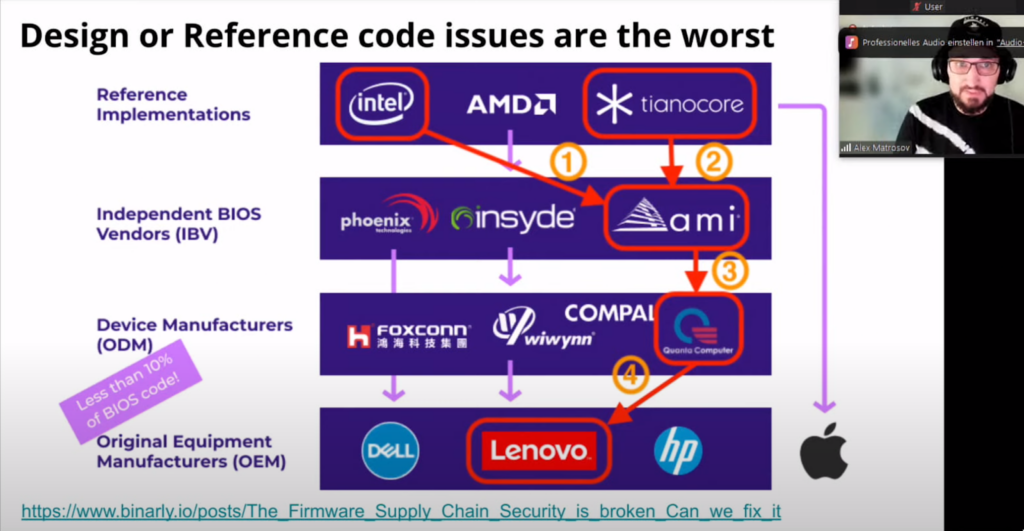

It is notable that there is no mention of SecSMIFlash.efi or SMIFlash.efi, and the information provided is quite generic. Nevertheless, we can see that the original release date matches what ASUS first said. It is also interesting that the vulnerability note was last revised only a few days ago (the latest update being Dell's). Additionally, the description refers to “RingHopper attacks” and mentions the Intel researchers that reported the issues.

These pieces of information immediately led to the following tweet:

Although we cannot be certain that they reported the exact same issues that we attempted to report, it does seem these two researchers documented findings on these modules before our team. It appears that the vulnerabilities were assigned CVE-2021-33164.

On November 16, 2022, Benny Zeltser tweeted that they had to withdraw the presentation from Black Hat USA and DEF CON because the issues were not yet fixed. This suggests that many of the affected vendors have not been able to produce a new BIOS image that includes the fixed modules. Indeed, from the list of vendors shown in the CERT vulnerability note, only AMI, Dell, HPE, Insyde, and Intel are marked as “affected.” We can now confirm that ASUSTeK is also affected.

Conclusion

Responsible coordinated disclosure in the platform security space is a bit of a circus. This issue was highlighted by Matrosov himself in his 2022 presentation at OffensiveCon.

ASUS mentioned an embargo that, to the best of our knowledge, expired on November 2, 2022. When reading the CERT link from AMI, all reported vendors reference CVE-2021-33164, specifically calling out issues on Intel NUCs and mentioning that the patches were released back in June of 2022. When analyzing the affected vendors list on the CERT page, you will find that all vendors noted as affected have released patches as of February 3, 2023. All vendors listed as unknown, or in our case ASUS, have yet to release patches but have been well aware of the vulnerabilities and the reports based on the communication we had with them.

IOActive has decided to publish the technical details about these issues after understanding that AMI released patches back in mid-2022 and that no new information was provided by us in our report of both modules. In addition, the CVE was filed back in 2021 and all vendors appear to have had more than enough time to responsibly patch and disclose these issues to consumers.

The CERT advisory refers to these threats as RingHopper attacks. The abstract of a presentation about RingHopper suggests billions of devices are affected by these issues. Our efforts to report these vulnerabilities to vendors stopped with the replies that they were already aware of these issues and have remedies in place. The fact that some vendors appear to still be vulnerable at this time is surprising. Ultimately, we feel it’s more important to shed light on this in order to get the issues fixed on those platforms that appear to be delayed, instead of leaving consumers and the industry in the dark for such an extended period of time.