My name is Jonathan Brossard, but you may know me under the nick Endrazine. Or maybe as the CEO of Toucan System. Never mind: I’m a hacker. Probably like yourself, if you’re reading this blog post. Together with my friends Matthieu Suiche and Philippe Langlois, and with the invaluable help of a large community worldwide, I’m trying to build a conference like no other: Hackito Ergo Sum.

First, a bit of background on conferences as I have discovered them:

I remember really well the first conference I attended almost a decade ago: it was PH-Neutral in Berlin. The first talk I’d ever seen was from Raoul Chiesa on attacking legacy X.25 networks, specifically how to root satellites. (For those unfamiliar with X.25, it was the global standard for networking before the internet existed. Clearly, if you sent a satellite to space in the 1980s, you weren’t going to get it back to Earth so that you could patch it and upgrade its network stack, so it would remain in space, vulnerable for ages, until its owner eventually decided to change its orbit and destroy it.)

The audience comprised some of the best hackers in the world and I got to meet them. People like Dragos Ruiu, FX, Julien Tinnes, and various members of the underground security industry were asking questions or adding to what the presenter was saying in a relaxed, respectful, and intelligent atmosphere. It was a revelation. That’s when I think I decided I’d spend the rest of my life learning from those guys, switch my career plans to focus on security full time, and eventually become one of them: an elite hacker.

Back in those days, PH-Neutral was a really small conference (maybe 50 or 100 people, invitation only). Even though I had many years of assembly development and reverse engineering behind me, I realized those guys were way ahead in terms of skills and experience. There were exactly zero journalists and no posers. The conference was put together with very little money and it was free; attendees paid for their own travel expenses and accommodations, and, as a result, all the people present were truly passionate about their work.

Since then I’ve traveled the world, gained some skills and experience, and eventually was able to present my own research at different security conferences. I have probably given talks or trainings at all the top technical security conferences in the world today, including CCC, HITB, BlackHat U.S., and Defcon. I couldn’t have done half of it without the continuous technical and moral help and support of an amazing group of individuals who helped me daily on IRC.

Building the Team

I remember the first talk I ever gave myself: it was at Defcon Las Vegas in 2008. Back in those days, I was working in India for a small security startup and was quite broke (imagine the salary of an engineer in India compared to the cost of living in the U.S.). I was presenting an attack that worked against all the BIOS passwords ever made, as well as most disk encryption tools (Bitlocker, Truecrypt, McAfee). I remember Matthieu knocking at my door after his own BlackHat talk on RAM acquisition and forensics: he was only 18 and had no place to stay!

We slept in the same bed (no romantic stuff involved here). To me, that’s really what hacking was all about: sharing, making things happen in spite of hardcore constraints, friendship, knowledge. I also started to realize that those big conferences had nothing to do with the small elite conferences I had in mind. A lot of the talks were really bad. And it seemed to me that attitude, going to as many parties as possible, and posing for journalists were what attendees and most speakers really expected from those conferences.

In 2008, during PH-Neutral (once again), I met Philippe Langlois. For those of you who don’t know him by any of his numerous IRC nicks, you might know him as the founder and former CTO of Qualys. An old-school guy. Definitely passionate about what he was doing. Phil was feeling equally unsatisfied with most conferences: too big, too commercial, too much posing, and very little actual content. At that time in France, the only security conference was organized by the army and the top French weapons sellers. To make it even worse, all the content was in French (resulting in zero international speakers, which is ridiculous given that we collaborate daily with hackers literally from around the globe, even when coding in our bedrooms, at our desks, or in a squat).

So Phil and I decided, together with Matt, to create our own conference.

Breaking the Rules and Setting Our Own

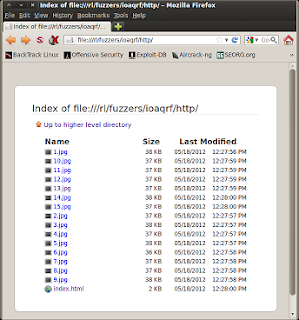

We agreed immediately that the biggest problem with modern conferences was that they had turned into businesses. You can’t prioritize quality if your budget dictates you have famous, big-name speakers. So we decided that Hackito would be a spin-off from the /tmp/lab, the first French hackerspace, which was a 100% non-profit organization housed in a stinky basement of an industrially zoned Paris suburb. At first we squatted, until we reached an agreement with the landlord, who let us stay and eventually pay for both our electricity (which is great for running a cluster of fuzzing machines) and water. It was humid, the air was polluted by a neighboring toxic chemical plant, and trains passed by every 10 minutes right outside the window. But it didn’t matter because this spot was one of the most important hacker headquarters in France.

One thing that played a major role in creating the spirit of Hackito was the profile of the people who joined this hackerspace: sure, there were software programmers, but also hardware hackers, biologists, artists, graphic designers, and general experimenters who wanted to change the world from a dank, humid garage. This was a major inspiration to us because (just like the internet) anyone was welcome to the space, without discrimination. Hackerspaces by nature are open and exchange a lot of information by having joint events such as the HackerSpace Festival or hosting members from other hackerspaces for extended periods of time. We followed this model by sharing with other conferences instead of competing, which led to the Security Vacation Club (it started as a joke, but today allows us to share good speakers, good spirit, and mutual friendship with other hacking conferences we respect).

We then called our IRC friends for help. Some could make it and others couldn’t, but all of them contributed in one way or another, even if it was only with moral support.

Early Days

Building your own conference out of thin air is more challenging than you might expect and, of course, we wanted to do it with minimal sponsorship. We agreed straight away with sponsors that they’d get nothing in exchange for their support (no advance disclosure, no right to vote on what talks would be presented, no paid talk or keynote). We asked friends to help us select solid technical talks and to come speak. You’d be surprised how the hackers you respect most (and who are seriously busy) are willing to help when they share the spirit of what you’re doing.

So, we ended up with the scariest Programming Committee on earth, for free. I don’t think there’s a company in existence with a security team half as talented. I can’t express here how much we value the time and effort that they, and our speakers, spend helping us. Why would they do this? Because a lot of people are unsatisfied with the current conference offerings. Now don’t get me wrong, commercial and local conferences do offer value, if only to gather disparate communities, foster exchange of ideas, and sometimes even introduce business opportunities. If you’re looking for your first job in the security industry, there’s no better choice than attending security conferences and meeting those who share the same taste for security.

Hackers Prize Quality—Not Open Bars, Big Names, or Bullshit

To give you some perspective, two of the talks nominated in last year’s Pwnie Awards at BlackHat were given first at Hackito: Tarjei Mandt with his 40 Windows kernel exploits (winner of the Pwnie Award for best local exploit), and Dan Rosenberg and Jon Oberheide with their attack against grsecurity. That’s what Hackito is all about: giving an opportunity to both known and unknown speakers, judging them based solely on their work, not their stardom or their capacity to attract journalists or money.

I think it’s important to make clear that most Hackito speakers have paid for their own plane tickets and accommodations to come and present their work in Paris. I can’t thank them enough for this; they are true hackers. It is common practice for so-called security rock stars to not only pay for nothing, but to ask for a four-digit check to present at any conference. In contrast, we believe our hacking research is priceless and that sharing it for free (or even at your own cost) with your peers is what makes you a hacker. That’s the spirit of Hackito.

Without any rock stars, Hackito can feature what we believe represents some of the most innovative security researchers worldwide. The content is 100% in English and must be hardcore technical: if you can’t code, you can’t talk, for the most part. If it’s not new or offensive, we don’t care. If you’re asking yourself why anyone would present years of hard research for free at Hackito instead of selling it to the highest bidder, the answer is simple: respect from your peers. That’s what hackers do: distribute software, share knowledge, collaborate. Period.

Hackito is More Than Just Talks

I’ve used the words quality and best a lot in this post; to be honest, I believe competition is a bad thing in general and for hacking in particular. Hacking is not about being better than others. It’s about being better than the machine and getting better yourself. It has everything to do with sharing and being patient, polite, passionate, respectful, innovative…that is, being an accomplished human being.

If you remember only one thing from this post, make it that message.

In the same vein, I don’t see Hackito as directly competing with other conferences. We actually speak at other conferences of similar quality and I strongly believe that any conference that promotes hacking is a good thing. We need diverse offerings to match all skills and expectations. Hackito focuses on the hardcore top end of security research, but that doesn’t mean newbies shouldn’t be allowed to progress in other cons.

The Hackito framework allows us to offer more than just talks, which are important, but as FX repeatedly told me in the PH-Neutral days: the conference is the people. Therefore, we try to keep the conference as open as possible. Anyone with a cool security-related project is welcome to submit it to us, making it part of Hackito and then labeling it Hackito. For example, Steven van Acker from the overthewire.org community has written a special war game for attendees every year.

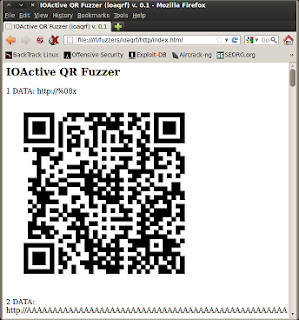

Our presenter line-up seriously rocks! This year, Matias Brutti from IOActive will offer a free workshop on Social Engineering and Walter Belgers from the highly respected Toool group will do the same with a Lockpicking workshop. Eloi just published a cryptographic challenge open to anyone on the internet with the valuable help of Steven van Acker (who is hosting the challenge on the overthewire.org community servers). Other featured additions include an FPGA reverse engineering challenge by the incredible hardware hacker LeKernel.

We Still Party Hard

Hackito unites hackers from across the globe—Korea, Brazil, Israel, Australia, Argentina, Germany, Sweden, U.S., Portugal, Switzerland, Russia, Egypt, Romania, Chile, Singapore, Vietnam, New Zealand—so of course we have to party a bit. I remember the first Hackito party in our /tmp/lab garage space; imagine the anti-Vegas party: no sponsors, live hardteck music, artists spanking each other in a crazy performance, raw concrete walls, bad cheap beer, virtually no females, zero arrogance, zero drama, zero violence—just 300 people going nuts together. That was one of the best parties of my entire life.

Greetings

Thanks heaps to (in no particular order): itzik, Kugg, redsand, Carlito, nono, Raoul, BSdeamon, Sergey, Mayhem, Cesar Cerrudo, Tarjei, rebel, #busticati, baboon, all those I can’t possibly exhaustively name here, plus the Hackito team of Matt and Phil.

I also must thank:

- All of our speakers.

- All of our sponsors (who help us and don’t ask much in exchange).

- The incredible team behind Hackito that spends countless hours in conference calls on their weekends to make things happen during an entire year so that others can present or party.

- Our respected Programming Committee of Death (you guys have our highest respect; thank you for allowing us to steal some of your time in return).

- Every single hacker who comes to Hackito, often from very far, to contribute and be part of the Hackito experience. FX was right: the conference is the people!!