Taking aim at the attack surface of these buzzy devices uncovers real-world risks

In the grand theater of innovation, drones sit near the top of a short list of real game changers, captivating multiple industries with their potential. From advanced military applications to futuristic automated delivery systems, from agricultural management to oil and gas exploration and beyond, drones appear to be here to stay. If so, it’s time we started thinking about the security of these complex pieces of airborne technology.

The Imperative Around Drone Security

Now, I know what you’re thinking. “Drone security? Really? Isn’t that a bit… extra?”

Picture this: A drone buzzing high above a bustling city, capturing breathtaking views, delivering packages, or perhaps something with more gravitas, like assisting in life-saving operations. Now, imagine that same drone spiraling out of control, crashing into buildings, menacing pedestrians — or worse, being used as a weapon.

Drone security isn’t just about keeping flybots from crashing into your living room. It’s about ensuring that these devices, which are increasingly a part of our everyday lives, are hardened against the actions of those with malicious intent. It’s about understanding that while drones can be used for good, they can also be used for nefarious purposes.

And BTW, hacking a drone remotely is just plain cool.

But let’s not get carried away. While the idea of hacking a drone might sound like something out of a spy movie, it’s a very real threat. And it’s a threat that we need to take seriously. Let’s dive a little deeper.

Drones: The Other Kind of Cloud Vulnerabilities

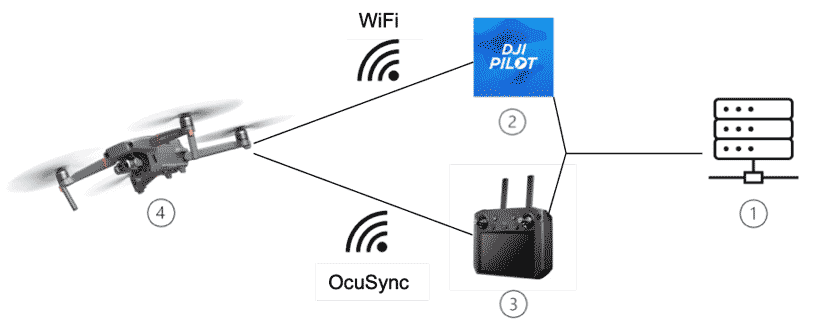

Before we delve into the nitty-gritty of drone hacking, let’s take a moment to understand the devices themselves. Drones are either controlled remotely or fly autonomously, following software-controlled flight plans embedded in their systems that work in conjunction with onboard sensors and the satellite-based Global Positioning System (GPS).

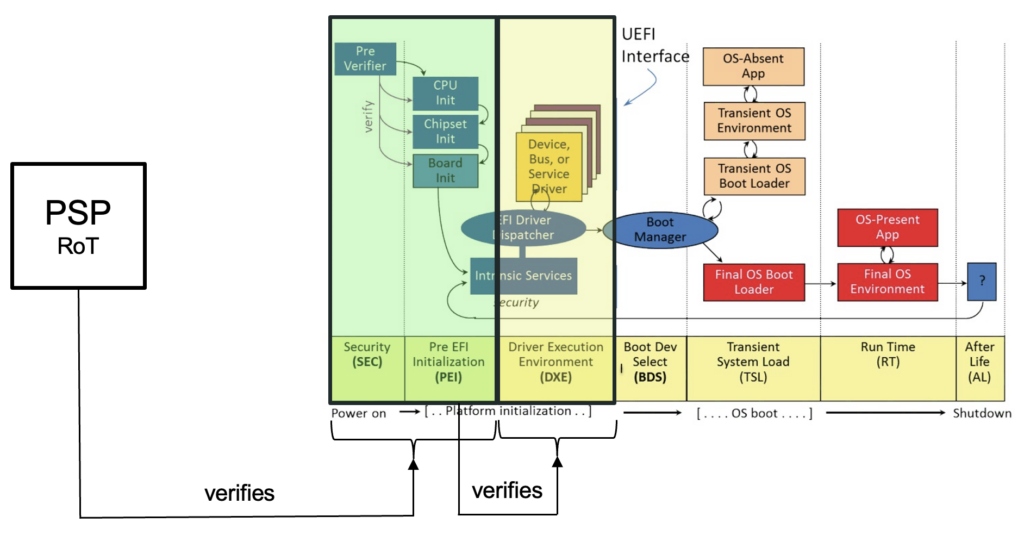

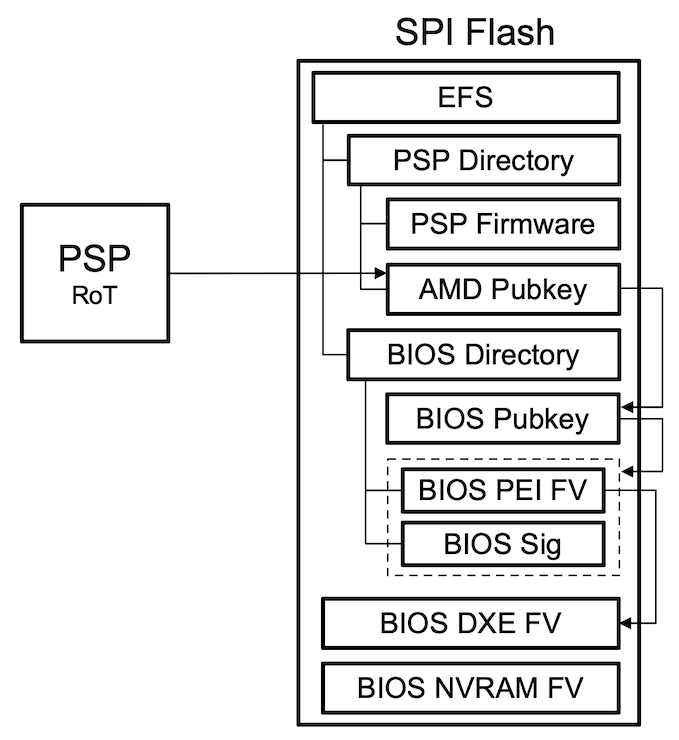

At the heart of a drone’s operation — and the bullseye for any security research — is its firmware and its associated microcontroller or CPU. This is the software/chip combination that controls the drone’s flight and functionality. It’s the drone’s brain. And just like any brain, we’re sorry to report, it has weaknesses.

Drones, like any other piece of technology, are not impervious to attack. They present several attack surfaces: the backend, mobile apps, RF communication, and the physical device itself.

Electromagnetic (EM) Signal: A Powerful Tool for Hacking

Now that we’ve covered the basics, let’s move on to the star of the show: the electromagnetic (EM) signal. EM signals are essentially waves of electric and magnetic energy moving through space. They’re all around us, invisible to the naked eye but underpinning much of our daily life.

Deliberately generated EM pulses can be used to interfere with the way a drone “thinks”, creating unexpected bad behavior in the core processor, disrupting its operations, or pushing the onboard controller to reveal information about itself and its configurations. It’s like having a magic wand that can bypass security systems, influence the behavior of critical systems, and even potentially take control of the drone. Sounds exciting, doesn’t it?

The potential of EM signals to bypass drone security systems is a real threat, and one that needs to be addressed quickly.

Case Study: Hacking a Drone with EM Fault Injection

Let’s walk through our real-world example of a drone being hacked using EM signals.

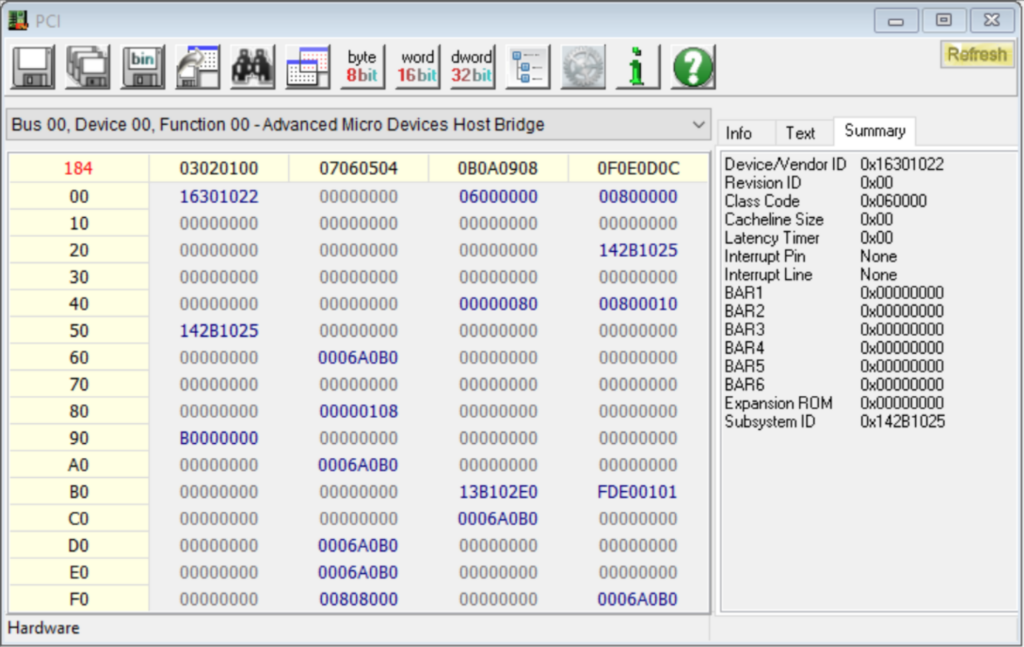

In this particular IOActive research, we used EM signals to interfere with the drone’s functionality by disrupting the routine processing of the device’s “neural activity” in its core microprocessor “brain” and branching out to its various onboard peripheral systems.

Most people are familiar with Electroencephalography (EEG) and Deep Brain Stimulation (DBS) — using electrodes and electrical impulses to both monitor and influence activity in the human brain. Our approach here is analogous to that, but with fewer good intentions and at a much greater distance.

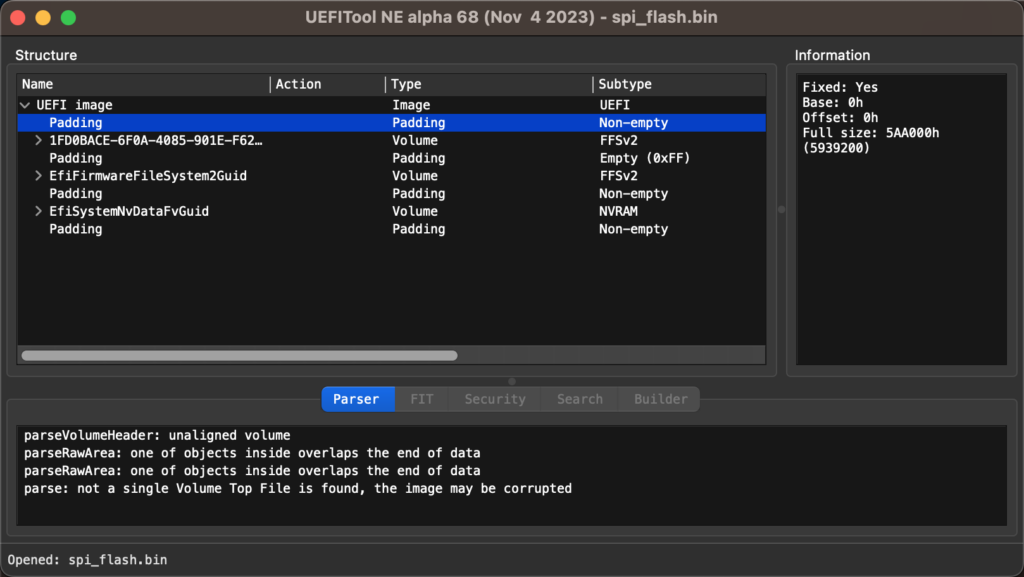

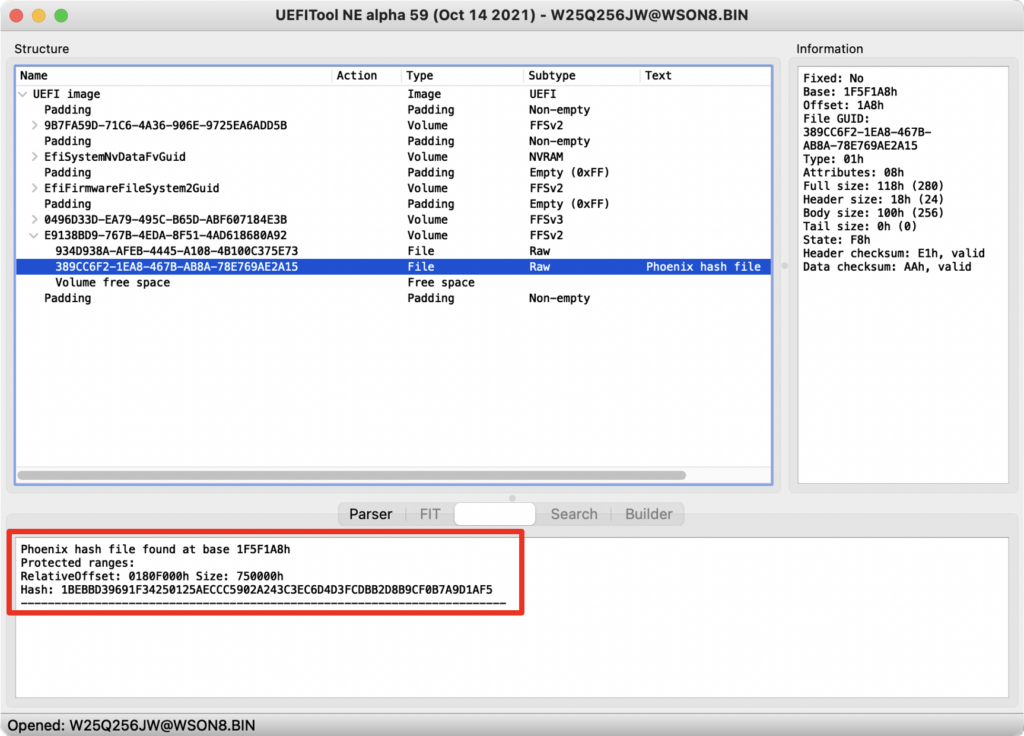

Our initial strategy was to retrieve the encryption key from the drone’s EM emanations and use it to decrypt the firmware. We began by locating an area on the drone’s PCB with a strong EM signal, where we could place a probe and record enough traces to extract the key.

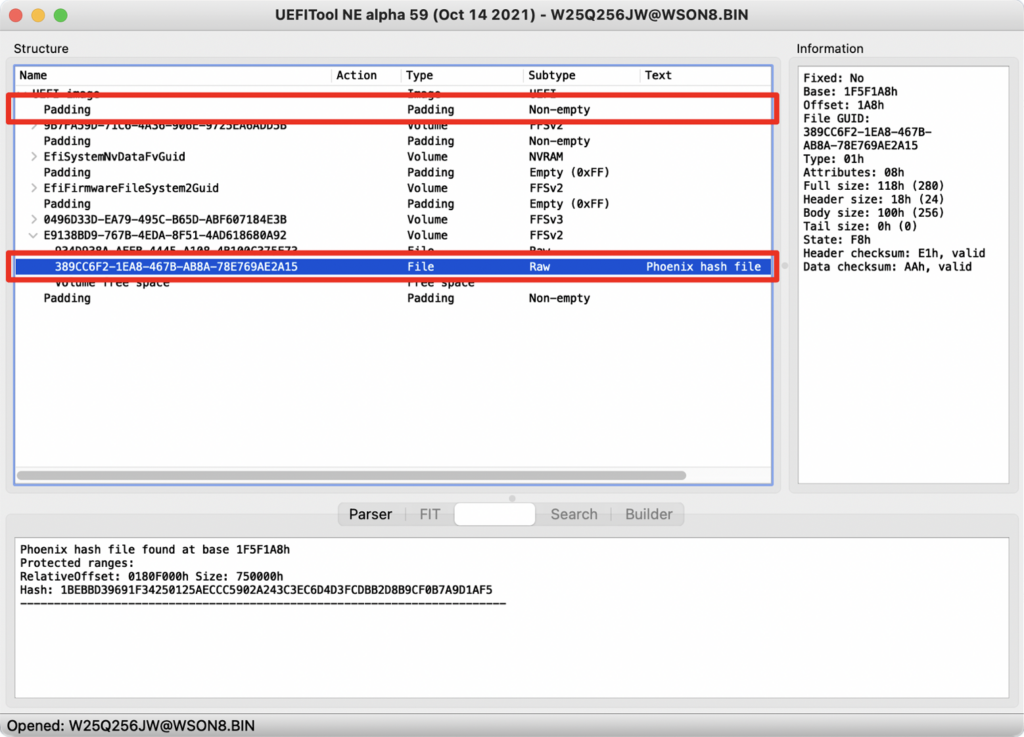
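The post does not say which analysis the recorded traces were meant to feed. One common choice for this kind of key recovery is correlation analysis, and the following toy sketch shows the idea on simulated data only. Everything in it is an assumption made for illustration: the leakage model (Hamming weight of input XOR key byte), the noise level, the trace count, and all function names. It is not the procedure used in the research.

```python
import random

def hamming_weight(value):
    """Number of set bits in an integer."""
    return bin(value).count("1")

def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5

def simulate_traces(secret_byte, count, noise, rng):
    """Simulated probe readings: one noisy sample per known input byte.

    Assumed leakage model: the signal is proportional to the Hamming
    weight of (input XOR secret_byte), plus Gaussian noise.
    """
    inputs = [rng.randrange(256) for _ in range(count)]
    samples = [hamming_weight(p ^ secret_byte) + rng.gauss(0, noise)
               for p in inputs]
    return inputs, samples

def recover_key_byte(inputs, samples):
    """Return the key guess whose predicted leakage best matches the samples."""
    def score(guess):
        predicted = [hamming_weight(p ^ guess) for p in inputs]
        return pearson(predicted, samples)
    return max(range(256), key=score)

rng = random.Random(1)  # fixed seed so the simulation is repeatable
inputs, samples = simulate_traces(secret_byte=0x3C, count=2000,
                                  noise=1.0, rng=rng)
recovered = recover_key_byte(inputs, samples)
print(hex(recovered))
```

The sketch also hints at why trace count matters: the noisier the measurement, the more traces are needed before the correct guess stands out from the wrong ones.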

After identifying the location with the strongest signal, we worked on understanding how to bypass the signature verification that takes place before the firmware is decrypted.

After several days of testing and data analysis, we found that the probability of a successful signature bypass was less than 0.5%. This made key recovery infeasible, since we would have needed to collect tens of thousands of traces.
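To put those numbers in perspective, here is a back-of-the-envelope sketch. It assumes that each usable trace requires one successful bypass, which is our reading of the paragraph above rather than something it states outright. The figure of 20,000 traces and the two seconds per attempt are hypothetical values chosen only to illustrate "tens of thousands" and a plausible glitch-and-reset cycle.

```python
from fractions import Fraction

def expected_attempts(traces_needed, success_probability):
    """Average number of bypass attempts needed to collect the traces.

    Assumes every usable trace needs one successful bypass and that
    attempts are independent, so the mean cost per trace is 1 / p.
    """
    return traces_needed / success_probability

# 0.5% is the upper bound quoted above; the real rate was lower.
p_success = Fraction(5, 1000)
traces_needed = 20_000          # hypothetical stand-in for "tens of thousands"
seconds_per_attempt = 2         # hypothetical glitch-and-reset cycle time

attempts = expected_attempts(traces_needed, p_success)
days = attempts * seconds_per_attempt / 86_400

print(f"attempts per trace: {1 / p_success}")   # 200
print(f"total attempts:     {int(attempts):,}") # 4,000,000
print(f"days of capture:    {float(days):.1f}")
```

Because 0.5% is an upper bound on the success rate, these figures are a floor on the real effort under the same assumptions.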

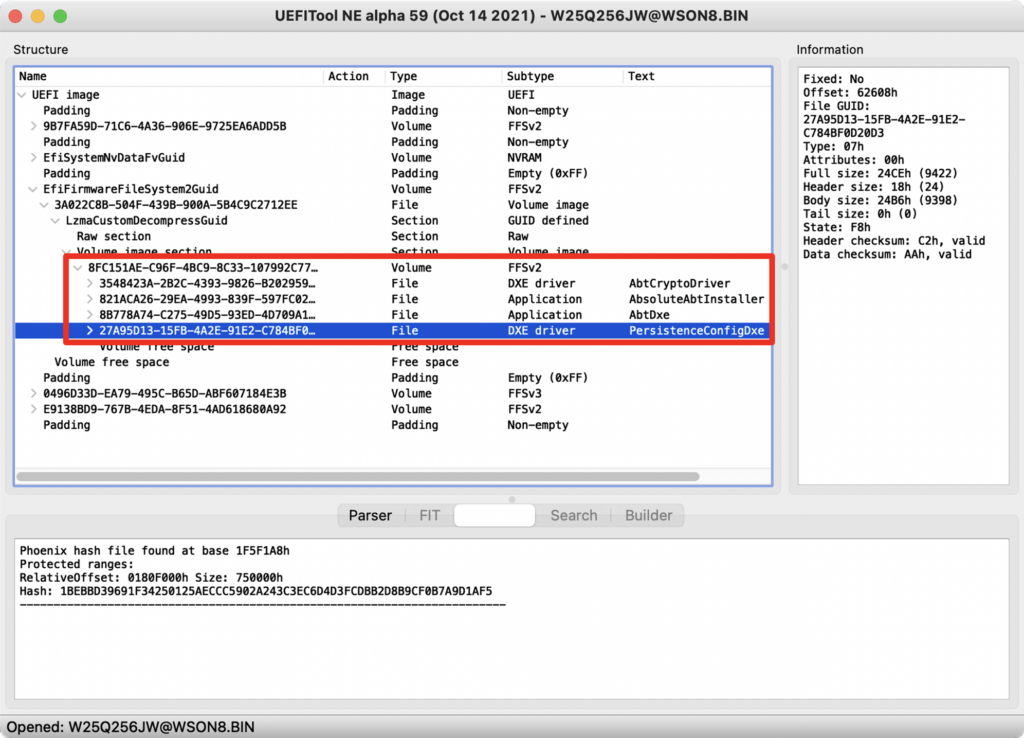

Our subsequent, and more fruitful, strategy involved using electromagnetic fault injection (EMFI), inspired by ideas published by Riscure. With EMFI, a controlled fault can be used to transform one instruction into another, thereby gaining control of, say, the program counter (PC) register. We can generate an EM field strong enough to induce changes within the “live bytes” of the chip. It’s very much like sending DBS current to the human brain and getting the muscles to behave in an involuntary, uncontrolled way.

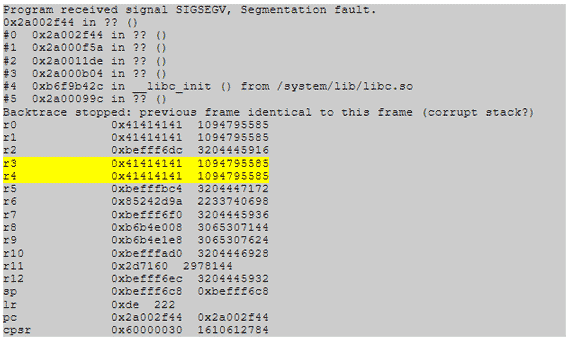
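To make the idea of turning one instruction into another concrete, here is a minimal sketch. It assumes a 32-bit ARM target and a load-multiple instruction, neither of which is stated in this post. In that encoding, bits 0 to 15 list the registers to load, and bit 15 stands for the PC, so a single flipped bit is enough to make an ordinary load also overwrite the program counter. The helper names are hypothetical.

```python
# Register names for bits 0..15 of an ARM32 LDM/STM register list.
REG_NAMES = [f"r{i}" for i in range(13)] + ["sp", "lr", "pc"]

def ldm_register_list(word):
    """Registers selected by bits 0-15 of an ARM32 LDM/STM encoding."""
    return [REG_NAMES[i] for i in range(16) if (word >> i) & 1]

def flip_bit(word, bit):
    """Model a single-bit fault in a 32-bit instruction word."""
    return word ^ (1 << bit)

original = 0xE8B000F0            # LDMIA r0!, {r4-r7}
faulted = flip_bit(original, 15) # fault sets the PC bit in the register list

print(hex(original), ldm_register_list(original))
# 0xe8b000f0 ['r4', 'r5', 'r6', 'r7']
print(hex(faulted), ldm_register_list(faulted))
# 0xe8b080f0 ['r4', 'r5', 'r6', 'r7', 'pc']
```

If the memory being loaded holds attacker-supplied data at that moment, the faulted instruction places part of that data in the PC. A real fault is far less tidy than a chosen bit flip, which is why finding the right glitch parameters takes experimentation.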

After identifying a small enough area on the PCB, we tweaked the glitch’s shape and timing until we observed a successful result. The targeted process crashed, as shown here:

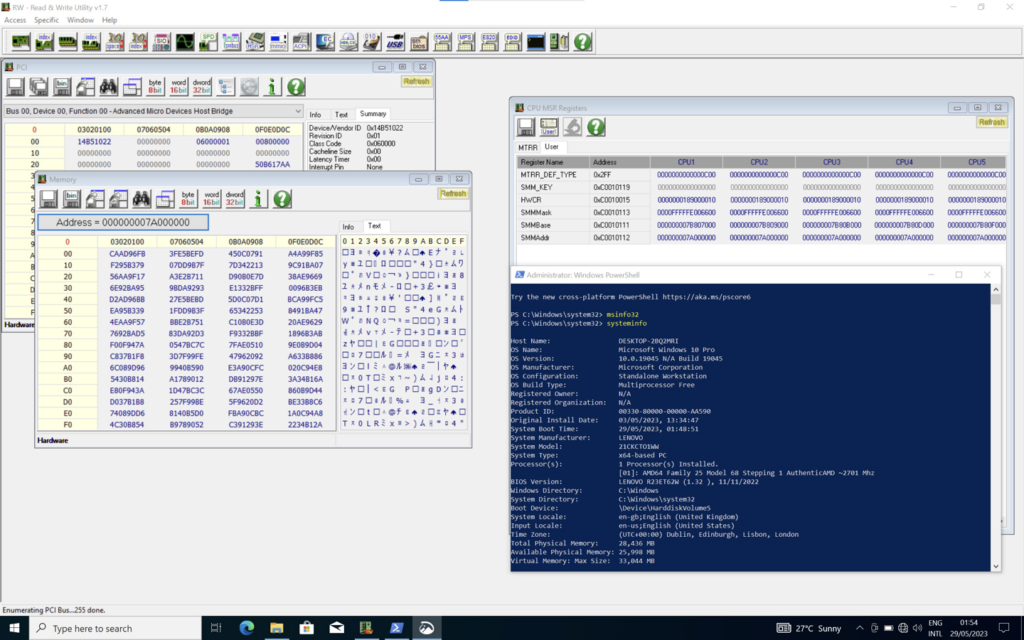

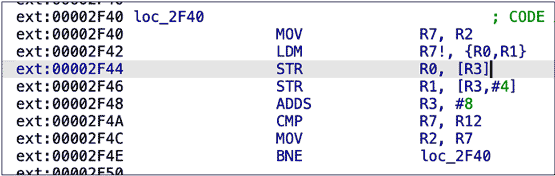
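The tuning process described above amounts to a search over probe position, glitch timing, and glitch shape. The sketch below shows the general pattern against a simulated target. `fire_glitch` is a hypothetical stand-in for a real EMFI rig, and the sweet spot, probabilities, and parameter ranges are all invented for illustration, with a single `power` value standing in crudely for glitch shape.

```python
import itertools
import random

def fire_glitch(x, y, delay_ns, power, rng):
    """Hypothetical simulated target; returns 'normal', 'reset' or 'crash'.

    Invented behavior: only one probe position and a narrow timing
    window ever produce the interesting crash, and only some of the time.
    """
    in_spot = (x, y) == (3, 2)
    in_window = 480 <= delay_ns <= 520
    if in_spot and in_window and power >= 70:
        return "crash" if rng.random() < 0.3 else "normal"
    if power >= 90:
        return "reset" if rng.random() < 0.2 else "normal"
    return "normal"

def sweep(rng, attempts_per_point=20):
    """Try every parameter combination and count crashes at each one."""
    hits = {}
    grid = itertools.product(range(5), range(5),      # probe x, y
                             range(400, 601, 20),     # delay after trigger
                             (50, 70, 90))            # pulse power
    for x, y, delay_ns, power in grid:
        crashes = sum(
            fire_glitch(x, y, delay_ns, power, rng) == "crash"
            for _ in range(attempts_per_point)
        )
        if crashes:
            hits[(x, y, delay_ns, power)] = crashes
    return hits

hits = sweep(random.Random(7))
for params, crashes in sorted(hits.items(), key=lambda kv: -kv[1]):
    print(params, crashes)
```

Repeating each point several times matters because a fault at the right settings is still probabilistic; a single miss does not rule a combination out.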

Our payload appeared in several registers. After examining the code at the target address, we determined that we had hit a winning combination of timing, position, and glitch shape. The capture shows the instruction where the segmentation fault took place.

Having successfully caused memory corruption, the next step would be to design a proper payload that achieves code execution. An attacker could use such an exploit to fully control one device, leak all of its sensitive content, enable access to the Android Debug Bridge, and potentially leak the device’s encryption keys.

Drone Security: Beyond the Horizon

So, where does this leave us? What’s the future of drone security?

The current state of drone defenses is a mixed bag. On one hand, we have advanced security systems designed to protect drones from attacks. On the other hand, we have researchers like us constantly devising new ways to bypass such systems.

The future of drone cyber-protections lies in ongoing research and development. We must stay one step ahead and identify weaknesses so manufacturers can address them. This post is just a summary of a much longer research paper on the topic; I encourage you to check out the full report.

Follow along with us at IOActive to keep up with the latest advancements in the field, understand the threats, and take action. The sky is not just the limit. It’s also a battlefield, and we need to be prepared.