World-Wide Electric Vehicle (EV) Charging Infrastructure Trends

The global push to build enough smart EV charging infrastructure to keep pace with rising EV adoption is an enormous challenge. Bloomberg estimates the global charging infrastructure market opportunity at $1.9T between 2022 and 2050. That opportunity will be seized by a host of organizations, large and small, public and private. From EV fleet depots and fast-charging stations along highways to parking garages, workplace chargers for employees, and home chargers, EV supply equipment (EVSE) is already becoming a common sight. The graph below depicts cumulative public charging connections worldwide:

The worldwide transition to EVs is being driven by climate goals and carbon pollution-free electricity sector policies that are being mandated around the world over the coming years, such as:

- In the USA, Executive Order 14057[1] directs federal agencies to make all new light-duty vehicle acquisitions zero-emission vehicles by 2027 and all new vehicle acquisitions, including medium- and heavy-duty vehicles, zero-emission by 2035.

- In California, Executive Order N-79-20[2] calls for ending sales of new internal combustion engine (ICE) passenger vehicles and trucks by 2035[3].

- The EU and UK have banned sales[4] of new combustion engine cars from 2035.

The Battery Electric Vehicle (BEV) and charging infrastructure landscape is evolving rapidly, both technologically and operationally, in a market where cost and time-to-market are prioritized over security[5]. The technologies used to build the BEV ecosystem suffer from well-known cybersecurity weaknesses that create vulnerabilities and risk. Today’s charging stations are often operated as build-and-forget devices: highly exposed, network-connected, and carrying both cyber and physical vulnerabilities. With limited threat mitigation currently in place, these weaknesses pose a serious challenge to the ecosystem, including the stability of the bulk electric and distribution systems.

Securing such an advanced, fully connected, and heterogeneous supply grid will take an effort comparable to the one the Information and Communication Technology (ICT) sector has made to secure web servers and cloud infrastructure, with the added need to mitigate the cyberphysical vulnerabilities unique to the BEV ecosystem.

High-power charging (HPC) standards for the Megawatt Charging System (MCS) are being developed by the international standards organization CharIN (Charging Interface Initiative e.V.)[6].

Modern electrified transportation vehicles will require an HPC infrastructure. Cybersecurity vulnerabilities in HPC systems operating at very high power levels pose a serious cyberphysical threat not only to the new electric vehicles and their supporting infrastructure, but also to the electrical grid (bulk and distribution) that supplies power to the HPC systems. These cyberphysical vulnerabilities will require focused, skillful mitigation.

The potential consequences of a successful, skillful attack on a BEV or EVSE system include remote code execution on BEVs or EVSEs, physically damaged vehicles or chargers, local or regional power outages, and larger coupled effects across countries from induced cascading failures.

IOActive’s Vehicle Cybersecurity Vulnerability Findings

In-vehicle technology is a top selling point for today’s car buyers[7]. What was once simply a “connected vehicle” is increasingly feature-rich, with software systems for self-driving and driver assistance, complex infotainment systems, vehicle-to-everything (V2X) communication, and integration with external AI. All of this technology makes modern vehicles, more than ever, targets for malicious cyberattacks such as ransomware. It is imperative that automotive manufacturers act now to build cybersecurity into their vehicles and mitigate potential threats. EVSE manufacturers and utilities, in turn, need to increase their efforts to manage their own highly impactful risks.

IOActive’s pioneering vehicle cybersecurity research began with the groundbreaking 2015 Jeep hack[8], which evolved into ongoing vehicle research covering commercial trucks, EVSE, and autonomous vehicles.

For over a decade, IOActive has been publishing original research blogs and papers:

- Remote Exploitation of an Unaltered Passenger Vehicle (2015): This IOActive research paper describes a remote attack against an unaltered 2014 Jeep Cherokee and similar vehicles. IOActive reprogrammed a gateway chip in the head unit so that it could send arbitrary CAN messages and control physical aspects of the car, such as steering and braking. This research forced FCA to recall 1.4 million vehicles and led to mandated changes to the Sprint carrier network. https://www.ioactive.com/pdfs/IOActive_Remote_Car_Hacking.pdf

- Uncovering Unencrypted Car Data in Luxury Car Connected App (2020): IOActive investigated whether a luxury car’s connected app encrypted its data. The app was found to contain unencrypted data that could be used to stalk someone or track their destinations, including data identifying the exact vehicle and its location. IOActive reported the findings through responsible disclosure channels, and the manufacturer implemented encryption, backed by key management, to protect the sensitive data. https://labs.ioactive.com/2020/09/uncovering-unencrypted-car-data-in-bmw.html

- Commonalities in Vehicle Vulnerabilities (2016, 2018, 2023): As automotive cybersecurity research has grown, IOActive has remained on the leading edge, amassing real-world vulnerability data that illustrates the general cybersecurity issues facing today’s vehicles and their potential solutions. The paper presents automotive vulnerability data collected from 2016 to 2023. https://www.ioactive.com/ioactive-commonalities-vehicle-vulnerabilities-22update/

- NFC Relay Attack on the Tesla Model Y (2022): IOActive reverse-engineered the Near Field Communication (NFC) protocol used between an EV automaker’s NFC card and its vehicle, then created custom firmware modifications that allowed a device to relay NFC communications over Bluetooth/WiFi using a BlueShark module. The attack could be performed via Bluetooth from several meters away, and via WiFi from much greater distances. https://www.ioactive.com/wp-content/uploads/2025/05/NFC-relay-TESLA_JRodriguez.pdf

EVSE Cybersecurity Incidents Are Increasing

The growing popularity of Electric Vehicles (EVs) attracts not only gas-conscious consumers but also cybercriminals interested in using EV charging stations to conduct large-scale cyberattacks for monetization, espionage, or political purposes, to steal private or sensitive data (e.g., drivers’ data), to falsify EV ranges, and more. EVSEs, whether in a private garage or a public parking lot, are connected IoT devices running software that interacts with payment systems, maintenance systems, OEM back-end systems, telecommunications networks, and the smart grid. As a result, charging stations pose significant cybersecurity risks.

Early cybersecurity incidents and findings involving charging stations include the following:

- In July 2021, 13 vulnerabilities were disclosed in EV charging controller firmware from a major EVSE vendor: three critical, eight high-severity, and two medium-severity. These flaws could enable attackers to exploit EVSEs remotely, escalate privileges, execute code remotely, and even take full control of the charging device. The three critical CVEs were each assigned a CVSS score of 9.4. Following the disclosure, the vendor recommended operating network-capable devices only within a closed, protected network behind a suitable firewall. https://portswigger.net/daily-swig/schneider-electric-fixes-critical-vulnerabilities-in-evlink-electric-vehicle-charging-stations

- In February 2022, Russian EVSEs were attacked in response to the war in Ukraine (A Ukrainian Company Hacked Russian EV Charging Stations to Protest the Invasion, motorbiscuit.com), and in April 2022 three more were compromised in the United Kingdom (Electric car charging points hacked to show PORN on screens, The Sun). Both incidents were considered “cyber pranks” and displayed offensive content on the units’ screens.

- In September 2023, a major oil company patched a database vulnerability that could have exposed millions of charging logs from across its EV charging network (CloudDefense.AI Discovers Critical Security Data Breach for Oil Giant Shell).

- In January 2023, a security researcher from Kilowatts exposed potential vulnerabilities and security issues in a major EVSE vendor’s chargers by using a program known as TeamViewer to gain full access to a charger’s internal computer, which was reportedly wide open. The researcher could navigate with a mouse, type on a keyboard, collect personal information through the touchscreen, and open various programs with ease, findings that significantly concerned the researcher, who is also an EV owner and social media influencer. https://www.teslarati.com/electrify-america-chargers-hacking-vulnerability-bug https://insideevs.com/news/642914/electrify-america-charging-station-bugs-easy-hacking/

- In July 2021, a cybersecurity research firm spent 18 months analyzing seven popular EV charger models and found that five had critical flaws. For instance, a software bug in one EVSE network could be exploited to obtain sensitive user information, and an EVSE sold in the UK by Project EV allowed researchers to overwrite its firmware. https://www.pentestpartners.com/security-blog/smart-car-chargers-plug-n-play-for-hackers/
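The 9.4 ratings in the first incident above are CVSS base scores. As a rough illustration of how such a number is derived, the sketch below implements the CVSS v3.1 base-score equations published by FIRST. It assumes the scores are CVSS v3.x; the example vector `AV:N/AC:L/PR:N/UI:N/S:U/C:H/I:H/A:L` is a hypothetical one chosen because it evaluates to 9.4, not necessarily the vector assigned to any of those CVEs, and the function names are illustrative.

```python
import math

# Base metric weights from the FIRST CVSS v3.1 specification.
ATTACK_VECTOR = {"N": 0.85, "A": 0.62, "L": 0.55, "P": 0.2}
ATTACK_COMPLEXITY = {"L": 0.77, "H": 0.44}
USER_INTERACTION = {"N": 0.85, "R": 0.62}
CIA_IMPACT = {"H": 0.56, "L": 0.22, "N": 0.0}
# Privileges Required is weighted differently when Scope is Changed.
PRIVILEGES_UNCHANGED = {"N": 0.85, "L": 0.62, "H": 0.27}
PRIVILEGES_CHANGED = {"N": 0.85, "L": 0.68, "H": 0.5}


def roundup(value: float) -> float:
    """Round up to one decimal place using the CVSS v3.1 Roundup function."""
    int_input = round(value * 100_000)
    if int_input % 10_000 == 0:
        return int_input / 100_000.0
    return (math.floor(int_input / 10_000) + 1) / 10.0


def base_score(vector: str) -> float:
    """Compute a CVSS v3.1 base score from a vector such as 'CVSS:3.1/AV:N/...'."""
    # Skip the 'CVSS:3.1' prefix and parse the 'KEY:VALUE' pairs.
    metrics = dict(part.split(":") for part in vector.split("/")[1:])
    scope_changed = metrics["S"] == "C"

    # Impact Sub-Score (ISS) combines confidentiality, integrity, availability.
    iss = 1 - (
        (1 - CIA_IMPACT[metrics["C"]])
        * (1 - CIA_IMPACT[metrics["I"]])
        * (1 - CIA_IMPACT[metrics["A"]])
    )
    if scope_changed:
        impact = 7.52 * (iss - 0.029) - 3.25 * (iss - 0.02) ** 15
    else:
        impact = 6.42 * iss

    privileges = (PRIVILEGES_CHANGED if scope_changed else PRIVILEGES_UNCHANGED)[metrics["PR"]]
    exploitability = (
        8.22
        * ATTACK_VECTOR[metrics["AV"]]
        * ATTACK_COMPLEXITY[metrics["AC"]]
        * privileges
        * USER_INTERACTION[metrics["UI"]]
    )

    if impact <= 0:
        return 0.0
    if scope_changed:
        return roundup(min(1.08 * (impact + exploitability), 10))
    return roundup(min(impact + exploitability, 10))


if __name__ == "__main__":
    # Hypothetical vector for illustration only; not taken from a specific CVE.
    print(base_score("CVSS:3.1/AV:N/AC:L/PR:N/UI:N/S:U/C:H/I:H/A:L"))  # 9.4
```

In this example, a network-reachable flaw needing no privileges or user interaction, with high confidentiality and integrity impact, already lands in the critical band (9.0 and above), which is why remotely exploitable EVSE firmware bugs tend to score so highly.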

EVSE cybersecurity incidents are on the increase. Links to information on several other cybersecurity hacks, as well as further reading regarding EVSE cybersecurity, are listed at the end of this blog post.

EVSE cybersecurity risk and threat scenarios include a wide variety of potential issues:

- EVSE malware attacks threatening the integrity of the electric grid/transportation network, potentially causing widespread power supply disruptions and grid load-balancing problems

- Ransomware attacks

- Leakage/manipulation of sensitive data (e.g., PII, credentials, and payment card data)

- Physical attacks to disable EVSEs, steal power, or infect EVSEs with malware via accessible USB ports

- Attacks on RFID, NFC, or credit card chip authentication that could deny EVSE charging sessions or enable false billing

- Denial-of-Service attacks on EVSEs or the grid, impacting drivers’ ability to recharge, for example during a hurricane or wildfire evacuation

- Firmware/software update attacks that disrupt access to the cloud services needed for payment processing

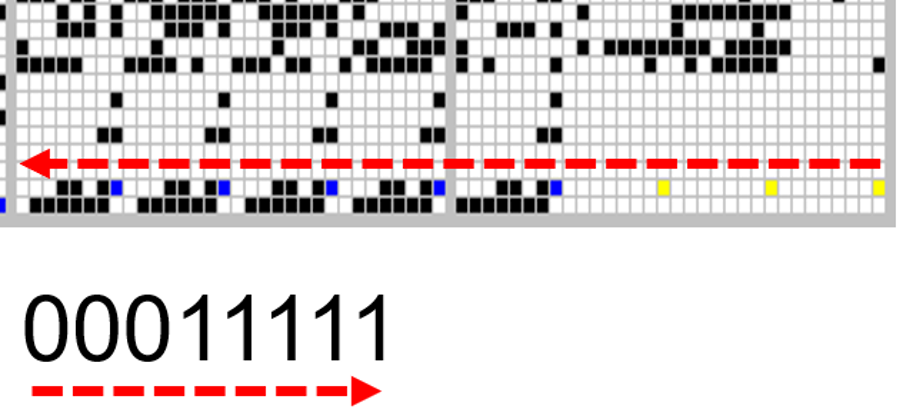

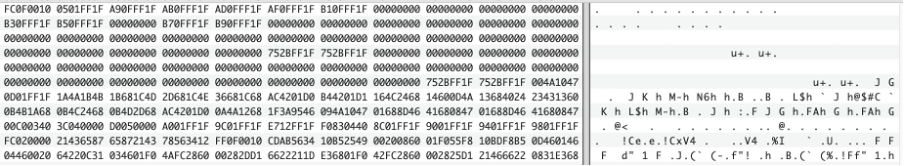

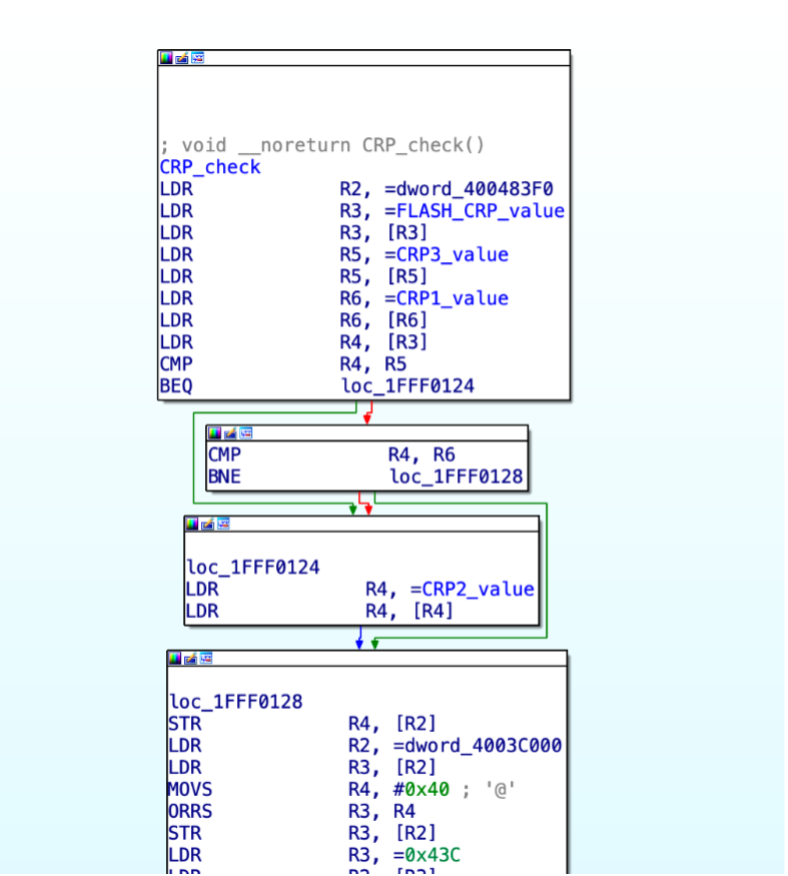

- Bypassing bootloader protections, which can allow attackers with physical access to gain root access into EVSEs to launch attacks on the backend infrastructure while appearing as a trusted device

- An EVSE attack through the charging cable could compromise an EV, causing fire or other damage

IOActive’s Electric Vehicle Charging Infrastructure Vulnerability Findings

Over the past five years, IOActive has conducted several EVSE cybersecurity penetration testing engagements for automotive and commercial truck OEMs/suppliers and EVSE vendors. Examples of IOActive’s electrification penetration testing include assessments of Level 2 EVSEs, DC Fast Chargers (DCFCs), Open Charge Point Protocol (OCPP)/cloud services, front-end/back-end web applications, and EV vans, as well as onsite network configuration reviews.

For the past year, IOActive has led an international EVSE standards working group that has developed a public EVSE Threat Model White Paper identifying EVSE risks, vulnerabilities, and design flaws. The paper also includes threat scenarios ranked by magnitude, duration, recovery effort, safety costs, effect, and confidence/reputation damage. This White Paper can be shared with industry members upon request.
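The white paper’s actual scoring method is not described in this post. Purely as an illustration of ranking scenarios across several dimensions, the sketch below uses a simple weighted sum over the six dimensions listed above. The 1-5 scale, the default equal weights, and the names `ThreatScenario` and `rank_scenarios` are hypothetical assumptions, not the working group’s methodology.

```python
from __future__ import annotations

from dataclasses import dataclass

# The six dimensions named in the white paper description. The 1-5 scale and
# the weighted-sum ranking below are illustrative assumptions.
DIMENSIONS = (
    "magnitude",
    "duration",
    "recovery_effort",
    "safety_costs",
    "effect",
    "reputation_damage",
)


@dataclass
class ThreatScenario:
    name: str
    scores: dict[str, int]  # dimension -> score on a hypothetical 1-5 scale

    def __post_init__(self) -> None:
        # Reject scenarios that are missing a dimension or use out-of-range scores.
        missing = set(DIMENSIONS) - set(self.scores)
        if missing:
            raise ValueError(f"{self.name}: missing scores for {sorted(missing)}")
        for dim, value in self.scores.items():
            if not 1 <= value <= 5:
                raise ValueError(f"{self.name}: {dim} score {value} is outside 1-5")

    def weighted_total(self, weights: dict[str, float]) -> float:
        """Sum of each dimension's score multiplied by that dimension's weight."""
        return sum(weights[dim] * self.scores[dim] for dim in DIMENSIONS)


def rank_scenarios(
    scenarios: list[ThreatScenario], weights: dict[str, float] | None = None
) -> list[ThreatScenario]:
    """Return scenarios ordered from highest to lowest weighted total."""
    if weights is None:
        weights = {dim: 1.0 for dim in DIMENSIONS}  # equal weighting by default
    return sorted(scenarios, key=lambda s: s.weighted_total(weights), reverse=True)
```

Changing the weights, for example emphasizing safety costs for high-power charging sites, can reorder the same scenarios; a real threat model would also need documented criteria for what each score on each dimension means.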

IOActive Welcomes Future EVSE Cybersecurity Discussions with Industry

We would like to continue supporting the key industries affected by the transition to electrified vehicles. Much of our most detailed work cannot be shared publicly. We welcome those with a need to know about the risks of, and mitigations for, BEVs and EVSEs to engage with us for a briefing on examples of extant vulnerabilities, technical threat models, threat actors, consequences of operationalized attacks, and other threat intelligence topics, as well as potential mitigations and best practices.

If you are interested in hosting IOActive for a briefing, or would like copies of the aforementioned presentations or white paper, please contact us.

EVSE Cybersecurity Incident References:

- EV Charging Stations Still Riddled With Cybersecurity Vulnerabilities (darkreading.com)

- MiTM phishing attack can let attackers unlock and steal a Tesla (bleepingcomputer.com)

- Is Your EV Charging Station Safe? New Security Vulnerabilities Uncovered (The Hacker News) https://thehackernews.com/2023/02/is-your-ev-charging-station-safe-new.html

- Examining Log4j Vulnerabilities in Connected Cars and Charging Stations (Trend Micro) https://www.trendmicro.com/en_us/research/21/l/examining-log4j-vulnerabilities-in-connected-cars.html

- The race against hackers to secure EV cyber-grid (Beta News) https://betanews.com/2022/04/30/hackers-secure-ev-cyber-grid/

- Hackers Exploiting the Growing Popularity of Electric Vehicles (Cyble) https://blog.cyble.com/2022/03/16/hackers-exploiting-the-growing-popularity-of-electric-vehicles/

- EV infrastructure vulnerabilities put cars, the grid at risk (Route Fifty) https://www.route-fifty.com/cybersecurity/2022/05/ev-infrastructure-vulnerabilities-put-cars-grid-risk/366694/

- Hackers Are Starting To Target EV Charging Stations (CarBuzz) https://carbuzz.com/news/hackers-are-starting-to-target-ev-charging-stations

- Vulnerability in the Combined Charging System for Electric Vehicles (Brokenwire) https://www.brokenwire.fail/

- Ladeinfrastruktur für Elektroautos: Ausbau statt Sicherheit [Charging Infrastructure for Electric Cars: Expansion Instead of Security] (EVSE hacking demonstration, English translation) (YouTube) https://www.youtube.com/watch?v=szYeqOIQ9Bw

- ChargePoint Home security research (Kaspersky) https://media.kasperskycontenthub.com/wp-content/uploads/sites/43/2018/12/13084354/ChargePoint-Home-security-research_final.pdf

- EV Charger Hacking Success! (Medium) https://ryanteck42.medium.com/ev-charger-hacking-success-428c449e0ecb

Suggested Reading:

- ChargePrint: A Framework for Internet-Scale Discovery and Security Analysis of EV Charging Management Systems: https://www.ndss-symposium.org/wp-content/uploads/2023/02/ndss2023_s84_paper.pdf

- Uncovering Covert Attacks on EV Charging Infrastructure: How OCPP Backend Vulnerabilities Could Compromise Your System (researchgate.net)

- Electric Vehicle Charging Infrastructure Conference: The Third-Annual Digital Conference Supporting and Accelerating Infrastructure Development (SAE Sustainability Event, March 2024)

- Cybersecurity Risk Analysis of Electric Vehicles Charging Stations: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC10422437/

- 12 Future Transportation Technologies to Watch: https://builtin.com/articles/future-transportation

- How Electric Trucks Are Becoming the Norm for Moving Cargo (cnbc.com)

- Advanced Clean Fleets Regulation – Drayage Truck Requirements | California Air Resources Board

- Practical Considerations for Implementation and Scaling ISO 15118 into a Secure EV Charging Ecosystem: https://www.chargepoint.com/files/15118whitepaper.pdf

- Public Plug-In Electric Vehicles + Grid Data: Is a New Cyberattack Vector Viable? https://arxiv.org/pdf/1907.08283.pdf

- Securing Vehicle Charging Infrastructure Against Cybersecurity Threats (osti.gov)

- Review of Electric Vehicle Charger Cybersecurity Vulnerabilities, Potential Impacts, and Defenses (Energies, mdpi.com)

- Cybersecurity for Electric Vehicle Charging Infrastructure: 1877784 (osti.gov)

- Megawatt Charging Systems (MCS): Consequence-driven Cybersecurity for High-Power Electric Vehicle Charging Infrastructure: https://www.sae.org/publications/technical-papers/content/2023-01-0047/

- Power Jacking Your Station: In-Depth Security Analysis Of Electric Vehicle Charging Station Management Systems: https://www.sciencedirect.com/science/article/abs/pii/S0167404821003357

- DOE/DHS/DOT Volpe Technical Meeting on Electric Vehicle and Charging Station Cybersecurity Report: https://rosap.ntl.bts.gov/view/dot/34991

- Government Fleet and Public Sector Electric Vehicle Supply Equipment (EVSE) Cybersecurity Best Practices and Procurement Language Report: https://rosap.ntl.bts.gov/view/dot/43606

- Sandia National Lab Recommended Cybersecurity Practices for EV Charging Systems Infographic: https://www.researchgate.net/publication/344888849_Recommended_Cybersecurity_Practices_for_EV_Charging_Systems

- Cyber Security Research and Development Cyber Assessment Report of Level 2 AC Powered Electric Vehicle Supply Equipment: https://avt.inl.gov/sites/default/files/pdf/reports/Level2EVSECyberReport.pdf

- Circontrol CirCarLife EVSE CWE: https://www.cisa.gov/news-events/ics-advisories/icsa-18-305-03

[1]https://bidenwhitehouse.archives.gov/briefing-room/presidential-actions/2021/12/08/executive-order-on-catalyzing-clean-energy-industries-and-jobs-through-federal-sustainability/

[2]https://ww2.arb.ca.gov/resources/fact-sheets/governor-newsoms-zero-emission-2035-executive-order-n-79-20

[3]https://www.gov.ca.gov/wp-content/uploads/2020/09/9.23.20-EO-N-79-20-Climate.pdf

[4]https://www.europarl.europa.eu/topics/en/article/20221019STO44572/eu-ban-on-sale-of-new-petrol-and-diesel-cars-from-2035-explained

[5]https://www.iea.org/reports/global-ev-outlook-2023/trends-in-charging-infrastructure

[6]https://www.charin.global/

[7]https://finance.yahoo.com/news/connected-vehicle-technology-becoming-key-140000573.html

[8]https://www.wired.com/2015/07/hackers-remotely-kill-jeep-highway/