It has been a while since I published something about a really broken router. To be honest, it has been a while since I even looked at a router, but let me fix that with this blog post.

Year: 2018

HooToo Security Advisory

HT-TM05 is vulnerable to unauthenticated remote code execution in the /sysfirm.csp CGI endpoint, which allows an attacker to upload an arbitrary shell script that will be executed with root privileges on the device.

Robots Want Bitcoins too!

Ransomware attacks have boomed during the last few years, becoming a preferred way for cybercriminals to profit by encrypting victims’ information and demanding a ransom for its return. The primary ransomware target has always been information. A victim with no backup of that information panics and feels forced to pay for its return.

Security Theater and the Watch Effect in Third-party Assessments

Before the facts were in, nearly every journalist and salesperson in infosec was thinking about how to squeeze lemonade from the Equifax breach. Let’s be honest – it was and is a big breach. There are lessons to be learned, but people seemed to have the answers before the facts were available.

At IOActive we guard against making on-the-spot assumptions. We consider and analyze the actual threats, ever mindful of the “Watch Effect.” The Watch Effect can be simply explained: you wear a watch long enough, you can’t even feel it.

The industry-wide point here is: Everyone is asking everyone else for proof that they’re secure.

Well, sure, they do mean something. In the case of questionnaires, you are asking a company to perform a massive amount of tedious work, and, if they respond with those questions filled in, and they don’t make gross errors or say “no” where they should have said “yes”, that probably counts for something.

But the question is how much do we really know about a company’s security by looking at their responses to a security questionnaire?

The answer is, “not much.”

At IOActive we conduct full, top-down security reviews of companies that include business risk, crown-jewel defense, and every layer that these pieces touch. Because we know how attackers get in, we measure and test how effective the company is at detecting and responding to cyber events – and use this comprehensive approach to help companies understand how to improve their ability to prevent, detect, and ever so critically, RESPOND to intrusions. Part of that approach includes a series of interviews with everyone from the C-suite to the people watching logs. What we find is frightening.

We are often days or weeks into an assessment before we discover a thread to pull that uncovers a major risk, whether that thread comes from a technical assessment or a person-to-person interview or both.

That’s days—or weeks—of being onsite with full access to the company as an insider.

Here’s where the Watch Effect comes in. Many of the companies have no idea what we’re uncovering or how bad it is because of the Watch Effect. They’re giving us mostly standard answers about their day-to-day, the controls they have in place, etc. It’s not until we pull the thread and start probing technically – as an attacker – that they realize they’re wearing a broken watch.

Then they look down at a set of catastrophic vulnerabilities on their wrist and say, “Oh. That’s a problem.”

So, back to the questionnaire…

If it takes days or weeks for an elite security firm to uncover these vulnerabilities onsite with full cooperation during an INTERNAL assessment, how do you expect to uncover those issues with a form?

You can’t. And you should stop pretending you can. Questionnaires depend far too much upon the capability and knowledge of the person or team filling them out, and are often completed with partial knowledge. How would one know if a firewall rule were updated improperly to “any/any” in the last week if it is not tested and verified?

To be clear, the problem isn’t that third-party assessments only give 2/10 in security assessment value. The problem is that executives THINK they’re getting 6/10, or 9/10.

It’s that disconnect that’s causing the harm.

Eventually, companies will figure this out. In the meantime, the breaches won’t stop.

Until then, we as technical practitioners can do our best to help our clients and prospects understand how much value these cursory, external glances at a company provide: very little. So, let’s prioritize appropriately.

Cryptocurrency and the Interconnected Home

There are many tiny elements to cryptocurrency that are not getting the awareness time they deserve. To start, the very thing that attracts people to cryptocurrency is also the very thing that is seemingly overlooked as a challenge. Cryptocurrencies are not backed by governments or institutions. The transactions allow the trader or investor to operate with anonymity. We have seen a massive increase in the last year of cyber bad guys hiding behind these inconspicuous transactions – ransomware demanding payment in bitcoin; bitcoin ATMs being used by various dealers to effectively clean money.

Because there are few regulations governing crypto trading, we cannot see if cryptocurrency is being used to fund criminal or terrorist activity. There is an ancient funds transfer system called Hawala, designed to avoid banks and ledgers. Hawala is believed to be the method by which terrorists are able to move money, anonymously, across borders with no governmental controls. Sound like what’s happening with cryptocurrency? There’s an old saying in law enforcement – follow the money. Good luck with that one.

Many people don’t realize that cryptocurrencies depend on multiple miners. This allows the processing to be spread out and decentralized. Miners validate the integrity of the transactions and, as a result, receive a “block reward” for their efforts. But these rewards are cut in half every 210,000 blocks. The bitcoin block reward was 50 BTC when it first started in 2009; today it’s 12.5. There are about 1.5 million bitcoins left to mine before the reward halves again.
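The halving rule is easy to sketch in a few lines of Python. This is an illustration only: the function name `block_reward_btc` is made up for this post, and the sketch assumes rewards are tracked in satoshis (1 BTC = 100,000,000 satoshis) and halved with integer division.

```python
HALVING_INTERVAL = 210_000            # blocks between halvings (from the text)
SATOSHI_PER_BTC = 100_000_000         # smallest bitcoin unit
INITIAL_REWARD = 50 * SATOSHI_PER_BTC  # 50 BTC reward in 2009


def block_reward_btc(height: int) -> float:
    """Return the block reward in BTC for a block at the given height."""
    halvings = height // HALVING_INTERVAL
    # Each halving is an integer right shift of the reward in satoshis.
    return (INITIAL_REWARD >> halvings) / SATOSHI_PER_BTC


for height in (0, 210_000, 420_000, 630_000):
    print(height, block_reward_btc(height))
# prints rewards of 50.0, 25.0, 12.5 and 6.25 BTC respectively
```

The 12.5 BTC reward mentioned above corresponds to block heights 420,000 through 629,999 in this sketch.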

This limit on total bitcoins leads to an interesting issue – as the reward decreases, miners will switch their attention from bitcoin to other cryptocurrencies. This will reduce the number of miners, therefore making the network more centralized. This centralization creates greater opportunity for cyber bad guys to “hack” the network and wreak havoc, or for the remaining miners to monopolize the mining.

At some point, and we are already seeing the early stages of this, governments and banks will demand to implement more control. They will start to produce their own cryptocurrency. Would you trust these cryptos? What if your bank offered loans in Bitcoin, Ripple or Monero? Would you accept and use this type of loan?

Because it’s a limited resource, what happens when we reach the 21 million bitcoin limit? Unless we change the protocols, this event is estimated to happen by 2140. My first response – I don’t think bitcoins will be at the top of my concerns list in 2140.
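Both the 21 million limit and the 2140 estimate fall out of the halving schedule. The following is a rough sketch, not a consensus implementation: it assumes a 50 BTC starting reward, a halving every 210,000 blocks, integer halving in satoshis, and an idealized 10 minutes per block starting in 2009 (real block times vary). The name `supply_schedule` is hypothetical.

```python
HALVING_INTERVAL = 210_000             # blocks between halvings
SATOSHI_PER_BTC = 100_000_000
INITIAL_REWARD = 50 * SATOSHI_PER_BTC
MINUTES_PER_BLOCK = 10                 # assumed average block interval


def supply_schedule() -> tuple[int, int]:
    """Return (total supply in satoshis, number of reward eras)."""
    total, eras, reward = 0, 0, INITIAL_REWARD
    while reward > 0:
        total += reward * HALVING_INTERVAL
        eras += 1
        reward >>= 1                   # halve, dropping fractions of a satoshi
    return total, eras


total_satoshi, eras = supply_schedule()
years = eras * HALVING_INTERVAL * MINUTES_PER_BLOCK / (60 * 24 * 365.25)
final_year = int(2009 + years)

print(f"total supply: {total_satoshi / SATOSHI_PER_BTC:,.4f} BTC")
print(f"reward eras: {eras}, last reward around {final_year}")
```

Under these assumptions the total lands just under 21 million BTC, and the last non-zero reward is paid around 2140.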

The Interconnected Home

So what does crypto-mining malware or mineware have to do with your home? It’s easy enough to notice if your laptop is being overused – the device slows down, the battery runs down quickly. How can you tell if your fridge or toaster is compromised? With your smart home now interconnected, what happens if the cyber bad guys operate there? All a cyber bad guy needs is electricity, internet and CPU time. Soon your fridge will charge your toaster a bitcoin for bread and butter. How do we protect our unmonitored devices from this mineware? Who is responsible for ensuring the right level of security on your home devices to prevent this?

Smart home vulnerabilities present a real and present danger. We have already seen baby monitors, robots, and home security products, to name a few, all compromised. Most by IOActive researchers. There can be many risks that these compromises introduce to the home, not just around cryptocurrency. Think about how the interconnected home operates. Any device that’s SMART now has the three key ingredients to provide the cyber bad guy with everything he needs – internet access, power and processing.

Firstly, I can introduce my mineware via a compromised mobile phone and start to exploit the processing power of your home devices to mine bitcoin. How would you detect this? When could you detect this? At the end of the month when you get an electricity bill. Instead of 50 pounds a month, it’s now 150 pounds. But how do you diagnose the issue? You complain to the power company. They show you the usage. It’s correct. Your home IS consuming that power.

IOActive has proven these attack vectors over and over. We know this is possible and we know this is almost impossible to detect. Remember, a cyber bad guy makes several assessments when deciding on an attack – the risk of detection, the reward for the effort, and the penalty for capture. The risk of detection is low, like very low. The reward, well, you could be mining blocks for months without stopping; that’s tens of thousands of dollars. And the penalty… what’s the penalty for someone hacking your toaster… The impact is measurable to the homeowner. This is real, and who’s to say it isn’t happening already? Ask your fridge!!

What’s the Answer – Avoid Using Smart Home Devices Altogether?

In the meantime, consider the entry point for most cyber bad guys. Generally, this is your desktop, laptop or mobile device. Therefore, ensure you have suitable security products running on these devices, make sure they are patched to the correct levels, be conscious of the websites you are visiting. If you control the available entry points, you will go a long way to protecting your home.

Easy SSL Certificate Testing

During application source code reviews, we often find that developers forget to enable all the security checks done over SSL certificates before going to production. Certificate-based authentication is one of the foundations of SSL/TLS, and its purpose is to ensure that a client is communicating with a legitimate server. Thus, if the application isn’t strictly verifying all the relevant details of the certificate presented by a server, it is susceptible to eavesdropping and tampering from any attacker having a suitable position in the network.

The following Java code block nullifies all the certificate verification checks:

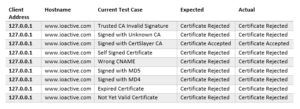
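The same anti-pattern exists in most TLS client libraries. As a rough illustration in Python's standard `ssl` module (a swapped-in language, not the Java code from the screenshot), the sketch below contrasts a client context that verifies the certificate chain and hostname with one that accepts anything. The function names are made up for this example.

```python
import ssl


def strict_client_context() -> ssl.SSLContext:
    """Client context that verifies the certificate chain and the hostname."""
    ctx = ssl.create_default_context()
    # These are already the defaults; they are spelled out for clarity.
    ctx.check_hostname = True
    ctx.verify_mode = ssl.CERT_REQUIRED
    return ctx


def insecure_client_context() -> ssl.SSLContext:
    """Anti-pattern: accepts any certificate. Never ship this to production."""
    ctx = ssl.create_default_context()
    ctx.check_hostname = False        # must be disabled before verify_mode
    ctx.verify_mode = ssl.CERT_NONE   # no chain, expiry, or CN checks at all
    return ctx


strict = strict_client_context()
insecure = insecure_client_context()
print(strict.verify_mode, strict.check_hostname)
print(insecure.verify_mode, insecure.check_hostname)
```

During a code review, any occurrence of the second pattern (or its equivalent in another language) is worth flagging before release.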

Currently, the following certificate test-cases are available (and will run in order):

- CertificateInvalidCASignature

- CertificateUnknownCA

- CertificateSignedWithCA

- CertificateSelfSigned

- CertificateWrongCN

- CertificateSignWithMD5

- CertificateSignWithMD4

- CertificateExpired

- CertificateNotYetValid
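One way to read results from a run of these test cases is to compare what the client did against what a strictly validating client should do. The sketch below is not part of Certslayer; `evaluate` and the `observed` dictionary are hypothetical, and it assumes that only the certificate signed with the trusted test CA should lead to a successful connection.

```python
# Test-case names taken from the list above, in run order.
TEST_CASES = [
    "CertificateInvalidCASignature",
    "CertificateUnknownCA",
    "CertificateSignedWithCA",
    "CertificateSelfSigned",
    "CertificateWrongCN",
    "CertificateSignWithMD5",
    "CertificateSignWithMD4",
    "CertificateExpired",
    "CertificateNotYetValid",
]

# Assumption: a strict client connects only when the chain is valid.
EXPECTED_TO_CONNECT = {"CertificateSignedWithCA"}


def evaluate(observed: dict[str, bool]) -> list[str]:
    """Return the test cases where the client deviated from strict validation.

    `observed` maps a test-case name to True if the client connected.
    A missing entry is reported as a deviation.
    """
    deviations = []
    for name in TEST_CASES:
        should_connect = name in EXPECTED_TO_CONNECT
        if observed.get(name) is not should_connect:
            deviations.append(name)
    return deviations


# Example: a client that ignores the common name and the validity period.
observed = {name: name in EXPECTED_TO_CONNECT for name in TEST_CASES}
observed["CertificateWrongCN"] = True
observed["CertificateExpired"] = True
print(evaluate(observed))
```

An empty list means the client behaved like a strict validator for every test case.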

Stand-alone Mode

Proxy mode is not useful when testing a web application or web service that allows fetching resources from a specified endpoint. In most instances, there won’t be a way to install a root CA at the application backend for doing these tests. However, there are applications that include this feature in their design, like, for instance, cloud applications that allow interacting with third party services.

In these scenarios, besides checking for SSRF vulnerabilities, we also need to check if the requester is actually verifying the presented certificate. We do this using Certslayer standalone mode. Standalone mode binds a web server configured with a test-certificate to all network interfaces and waits for connections.

After this, I instructed the application to perform the request to my server:

Here, the connection succeeded because the tool presented a valid certificate signed with Certslayer CA:

+ Setting up WebServer with Test: Wrong CNAME

A similar tool, tlspretense, already exists. The main difference is that, instead of using a proxy to intercept requests to targeted domains, it requires configuring the test runner as a gateway so that all traffic the client generates goes through it. Configuring a gateway host this way is tedious, which is the primary reason Certslayer was created.

SCADA and Mobile Security in the IoT Era

Today, no one is surprised at the appearance of an IIoT. The idea of putting your logging, monitoring, and even supervisory/control functions in the cloud does not sound as crazy as it did several years ago. If you look at mobile application offerings today, many more ICS-related applications are available than two years ago. Previously, we predicted that the “rapidly growing mobile development environment” would redeem the past sins of SCADA systems.

The purpose of our research is to understand how the landscape has evolved and assess the security posture of SCADA systems and mobile applications in this new IIoT era.

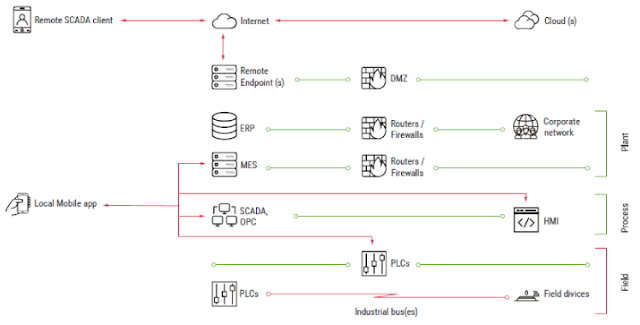

SCADA and Mobile Applications

ICS infrastructures are heterogeneous by nature. They include several layers, each of which is dedicated to specific tasks. Figure 1 illustrates a typical ICS structure.

Mobile applications reside in several ICS segments and can be grouped into two general families: Local (control room) and Remote.

Local Applications

Local applications are installed on devices that connect directly to ICS devices in the field or process layers (over Wi-Fi, Bluetooth, or serial).

Remote Applications

Remote applications allow engineers to connect to ICS servers using remote channels, like the Internet, VPN-over-Internet, and private cell networks. Typically, they only allow monitoring of the industrial process; however, several applications allow the user to control/supervise the process. Applications of this type include remote SCADA clients, MES clients, and remote alert applications.

In comparison to local applications belonging to the control room group, which usually operate in an isolated environment, remote applications are often installed on smartphones that use Internet connections or even on personal devices in organizations that have a BYOD policy. In other words, remote applications are more exposed and face different threats.

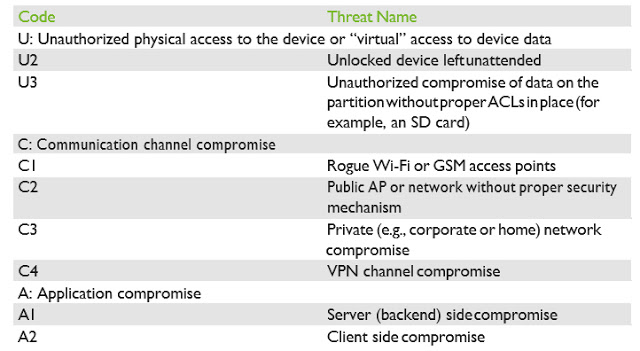

Typical Threats And Attacks

- Unauthorized physical access to the device or “virtual” access to device data

- Communication channel compromise (MiTM)

- Application compromise

Table 1 summarizes the threat types.

- Perform analysis and fill out the test checklist

- Perform client and backend fuzzing

- If needed, perform deep analysis with reverse engineering

- Application purpose, type, category, and basic information

- Permissions

- Password protection

- Application intents, exported providers, broadcast services, etc.

- Native code

- Code obfuscation

- Presence of web-based components

- Methods of authentication used to communicate with the backend

- Correctness of operations with sessions, cookies, and tokens

- SSL/TLS connection configuration

- XML parser configuration

- Backend APIs

- Sensitive data handling

- HMI project data handling

- Secure storage

- Other issues

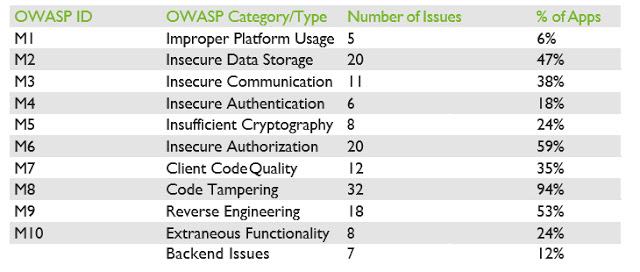

In our white paper, we provide an in-depth analysis of each category, along with examples of the most significant vulnerabilities we identified. Please download the white paper for a deeper analysis of each of the OWASP category findings.

Remediation And Best Practices

In addition to the well-known recommendations covering the OWASP Top 10 and OWASP Mobile Top 10 2016 risks, there are several actions that could be taken by developers of mobile SCADA clients to further protect their applications and systems.

In the following list, we gathered the most important items to consider when developing a mobile SCADA application:

- Always keep in mind that your application is a gateway to your ICS systems. This should influence all of your design decisions, including how you handle the inputs you will accept from the application and, more generally, anything that you will accept and send to your ICS system.

- Avoid all situations that could leave the SCADA operators in the dark or provide them with misleading information, from silent application crashes to full subverting of HMI projects.

- Follow best practices. Consider covering the OWASP Top 10, OWASP Mobile Top 10 2016, and the 24 Deadly Sins of Software Security.

- Do not forget to implement unit and functional tests for your application and the backend servers, to cover at a minimum the basic security features, such as authentication and authorization requirements.

- Enforce password/PIN validation to protect against threats U1-3. In addition, avoid storing any credentials on the device using unsafe mechanisms (such as in cleartext) and leverage robust and safe storing mechanisms already provided by the Android platform.

- Never store sensitive data on SD cards or similar partitions without ACLs. Such storage media cannot protect your sensitive data.

- Provide secrecy and integrity for all HMI project data. This can be achieved by using authenticated encryption and storing the encryption credentials in the secure Android storage, or by deriving the key securely, via a key derivation function (KDF), from the application password.

- Encrypt all communication using strong protocols, such as TLS 1.2 with elliptic-curve key exchange and signatures and AEAD encryption schemes. Follow best practices, and keep updating your application as best practices evolve. Attacks always get better, and so should your application.

- Catch and handle exceptions carefully. If an error cannot be recovered, ensure the application notifies the user and quits gracefully. When logging exceptions, ensure no sensitive information is leaked to log files.

- If you are using Web Components in the application, think about preventing client-side injections (e.g., encrypt all communications, validate user input, etc.).

- Limit the permissions your application requires to the strict minimum.

- Implement obfuscation and anti-tampering protections in your application.
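To make the key-derivation recommendation above more concrete, here is a minimal sketch using PBKDF2 from Python's standard library. It is an illustration under stated assumptions rather than a recommendation for a specific algorithm: the function name `derive_project_key`, the iteration count, and the salt size are all choices made for this example, and a real Android client would use the platform's own cryptographic APIs. The derived key would then feed an authenticated encryption scheme such as AES-GCM, which is not shown here.

```python
import hashlib
import os


def derive_project_key(password: str, salt: bytes,
                       iterations: int = 600_000) -> bytes:
    """Derive a 32-byte key from the application password with PBKDF2."""
    return hashlib.pbkdf2_hmac(
        "sha256",                  # underlying hash function
        password.encode("utf-8"),  # application password supplied by the user
        salt,                      # random, stored next to the ciphertext
        iterations,                # work factor; an assumed value, tune per device
        dklen=32,                  # 256-bit key for the AEAD cipher
    )


salt = os.urandom(16)              # fresh random salt per HMI project
key = derive_project_key("correct horse battery staple", salt)
print(len(key))                    # key length in bytes
```

The salt is not secret, but it should be unique per project so that the same password never yields the same key twice.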

Conclusions

Two years have passed since our previous research, and things have continued to evolve. Unfortunately, they have not evolved with robust security in mind, and the landscape is less secure than ever before. In 2015 we found a total of 50 issues in the 20 applications we analyzed and in 2017 we found a staggering 147 issues in the 34 applications we selected. This represents an average increase of 1.6 vulnerabilities per application.
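As a quick sanity check, the per-application averages can be computed directly from the counts quoted above. This uses only those four numbers; the white paper may count issues on a different basis, which could explain why the result differs from the 1.6 figure.

```python
issues_2015, apps_2015 = 50, 20     # counts quoted for the 2015 research
issues_2017, apps_2017 = 147, 34    # counts quoted for the 2017 research

avg_2015 = issues_2015 / apps_2015  # issues per application in 2015
avg_2017 = issues_2017 / apps_2017  # issues per application in 2017
increase = avg_2017 - avg_2015

print(f"2015: {avg_2015:.2f}  2017: {avg_2017:.2f}  increase: {increase:.2f}")
# prints "2015: 2.50  2017: 4.32  increase: 1.82"
```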

We therefore conclude that the growth of IoT in the era of “everything is connected” has not led to improved security for mobile SCADA applications. According to our results, more than 20% of the discovered issues allow attackers to directly misinform operators and/or directly/indirectly influence the industrial process.

In 2015, we wrote:

SCADA and ICS come to the mobile world recently, but bring old approaches and weaknesses. Hopefully, due to the rapidly developing nature of mobile software, all these problems will soon be gone.

We now concede that we were too optimistic and acknowledge that our previous statement was wrong.

Over the past few years, the number of incidents in SCADA systems has increased and the systems become more interesting for attackers every year. Furthermore, widespread implementation of the IoT/IIoT connects more and more mobile devices to ICS networks.

Thus, the industry should start to pay attention to the security posture of its SCADA mobile applications, before it is too late.

For the complete analysis, please download our white paper here.

Acknowledgments