As a penetration tester, I encounter interesting problems with network devices and software. The most common problems that I notice in my work are configuration issues. In today’s security environment, we can accept that a zero-day exploit results in system compromise because details of the vulnerability were unknown earlier. But, what about security issues and problems that have been around for a long time and can’t seem to be eradicated completely? I believe the existence of these types of issues shows that too many administrators and developers are not paying serious attention to the general principles of computer security. I am not saying everyone is at fault, but many people continue to make common security mistakes. There are many reasons for this, but the major ones are:

- Time constraints: This is hard to imagine, because an administrator’s primary job is to manage and secure the network. For example, how long does it take to configure and secure a network printer? If you ask me, it should not take much time to evaluate and configure secure device properties unless the network has significantly complex requirements. But even if there are complex requirements, it is worth investing the time to make it harder for bad guys to infiltrate the network.

- Ignorance, fear, and a ‘don’t care’ attitude: This is a human problem. I have seen many network devices that have not been touched or reconfigured for years, and administrators often forget about them. When I discussed this with administrators, they argued that secure reconfiguration might affect the reliability and performance of the device. As a result, they prefer to maintain the current functionality at the risk of an insecure configuration.

- Problems understanding the issue: If complex issues are hard to understand, then easy problems should be easy to manage, right? But it isn’t like that in the real world of computer security. For example, every piece of software and every network device is accompanied by manuals or help files. How many of us spend even a few minutes reading the security sections of these documents? The people who are cautious about security read these sections, but the rest just want to enjoy the functionality of the device or software. Is it really so difficult to read the security documentation that is provided? I don’t think so. For a reasonable security assessment of any new product or software, reading the manuals is one of the keys to good results. We cannot simply say we don’t understand the issue. If we ignore the most basic security guidance, attackers face no hurdles in exploiting the most basic, well-known security issues.

Here are a number of examples to support this discussion:

The majority of network devices can be accessed using the Simple Network Management Protocol (SNMP), where community strings typically act as passwords. Anyone who knows the community string can extract sensitive information from the target system. In fact, weak community strings are used in too many devices across the Internet. Tools such as snmpwalk and snmpenum make the process of SNMP enumeration easy for attackers. How difficult is it for administrators to change default SNMP community strings and configure more complex ones? Seriously, it isn’t that hard.
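To show how little effort a basic check takes, here is a minimal Python sketch that flags weak community strings before they are deployed. The function name, the minimum length, and the short list of defaults are my own illustrative assumptions, not a standard. Only `public` and `private` are listed because they are the widely known defaults; extend the set for your own environment.

```python
# Illustrative audit helper. The names and thresholds here are assumptions,
# not part of any SNMP standard or vendor tool.
WEAK_COMMUNITY_STRINGS = {"public", "private"}  # well-known defaults


def audit_community_string(community: str, min_length: int = 12) -> list[str]:
    """Return the reasons a community string looks weak (empty list if none)."""
    problems = []
    if community.lower() in WEAK_COMMUNITY_STRINGS:
        problems.append("matches a well-known default")
    if len(community) < min_length:
        problems.append(f"shorter than {min_length} characters")
    if community.isalpha() or community.isdigit():
        problems.append("uses a single character class")
    return problems


if __name__ == "__main__":
    for candidate in ("public", "k7#Vq9!mZp2$Lx"):
        print(candidate, "->", audit_community_string(candidate) or "no findings")
```

A check like this could run against an inventory export so that default strings are caught before a device goes live.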

A number of devices, such as routers, use the Network Time Protocol (NTP) over packet-switched data networks to synchronize time between systems. The basic problem with the default configuration of NTP services is that NTP is enabled on all active interfaces. If any interface exposed to the Internet listens on UDP port 123, a remote user can easily run NTP readvar queries with ntpq to gather information about the target server, including the NTP software version, operating system, peers, and so on.

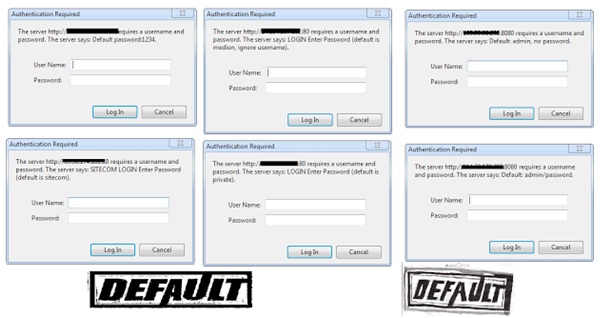

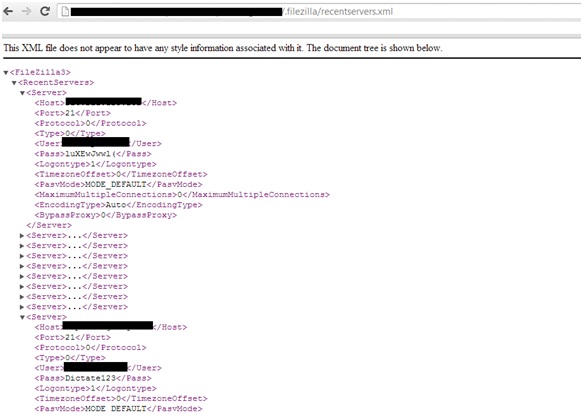
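On hosts that run the reference ntpd, one common way to stop these queries is the `noquery` flag on the default `restrict` line in `ntp.conf`. The following is a minimal sketch, assuming an ntpd-style configuration file, that reports default `restrict` lines that still permit ntpq queries. The function name is hypothetical, and devices with their own configuration syntax (many routers, for example) are not covered.

```python
def find_query_exposure(ntp_conf_text: str) -> list[str]:
    """Report 'restrict default' lines that lack 'noquery' (or 'ignore')."""
    findings = []
    saw_default = False
    for raw in ntp_conf_text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop trailing comments
        tokens = line.split()
        if len(tokens) < 2 or tokens[0] != "restrict":
            continue
        # Skip the optional address-family selectors -4 / -6.
        args = [t for t in tokens[1:] if t not in ("-4", "-6")]
        if not args or args[0] != "default":
            continue
        saw_default = True
        flags = set(args[1:])
        if not flags & {"noquery", "ignore"}:
            findings.append(f"no 'noquery' on: {line}")
    if not saw_default:
        findings.append("no 'restrict default' line found")
    return findings


if __name__ == "__main__":
    sample = (
        "restrict default kod nomodify notrap nopeer noquery\n"
        "restrict -6 default kod nomodify\n"
        "restrict 127.0.0.1\n"
    )
    for finding in find_query_exposure(sample):
        print(finding)
```

The sketch only inspects the default rule; more specific `restrict` entries can still open or close access for individual addresses.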

Unauthorized access to components and software configuration files is another big problem. This is not only a device and software configuration problem, but also a byproduct of design chaos, that is, the way different network devices are designed and managed in their default state. Too many devices on the Internet allow guests to obtain potentially sensitive information through anonymous or direct access. In addition, one has to wonder how well administrators understand and care about security if they simply allow configuration files to be downloaded from target servers. For example, I recently conducted a small test to check for the presence of the file Filezilla.xml, which sets the properties of FileZilla FTP servers, and found that a number of target servers allow this configuration file to be downloaded without any authentication or authorization controls. This would allow attackers to gain direct access to these FileZilla FTP servers because the passwords are embedded in these configuration files.

It is really hard to imagine that anyone would deploy sensitive files containing usernames and passwords on remote servers that are exposed on the network. What results do you expect when you find these types of configuration issues?
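Administrators can look for this problem from their side before an attacker does. Here is a minimal sketch, assuming the served directory is available on the local file system, that walks a document root and lists files whose names match known configuration files. The function name and the one-entry name list are illustrative assumptions; add the file names used by the software you actually deploy.

```python
import os

# Illustrative list only: Filezilla.xml is the file discussed in this post.
SENSITIVE_NAMES = {"filezilla.xml"}


def find_exposed_configs(served_root: str) -> list[str]:
    """Return paths under served_root whose file name is on the sensitive list."""
    hits = []
    for dirpath, _dirnames, filenames in os.walk(served_root):
        for name in filenames:
            if name.lower() in SENSITIVE_NAMES:  # case-insensitive match
                hits.append(os.path.join(dirpath, name))
    return sorted(hits)


if __name__ == "__main__":
    # "." is a placeholder; point this at the directory your server publishes.
    for path in find_exposed_configs("."):
        print("exposed configuration file:", path)
```

Any hit means a file with embedded credentials sits somewhere a remote client may be able to fetch it.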


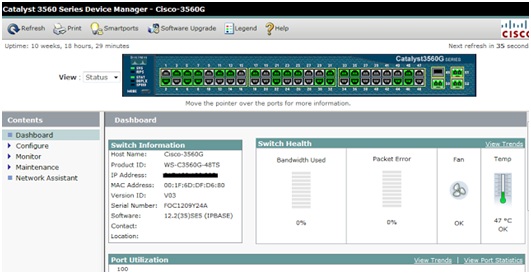

Cisco Catalyst – Insecure Interface

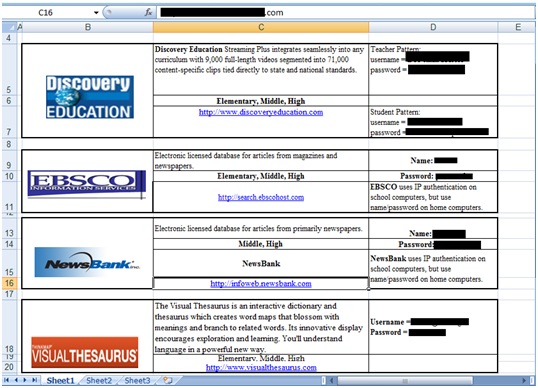

How can we forget about backend databases? Default and weak passwords for the MS SQL system administrator account can still be found in the internal networks of many organizations. For example, network proxies that require backend databases are configured with default and weak passwords. In many cases, administrators think internal network devices are more secure than external devices. But what happens if the attacker finds a way to penetrate the internal network?

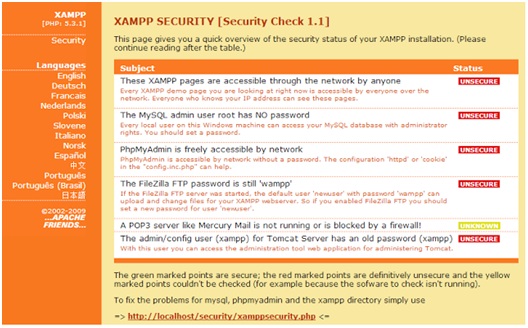

The simple answer is: game over. Integrated web applications with backend databases using default configurations are an “easy walk in the park” for attackers. For example, the following insecure XAMPP installation would be devastating.


XAMPP Security – Web Page

The security community recently encountered an interesting configuration issue on GitHub (http://www.securityweek.com/github-search-makes-easy-discovery-encryption-keys-passwords-source-code), which resulted in the disclosure of SSH keys on the Internet. The point is that when you own an account or repository on a public service, all administrative responsibility falls on your shoulders. You cannot blame the service provider, GitHub in this case. If users upload their private SSH keys to a public repository, whom can you blame? This is a big problem, and developers continue to expose sensitive information in their public repositories, even though GitHub’s documentation shows how to secure and manage sensitive data.
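Catching a private key before it is pushed does not take much. Here is a minimal sketch that checks file contents for the PEM-style header line that private keys start with (for example `-----BEGIN RSA PRIVATE KEY-----` or `-----BEGIN OPENSSH PRIVATE KEY-----`). The function names are hypothetical, and the check only covers keys stored in this text format; it is not a complete secret scanner.

```python
import re

# Matches header lines such as "-----BEGIN PRIVATE KEY-----" and variants
# with a leading type word (RSA, EC, DSA, OPENSSH, ENCRYPTED).
PRIVATE_KEY_HEADER = re.compile(r"-----BEGIN (?:[A-Z0-9]+ )*PRIVATE KEY-----")


def contains_private_key(text: str) -> bool:
    """Return True if the text contains a private key header line."""
    return PRIVATE_KEY_HEADER.search(text) is not None


def flag_files(files: dict[str, str]) -> list[str]:
    """Given {file name: file content}, return the names that hold a private key."""
    return sorted(name for name, body in files.items() if contains_private_key(body))


if __name__ == "__main__":
    staged = {
        "README.md": "Deployment notes",
        "deploy/id_rsa": "-----BEGIN RSA PRIVATE KEY-----\n(key material)\n",
    }
    print(flag_files(staged))
```

A check like this could be wired into a pre-commit hook so that a commit is stopped when a key file is staged.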

Another interesting finding shows that with Google Dorks (targeted search queries), the Google search engine exposed thousands of HP printers on the Internet (http://port3000.co.uk/google-has-indexed-thousands-of-publicly-acce). The search string “inurl:hp/device/this.LCDispatcher?nav=hp.Print” is all you need to gain access to these HP printers. As I asked at the beginning of this post, “How long does it take to secure a printer?” Although this issue is not new, the amazing part is that it still persists.

The existence of vulnerabilities and patches is a completely different issue from the security problems that result from default configurations and poor administration. Either we know the security posture of the devices and software on our networks but are careless in deploying them securely, or we do not understand security at all. Insecure and default configurations make the hacking process easier. Many people realize this only after they have been hacked, and by then the damage has already been done to both reputations and businesses.

This post is just a glimpse into security consciousness, or the lack of it. We have to be more security conscious today because attempts to hack into our networks are almost inevitable. I think we need to be a bit more paranoid and much more vigilant about security.
