Year: 2013

IOActive supports National Cyber Security Awareness Month

Seeing red – recap of SecurityZone, DerbyCon, and red teaming goodness

Vulnerability bureaucracy: Unchanged after 12 years

One of my tasks at IOActive Labs is to deal with vulnerabilities: report them, try to get them fixed, publish advisories, etc. This isn’t new to me. I started reporting vulnerabilities about 12 years ago, and over that time I have reported hundreds of them – many found by me, others found by other people.

- Vendor not responding

- Vendor responding aggressively

- Vendor responding but choosing not to fix the vulnerability

- Vendor releasing flawed patches or not patching some vulnerabilities at all

- Vendor failing to meet deadlines they themselves agreed to

- Independent researchers and security consulting companies report vulnerabilities on a voluntary basis.

- Independent researchers and security consulting companies have no obligation to report security vulnerabilities.

- Independent researchers and security consulting companies often invest a lot of time, effort and resources finding vulnerabilities and trying to get them fixed.

- Vendors and CERTs should be more appreciative of the people reporting vulnerabilities and, at the very least, be more responsive than they typically are today.

- Vendors and CERTs should adhere to and comply with their own defined deadlines.

Emulating binaries to discover vulnerabilities in industrial devices

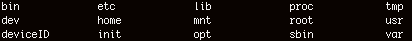

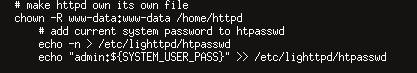

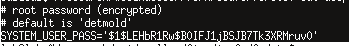

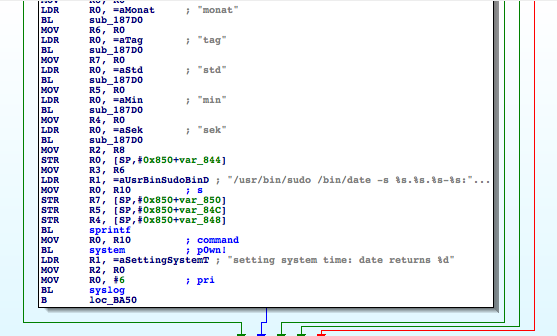

We see that ‘/etc/init.d/S60httpd’ starts the lighttpd web server and can be used to configure its authentication.

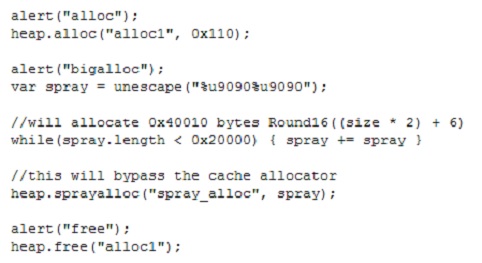
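As a rough illustration of this kind of triage, here is a minimal Python sketch that lists the init scripts in an extracted firmware image that mention a given keyword such as `lighttpd`. It assumes the root filesystem has already been unpacked to a local directory; the function name `find_init_scripts` and the directory layout are hypothetical and are not taken from the original research.

```python
from pathlib import Path
from typing import List


def find_init_scripts(rootfs: str, keyword: str) -> List[str]:
    """Return names of scripts under <rootfs>/etc/init.d that contain keyword.

    Assumption: rootfs points at an already-extracted firmware filesystem.
    """
    init_dir = Path(rootfs) / "etc" / "init.d"
    matches: List[str] = []
    if not init_dir.is_dir():
        return matches
    for script in sorted(init_dir.iterdir()):
        if not script.is_file():
            continue
        # Init scripts are normally text; ignore undecodable bytes just in case.
        text = script.read_text(errors="ignore")
        if keyword in text:
            matches.append(script.name)
    return matches
```

Calling `find_init_scripts("extracted_rootfs", "lighttpd")` on a hypothetical extraction directory would return the names of the scripts worth reading first.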

IE heaps at Nordic Security Conference

FDA Medical Device Guidance

Last week the US Food and Drug Administration (FDA) finally released a couple of important documents. The first is their guidance on using radio frequency wireless technology in medical devices (replacing a draft from January 3, 2007), and the second is their new (draft) guidance on premarket submission for management of cybersecurity in medical devices.

The wireless technology guidance document seeks to address many of the risks and vulnerabilities that have been disclosed in medical devices (embedded or otherwise) in recent years – in particular those with embedded RF wireless functionality…

The recommendations in this guidance are intended for RF wireless medical devices including those that are implanted, worn on the body or other external wireless medical devices intended for use in hospitals, homes, clinics, clinical laboratories, and blood establishments. Both wireless induction-based devices and radiated RF technology device systems are within the scope of this guidance.

The FDA wishes medical device manufacturers to consider the design, testing and use of wireless medical devices…

In the design, testing, and use of wireless medical devices, the correct, timely, and secure transmission of medical data and information is important for the safe and effective use of both wired and wireless medical devices and device systems. This is especially important for medical devices that perform critical functions such as those that are life-supporting or life-sustaining. For wirelessly enabled medical devices, risk management should include considerations for robust RF wireless design, testing, deployment, and maintenance throughout the life cycle of the product.

For most of you reading the IOActive Labs blog, the most important parts of the guidance document are the advice on security and securing “wireless signals and data”. Section 3.d covers this…

Security of RF wireless technology is a means to prevent unauthorized access to patient data or hospital networks and to ensure that information and data received by a device are intended for that device. Authentication and wireless encryption play vital roles in an effective wireless security scheme. While most wireless technologies have encryption schemes available, wireless encryption might need to be enabled and assessed for adequacy for the medical device’s intended use. In addition, the security measures should be well coordinated among the medical device components, accessories, and system, and as needed, with a host wireless network. Security management should also consider that certain wireless technologies incorporate sensing of like technologies and attempt to make automatic connections to quickly assemble and use a network (e.g., a discovery mode such as that available in Bluetooth™ communications). For certain types of wireless medical devices, this kind of discovery mode could pose safety and effectiveness concerns, for example, where automatic connections might allow unintended remote control of the medical device.

FDA recommends that wireless medical devices utilize wireless protection (e.g., wireless encryption, data access controls, secrecy of the “keys” used to secure messages) at a level appropriate for the risks presented by the medical device, its environment of use, the type and probability of the risks to which it is exposed, and the probable risks to patients from a security breach. FDA recommends that the following factors be considered during your device design and development:

* Protection against unauthorized wireless access to device data and control. This should include protocols that maintain the security of the communications while avoiding known shortcomings of existing older protocols (such as Wired Equivalent Privacy (WEP)).

* Software protections for control of the wireless data transmission and protection against unauthorized access.

Use of the latest up-to-date wireless encryption is encouraged. Any potential issues should be addressed either through appropriate justification of the risks based on your device’s intended use or through appropriate design verification and validation.

Based upon the parts I’ve highlighted above, you’ll probably be feeling a little foreboding. From a “guidance” perspective, it’s less useful than a teenager with a CISSP qualification. The instructions are so general as to be useless.

If I were the geek charged with waving the security baton at some medical device manufacturer, I wouldn’t be happy at all. Effectively the FDA is saying “there are a number of security risks with wireless technologies, here are some things you could think about doing, hope that helps.” Even if you followed all this advice, the FDA could turn around later during your submission for certification and say you did it wrong…

The second document the FDA released last week (Content of Premarket Submissions for Management of Cybersecurity in Medical Devices – Draft Guidance for Industry and Food and Drug Administration Staff) is a little more helpful – at the very least they’re talking about “cybersecurity” and there’s a little more meat for your CISSP folks to chew upon (in fact parts of it read like they’ve been copy-pasted right out of a CISSP training manual).

This guidance has been developed by the FDA to assist industry by identifying issues related to cybersecurity that manufacturers should consider in preparing premarket submissions for medical devices. The need for effective cybersecurity to assure medical device functionality has become more important with the increasing use of wireless, Internet- and network-connected devices, and the frequent electronic exchange of medical device-related health information.

Again, it doesn’t go into any real detail about what device manufacturers should or shouldn’t be doing, but it does set the scene for understanding the scope of part of the threat.

If I were an executive at one of the medical device manufacturers confronted with these FDA guidance documents for the first time, I wouldn’t feel particularly comforted by them – in fact I’d be more worried about the increased exposure I would have in the future. If a future product of mine were to get hacked, regardless of how closely I thought I was following the FDA guidance, I’d be pretty sure that the FDA could turn around and say that I wasn’t really in compliance.

With that in mind, let me slip on my IOActive CTO hat and clearly state that I’d recommend any medical device manufacturer that doesn’t want to get bitten in the future for failing to follow this FDA “guidance” reach out to a qualified security consulting company to get advice on (and to assess) the security of current and future product lines prior to release.

Engaging with a bunch of third-party experts isn’t just a CYA proposition for your company. Bringing to bear an external (impartial) security authority would obviously add extra weight to the approval process, proving the company’s technical diligence and showing that it works “above and beyond” the security checkbox of the FDA guidelines. Just as importantly though, securing wireless technologies against today’s and tomorrow’s threats isn’t something that can be done by an internal team (or a flock of CISSPs) – you really do need to call in the experts with a hacker’s eye for security… Ideally a company with a pedigree in cutting-edge security research, and I know just who to call…

Car Hacking: The Content

Car Hacking Made Affordable

This research focuses on reducing the barrier to entry for automotive security assessments. The goal is to increase the number of security researchers working in this area by providing step-by-step information on how to evaluate, test, and assess Electronic Control Units (ECUs) without requiring a vehicle.

To accomplish the work described in this paper, you only need inexpensive electronics and an ECU. Most, if not all, of the equipment and vehicle parts can be acquired from third-party sources, such as eBay or Amazon.

Adventures in Automotive Networks and Control Units

Previous research has shown that an attacker can remotely execute code on the electronic control units (ECUs) in automotive vehicles via interfaces such as Bluetooth and the telematics unit: https://www.autosec.org/pubs/cars-usenixsec2011.pdf.

This paper expands on the topic and describes how an attacker can influence a vehicle’s behavior. It includes examples of mission critical controls, such as steering, braking, and acceleration, being manipulated using Controller Area Network (CAN) messages.
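For readers who have not worked with CAN before, the following is a minimal Python sketch of what a classic CAN message looks like when packed in the Linux SocketCAN frame layout (a 32-bit identifier, a one-byte length, three padding bytes, and up to eight data bytes). It is not taken from the paper: the helper names are hypothetical, the identifier and payload in the usage note are made-up values rather than real vehicle messages, and nothing is sent on a bus.

```python
import struct

# Linux SocketCAN classic frame: 32-bit ID, 8-bit length, 3 pad bytes, 8 data bytes.
CAN_FRAME_FORMAT = "=IB3x8s"
CAN_FRAME_SIZE = struct.calcsize(CAN_FRAME_FORMAT)  # 16 bytes


def build_can_frame(can_id: int, data: bytes) -> bytes:
    """Pack an 11-bit-ID classic CAN frame (illustrative helper, not from the paper)."""
    if not 0 <= can_id <= 0x7FF:
        raise ValueError("standard CAN identifiers are 11 bits (0x000-0x7FF)")
    if len(data) > 8:
        raise ValueError("classic CAN frames carry at most 8 data bytes")
    # The data field is always 8 bytes on the wire format; pad with zeros.
    return struct.pack(CAN_FRAME_FORMAT, can_id, len(data), data.ljust(8, b"\x00"))


def parse_can_frame(frame: bytes):
    """Unpack a frame into (identifier, payload), trimming the zero padding."""
    can_id, length, data = struct.unpack(CAN_FRAME_FORMAT, frame)
    return can_id, data[:length]
```

For example, `build_can_frame(0x123, b"\x01\x02")` yields a 16-byte frame that `parse_can_frame` turns back into the same identifier and payload. Which identifiers and payloads actually affect a given vehicle is exactly what the research has to determine per ECU.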